Human motion state discrimination method based on densely connected convolutional neural network

A densely connected convolutional neural network is applied to human motion state discrimination. It addresses problems such as data that is unfavorable for machine learning, and it reduces the number of supporting measurement devices, the number of features, and the overall complexity.


Embodiment Construction

[0023] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

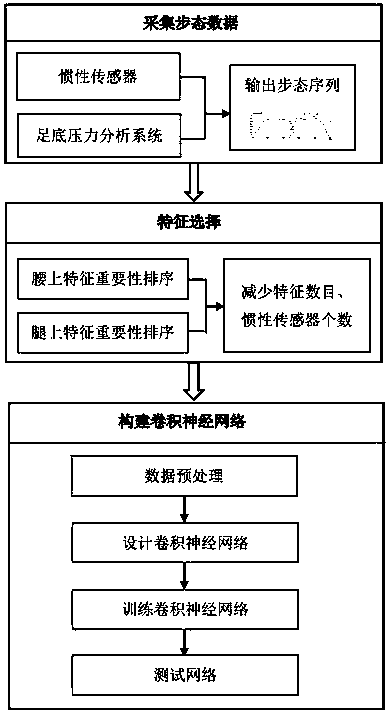

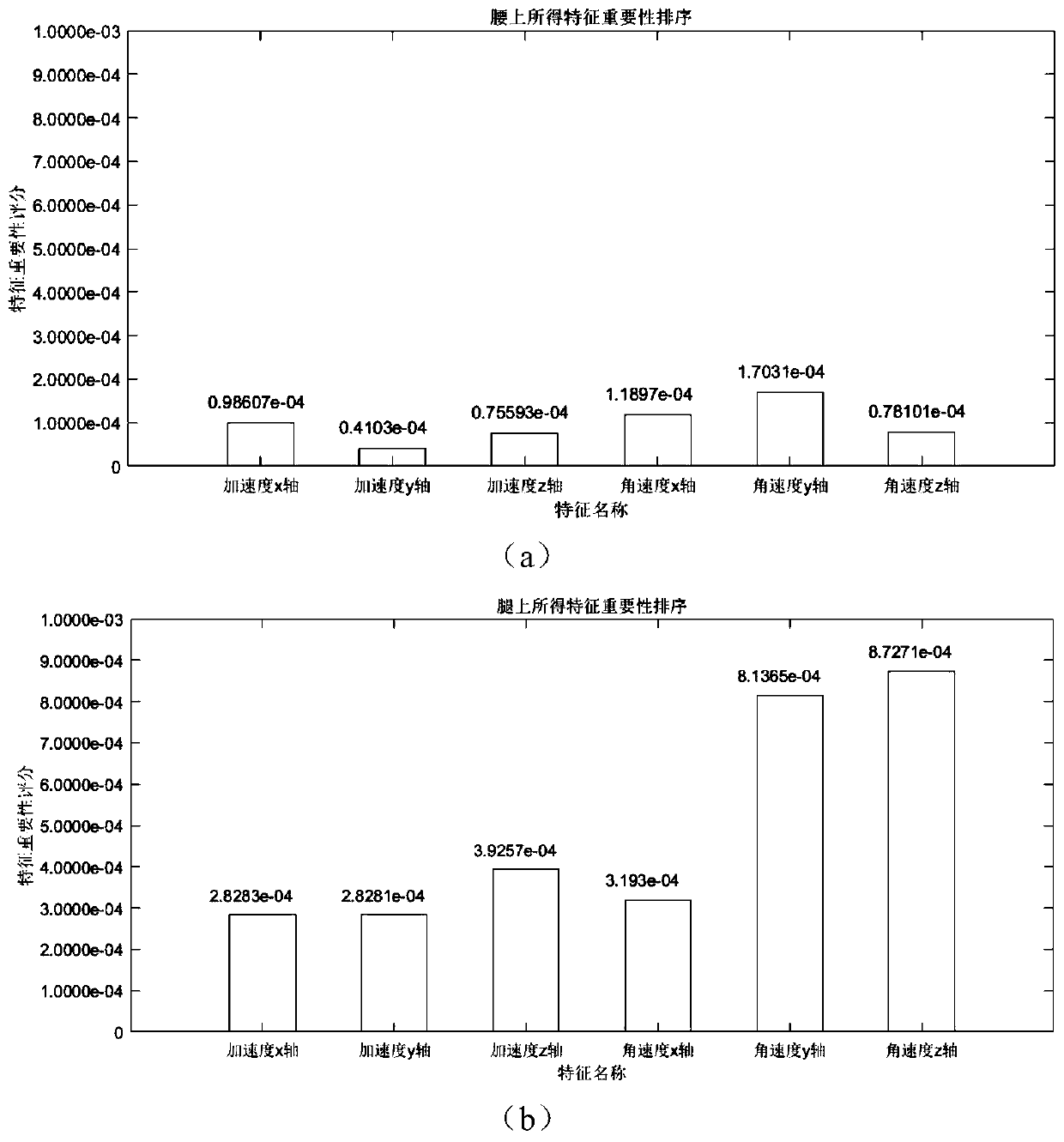

[0024] According to an embodiment of the present invention, a motion intention recognition method based on the dynamic time series of a moving subject is proposed. The acceleration, angular velocity, and plantar pressure information of the subject's left and right legs and waist is collected simultaneously and combined to divide the movement phases and distinguish the movement state. A motion state discrimination method is then proposed, realized by constructing a densely connected convolutional neural network.
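To illustrate what "densely connected" means for a window of multi-sensor time series, the following is a minimal sketch in plain Python of DenseNet-style connectivity: each convolutional layer receives the channel-wise concatenation of the input and the outputs of all preceding layers. The excerpt does not state the network's actual layer count, kernel size, growth rate, or number of sensor channels, so every name and value here (`dense_block`, `build_dense_block`, 8 channels, 16 time steps, growth of 4) is a hypothetical chosen for illustration and is not the patented architecture.

```python
import random


def conv1d_same(x, weights, bias):
    """Zero-padded 1D convolution followed by ReLU.

    x:       list of C_in channels, each a list of T floats
    weights: nested list shaped [C_out][C_in][K], with K odd
    bias:    list of C_out floats
    Returns a list of C_out channels, each of length T.
    """
    t = len(x[0])
    k = len(weights[0][0])
    pad = k // 2
    out = []
    for w_out, b in zip(weights, bias):
        row = []
        for i in range(t):
            s = b
            for c, channel in enumerate(x):
                for j in range(k):
                    idx = i + j - pad
                    if 0 <= idx < t:  # positions outside the window count as zero
                        s += w_out[c][j] * channel[idx]
            row.append(max(0.0, s))  # ReLU
        out.append(row)
    return out


def build_dense_block(c_in, growth, num_layers, k=3, seed=0):
    """Create randomly initialised layers (illustrative weights only).

    Layer i expects c_in + i * growth input channels, because it sees
    the block input plus the outputs of all earlier layers.
    """
    rng = random.Random(seed)
    layers = []
    for i in range(num_layers):
        channels = c_in + i * growth
        weights = [[[rng.uniform(-0.1, 0.1) for _ in range(k)]
                    for _ in range(channels)]
                   for _ in range(growth)]
        layers.append((weights, [0.0] * growth))
    return layers


def dense_block(x, layers):
    """Apply the layers with dense connectivity."""
    features = list(x)
    for weights, bias in layers:
        new_maps = conv1d_same(features, weights, bias)
        features = features + new_maps  # channel-wise concatenation
    return features


# Hypothetical window: 8 sensor channels, 16 time steps of synthetic values.
window = [[float((c + t) % 5) for t in range(16)] for c in range(8)]
layers = build_dense_block(c_in=8, growth=4, num_layers=3)
out = dense_block(window, layers)
print(len(out), len(out[0]))  # 8 + 3 * 4 = 20 channels, 16 time steps
```

Because the block input is carried through unchanged in the first channels of the output, later layers can reuse the raw sensor signals directly alongside learned features. A real implementation would typically use a deep learning framework, trained weights, and a classification head on top of one or more such blocks.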

[0025] The flow of the motion state discrimination method provided by the present invention, which is based on a convolutional neural network operating on gait information, is described below. Its steps include:

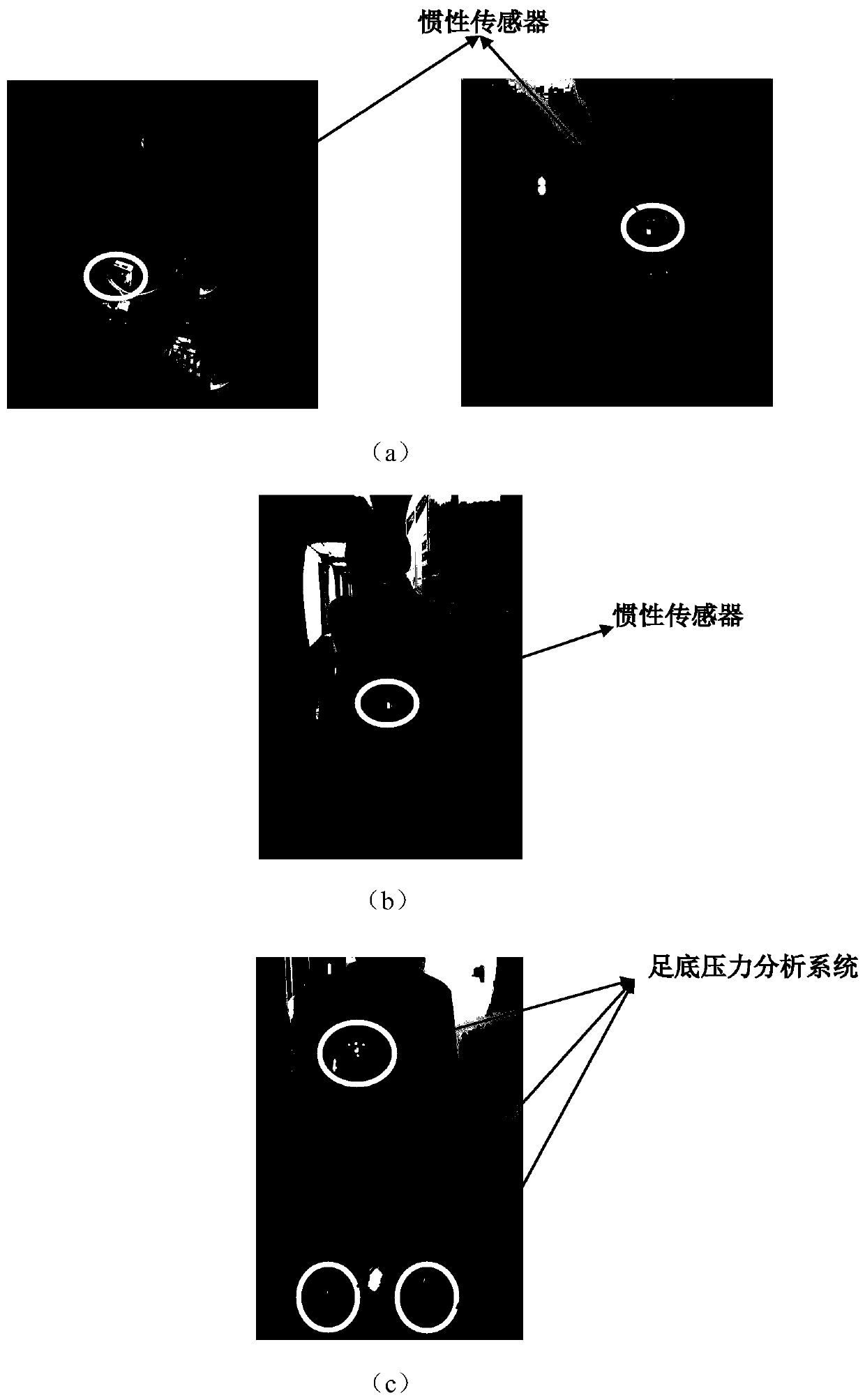

[0026] 1. Gait data collection: The method of combining the plant...
