Reinforcement learning-based AUV behavior planning and motion control method

A reinforcement learning and motion control technology, applied in three-dimensional position/channel control, biological neural network models, neural architectures, etc. It addresses the problems of over-reliance on human experience, limited training experience, and a low level of intelligence.

Examples

Specific Embodiment 1

[0075] This embodiment is an AUV behavior planning and action control method based on reinforcement learning.

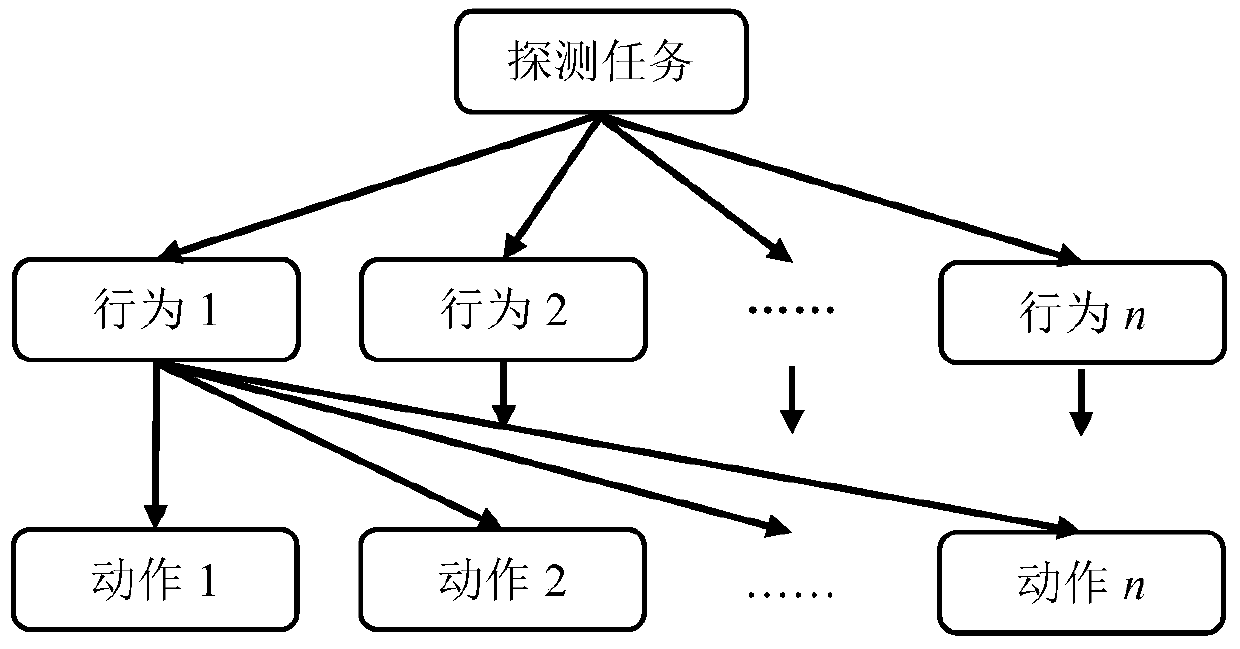

[0076] The present invention defines a three-layer structure for intelligent underwater robot tasks, namely a task layer, a behavior layer and an action layer. AUV behavior planning is performed when a sudden state is encountered, and a Deep Deterministic Policy Gradient (DDPG) controller is used for AUV motion control.

[0077] The implementation process includes the following three parts:

[0078] (1) Hierarchical design of intelligent underwater robot tasks;

[0079] (2) Behavior planning system construction;

[0080] (3) Design of the DDPG-based control algorithm.
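Part (3) relies on DDPG. As a minimal sketch of the update steps DDPG performs (critic regression toward a Bellman target computed with target networks, deterministic policy-gradient ascent for the actor, and Polyak soft updates of the targets), the block below uses linear function approximators in NumPy instead of neural networks. The class name `LinearDDPG`, the tanh-bounded actor, and all hyperparameter values are assumptions for illustration; the patent's actual network structure and its state and action definitions are not given in this excerpt.

```python
import numpy as np


class LinearDDPG:
    """DDPG update steps with linear approximators (illustrative sketch).

    Actor:  a = tanh(K s)           -> bounded actions in (-1, 1)
    Critic: Q(s, a) = w . [s, a]
    """

    def __init__(self, obs_dim, act_dim, gamma=0.99, tau=0.005,
                 lr_actor=1e-3, lr_critic=1e-2, seed=0):
        rng = np.random.default_rng(seed)
        self.K = 0.1 * rng.standard_normal((act_dim, obs_dim))  # actor weights
        self.w = 0.1 * rng.standard_normal(obs_dim + act_dim)   # critic weights
        self.K_targ, self.w_targ = self.K.copy(), self.w.copy()  # target networks
        self.gamma, self.tau = gamma, tau
        self.lr_a, self.lr_c = lr_actor, lr_critic
        self.act_dim = act_dim

    def act(self, s, K=None):
        K = self.K if K is None else K
        return np.tanh(s @ K.T)  # s: (batch, obs_dim) -> (batch, act_dim)

    def q(self, s, a, w=None):
        w = self.w if w is None else w
        return np.concatenate([s, a], axis=-1) @ w  # (batch,)

    def update(self, s, a, r, s2, done):
        """One DDPG step on a batch; returns the pre-update mean squared TD error."""
        # 1) Critic: regress Q(s, a) toward y = r + gamma * (1 - done) * Q'(s', mu'(s'))
        y = r + self.gamma * (1.0 - done) * self.q(s2, self.act(s2, self.K_targ), self.w_targ)
        phi = np.concatenate([s, a], axis=-1)
        td = phi @ self.w - y
        self.w -= self.lr_c * (phi.T @ td) / len(td)  # gradient of 0.5 * mean(td**2)

        # 2) Actor: ascend dQ/da * da/dK (deterministic policy gradient)
        a_pi = self.act(s)
        dq_da = self.w[-self.act_dim:]   # linear critic: dQ/da = w_a
        g = dq_da * (1.0 - a_pi ** 2)    # chain rule through tanh
        self.K += self.lr_a * (g.T @ s) / len(s)

        # 3) Soft (Polyak) update of the target networks
        self.K_targ = self.tau * self.K + (1.0 - self.tau) * self.K_targ
        self.w_targ = self.tau * self.w + (1.0 - self.tau) * self.w_targ
        return float(np.mean(td ** 2))
```

With a linear critic, dQ/da is constant, so the actor simply pushes actions toward saturation; a practical AUV controller would replace both approximators with neural networks and add a replay buffer and exploration noise while keeping the same update order.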

[0081] Further, part (1) proceeds as follows:

[0082] To stratify the underwater robot tunnel detection task, the concepts of task, behavior and action for intelligent underwater robot tunnel detection are defined: the underwater robot tunnel detectio...
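The three-layer decomposition (task, behavior, action) can be pictured as a small data structure in which a task owns a set of behaviors and each behavior is realized by an action-layer controller. The sketch below is a hypothetical illustration: the behavior names are the three listed in step 1.2 of the embodiment, while `Action`, its `surge`/`yaw` fields, the placeholder controllers, their gains and the 2 m wall set point are all assumptions. In the patent the action layer is driven by the DDPG controller rather than by fixed rules like these.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class Behavior(Enum):
    # Behavior layer: the three behaviors named in step 1.2
    APPROACH_TARGET = "approach target"
    WALL_TRACKING = "wall tracking"
    OBSTACLE_AVOIDANCE = "obstacle avoidance"


@dataclass(frozen=True)
class Action:
    # Action layer (hypothetical): normalized surge force and yaw moment in [-1, 1]
    surge: float
    yaw: float


@dataclass
class Task:
    # Task layer: a mission such as tunnel detection, composed of behaviors
    name: str
    behaviors: List[Behavior] = field(default_factory=list)


Controller = Callable[[Dict[str, float]], Action]


def clamp(x: float) -> float:
    return max(-1.0, min(1.0, x))


# Placeholder controllers (hypothetical gains); stand-ins for the DDPG controller.
def approach_target(state: Dict[str, float]) -> Action:
    return Action(surge=1.0, yaw=clamp(state["goal_bearing"]))


def wall_tracking(state: Dict[str, float]) -> Action:
    return Action(surge=0.5, yaw=clamp(0.5 * (state["wall_distance"] - 2.0)))


def obstacle_avoidance(state: Dict[str, float]) -> Action:
    return Action(surge=0.2, yaw=clamp(-state["obstacle_bearing"]))


CONTROLLERS: Dict[Behavior, Controller] = {
    Behavior.APPROACH_TARGET: approach_target,
    Behavior.WALL_TRACKING: wall_tracking,
    Behavior.OBSTACLE_AVOIDANCE: obstacle_avoidance,
}


def execute(task: Task, behavior: Behavior, state: Dict[str, float]) -> Action:
    """Dispatch the behavior chosen by the planner to its action-layer controller."""
    if behavior not in task.behaviors:
        raise ValueError(f"{behavior} is not part of task {task.name!r}")
    return CONTROLLERS[behavior](state)
```

Keeping the behavior-to-controller mapping in one table means the planner only has to output a `Behavior`, which mirrors the separation between the behavior layer and the action layer.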

Specific Embodiment 2

[0165] The process of establishing an AUV model with fuzzy hydrodynamic parameters described in Embodiment 1 is a common AUV dynamic modeling process and can be realized with existing techniques in the field. To make this process clearer, this embodiment describes how the AUV model with fuzzy hydrodynamic parameters is established. It should be noted that the present invention is not limited to the following method of establishing such a model. The model is built through the following steps:

[0166] Establish the hydrodynamic equation of the underwater robot:

[0167]

[0168] where $f$ is the random disturbance force; $M$ is the system inertia coefficient matrix, satisfying $M = M_{RB} + M_A \ge 0$; $M_{RB}$ is the inertia matrix of the carrier, satisfying …; $M_A$ is the added-mass coefficient matrix, satisfying …; and … is the Coriolis-centripetal f...
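The equation at [0167] did not survive extraction. The symbols defined above ($M = M_{RB} + M_A$, a Coriolis-centripetal term, and a random disturbance $f$) are consistent with the standard Fossen-type model $M\dot{\nu} + C(\nu)\nu + D(\nu)\nu + g(\eta) = \tau + f$, but the damping term $D$ and restoring term $g$ are an assumption here, since their definitions are cut off. The sketch below reduces that form to the surge degree of freedom, where the Coriolis-centripetal term vanishes and the restoring term is taken as zero (level attitude and neutral buoyancy assumed), and represents "fuzzy" hydrodynamic parameters as coefficients drawn uniformly within ±20 % of hypothetical nominal values. All names and numbers are illustrative.

```python
import numpy as np

# Hypothetical nominal surge parameters (SI units), for illustration only
NOMINAL = {"m": 50.0, "m_a": 5.0, "d_lin": 10.0, "d_quad": 20.0}
UNCERTAIN = ("m_a", "d_lin", "d_quad")  # hydrodynamic terms treated as uncertain


def sample_params(nominal, spread=0.2, rng=None):
    """Draw each uncertain coefficient uniformly within +/- spread of its nominal value."""
    rng = rng if rng is not None else np.random.default_rng()
    params = dict(nominal)
    for key in UNCERTAIN:
        params[key] = nominal[key] * rng.uniform(1.0 - spread, 1.0 + spread)
    return params


def surge_accel(u, tau, f, p):
    """(m + m_a) du/dt = tau + f - d_lin*u - d_quad*u*|u|."""
    return (tau + f - p["d_lin"] * u - p["d_quad"] * u * abs(u)) / (p["m"] + p["m_a"])


def simulate(tau, p, u0=0.0, dt=0.01, steps=2000, dist_std=0.0, rng=None):
    """Integrate surge speed with explicit Euler; f is Gaussian noise if dist_std > 0."""
    rng = rng if rng is not None else np.random.default_rng()
    u = u0
    for _ in range(steps):
        f = dist_std * rng.standard_normal()
        u += dt * surge_accel(u, tau, f, p)
    return u
```

Drawing a fresh parameter set for each training episode is one way to expose a learned controller to the parameter spread. With the nominal values and a constant thrust of 30 N, the steady surge speed solves $10u + 20u^2 = 30$, i.e. $u = 1$ m/s.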

Embodiment

[0184] The main purpose of the present invention is to let the underwater robot make behavior decisions and perform action control autonomously according to the current state of the underwater environment, freeing people from complicated programming. The specific implementation process is as follows:

[0185] 1) Use programming software to build a behavior planning simulation system for intelligent underwater robots based on deep reinforcement learning, and obtain the optimal decision-making strategy of the robot through simulation training. The specific steps are as follows:

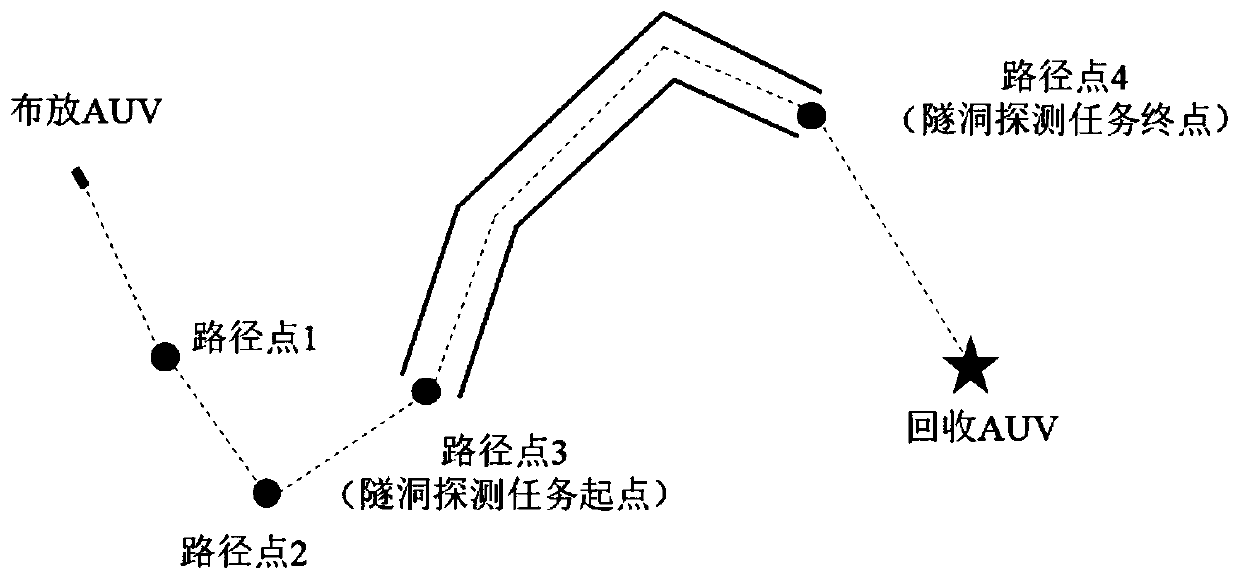

[0186] 1.1) Establish an environment model, determine the initial position and target point, and initialize the algorithm parameters;

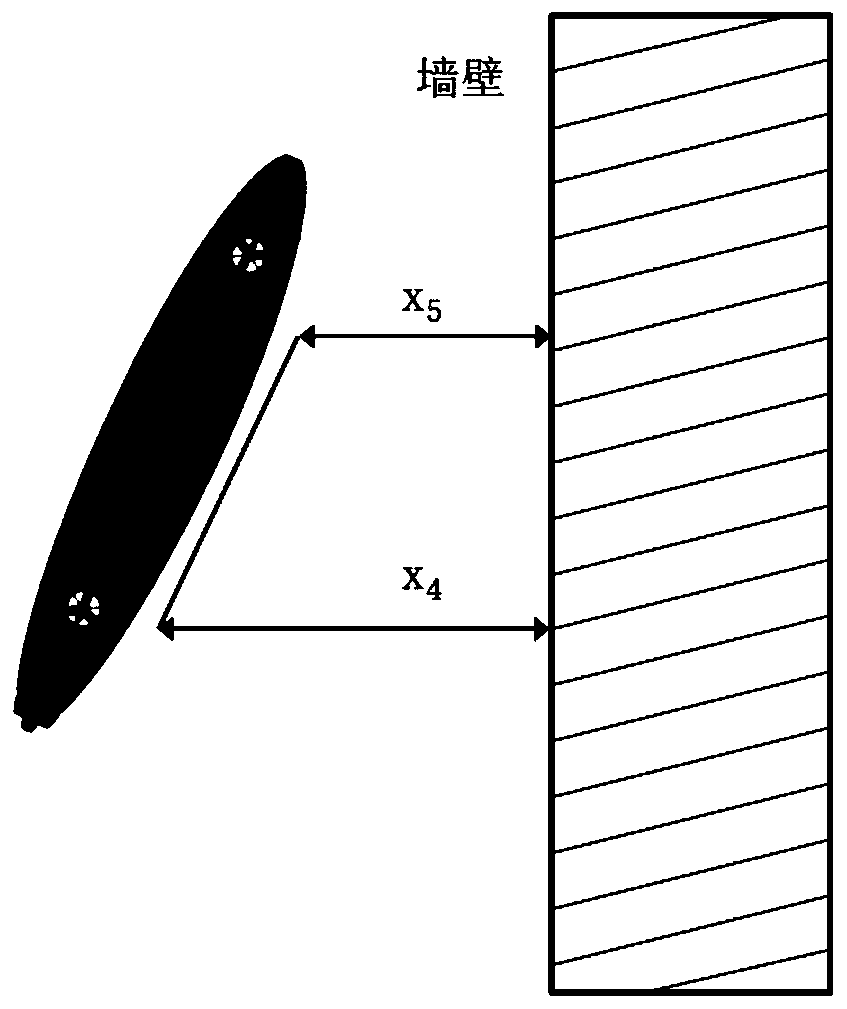

[0187] 1.2) Determine the current state of the environment and the robot task at time t, and decompose the task into behaviors: approaching the target, wall tracking, and obstacle avoidance;

[0188] 1.3) According to the curr...
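Steps 1.2 and 1.3 select one of three behaviors from the current state. The source trains this decision with deep reinforcement learning; as a deliberately simplified stand-in, the sketch below uses tabular Q-learning with ε-greedy selection over the three behaviors. The two-bit state encoding (obstacle near, wall near), the one-step episodes and the reward rule in `toy_reward` are hypothetical and exist only to make the example self-contained; a deep RL planner would replace the Q-table with a neural network over continuous sensor readings.

```python
import numpy as np

BEHAVIORS = ("approach target", "wall tracking", "obstacle avoidance")


def encode_state(obstacle_near: bool, wall_near: bool) -> int:
    """Hypothetical 2-bit state: index = 2*obstacle_near + wall_near."""
    return 2 * int(obstacle_near) + int(wall_near)


class BehaviorPlanner:
    """Tabular Q-learning over behaviors (stand-in for a deep RL planner)."""

    def __init__(self, n_states, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.q = np.zeros((n_states, len(BEHAVIORS)))
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = np.random.default_rng(seed)

    def select(self, s):
        # epsilon-greedy: explore with probability epsilon, otherwise exploit
        if self.rng.random() < self.epsilon:
            return int(self.rng.integers(len(BEHAVIORS)))
        return int(np.argmax(self.q[s]))

    def update(self, s, b, r, s_next, done):
        # Q-learning target: r for terminal steps, else r + gamma * max_b' Q(s', b')
        target = r if done else r + self.gamma * np.max(self.q[s_next])
        self.q[s, b] += self.alpha * (target - self.q[s, b])


def toy_reward(s, b):
    """Hypothetical teacher rule: avoid obstacles first, then follow walls."""
    obstacle_near, wall_near = divmod(s, 2)
    best = 2 if obstacle_near else (1 if wall_near else 0)
    return 1.0 if b == best else -1.0


def train(episodes=2000, seed=0):
    planner = BehaviorPlanner(n_states=4, seed=seed)
    rng = np.random.default_rng(seed + 1)
    for _ in range(episodes):
        s = int(rng.integers(4))  # random situation encountered at time t
        b = planner.select(s)
        planner.update(s, b, toy_reward(s, b), s, done=True)  # one-step episode
    return planner
```

After training, the greedy behavior for a state is `BEHAVIORS[int(np.argmax(planner.q[s]))]`; the selected behavior would then be handed to the action-layer controller.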
