Character action recognition method based on VR training equipment

A technology for recognizing character actions, applied in fields such as input/output for data processing, user/computer interaction, and instruments. The effect is a simple, versatile judgment method.


Embodiment Construction

[0011] The technical solutions of the present invention are described in further detail below through specific embodiments.

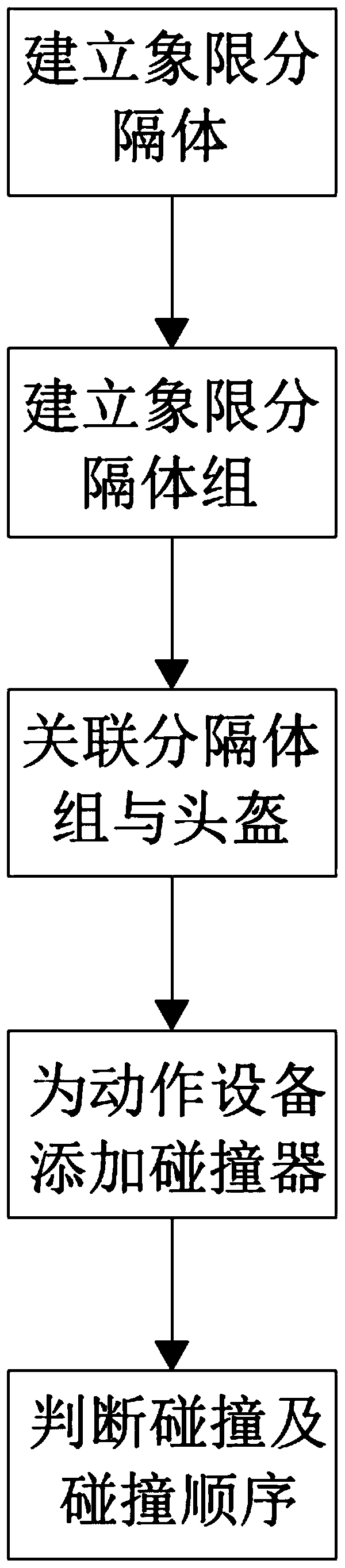

[0012] As shown in Figure 1, a method for character action recognition based on VR training equipment includes the following steps: S01, establish a plurality of virtual quadrant separators in the VR engine; S02, associate the established quadrant separators to form a separator group whose state information and position information change synchronously; S03, keep the center of the separator group consistent with the center of the helmet in the VR engine at all times; S04, name each quadrant separator; S05, add a collider to the device whose position or motion is to be judged; S06, judge the collisions between the collider and the quadrant separators, and the order in which those collisions occur, to recognize and track the motion.
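
Steps S01 to S06 can be illustrated with a small engine-independent sketch. It is not the patent's implementation: it assumes eight separators (one per octant around the helmet), replaces the engine's collision events with a simple point-in-octant test, and uses hypothetical names throughout (`quadrant_name`, `SeparatorGroup`, `MotionTracker`, and the "right-up-front" style labels). The number, shape, and naming of the separators in the actual method are not given in this text.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def quadrant_name(offset: Vec3) -> str:
    """S01/S04 (assumed layout): name the octant that contains an offset."""
    x, y, z = offset
    return "-".join((
        "right" if x >= 0 else "left",
        "up" if y >= 0 else "down",
        "front" if z >= 0 else "back",
    ))


@dataclass
class SeparatorGroup:
    """S02: all separators share one center, so they move together."""
    center: Vec3 = (0.0, 0.0, 0.0)

    def follow(self, helmet_pos: Vec3) -> None:
        # S03: keep the group centered on the helmet.
        self.center = helmet_pos

    def hit(self, device_pos: Vec3) -> str:
        # Stand-in for a collision event: which separator holds the device.
        dx, dy, dz = (d - c for d, c in zip(device_pos, self.center))
        return quadrant_name((dx, dy, dz))


@dataclass
class MotionTracker:
    """S05/S06: record the order in which the device meets separators."""
    group: SeparatorGroup
    sequence: List[str] = field(default_factory=list)

    def update(self, helmet_pos: Vec3, device_pos: Vec3) -> str:
        self.group.follow(helmet_pos)
        name = self.group.hit(device_pos)
        # Only a change of separator counts as a new collision.
        if not self.sequence or self.sequence[-1] != name:
            self.sequence.append(name)
        return name

    def matches(self, pattern: Sequence[str]) -> bool:
        # A motion is recognized when the latest collisions end with the pattern.
        n = len(pattern)
        return n > 0 and self.sequence[-n:] == list(pattern)


if __name__ == "__main__":
    tracker = MotionTracker(SeparatorGroup())
    tracker.update((0.0, 1.7, 0.0), (0.3, 1.2, 0.4))  # hand below head height
    tracker.update((0.0, 1.7, 0.0), (0.3, 2.0, 0.4))  # hand raised above head
    print(tracker.sequence)
    print(tracker.matches(["right-down-front", "right-up-front"]))
```

Because the device position is always measured relative to the helmet, the recorded sequence does not change when the user walks around while holding the same pose, which is the purpose of keeping the separator group centered on the helmet in S03.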

[0013] In practice, a VR device includes a helmet, a handle, and at least two locators. The locator is us...
