A virtual social method based on avatar expression transplantation

A technology involving facial expressions and facial features, applied in the field of virtual social interaction based on Avatar expression transplantation

Examples

Embodiment 1

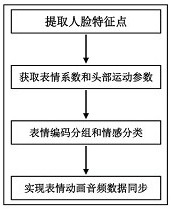

[0030] Referring to Figures 1 to 4, the virtual social method based on Avatar expression transplantation is characterized by the following specific steps:

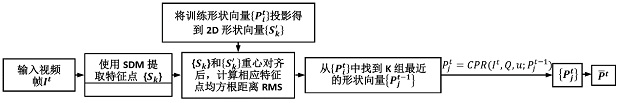

[0031] Step 1. Use SDM to extract face feature points from the real-time input video stream:

[0032] The supervised descent method (SDM), which minimizes a nonlinear least squares (NLS) function, is used to extract face feature points in real time. During training, SDM learns the descent directions that minimize the average value of the NLS function over different sampling points. In the test phase, OpenCV face detection selects the facial region of interest and the average 2D shape model is initialized inside it, so solving the face alignment problem reduces to finding the step size along the descent direction. The learned descent directions are then used to minimize the NLS function, which realizes real-time 2D face feature point extraction;
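The training/test split described above can be illustrated with a toy one-dimensional analogue. This is a sketch, not the patent's implementation: the "landmark" is a scalar `x`, the "image evidence" is `y = sin(x_true)`, and each cascade stage learns a scalar descent map `(R_k, b_k)` by linear least squares. Using `sin(x) - y` in place of image features, the training range [-1, 1], and the number of stages are assumptions chosen for illustration; every sample starts from the same value, mirroring initialization from the average shape.

```python
import math
from typing import Callable, List, Tuple

Stage = Tuple[float, float]  # (R_k, b_k) for one cascade stage


def fit_stage(phis: List[float], deltas: List[float]) -> Stage:
    """Closed-form 1-D least squares: delta ~= R * phi + b."""
    n = len(phis)
    mean_p = sum(phis) / n
    mean_d = sum(deltas) / n
    var_p = sum((p - mean_p) ** 2 for p in phis)
    cov = sum((p - mean_p) * (d - mean_d) for p, d in zip(phis, deltas))
    r = cov / var_p if var_p > 0 else 0.0
    return r, mean_d - r * mean_p


def train_sdm(h: Callable[[float], float], targets: List[float],
              x0: float, n_stages: int) -> Tuple[List[Stage], List[float]]:
    """Learn a cascade of descent maps; also return the training MSE after each stage."""
    ys = [h(t) for t in targets]  # observed "image evidence" y = h(x_true)
    xs = [x0] * len(targets)      # every sample starts from the same mean estimate
    stages: List[Stage] = []
    mse = [sum((t - x) ** 2 for t, x in zip(targets, xs)) / len(xs)]
    for _ in range(n_stages):
        phis = [h(x) - y for x, y in zip(xs, ys)]      # feature residual at the current estimate
        deltas = [t - x for t, x in zip(targets, xs)]  # ideal update toward the ground truth
        r, b = fit_stage(phis, deltas)
        stages.append((r, b))
        xs = [x + r * p + b for x, p in zip(xs, phis)]  # apply the learned descent step
        mse.append(sum((t - x) ** 2 for t, x in zip(targets, xs)) / len(xs))
    return stages, mse


def run_sdm(h: Callable[[float], float], y: float, x0: float,
            stages: List[Stage]) -> float:
    """Test time: no Jacobian or Hessian, only the stored (R_k, b_k) updates."""
    x = x0
    for r, b in stages:
        x = x + r * (h(x) - y) + b
    return x


if __name__ == "__main__":
    train_targets = [-1.0 + 2.0 * i / 40 for i in range(41)]
    stages, mse = train_sdm(math.sin, train_targets, x0=0.0, n_stages=4)
    x_hat = run_sdm(math.sin, 0.5, 0.0, stages)
    print(f"training MSE per stage: {mse}")
    print(f"estimate {x_hat:.4f} vs asin(0.5) = {math.asin(0.5):.4f}")
```

Because each stage is an ordinary least-squares fit that includes `(R_k, b_k) = (0, 0)` as a candidate, the training error cannot increase from one stage to the next, and the test-time loop needs only the stored coefficients.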

[0033] Step 2. Facial semantic features are used as the input of the DDE model train...

Embodiment 2

[0046] This embodiment is basically the same as Embodiment 1, with the following distinguishing features:

[0047] 1. In the first step, SDM is used to extract face feature points from the real-time input video stream. A series of descent directions, and the step sizes along those directions, are learned from a public image set so that the objective function converges to its minimum very quickly, which avoids having to compute the Jacobian and Hessian matrices.
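To make the contrast with Newton-type solvers concrete, here is a sketch in the notation commonly used for SDM (these symbols are assumptions and are not defined in this patent text): $\mathbf{x}$ stacks the landmark coordinates, $\mathbf{h}(\mathbf{d}(\mathbf{x}))$ extracts image features around them from image $\mathbf{d}$, and $\boldsymbol{\phi}_*$ denotes the features at the true landmarks. Face alignment minimizes

$$
f(\mathbf{x}_0 + \Delta\mathbf{x}) = \left\| \mathbf{h}\big(\mathbf{d}(\mathbf{x}_0 + \Delta\mathbf{x})\big) - \boldsymbol{\phi}_* \right\|_2^2 .
$$

A Newton step from the mean-shape initialization $\mathbf{x}_0$ requires the Hessian $\mathbf{H}$ of $f$ and the Jacobian $\mathbf{J}_h$ of the feature map:

$$
\Delta\mathbf{x}_1 = -2\,\mathbf{H}^{-1}\mathbf{J}_h^{\top}\left(\boldsymbol{\phi}_0 - \boldsymbol{\phi}_*\right).
$$

SDM replaces this with a learned affine update, where $\boldsymbol{\phi}_{k-1} = \mathbf{h}(\mathbf{d}(\mathbf{x}_{k-1}))$:

$$
\mathbf{x}_k = \mathbf{x}_{k-1} + \mathbf{R}_{k-1}\boldsymbol{\phi}_{k-1} + \mathbf{b}_{k-1},
$$

and each stage's $(\mathbf{R}_k, \mathbf{b}_k)$ is fitted offline by linear least squares over training images $\mathbf{d}^i$ and their current shape estimates $\mathbf{x}_k^i$, with $\Delta\mathbf{x}_*^{ki} = \mathbf{x}_*^i - \mathbf{x}_k^i$:

$$
\mathop{\arg\min}_{\mathbf{R}_k,\,\mathbf{b}_k} \sum_{\mathbf{d}^i} \sum_{\mathbf{x}_k^i} \left\| \Delta\mathbf{x}_*^{ki} - \mathbf{R}_k\boldsymbol{\phi}_k^i - \mathbf{b}_k \right\|^2 .
$$

At test time only matrix-vector products remain, so neither $\mathbf{J}_h$ nor $\mathbf{H}$ is ever evaluated.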

[0048] 2. The virtual social method based on Avatar expression transplantation is characterized in that the DDE model trained by CPR in step 2 is used to obtain the expression coefficients and head movement parameters as follows: the Blendshape expression model realizes expression animation re-enactment through a linear combination of basic poses. Because a given facial expression performed by different people corresponds to a similar set of basis weights, it is convenient to transfer the performer's facial expression ...
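The linear-combination idea can be sketched with a delta blendshape model, B(w) = B0 + sum_i w_i (B_i - B0): transplantation then amounts to evaluating the avatar's own neutral mesh and basic poses with the performer's weights. The delta formulation, the flattened vertex lists, the clamping of weights to [0, 1], and the assumption that performer and avatar rigs share the same ordered set of basic poses are illustrative choices, not details given in the patent; the function names are hypothetical.

```python
from typing import List, Sequence

Vec = List[float]  # flattened vertex coordinates (x0, y0, z0, x1, ...)


def blend(neutral: Sequence[float], bases: Sequence[Sequence[float]],
          weights: Sequence[float]) -> Vec:
    """Delta blendshape model: neutral + sum_i w_i * (base_i - neutral)."""
    if len(bases) != len(weights):
        raise ValueError("one weight per basic pose is required")
    out = list(neutral)
    for base, w in zip(bases, weights):
        for j, (b, n) in enumerate(zip(base, neutral)):
            out[j] += w * (b - n)
    return out


def transfer(weights: Sequence[float], avatar_neutral: Sequence[float],
             avatar_bases: Sequence[Sequence[float]]) -> Vec:
    """Reuse the performer's weights on the avatar's own rig.

    Clamping to [0, 1] is an assumption (a common convention for blendshape weights).
    """
    clamped = [min(1.0, max(0.0, w)) for w in weights]
    return blend(avatar_neutral, avatar_bases, clamped)


if __name__ == "__main__":
    # Hypothetical 2-vertex avatar in 2D: basic poses "jaw open" and "smile".
    neutral = [0.0, 0.0, 1.0, 0.0]
    bases = [[0.0, -1.0, 1.0, 0.0], [0.0, 0.0, 1.0, 0.5]]
    print(transfer([0.5, 1.0], neutral, bases))
```

Because weights rather than vertex positions are transferred, the avatar mesh can differ in geometry and vertex count from the performer's tracking model, as long as both rigs define the same expressions in the same order.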

Embodiment 3

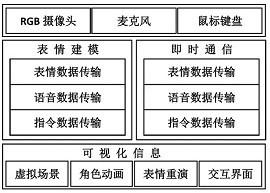

[0052] A virtual social method based on Avatar expression transplantation, see Figure 1. The main steps are: use SDM to extract face feature points from the real-time input video stream; use the 2D facial semantic features as the input of the DDE model trained by CPR, and transplant the output expression coefficients and head motion parameters to the Avatar; perform expression-coding grouping and emotion classification on the expression coefficients output by the DDE model; and synchronize the expression animation and audio data through a network transmission strategy, as shown in Figure 2.
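The patent text here does not specify how the expression coefficients are encoded or how animation and audio are kept in sync. One common approach, sketched below purely as an assumption, is to quantize each coefficient to one byte, prefix a timestamp taken from the same clock as the audio stream, and have the receiver display the newest expression frame that is not later than the current audio playback position. The packet layout (a `<IB` header followed by one byte per coefficient) and all names are hypothetical.

```python
import struct
from bisect import bisect_right
from typing import List, Optional, Sequence, Tuple

# Hypothetical wire format: uint32 timestamp (ms), uint8 count, one uint8 per coefficient.
HEADER = struct.Struct("<IB")


def encode_frame(timestamp_ms: int, coeffs: Sequence[float]) -> bytes:
    """Quantize coefficients in [0, 1] to 8 bits (at most 255 of them) behind a timestamp."""
    q = bytes(round(min(1.0, max(0.0, c)) * 255) for c in coeffs)
    return HEADER.pack(timestamp_ms, len(q)) + q


def decode_frame(packet: bytes) -> Tuple[int, List[float]]:
    """Inverse of encode_frame, up to quantization error of 1/255."""
    timestamp_ms, n = HEADER.unpack_from(packet)
    body = packet[HEADER.size:HEADER.size + n]
    return timestamp_ms, [b / 255 for b in body]


class ExpressionSync:
    """Receiver side: buffer decoded frames and pick the one matching the audio clock."""

    def __init__(self) -> None:
        self._times: List[int] = []
        self._frames: List[List[float]] = []

    def push(self, packet: bytes) -> None:
        t, coeffs = decode_frame(packet)
        i = bisect_right(self._times, t)  # keep frames sorted even if packets arrive out of order
        self._times.insert(i, t)
        self._frames.insert(i, coeffs)

    def frame_for_audio(self, audio_ms: int) -> Optional[List[float]]:
        """Latest frame whose timestamp is not after the audio playback position."""
        i = bisect_right(self._times, audio_ms)
        return self._frames[i - 1] if i else None
```

Driving the avatar from the audio clock rather than from packet arrival time keeps facial motion aligned with speech even when packets arrive late or out of order; the grouping and emotion-classification steps would operate on the decoded coefficients and are not shown.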

[0053] 1. Use SDM to extract face feature points from the real-time input video stream:

[0054] The supervised descent method (SDM), which minimizes a nonlinear least squares function, is used to extract face feature points in real time; that is, the descent directions that minimize the average value of the NLS function over different sampling points are learned during training, and th...
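In Step 1 the cascade starts from the average 2D shape placed in the face region found by OpenCV. A minimal sketch of that placement, assuming the mean shape is stored in coordinates normalized to the unit square of the face box and that the detector returns an `(x, y, w, h)` rectangle, as `cv2.CascadeClassifier.detectMultiScale` does. The patent does not say which OpenCV detector is used; the function name, the normalization convention, and the 3-point mean shape are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Rect = Tuple[int, int, int, int]  # (x, y, w, h): top-left corner plus size


def init_shape_in_roi(mean_shape: Sequence[Point], roi: Rect) -> List[Point]:
    """Scale and translate a normalized mean shape (u, v in [0, 1]) into the face box."""
    x, y, w, h = roi
    return [(x + u * w, y + v * h) for u, v in mean_shape]


# Hypothetical 3-point mean shape: left eye, right eye, mouth centre.
MEAN_SHAPE = [(0.3, 0.4), (0.7, 0.4), (0.5, 0.8)]

if __name__ == "__main__":
    face_box = (100, 50, 200, 100)  # e.g. one rectangle returned by a face detector
    print(init_shape_in_roi(MEAN_SHAPE, face_box))
```

In a full pipeline the mean shape would typically be the average of aligned training annotations, and the returned points serve as the starting estimate for the first SDM stage.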
