Model texture generation method based on generative adversarial network

A generative adversarial network and texture technology, applied in the field of computer graphics, that addresses problems such as large texture color differences, overlapping colors at texture boundaries, and cracks, with the effect of reducing production costs and ensuring diversity.

Embodiment Construction

[0051] In order to describe the present invention more specifically, the method for generating model textures of the present invention will be described in detail below in conjunction with the drawings and specific embodiments.

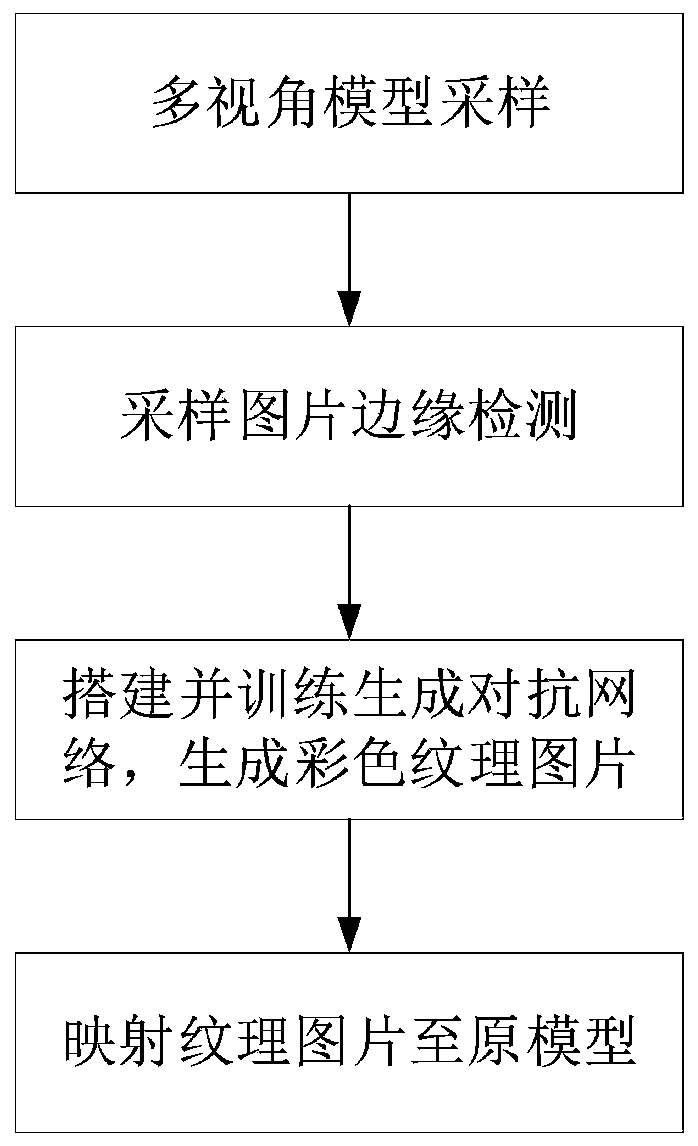

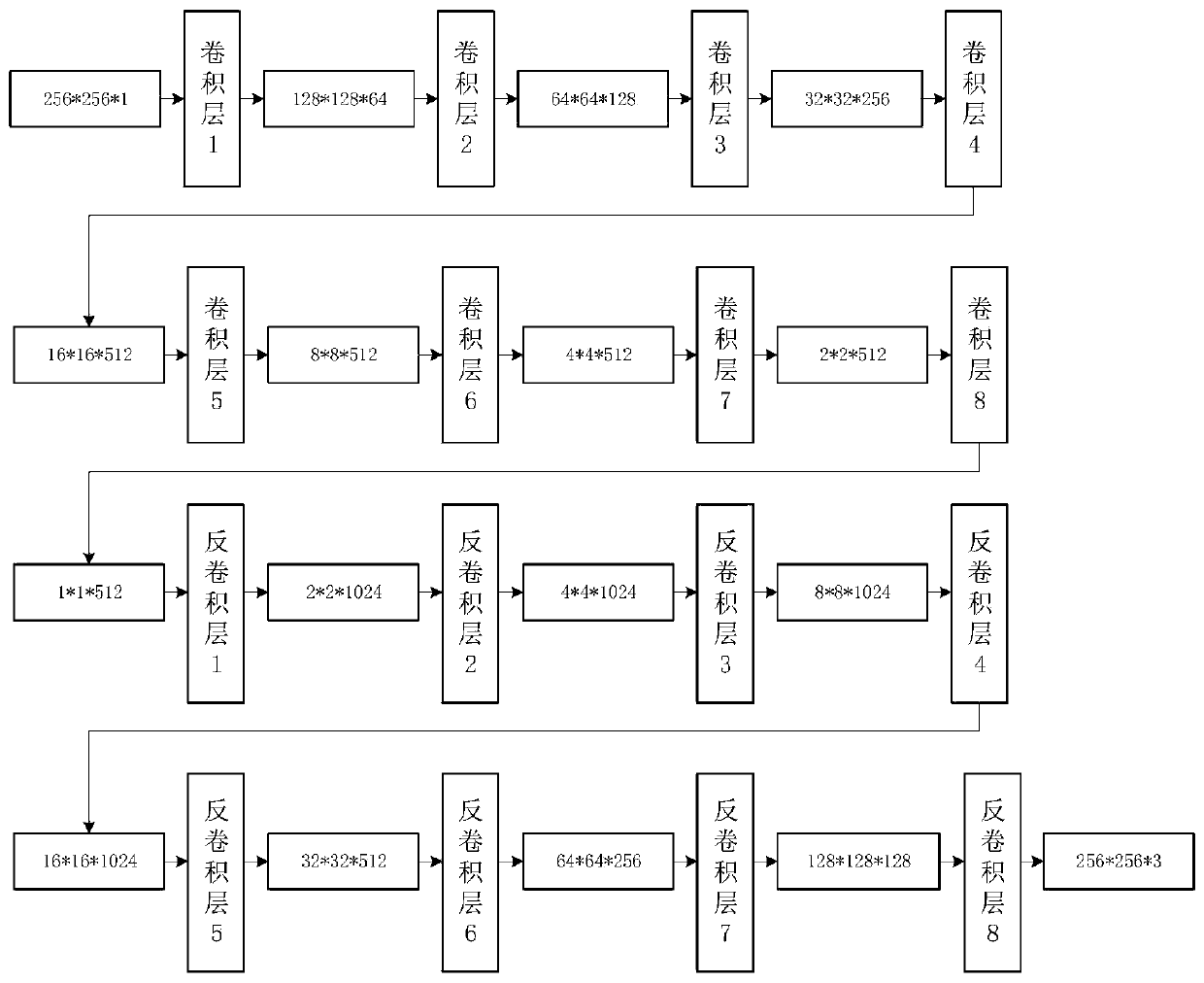

[0052] As shown in Figure 1, a model texture generation method based on a generative adversarial network includes the following steps:

[0053] Step (1): for the input model, that is, a 3D model without texture maps, sample images of the model are captured from multiple viewpoints according to a given sampling rule. This specifically includes the following steps:

[0054] 1-1. Set the coordinates of the model so that its center position is moved to the coordinate origin of the virtual world;

[0055] 1-2. Set the initial position of the camera, the orientation of the lens, and the size of the viewport;

[0056] 1-3. Sample the model at each of the configured sampling points in sequence, specifically:

[0057] 1-3-1...
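
Steps 1-1 and 1-2 above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patent's implementation: the "center position" is taken to be the bounding-box center, the camera is described by a plain dictionary, and the function names (`center_model`, `make_camera`, `ring_viewpoints`, `sample_cameras`) are hypothetical. The sampling rule of step 1-3 is not given in this excerpt, so the evenly spaced ring of viewpoints below is a placeholder rather than the rule the method prescribes.

```python
import math


def center_model(vertices):
    """Step 1-1 (assumed reading): translate the vertices so that the
    bounding-box center of the model lies at the world origin."""
    mins = [min(v[i] for v in vertices) for i in range(3)]
    maxs = [max(v[i] for v in vertices) for i in range(3)]
    center = [(lo + hi) / 2.0 for lo, hi in zip(mins, maxs)]
    return [tuple(v[i] - center[i] for i in range(3)) for v in vertices]


def make_camera(position, target=(0.0, 0.0, 0.0), viewport=(512, 512)):
    """Step 1-2: record the camera position, a unit view direction pointing
    at the target, and the viewport size (512x512 is an arbitrary default)."""
    d = [t - p for p, t in zip(position, target)]
    length = math.sqrt(sum(c * c for c in d))
    if length == 0.0:
        raise ValueError("camera position must differ from the target")
    return {
        "position": tuple(position),
        "direction": tuple(c / length for c in d),
        "viewport": viewport,
    }


def ring_viewpoints(count, radius, height=0.0):
    """Placeholder sampling points: evenly spaced on a horizontal circle
    around the origin. The actual sampling rule is not specified here."""
    points = []
    for k in range(count):
        angle = 2.0 * math.pi * k / count
        points.append((radius * math.cos(angle), height, radius * math.sin(angle)))
    return points


def sample_cameras(vertices, count=8, radius=3.0):
    """Center the model, then build one camera per placeholder viewpoint."""
    centered = center_model(vertices)
    cameras = [make_camera(p) for p in ring_viewpoints(count, radius)]
    return centered, cameras
```

In a real pipeline each camera would be handed to a renderer to capture one sample image of the untextured model; that rendering step is outside the scope of this sketch.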
