End-to-end context-based knowledge base question and answer method and device

A technology combining context and a knowledge base, applied in the field of knowledge base question answering. It addresses the problem that relations are represented in a single, isolated form, and has the effects of increasing the richness of relation representations, making them easy to capture, and avoiding error propagation.

AI Technical Summary

Problems solved by technology

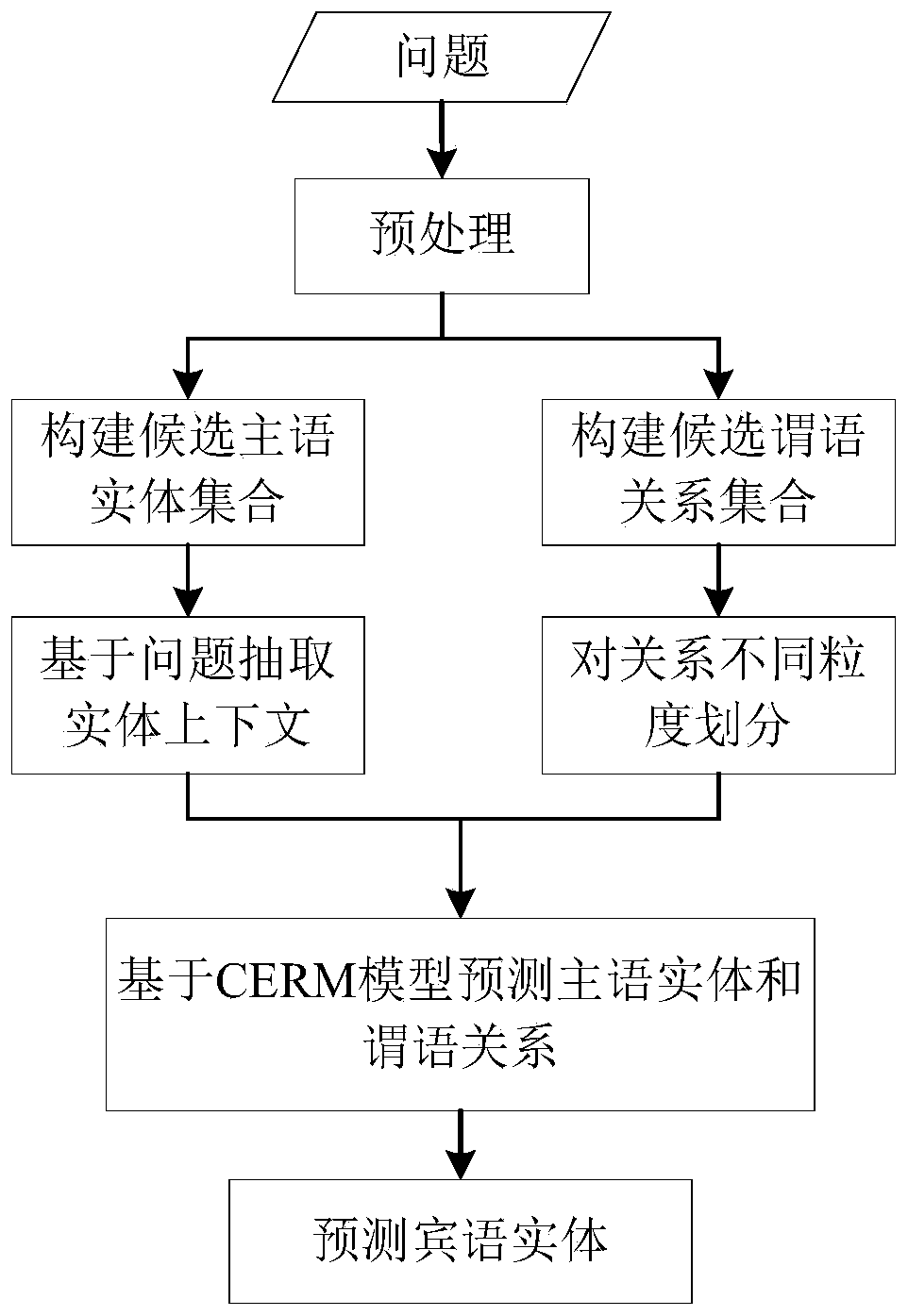

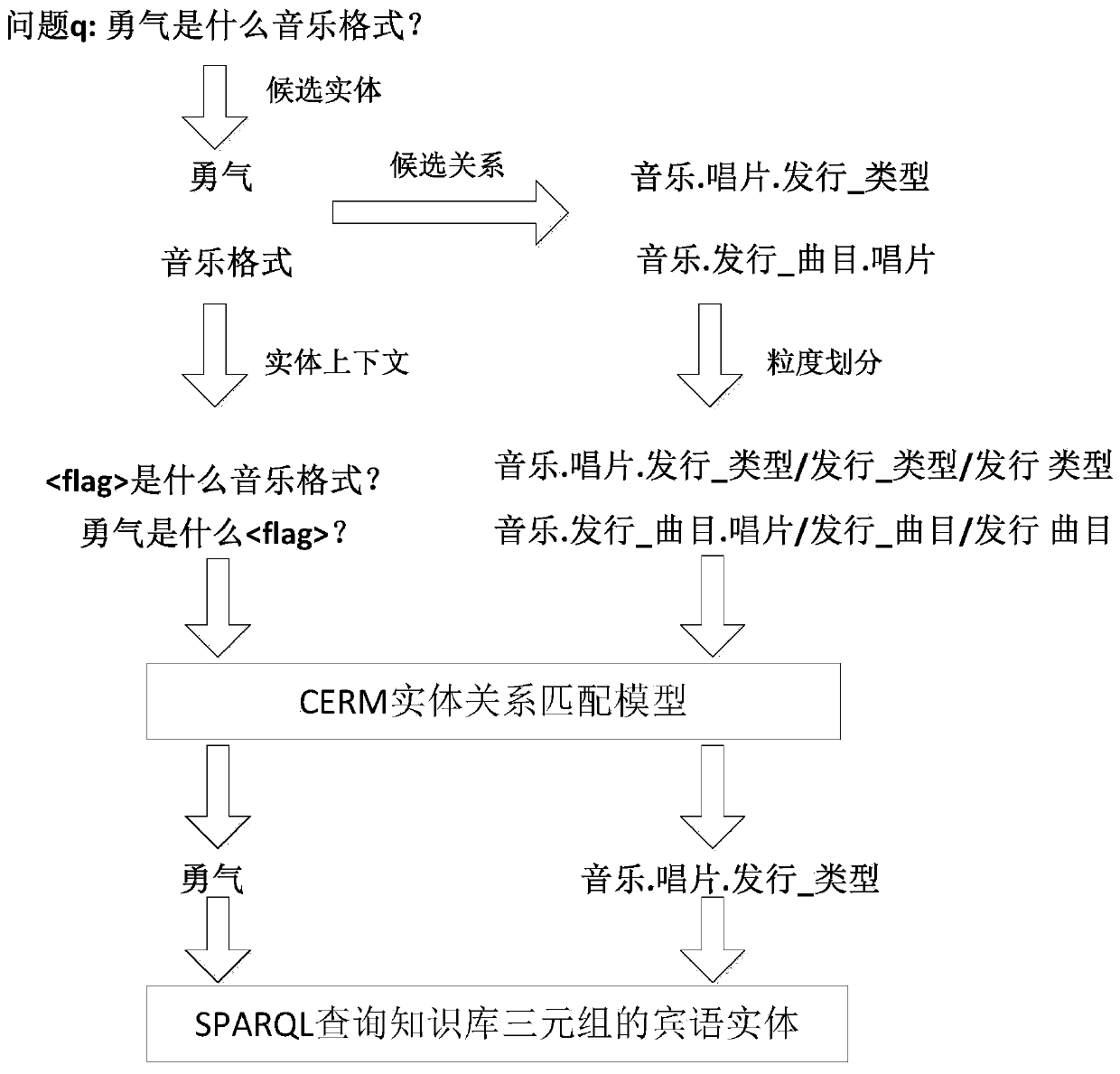

Examples

Specific embodiment

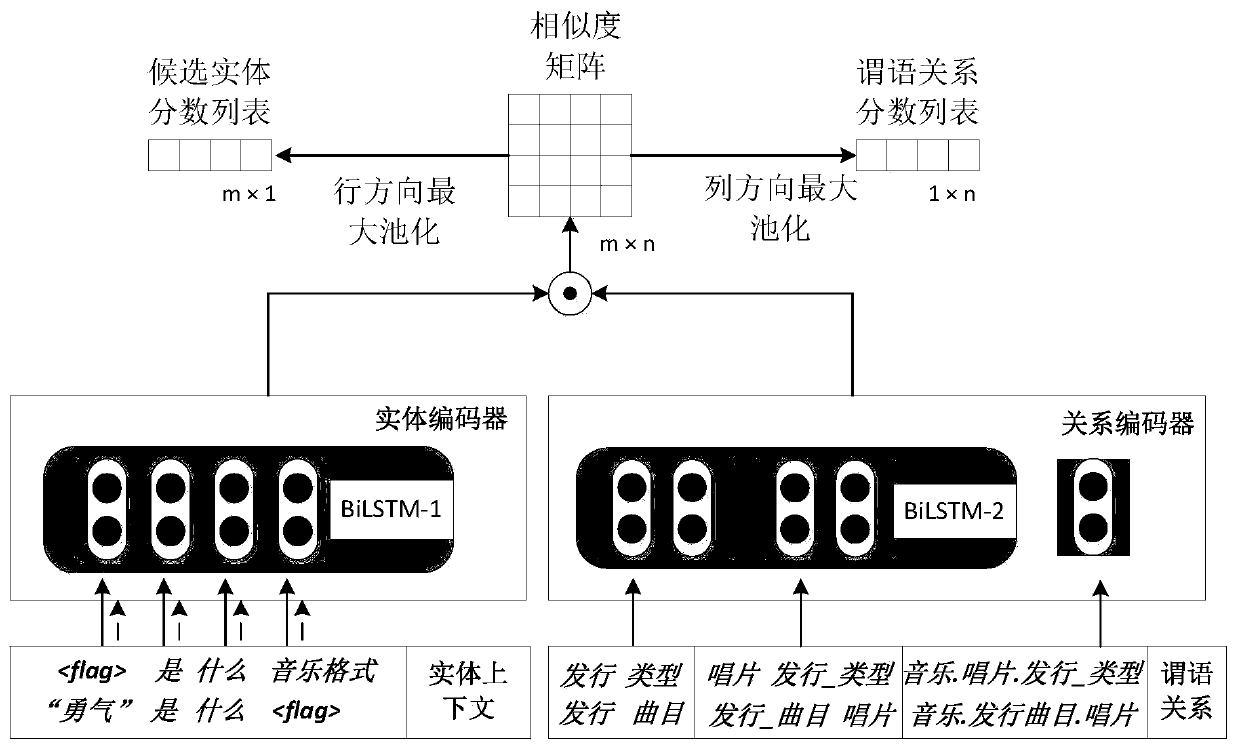

[0047] b) Relation encoder: After a relation is divided into phrases and words, the phrases and the words are each treated as a sequence, and a deep Bi-LSTM neural network converts them into distributed vectors; the relation-level representation itself is taken directly from the relation's initialization vector in the vocabulary. The specific implementation is as follows. Let the matrix $V \in \mathbb{R}^{v \times d}$ be the word embedding matrix, which is initialized randomly and continuously updates the word representations during training, where $v$ is the number of words in the vocabulary and $d$ is the dimension of the word vectors. First, the representations of the three granularities are looked up in the word embedding matrix; then the Bi-LSTM models the phrase sequence and the word sequence separately, learning the phrase representation $h(p^*)$ and the word representation $h(\omega^*)$, and the last hidden state of the deep sequence model is selected as the feature vector of the relation at the "phrase level" ...
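The relation encoder described above can be illustrated with a minimal, dependency-free Python sketch. It assumes a Freebase-style relation name, a hypothetical split rule (phrases on `.`, words additionally on `_`), toy sizes `D` and `H`, and separate Bi-LSTMs for the phrase and word sequences; none of these details come from the source, and all weights are untrained random values. It shows the three granularities drawn from one embedding matrix `V` and the Bi-LSTM's final hidden states used as the phrase-level and word-level feature vectors.

```python
import math
import random

# Hypothetical sizes: the source does not give d or the hidden size.
D = 4   # word-vector dimension d
H = 3   # hidden units per LSTM direction (assumption)
rng = random.Random(0)


def rand_matrix(rows, cols, scale=0.1):
    """Random initialization, as the source states for V."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def split_relation(relation):
    """Hypothetical split of a Freebase-style relation into phrases and words."""
    phrases = relation.split(".")
    words = [w for p in phrases for w in p.split("_")]
    return phrases, words


relation = "people.person.place_of_birth"  # hypothetical example relation
phrases, words = split_relation(relation)

# One vocabulary holds all three granularities: relation, phrases, words.
vocab = list(dict.fromkeys([relation] + phrases + words))
index = {tok: i for i, tok in enumerate(vocab)}
V = rand_matrix(len(vocab), D)  # V in R^{v x d}; would be updated during training


def embed(tokens):
    """Look up each token's row of the embedding matrix V."""
    return [V[index[t]] for t in tokens]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class LSTM:
    """Single-direction LSTM with input, forget, output gates and candidate g."""

    def __init__(self, input_size, hidden_size):
        self.hidden_size = hidden_size
        # One weight matrix per gate over the concatenation [x; h_prev].
        self.W = [rand_matrix(hidden_size, input_size + hidden_size) for _ in range(4)]
        self.b = [[0.0] * hidden_size for _ in range(4)]

    def step(self, x, h_prev, c_prev):
        z = x + h_prev  # list concatenation [x; h_prev]
        pre = [[sum(w * v for w, v in zip(row, z)) + bias for row, bias in zip(gw, gb)]
               for gw, gb in zip(self.W, self.b)]
        i = [sigmoid(a) for a in pre[0]]
        f = [sigmoid(a) for a in pre[1]]
        o = [sigmoid(a) for a in pre[2]]
        g = [math.tanh(a) for a in pre[3]]
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return h, c

    def last_hidden(self, xs):
        h, c = [0.0] * self.hidden_size, [0.0] * self.hidden_size
        for x in xs:
            h, c = self.step(x, h, c)
        return h  # hidden state after the final input


class BiLSTM:
    """Concatenates the final forward and final backward hidden states."""

    def __init__(self, input_size, hidden_size):
        self.fwd = LSTM(input_size, hidden_size)
        self.bwd = LSTM(input_size, hidden_size)

    def encode(self, xs):
        return self.fwd.last_hidden(xs) + self.bwd.last_hidden(xs[::-1])


# Separate encoders for phrases and words (an assumption; one could be shared).
phrase_encoder = BiLSTM(D, H)
word_encoder = BiLSTM(D, H)

h_rel = V[index[relation]]                        # relation level: raw embedding row
h_phrase = phrase_encoder.encode(embed(phrases))  # h(p*)
h_word = word_encoder.encode(embed(words))        # h(omega*)
print(len(h_rel), len(h_phrase), len(h_word))     # 4 6 6
```

Reading "last hidden state" of a Bi-LSTM as the forward state after the last token concatenated with the backward state after the first token is a common convention, not something the source specifies. A practical implementation would use a deep-learning framework and train `V` and the LSTM weights jointly.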
