Method for evaluating robot positioning accuracy based on monocular vision

A robot positioning accuracy evaluation technique, applied in the field of robot vision, that addresses the problems of increased complexity, extensive modification of the environment, and high application cost. It offers a simple evaluation method, lowers the cost of use, and improves work efficiency.

Embodiment Construction

[0039] The present invention will be further described below in conjunction with specific embodiments:

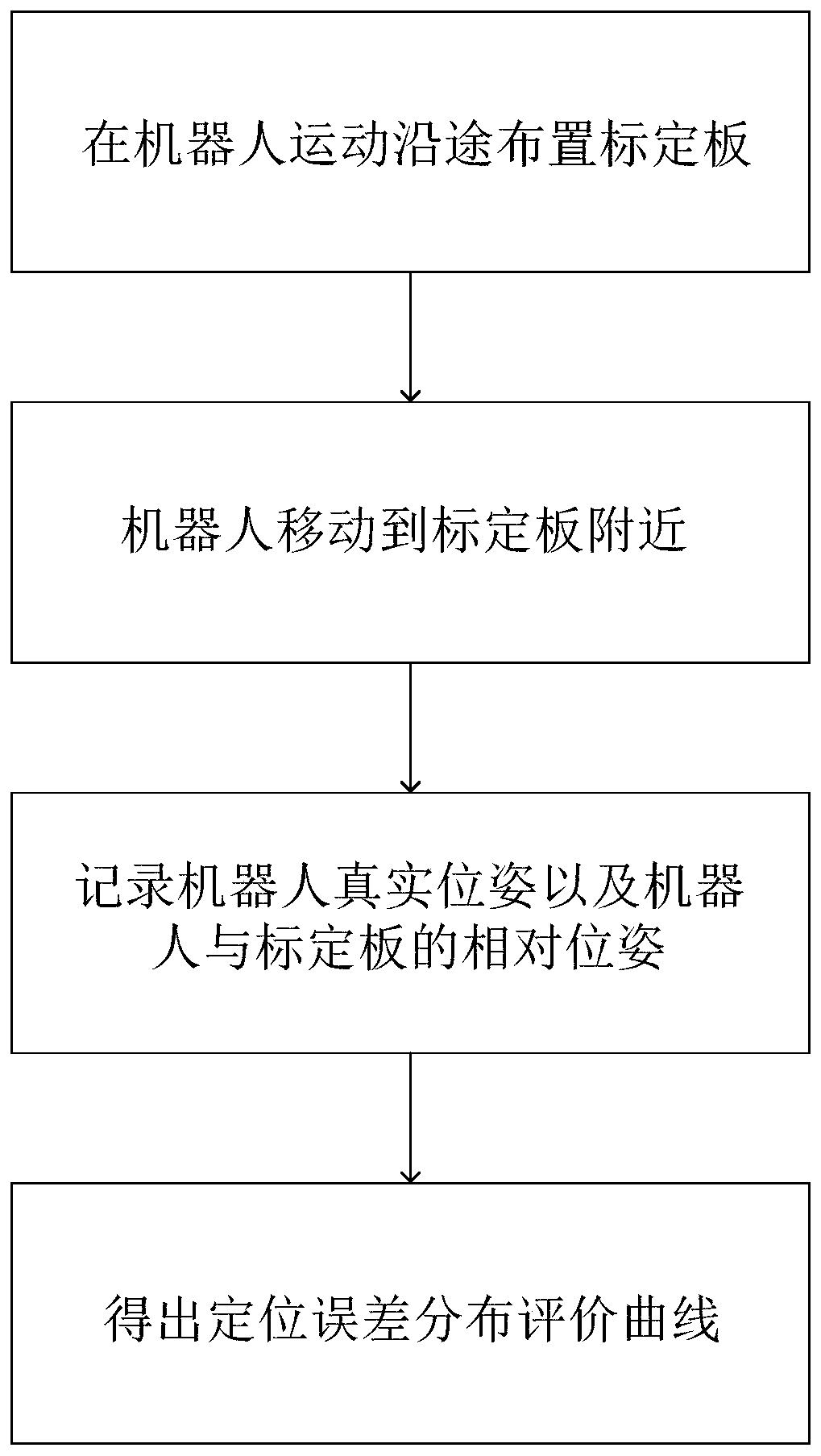

[0040] As shown in Figures 1 and 2, the method for evaluating robot positioning accuracy based on monocular vision in this embodiment includes the following steps:

[0041] S1: Arrange calibration boards within visual range along the robot's path of motion;

[0042] S2: The robot moves to a calibration board in the motion space;

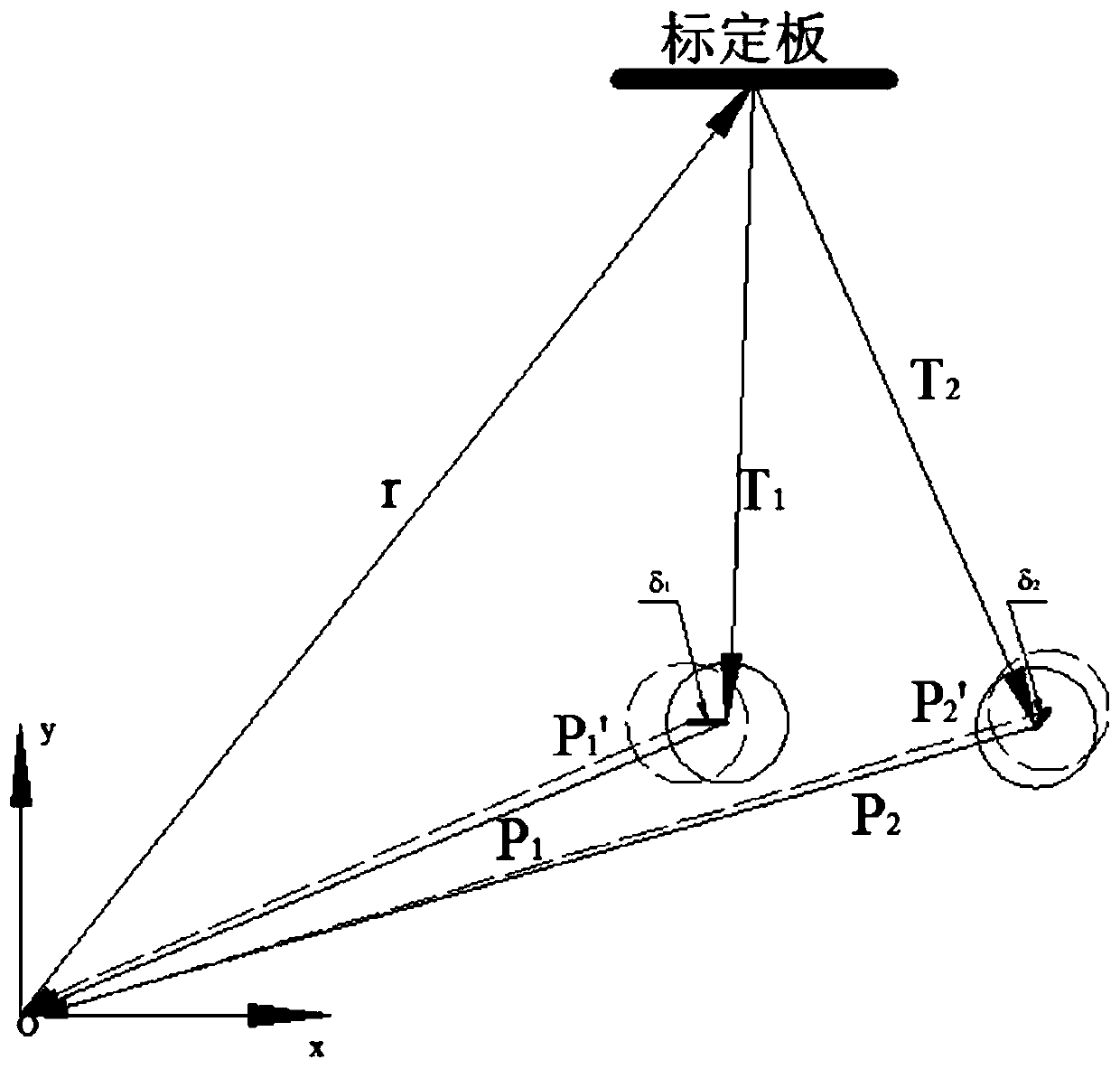

[0043] S3: When the robot approaches the calibration board, record the robot's real-time pose as reported by its current positioning algorithm, together with the relative pose between the robot and the calibration board;

[0044] In step S3, the real-time pose of the robot is obtained from a positioning algorithm.
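As a rough illustration of how the two quantities recorded in step S3 could be put to use, the sketch below derives a reference robot pose from a calibration board and compares it with the pose reported by the positioning algorithm. It rests on several assumptions that do not come from the text above: motion is planar, so a pose is a tuple (x, y, theta); the board's pose in the world frame is known in advance; and the relative pose is expressed as the board's pose in the robot frame. All function names are hypothetical.

```python
import math


def wrap(angle):
    """Wrap an angle in radians to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(a, b):
    """Chain two planar poses: b is expressed in the frame defined by a."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, wrap(at + bt))


def invert(p):
    """Inverse of a planar pose, so that compose(p, invert(p)) is the identity."""
    x, y, t = p
    c, s = math.cos(t), math.sin(t)
    return (-(c * x + s * y), s * x - c * y, wrap(-t))


def reference_pose(board_in_world, board_in_robot):
    """Robot pose in the world frame implied by one board observation (assumed frames)."""
    return compose(board_in_world, invert(board_in_robot))


def pose_error(estimated, reference):
    """Return (position error, absolute heading error) between two planar poses."""
    dx = estimated[0] - reference[0]
    dy = estimated[1] - reference[1]
    return math.hypot(dx, dy), abs(wrap(estimated[2] - reference[2]))


# Hypothetical sample: a board at (2, 0) seen 1 m straight ahead of the robot.
ref = reference_pose((2.0, 0.0, 0.0), (1.0, 0.0, 0.0))
pos_err, heading_err = pose_error((1.03, -0.04, 0.0), ref)
```

Here `pos_err` and `heading_err` summarize a single observation. An evaluation over a whole run would presumably aggregate such errors across every board the robot visits.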

[0045] The relative pose between the robot and the calibration board is obtained by a camera calibration method. The specific calculation steps are as follows:

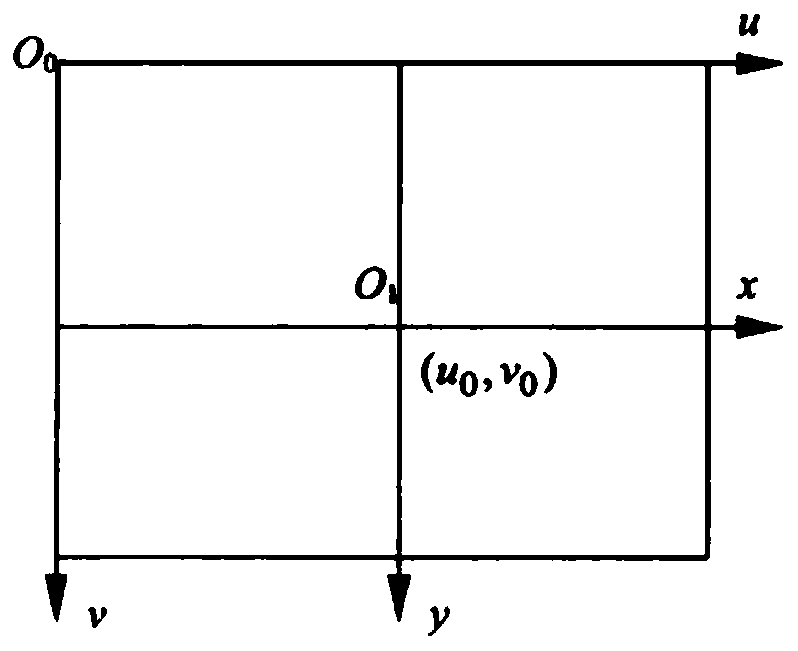

[0046] The image plane coordinate system is converted to the image pixel ...
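The paragraph above is cut off, so the patent's own formula is not available here. As a minimal sketch, the standard pinhole-camera relation between image-plane coordinates (in physical units) and pixel coordinates is u = x/dx + u0, v = y/dy + v0, which may or may not match the notation used in the original. The class and parameter names below (`PixelGrid`, `dx`, `dy`, `u0`, `v0`) are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PixelGrid:
    """Hypothetical sensor description; every field name is an assumption."""
    dx: float  # physical width of one pixel (e.g. mm per pixel)
    dy: float  # physical height of one pixel (same unit as dx)
    u0: float  # pixel column of the principal point
    v0: float  # pixel row of the principal point


def image_plane_to_pixel(x, y, grid):
    """Map image-plane coordinates (x, y) to pixel coordinates (u, v)."""
    u = x / grid.dx + grid.u0
    v = y / grid.dy + grid.v0
    return u, v


def pixel_to_image_plane(u, v, grid):
    """Inverse mapping: pixel coordinates back to image-plane coordinates."""
    x = (u - grid.u0) * grid.dx
    y = (v - grid.v0) * grid.dy
    return x, y


# Illustrative values only: 5 micrometre pixels on a 640 x 480 sensor.
grid = PixelGrid(dx=0.005, dy=0.005, u0=320.0, v0=240.0)
u, v = image_plane_to_pixel(0.5, -0.25, grid)
```

The sketch ignores lens distortion and pixel skew. A full calibration would estimate those terms together with the intrinsic parameters.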
