Unstructured light field rendering method

An unstructured light field rendering technique, applied in fields such as 3D image processing, image data processing, and instruments, addresses problems such as long processing times, the inability to render images, and a lack of realism. Its effects are improved realism, a flexible rendering algorithm, and the elimination of ghosting.

Embodiment Construction

[0024] The following examples are used to illustrate the present invention, but are not intended to limit the scope of the present invention.

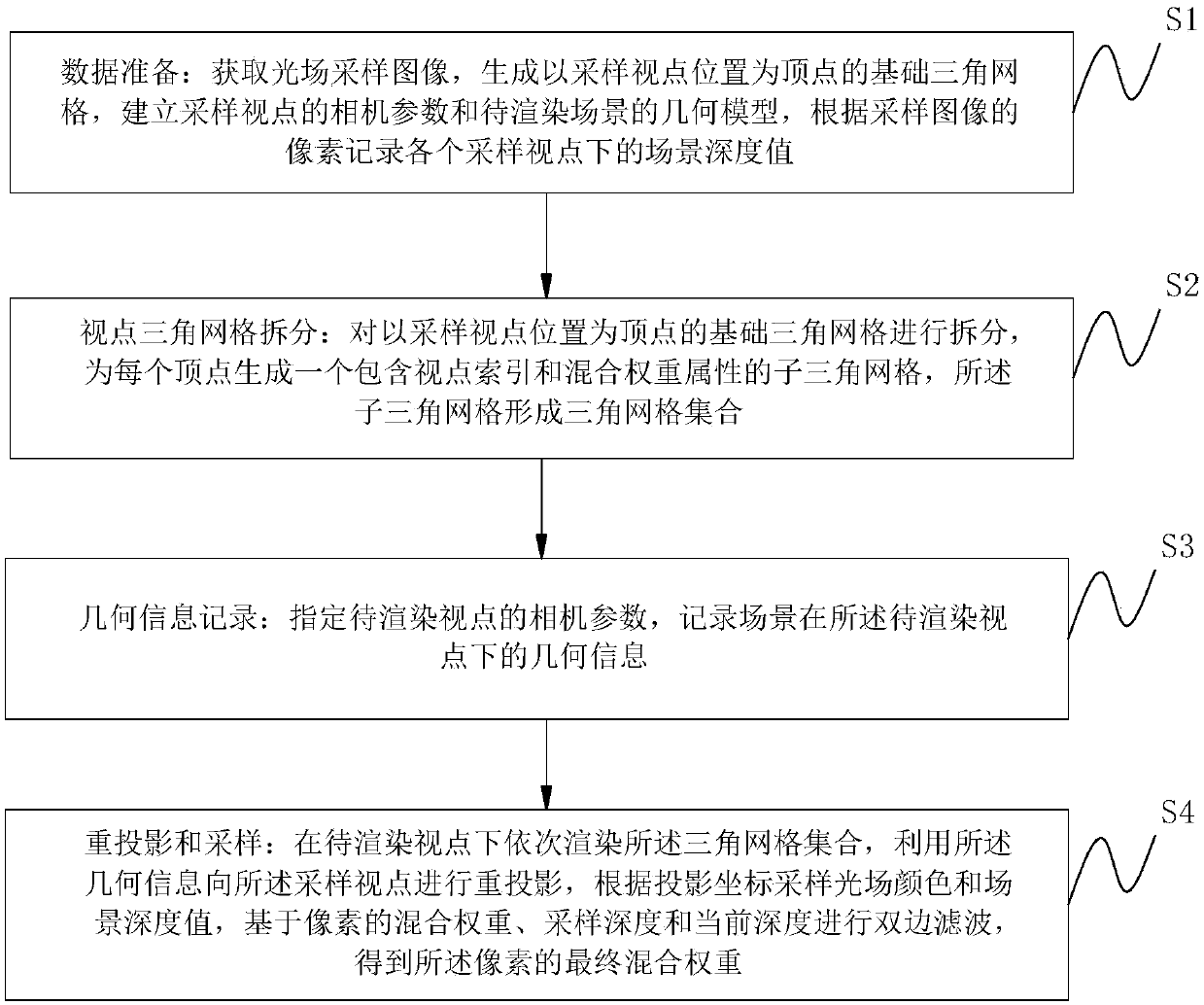

[0025] Referring to Figure 1, this embodiment provides an unstructured light field rendering method comprising the following steps:

[0026] S1: Data preparation: obtain the sampled images of the light field; generate a base triangular mesh whose vertices are the sampled viewpoint positions; establish the camera parameters of each sampled viewpoint and the geometric model of the scene to be rendered; and, for each pixel of each sampled image, record the depth value of the scene as seen from that sampled viewpoint;
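The mesh-generation part of S1 can be illustrated with a minimal sketch. The text does not say which triangulation is used, so this example assumes a Delaunay triangulation (Bowyer-Watson insertion) and assumes the sampled viewpoint positions have already been projected to 2D coordinates on a viewing plane. The names `base_mesh` and `in_circumcircle` are hypothetical and do not come from the patent.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]      # assumed 2D viewpoint position on a viewing plane
Triangle = Tuple[int, int, int]  # indices into the list of sampled viewpoints


def in_circumcircle(tri: Triangle, pts: Sequence[Point], p: Point) -> bool:
    """Return True if p lies strictly inside the circumcircle of tri."""
    (ax, ay), (bx, by), (cx, cy) = (pts[i] for i in tri)
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:  # degenerate (collinear) triangle has no circumcircle
        return False
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    r2 = (ax - ux) ** 2 + (ay - uy) ** 2
    return (p[0] - ux) ** 2 + (p[1] - uy) ** 2 < r2


def base_mesh(viewpoints: Sequence[Point]) -> List[Triangle]:
    """Triangulate distinct viewpoint positions (hypothetical helper)."""
    n = len(viewpoints)
    if n < 3:
        return []
    pts = list(viewpoints)
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    span = max(max(xs) - min(xs), max(ys) - min(ys), 1.0)
    mx, my = (max(xs) + min(xs)) / 2.0, (max(ys) + min(ys)) / 2.0
    # Temporary "super triangle" that encloses every viewpoint.
    pts += [(mx - 20 * span, my - 20 * span),
            (mx + 20 * span, my - 20 * span),
            (mx, my + 20 * span)]
    tris = {(n, n + 1, n + 2)}
    for i in range(n):
        # Triangles whose circumcircle contains the new point are invalid.
        bad = [t for t in tris if in_circumcircle(t, pts, pts[i])]
        edge_count: Dict[Tuple[int, int], int] = {}
        for a, b, c in bad:
            for e in ((a, b), (b, c), (c, a)):
                key = (min(e), max(e))
                edge_count[key] = edge_count.get(key, 0) + 1
        tris.difference_update(bad)
        # Edges used by exactly one bad triangle bound the cavity;
        # connect each of them to the new point.
        tris.update((a, b, i) for (a, b), cnt in edge_count.items() if cnt == 1)
    # Drop every triangle that still touches the super triangle.
    return sorted(tuple(sorted(t)) for t in tris if max(t) < n)
```

Calling `base_mesh` on a list of viewpoint coordinates returns index triples into that list, so each triangle vertex can later be mapped back to its sampled image, camera parameters, and depth map. The sketch assumes distinct, non-degenerate input points and makes no attempt at numerical robustness.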

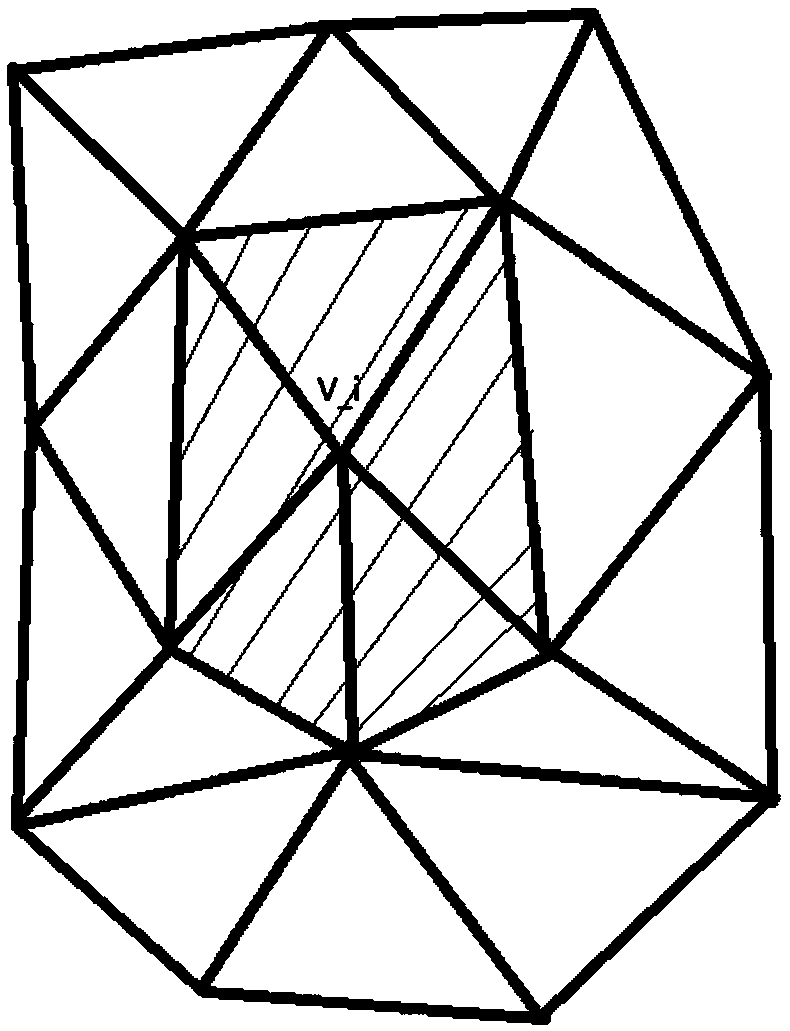

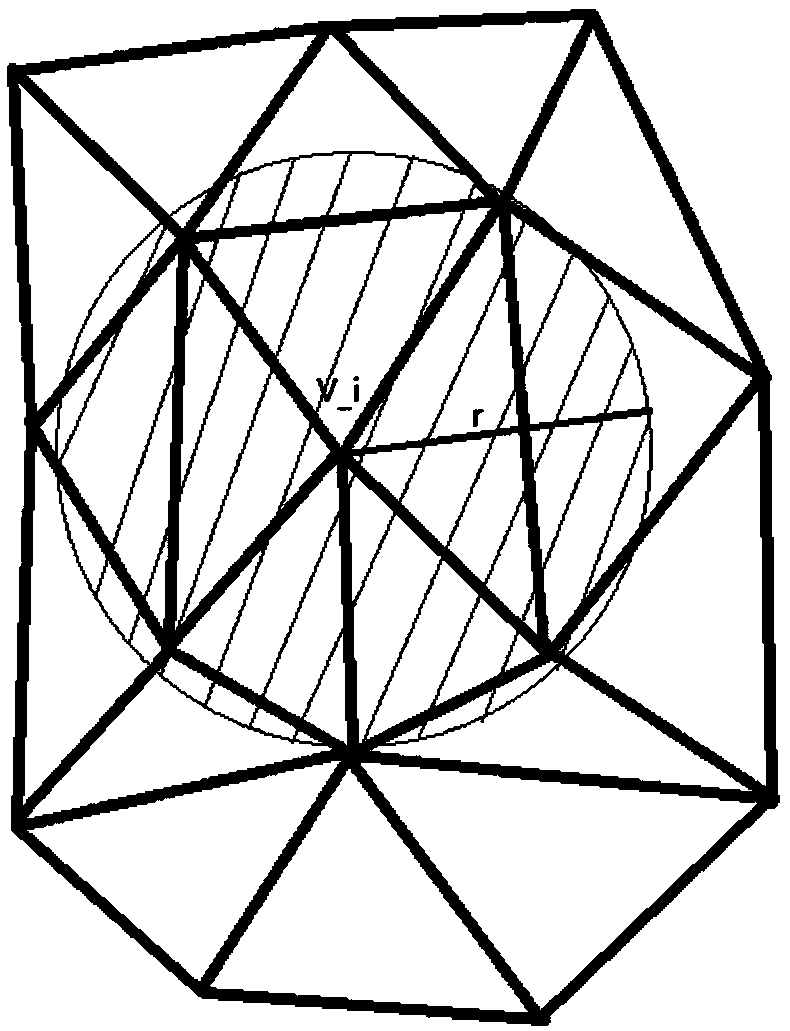

[0027] S2: Viewpoint triangular mesh splitting: split the base triangular mesh whose vertices are the sampled viewpoint positions, generating sub-triangular meshes in which each vertex carries a viewpoint index and a blend weight attribute; together, the sub-triangular meshes form a set of triangular meshes;
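As a rough illustration of S2, the sketch below shows one way a base triangle could be split into sub-triangles that carry a viewpoint index and per-vertex blending (mixed) weights. The visible text does not give the exact splitting rule, so this example assumes a common camera-mesh blending scheme: each base triangle yields three copies, one per corner viewpoint, with weight 1 at that corner and 0 at the other two. The names `SubTriangle`, `split_mesh`, and `blend_weight` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class SubTriangle:
    vertices: Tuple[int, int, int]       # base-mesh vertex (viewpoint) indices
    view_index: int                      # sampled viewpoint this copy draws from
    weights: Tuple[float, float, float]  # blend weight at each of the 3 vertices


def split_mesh(triangles: Sequence[Tuple[int, int, int]]) -> List[SubTriangle]:
    """Assumed scheme: one copy of each triangle per corner viewpoint."""
    subs: List[SubTriangle] = []
    for tri in triangles:
        for corner, view in enumerate(tri):
            # Weight is 1 at the corner owned by this viewpoint, 0 elsewhere.
            weights = tuple(1.0 if k == corner else 0.0 for k in range(3))
            subs.append(SubTriangle(tuple(tri), view, weights))
    return subs


def blend_weight(sub: SubTriangle, bary: Tuple[float, float, float]) -> float:
    """Linearly interpolate the per-vertex weights at a barycentric position."""
    return sum(w * b for w, b in zip(sub.weights, bary))
```

Under this assumption, the interpolated weights of the three copies sum to 1 at every point inside the base triangle, so blending the three sampled images there amounts to barycentric interpolation between the corner viewpoints.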

[0028] S3: Geometry...
