Face detection model training method, face key point detection method and device

A face detection and face key point detection technology applied in the field of data processing. It addresses problems such as inaccurate face key point detection, and achieves the effects of enriching application scenarios, improving performance, and improving accuracy.


Examples

Embodiment 1

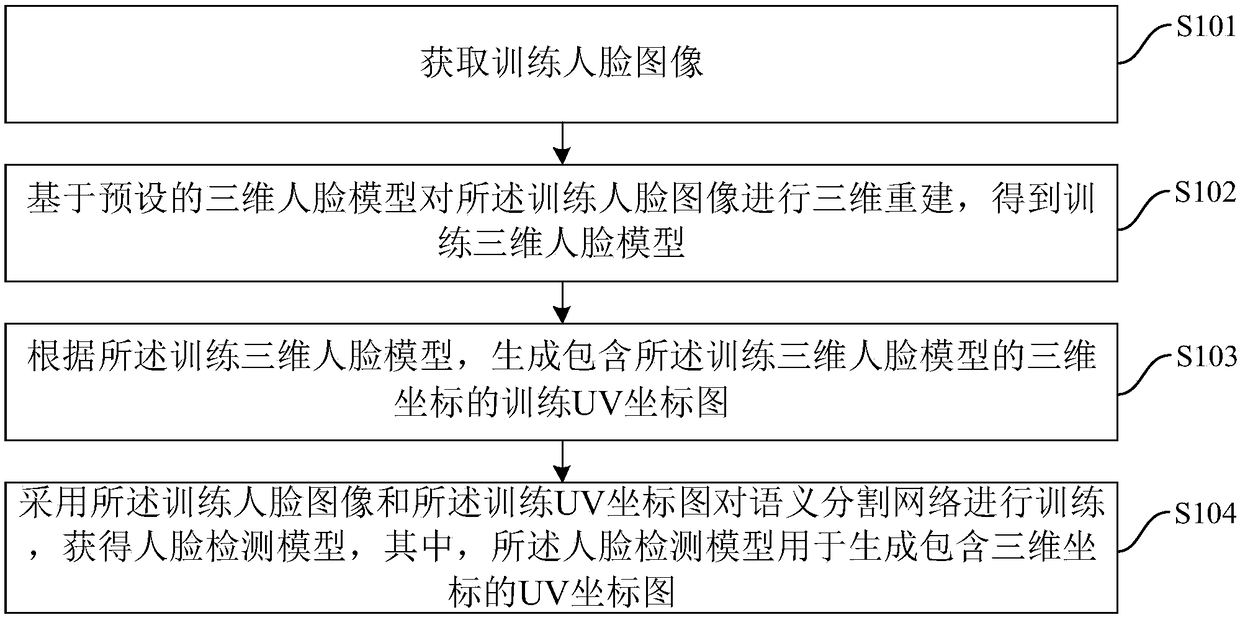

[0045] Figure 1 is a flowchart of a training method for a face detection model provided by Embodiment 1 of the present invention. This embodiment is applicable to the situation where a face detection model is trained to generate a UV coordinate map containing three-dimensional coordinates. The method may be performed by a training device for the face detection model; the device can be implemented in software and/or hardware and is integrated in the equipment that carries out the method. Specifically, as shown in Figure 1, the method may include the following steps:

[0046] S101. Acquire a training face image.

[0047] Specifically, the training face image may be a two-dimensional image containing a human face, stored in a format such as BMP, JPG, PNG, or TIF. BMP (Bitmap) is a standard image file format in the Windows operating system and adopts a bitmap storage format. The image depth of BMP file...
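As an illustration of the formats listed for step S101, the following is a minimal sketch of how a training pipeline might check that a file is one of BMP, JPG, PNG, or TIF before loading it. It is not taken from the patent: the function names `sniff_image_format` and `is_supported_training_image` are hypothetical, and the check relies only on the well-known leading signature bytes of each format.

```python
from typing import Optional

# Leading signature ("magic") bytes of the formats named in the text.
_SIGNATURES = (
    (b"BM", "BMP"),
    (b"\xff\xd8\xff", "JPG"),
    (b"\x89PNG\r\n\x1a\n", "PNG"),
    (b"II*\x00", "TIF"),  # little-endian TIFF
    (b"MM\x00*", "TIF"),  # big-endian TIFF
)


def sniff_image_format(header: bytes) -> Optional[str]:
    """Return the format name for the given leading bytes, or None if unknown."""
    for magic, name in _SIGNATURES:
        if header.startswith(magic):
            return name
    return None


def is_supported_training_image(path: str) -> bool:
    """Hypothetical helper: True if the file starts with a known signature."""
    with open(path, "rb") as f:
        return sniff_image_format(f.read(8)) is not None
```

A signature check of this kind only confirms the container format; whether the image actually contains a face is outside its scope.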

Embodiment 2

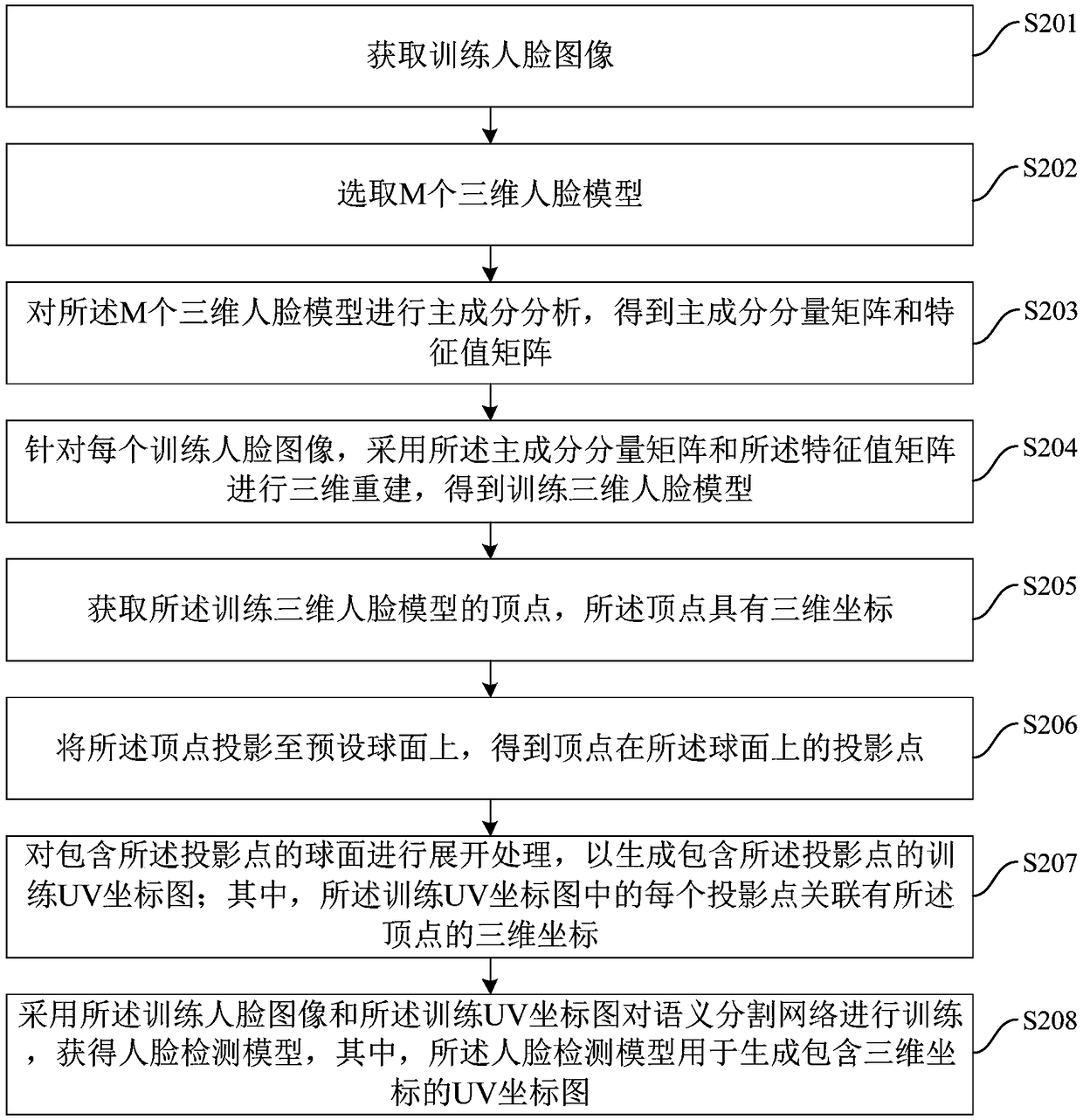

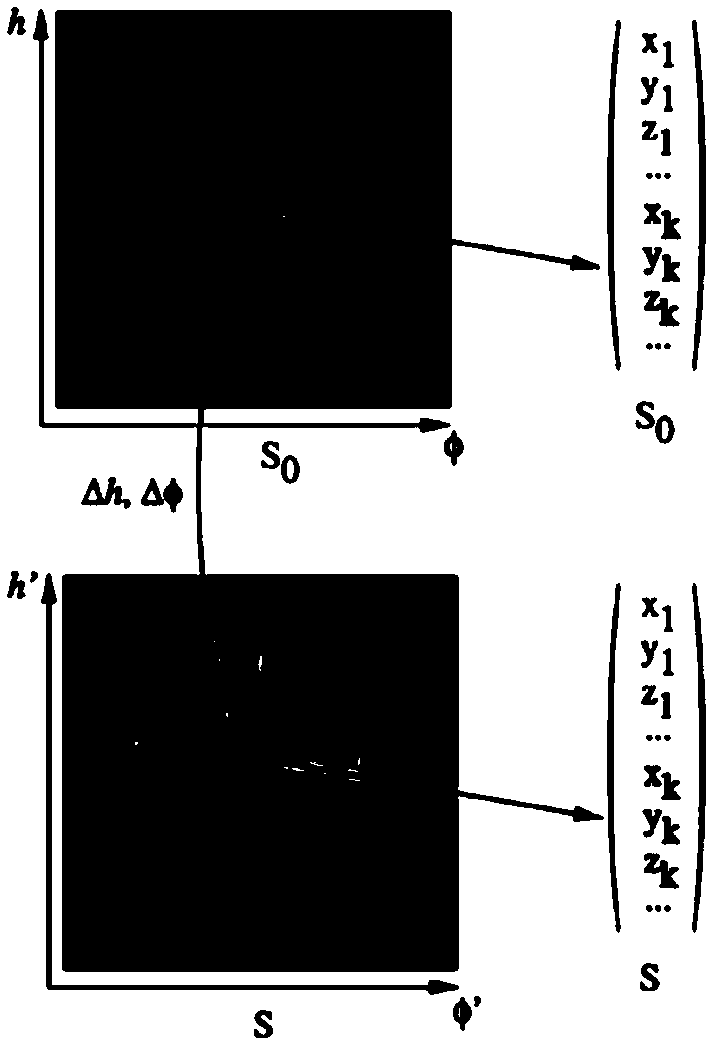

[0060] Figure 2A is a flowchart of a face detection model training method provided by Embodiment 2 of the present invention. On the basis of Embodiment 1, this embodiment optimizes the three-dimensional reconstruction and the generation of the training UV coordinate map. Specifically, as shown in Figure 2A, the method may include the following steps:

[0061] S201. Acquire a training face image.

[0062] S202. Select M three-dimensional face models.

[0063] In this embodiment of the present invention, M three-dimensional face models can be selected from a preset three-dimensional face model library. The selected models can be preprocessed, and the preprocessed models can then be aligned using the optical flow method to obtain aligned three-dimensional face models.
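The selection and preprocessing in step S202 can be sketched roughly as follows. This is only an illustration under stated assumptions: the library is modeled as a dictionary of vertex lists, the names `select_models` and `center_and_scale` are hypothetical, and the preprocessing shown (centering and scaling) is a simple stand-in chosen for the example. The optical flow alignment named in the text is not implemented here.

```python
import random
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Mesh = List[Vertex]


def select_models(library: Dict[str, Mesh], m: int, seed: int = 0) -> List[str]:
    """Pick M model ids from the library without replacement (assumed strategy)."""
    if m > len(library):
        raise ValueError("M exceeds the number of models in the library")
    rng = random.Random(seed)
    return rng.sample(sorted(library), m)


def center_and_scale(mesh: Mesh) -> Mesh:
    """Stand-in preprocessing: move the centroid to the origin and scale the
    mesh so that its farthest vertex lies at distance 1 from the origin."""
    n = len(mesh)
    cx = sum(v[0] for v in mesh) / n
    cy = sum(v[1] for v in mesh) / n
    cz = sum(v[2] for v in mesh) / n
    centered = [(x - cx, y - cy, z - cz) for x, y, z in mesh]
    radius = max((x * x + y * y + z * z) ** 0.5 for x, y, z in centered)
    if radius == 0.0:
        return centered  # degenerate mesh: all vertices coincide
    return [(x / radius, y / radius, z / radius) for x, y, z in centered]


# Toy library with three tiny "meshes" (illustrative data only).
library = {
    "a": [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
    "b": [(1.0, 1.0, 1.0), (1.0, 3.0, 1.0)],
    "c": [(0.0, 0.0, 5.0), (0.0, 0.0, 9.0)],
}
chosen = select_models(library, m=2)
prepared = {name: center_and_scale(library[name]) for name in chosen}
```

Normalizing position and scale first puts the selected models in a common frame, which is one plausible precondition for a later dense alignment step.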

[0064] The 3D face model is generated by 3D scanning. Different scanners have different imaging principles. There may be miss...

Embodiment 3

[0119] Figure 3A is a flowchart of a method for detecting key points of a human face provided by Embodiment 3 of the present invention. This embodiment is applicable to the situation of detecting face key points from a face image. The method may be performed by a face key point detection device; the device can be implemented in software and/or hardware and is integrated in the equipment that carries out the method. Specifically, as shown in Figure 3A, the method may include the following steps:

[0120] S301. Acquire a target face image.

[0121] In this embodiment of the present invention, the target face image may be a face image to which video special effects are to be added. For example, during live video streaming or short video recording, when the user selects an operation that adds a video special effect, such as colored contact lenses, stickers, or face-lifting, the live video app detects the user's operation and intercepts a frame from the video frames collected ...
