Hand-drawn sketch retrieval method based on deformable convolution and deep network

A hand-drawn sketch retrieval technique based on a deep network, applied in the fields of computer vision and deep learning. It addresses problems such as redundancy, and its effects are reduced interference, improved retrieval accuracy, and retained feature expression ability.

Detailed Description of the Embodiments

[0032] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments:

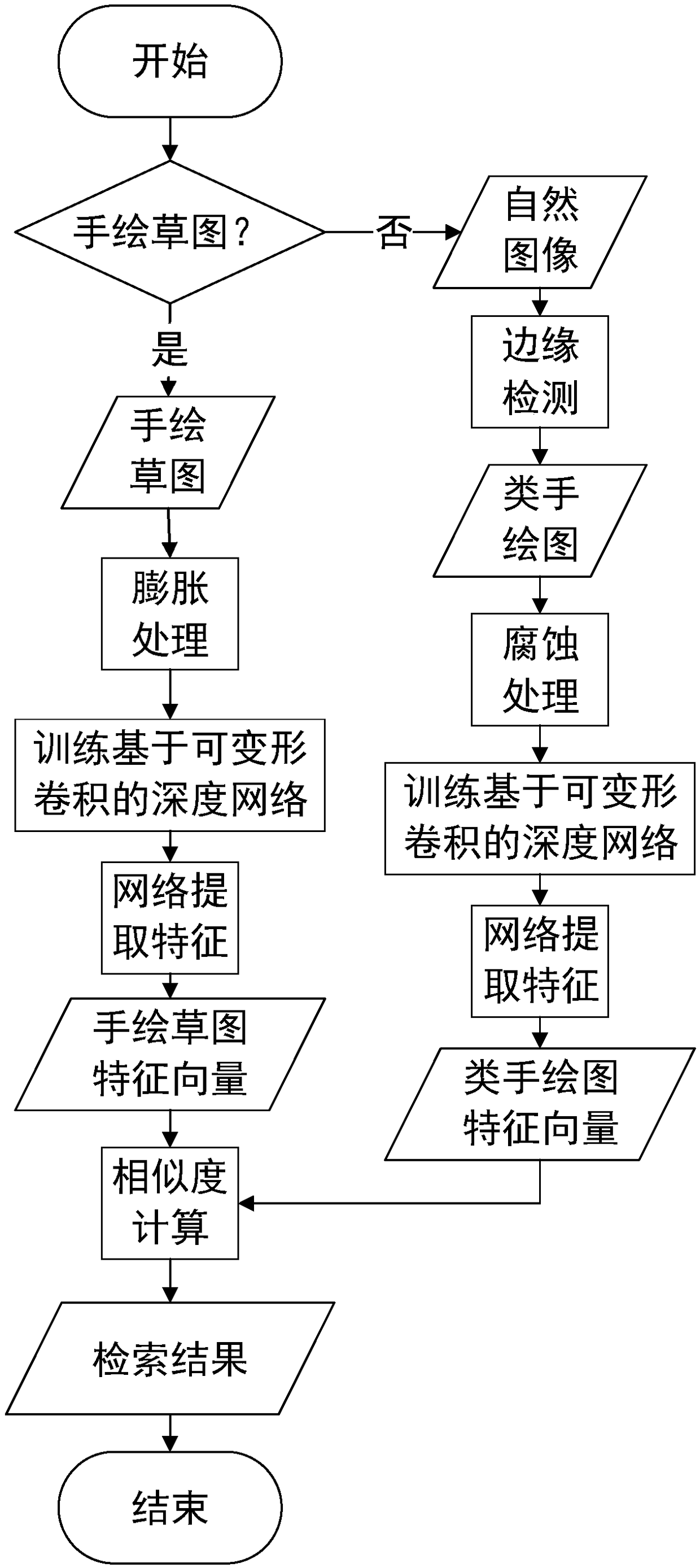

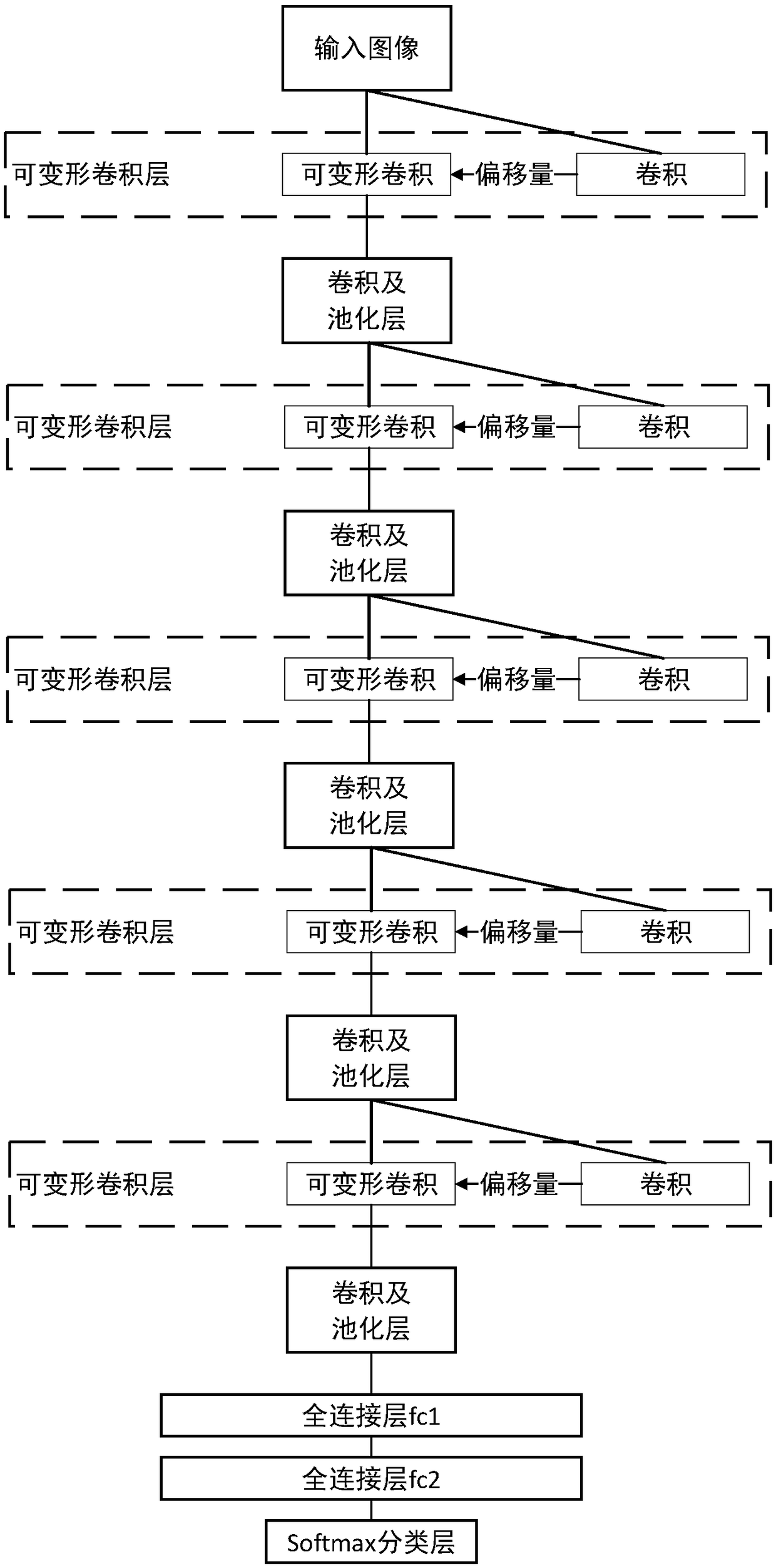

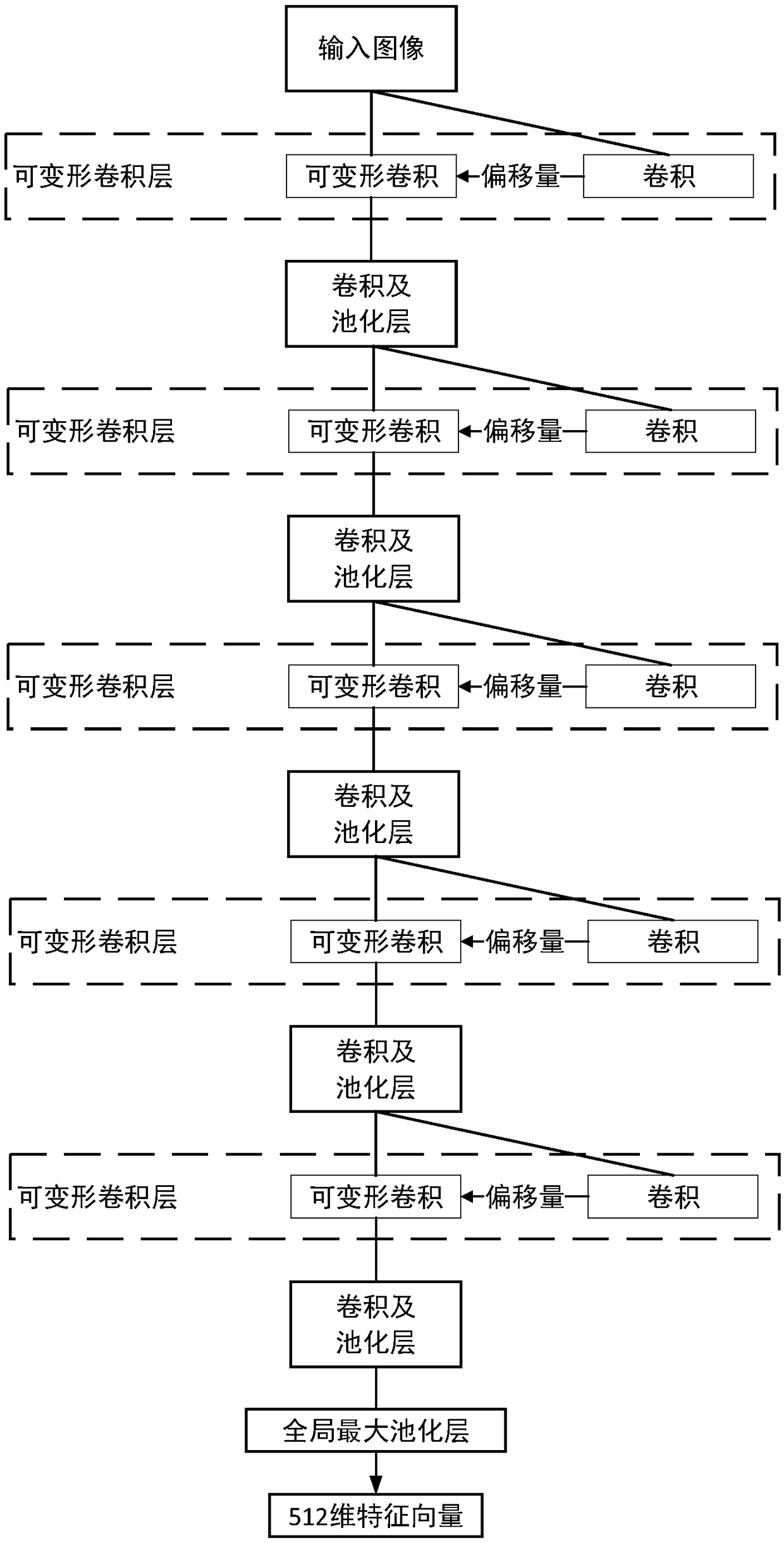

[0033] As shown in Figure 1, Figure 2, and Figure 3, the hand-drawn sketch retrieval method based on deformable convolution and deep network includes the following steps:

[0034] s1. Obtain the hand-drawn image to be retrieved and the natural images in the database

[0035] The method of the present invention is applicable to any natural image library and any hand-drawn image data set. The training data used in the present invention comes from the public Flickr15k image data set, because this data set is widely recognized in the field and contains a large number of hand-drawn images and natural images.

[0036] s2. Apply an edge detection algorithm to each natural image to obtain a sketch-like image, that is, the edge map
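Step s2 can be illustrated with a minimal sketch. The excerpt does not name the edge detection algorithm, so the Sobel operator used here is an assumption, and the function name `sobel_edge_map` and the `threshold` parameter are hypothetical names introduced only for illustration. The sketch converts a grayscale natural image into a binary edge map that resembles a line drawing.

```python
import numpy as np


def sobel_edge_map(gray, threshold=0.25):
    """Return a binary edge map (1 = edge pixel) for a 2-D grayscale array.

    Assumption: a Sobel gradient magnitude with a relative threshold stands
    in for the unspecified edge detector of step s2.
    """
    gray = np.asarray(gray, dtype=np.float64)
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
    ky = kx.T  # vertical-gradient kernel

    # Replicate border pixels so the output keeps the input size.
    padded = np.pad(gray, 1, mode="edge")
    h, w = gray.shape
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))

    # Accumulate the 3x3 cross-correlation one kernel tap at a time.
    for i in range(3):
        for j in range(3):
            window = padded[i:i + h, j:j + w]
            gx += kx[i, j] * window
            gy += ky[i, j] * window

    magnitude = np.hypot(gx, gy)
    peak = magnitude.max()
    if peak == 0:
        # A perfectly flat image has no edges.
        return np.zeros((h, w), dtype=np.uint8)
    return (magnitude / peak >= threshold).astype(np.uint8)


# Toy "natural image": dark left half, bright right half.
image = np.zeros((8, 8))
image[:, 4:] = 1.0
edges = sobel_edge_map(image)
```

On this toy input, only the two pixel columns on either side of the brightness step are marked as edges. A production pipeline would more likely rely on an established detector from an image processing library; the sketch only shows how a natural image is reduced to a sketch-like map before it is compared with hand-drawn queries.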

[0037] s3. Preprocessing the hand-drawn sket...
