Target recognition and grasp positioning method based on deep learning

A deep-learning-based target recognition technique, applied in the field of machine vision, that addresses problems of traditional vision algorithms such as poor generalization and poor robustness.

Embodiment Construction

[0056] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

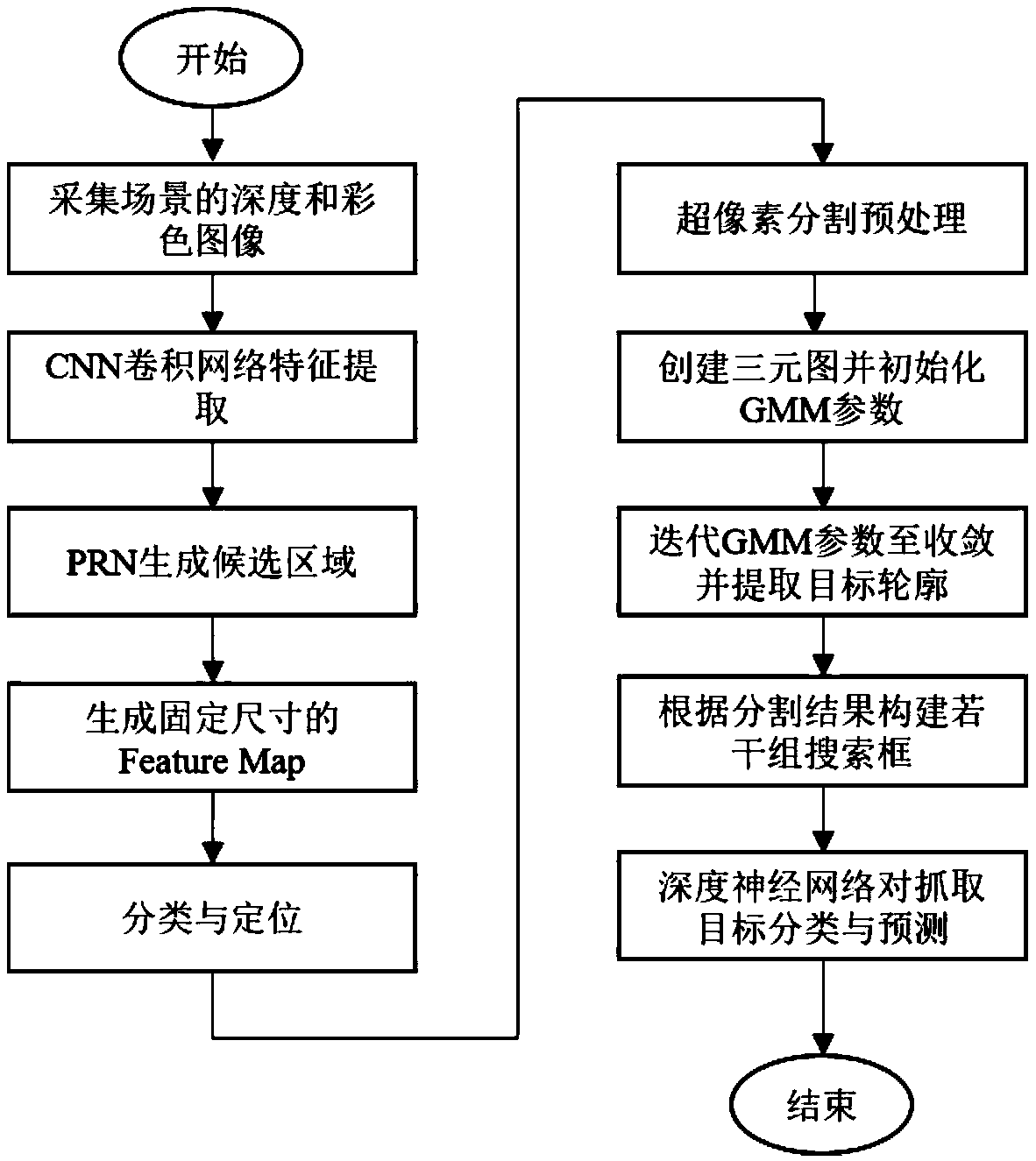

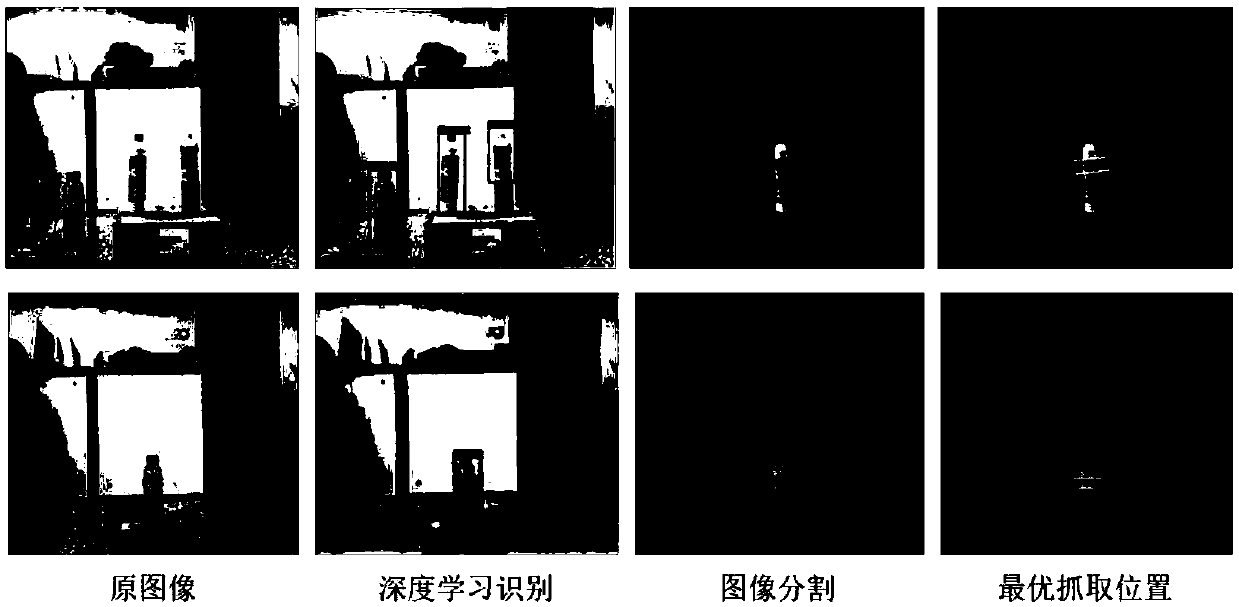

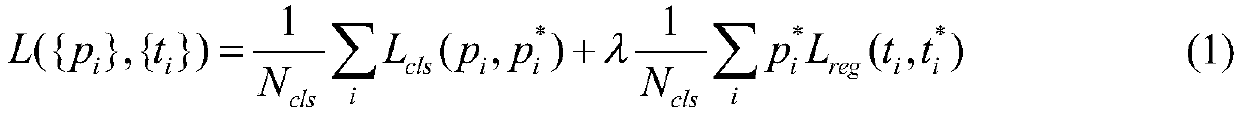

[0057] To address the problems of the traditional vision algorithms mentioned above, a target recognition and grasp positioning method based on deep learning is proposed. First, a Kinect camera collects depth and color images of the scene, and the Faster R-CNN deep learning algorithm identifies the targets in the scene. The region of the target to be grasped is selected according to the recognized category and used as the input to the GrabCut image segmentation algorithm, which extracts the outline of the target and thereby yields the specific position of the target. This position information is then used as the input to a cascaded neural network that detects the optimal grasping position, finally giving the grasping position and posture of the manipulator. The overall flow of the method is shown in Figure 1, the specific im...
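The data flow described in [0057] can be sketched as a small Python skeleton. This is only an illustration of how the stages hand results to one another, not the patented implementation: the names `Detection`, `Grasp`, `mask_centroid`, and `locate_grasp` are hypothetical, the three stages (detector, segmenter, grasp network) are passed in as plain callables rather than real Faster R-CNN, GrabCut, or cascaded-network code, and two choices are assumptions made for the sketch: picking the highest-scoring detection of the wanted category, and using the mask centroid as the target position.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]   # (x, y, width, height) in pixels
Mask = List[List[int]]            # 1 = target pixel, 0 = background


@dataclass
class Detection:                  # hypothetical container for one detector output
    label: str                    # recognized category
    score: float                  # detector confidence
    box: Box                      # region containing the target


@dataclass
class Grasp:                      # hypothetical container for the final result
    x: float                      # grasp centre, image column
    y: float                      # grasp centre, image row
    angle: float                  # gripper rotation in radians


def mask_centroid(mask: Mask) -> Tuple[float, float]:
    """Mean (x, y) of foreground pixels; an assumed stand-in for 'target position'."""
    pts = [(c, r) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pts:
        raise ValueError("empty segmentation mask")
    n = len(pts)
    return sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n


def locate_grasp(
    color,
    depth,
    wanted_label: str,
    detect: Callable[[object], List[Detection]],
    segment: Callable[[object, Box], Mask],
    plan_grasp: Callable[[object, object, Mask, Tuple[float, float]], Grasp],
) -> Optional[Grasp]:
    # Stage 1: recognize targets in the color image (Faster R-CNN in the source).
    candidates = [d for d in detect(color) if d.label == wanted_label]
    if not candidates:
        return None
    # Assumption: take the most confident detection of the wanted category.
    best = max(candidates, key=lambda d: d.score)

    # Stage 2: segment the target inside the detected box (GrabCut in the source).
    mask = segment(color, best.box)

    # Stage 3: derive a target position from the segmented outline.
    position = mask_centroid(mask)

    # Stage 4: hand the position to the grasp detector (cascaded network in the source).
    return plan_grasp(color, depth, mask, position)
```

Passing the stages in as callables keeps the sketch independent of any particular library; a real system would supply wrappers around its own detector, segmentation routine, and grasp network, and `locate_grasp` returns `None` when no target of the requested category is found.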
