Mobile robot image visual positioning method in dynamic environment

The invention relates to mobile robots and image vision technology and is applied in the field of visual positioning and navigation. It addresses problems such as poor algorithm robustness, and achieves the effects of correcting positioning errors, overcoming complexity, and producing accurate motion trajectories.


Embodiment Construction

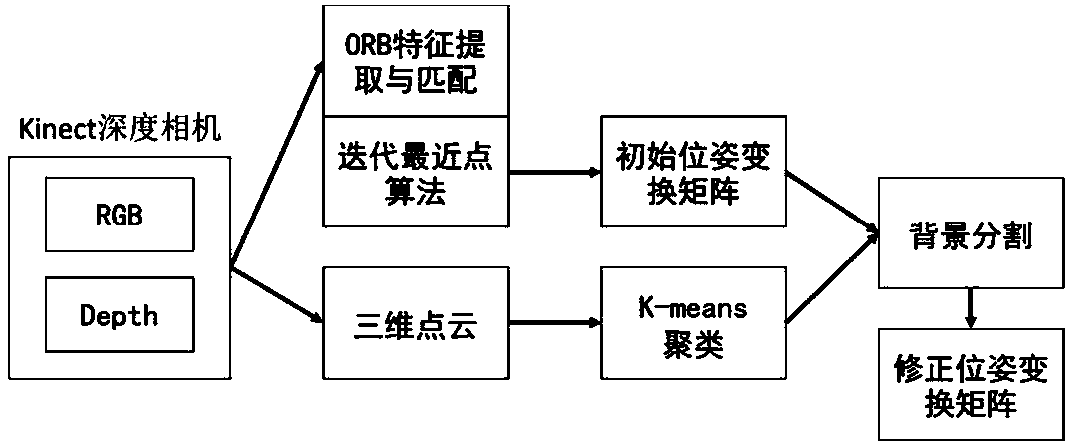

[0036] The technical solution of the present invention will be described in further detail below in conjunction with the accompanying drawings.

[0037] Step 1: a Kinect depth camera is fixedly mounted on the front of the wheeled mobile robot. The camera captures each frame of the environment in which the robot is located, and each frame comprises an RGB color image and a depth image.

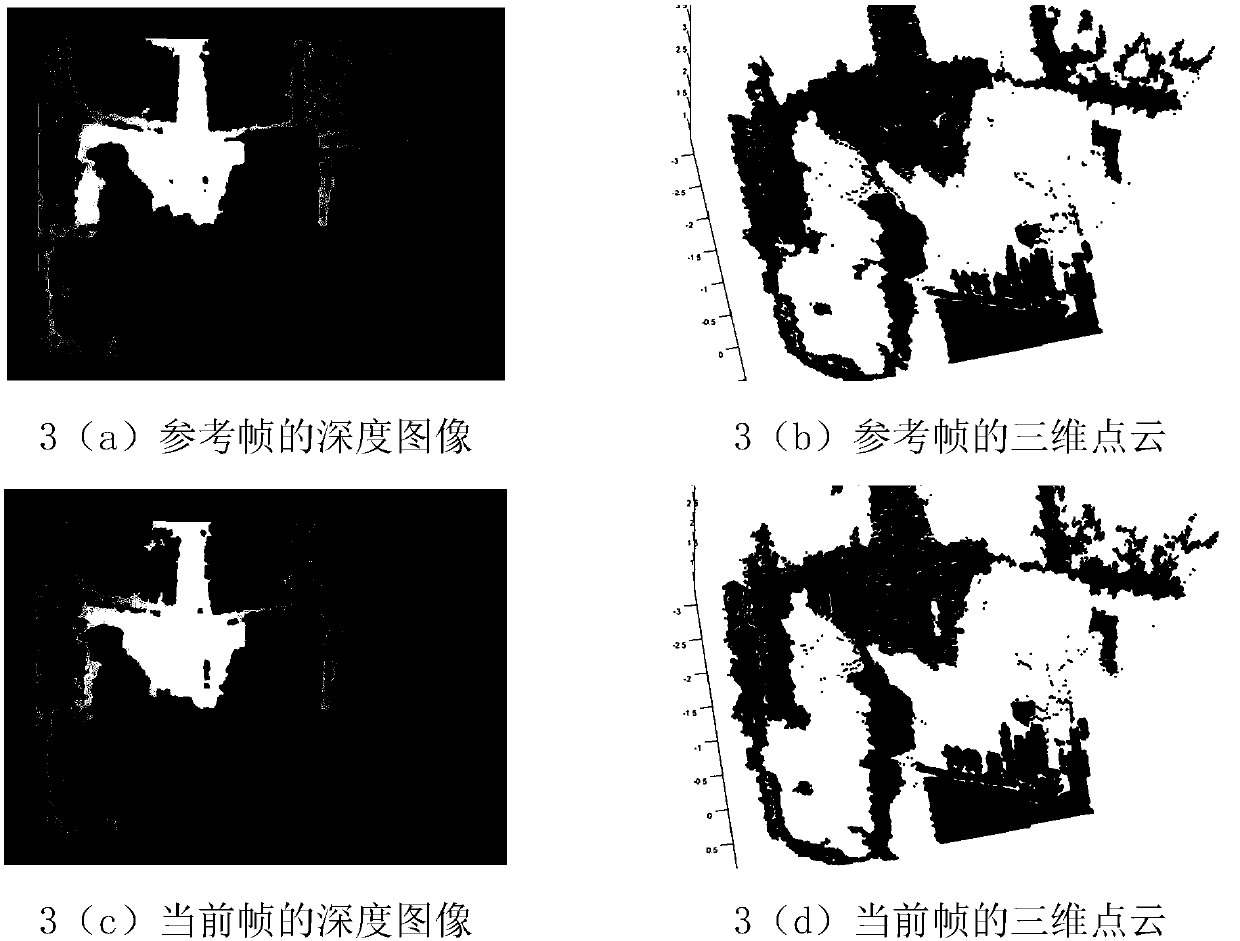

[0038] Step 2: according to the pinhole camera model, the depth image of each of two adjacent frames (the previous frame and the current frame) is processed to obtain, for each depth image, a three-dimensional point cloud containing the environmental information;

[0039] As shown in Figure 2, after a spatial point is imaged by the camera, its pixel has coordinates [u v] in the image coordinate system, and the point has coordinates [x y z] in the world coordinate system, and the world coordinate system is the reference frame coordinate system or the curre...
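The back-projection that Step 2 relies on can be illustrated with a minimal Python sketch. The text available here does not give the camera parameters or the exact formula, so everything below is an assumption made for illustration: the function name `depth_to_point_cloud`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the `depth_scale` factor (raw depth units per meter) are hypothetical. The sketch uses the standard pinhole relations u = fx·x/z + cx and v = fy·y/z + cy, solved for [x y z], and returns points expressed in the frame of the camera that captured the image.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_point_cloud(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    depth_scale: float = 1000.0,  # assumed: raw depth units per meter
) -> List[Point]:
    """Back-project a depth image (rows of raw depth values) to 3-D points.

    Standard pinhole model: u = fx * x / z + cx, v = fy * y / z + cy.
    Pixels with a non-positive depth reading are skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):      # v: pixel row index
        for u, d in enumerate(row):      # u: pixel column index
            if d <= 0:                   # no valid depth for this pixel
                continue
            z = d / depth_scale          # depth in meters
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Calling the function once on the previous frame's depth image and once on the current frame's yields the two point clouds that Step 2 describes. Real intrinsics would come from calibrating the specific camera.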
