Mobile multimodal interaction method and device based on augmented reality

An augmented reality interaction technology, applied in the field of human-computer interaction, that addresses poor user experience, the lack of natural, intuitive and efficient interaction methods, and the lack of portability and mobility, and achieves efficient interaction.


Embodiment

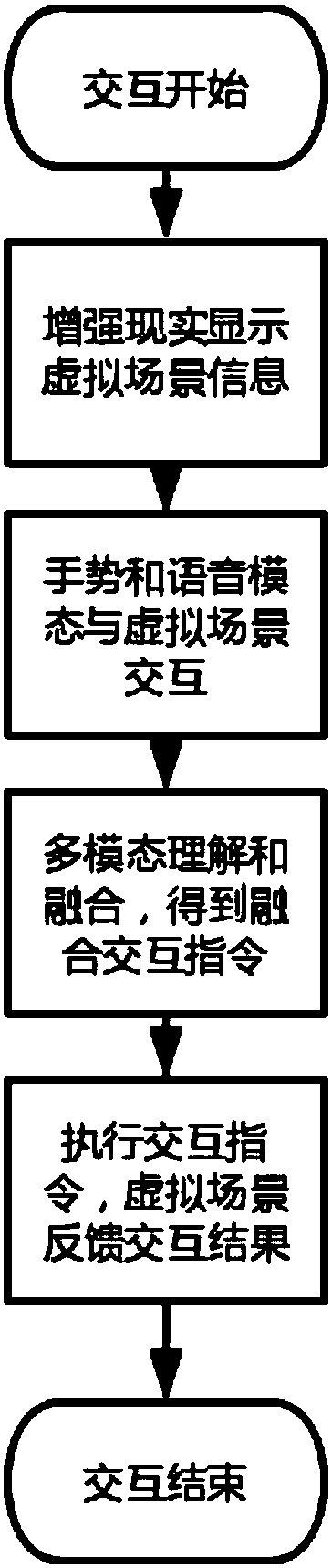

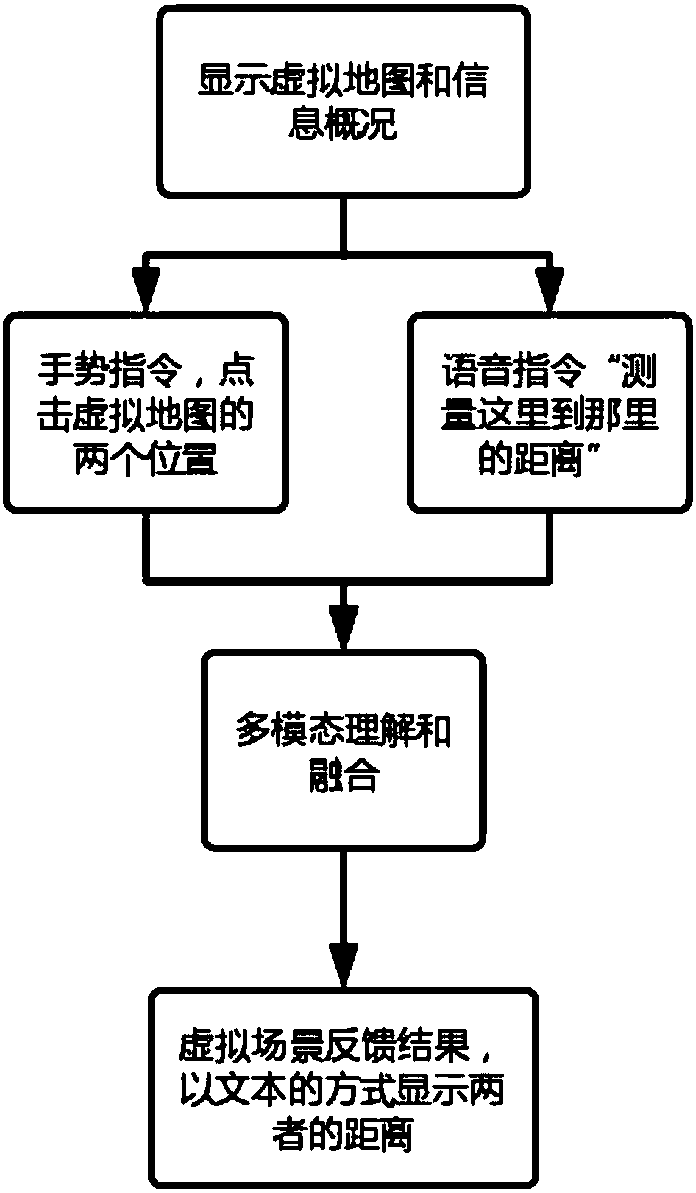

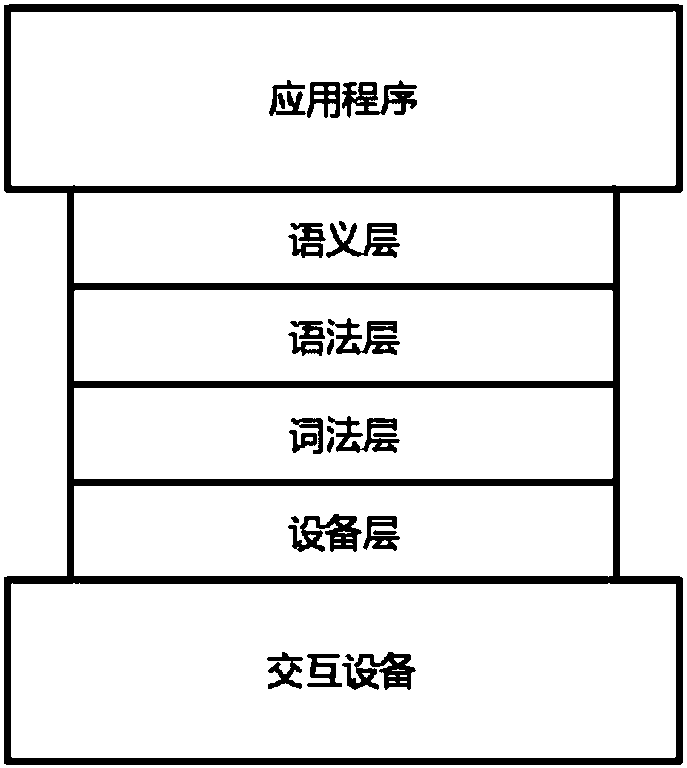

[0051] This embodiment provides a mobile multimodal interaction method and device based on augmented reality, realizing a natural and intuitive human-computer interaction mode with a low learning load, high interaction efficiency, portability and mobility. The human-computer interaction interface is displayed through augmented reality, and the augmented reality virtual scene includes interactive information such as virtual objects. Users issue interaction instructions through gestures and voice; a multimodal fusion method interprets the semantics of each modality and fuses the gesture and voice data to generate a multimodal fusion interaction instruction. After the user's interaction instruction is applied, the result is returned to the augmented reality virtual scene, and information is fed back to the user through changes in the scene.
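The flow described in [0051] (gesture and voice input, fusion into a single instruction, result fed back through the scene) can be illustrated with a minimal sketch. The text does not specify the fusion algorithm, so the example below assumes a simple time-window rule: a spoken action with no explicit target is bound to the object selected by a gesture that occurred close to it in time. All names (`GestureEvent`, `VoiceEvent`, `Instruction`, `fuse`, `apply_instruction`), the action labels and the 1.5 second window are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class GestureEvent:
    kind: str          # hypothetical label, e.g. "point" or "grab"
    target_id: str     # virtual object the gesture refers to
    timestamp: float   # seconds


@dataclass
class VoiceEvent:
    action: str                 # hypothetical label, e.g. "delete"
    target_id: Optional[str]    # None when the utterance says "this"/"that"
    timestamp: float            # seconds


@dataclass
class Instruction:
    action: str
    target_id: str


def fuse(gesture: Optional[GestureEvent],
         voice: VoiceEvent,
         window: float = 1.5) -> Optional[Instruction]:
    """Combine one gesture and one utterance into a single instruction.

    Assumed rule (not from the source): voice supplies the action; the
    target comes from voice if named, otherwise from a gesture that
    occurred within `window` seconds of the utterance.
    """
    if voice.target_id is not None:
        return Instruction(voice.action, voice.target_id)
    if gesture is None or abs(gesture.timestamp - voice.timestamp) > window:
        return None  # no usable target, so no instruction is produced
    return Instruction(voice.action, gesture.target_id)


def apply_instruction(scene: Dict[str, dict], instr: Instruction) -> Dict[str, dict]:
    """Return an updated copy of the scene; the change is the feedback."""
    updated = {obj_id: dict(state) for obj_id, state in scene.items()}
    if instr.target_id not in updated:
        return updated
    if instr.action == "delete":
        del updated[instr.target_id]
    elif instr.action == "highlight":
        updated[instr.target_id]["highlighted"] = True
    return updated


if __name__ == "__main__":
    scene = {"cube_1": {"highlighted": False}, "ball_2": {"highlighted": False}}
    gesture = GestureEvent("point", "cube_1", timestamp=10.0)
    voice = VoiceEvent("delete", None, timestamp=10.4)  # "delete this"
    instr = fuse(gesture, voice)
    if instr is not None:
        scene = apply_instruction(scene, instr)
    print(scene)
```

In this sketch the two modalities are complementary: the gesture resolves which object is meant and the utterance resolves what to do with it. A real system would likely work on recognizer outputs with confidence scores rather than clean labels.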

[0052] As shown in Figure 7, an augmented reality-based mobile multimodal interac...
