Deep learning-based complex road condition perception system and apparatus

A deep learning-based technology for perceiving complex road conditions, applied in fields such as instruments, character and pattern recognition, and computer components. It addresses the problems that existing approaches lack practical value, that features cannot be shared between different classifiers, and that detection efficiency cannot be improved.



Examples

Embodiment 1

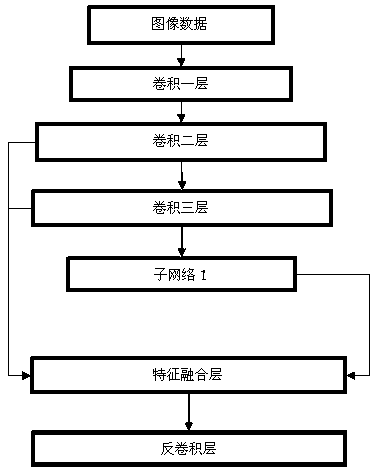

[0025] A complex road condition perception system based on deep learning. The system operates in five steps: first, image sensor data is collected; second, the image data is preprocessed, including white balance, gamma correction, and denoising; third, a first deep neural network performs feature extraction; fourth, a second deep neural network uses the extracted features for target localization; fifth, a third deep neural network performs target recognition within the localized regions to obtain the final detection result.
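The preprocessing in the second step can be illustrated with a minimal sketch. The embodiment names only the three operations, not the algorithms, so the choices here are assumptions: gray-world white balance, a power-law gamma curve with a hypothetical default of 2.2, and a 3x3 mean filter for denoising. All function names are illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[float, ...]   # (R, G, B), each channel in [0, 1]
Image = List[List[Pixel]]   # rows of pixels


def white_balance(img: Image) -> Image:
    """Gray-world assumption: scale each channel so its mean matches the overall mean."""
    n = sum(len(row) for row in img)
    means = [sum(p[c] for row in img for p in row) / n for c in range(3)]
    gray = sum(means) / 3.0
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [[tuple(min(1.0, p[c] * gains[c]) for c in range(3)) for p in row]
            for row in img]


def gamma_correct(img: Image, gamma: float = 2.2) -> Image:
    """Power-law gamma correction; gamma=2.2 is an assumed default."""
    inv = 1.0 / gamma
    return [[tuple(v ** inv for v in p) for p in row] for row in img]


def denoise(img: Image) -> Image:
    """3x3 mean filter; border pixels average over the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            nbrs = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(tuple(sum(p[c] for p in nbrs) / len(nbrs) for c in range(3)))
        out.append(row)
    return out


def preprocess(img: Image) -> Image:
    """Apply the three operations in the order the embodiment lists them."""
    return denoise(gamma_correct(white_balance(img)))
```

The preprocessed image would then be passed to the feature-extraction network of the third step. A production system would more likely run these operations in the camera's image signal processor or with an optimized image library.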

Embodiment 2

[0027] In the complex road condition perception system based on deep learning described in Embodiment 1, the third step performs feature multiplexing (feature reuse); the fourth step separates the foreground from the background; and the networks used in the third, fourth, and fifth steps are integrated into a single, deeper neural network, in which the network used in each step is treated as a sub-network of the whole.
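The integration described in this embodiment can be sketched structurally as follows. This is not the patented network: the three sub-networks are replaced by toy stand-in functions, and the names `PerceptionNet`, `toy_extract`, `toy_locate`, `toy_recognise`, and the labels `class_a` and `class_b` are hypothetical. The sketch only shows the data flow, in which features are computed once and reused by both the localization and the recognition sub-networks.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Features = List[List[float]]      # toy 2-D feature map
Box = Tuple[int, int, int, int]   # (row0, col0, row1, col1), end-exclusive


@dataclass
class PerceptionNet:
    """One network whose three stages are treated as sub-networks."""
    extract: Callable[[List[List[float]]], Features]
    locate: Callable[[Features], List[Box]]
    recognise: Callable[[Features, Box], str]

    def forward(self, image: List[List[float]]) -> List[Tuple[Box, str]]:
        feats = self.extract(image)   # step 3: features computed once
        boxes = self.locate(feats)    # step 4: foreground regions from shared features
        # Step 5: recognition reuses the same features inside each located region.
        return [(box, self.recognise(feats, box)) for box in boxes]


def toy_extract(image: List[List[float]]) -> Features:
    # Stand-in for the feature-extraction sub-network: normalise to [0, 1].
    peak = max(max(row) for row in image) or 1.0
    return [[v / peak for v in row] for row in image]


def toy_locate(feats: Features, threshold: float = 0.5) -> List[Box]:
    # Stand-in for foreground/background separation: cells above the
    # threshold are foreground; return one box enclosing all of them.
    cells = [(r, c) for r, row in enumerate(feats)
             for c, v in enumerate(row) if v > threshold]
    if not cells:
        return []
    rows = [r for r, _ in cells]
    cols = [c for _, c in cells]
    return [(min(rows), min(cols), max(rows) + 1, max(cols) + 1)]


def toy_recognise(feats: Features, box: Box) -> str:
    # Stand-in classifier: label by mean activation inside the box.
    r0, c0, r1, c1 = box
    vals = [feats[r][c] for r in range(r0, r1) for c in range(c0, c1)]
    return "class_a" if sum(vals) / len(vals) > 0.8 else "class_b"


net = PerceptionNet(toy_extract, toy_locate, toy_recognise)
```

Because `forward` passes the same `feats` object to both later stages, the feature computation is not repeated per classifier, which is the sharing that the feature multiplexing in this embodiment refers to.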

Embodiment 3

[0029] In the complex road condition perception system based on deep learning described in Embodiment 2, the sub-network is a multi-scale neural network that extracts features at different scales; a dedicated sub-network then integrates the features from the different scales to obtain the final multi-scale features.
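A minimal sketch of the multi-scale idea, under stated assumptions: average pooling stands in for feature extraction at each scale, nearest-neighbour upsampling brings every scale back to the input resolution, and a fixed weighted sum stands in for the dedicated integration sub-network. The scales `(1, 2, 4)`, the weights, and all function names are hypothetical; in the system described, both the per-scale extraction and the integration would be learned.

```python
from typing import List, Sequence

FeatureMap = List[List[float]]


def avg_pool(fmap: FeatureMap, k: int) -> FeatureMap:
    """Non-overlapping k x k average pooling (dimensions must be divisible by k)."""
    h, w = len(fmap), len(fmap[0])
    return [[sum(fmap[r + i][c + j] for i in range(k) for j in range(k)) / (k * k)
             for c in range(0, w - k + 1, k)]
            for r in range(0, h - k + 1, k)]


def upsample(fmap: FeatureMap, k: int) -> FeatureMap:
    """Nearest-neighbour upsampling by an integer factor k."""
    return [[v for v in row for _ in range(k)] for row in fmap for _ in range(k)]


def multi_scale_features(fmap: FeatureMap, scales: Sequence[int] = (1, 2, 4)) -> List[FeatureMap]:
    """One feature map per scale, each restored to the input resolution."""
    return [upsample(avg_pool(fmap, k), k) for k in scales]


def fuse(maps: List[FeatureMap], weights: Sequence[float]) -> FeatureMap:
    """Stand-in for the integration sub-network: per-position weighted sum across scales."""
    h, w = len(maps[0]), len(maps[0][0])
    return [[sum(wt * m[r][c] for wt, m in zip(weights, maps)) for c in range(w)]
            for r in range(h)]
```

For a 4x4 input, `fuse(multi_scale_features(fmap), (0.5, 0.25, 0.25))` returns a 4x4 map in which each position combines fine detail (scale 1) with progressively coarser context (scales 2 and 4).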

