Buffer method and system for multilevel pipeline parallel computing

A parallel computing and buffering technology, applied in the field of data processing, that addresses the problems of wasted time and power consumption and achieves data transmission that is simple, efficient, and less error-prone.

Embodiment Construction

[0044] The embodiments of the present invention are described in detail below, and examples of them are illustrated in the accompanying drawings, in which the same or similar reference numerals denote the same or similar elements, or elements having the same or similar functions, throughout. The embodiments described below with reference to the accompanying drawings are exemplary; they serve only to explain the present invention and are not to be construed as limiting it.

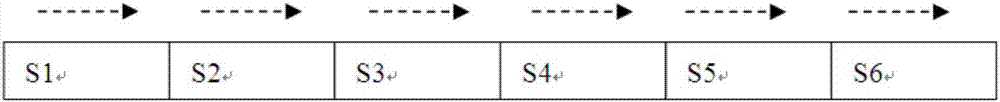

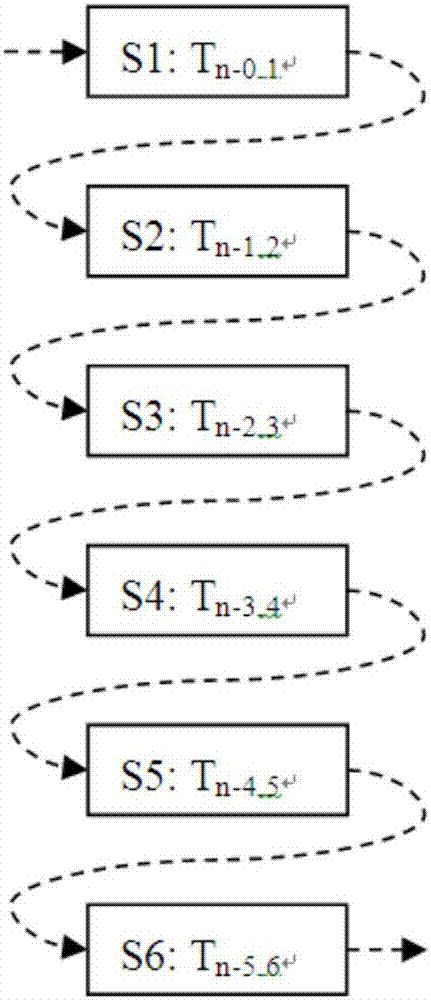

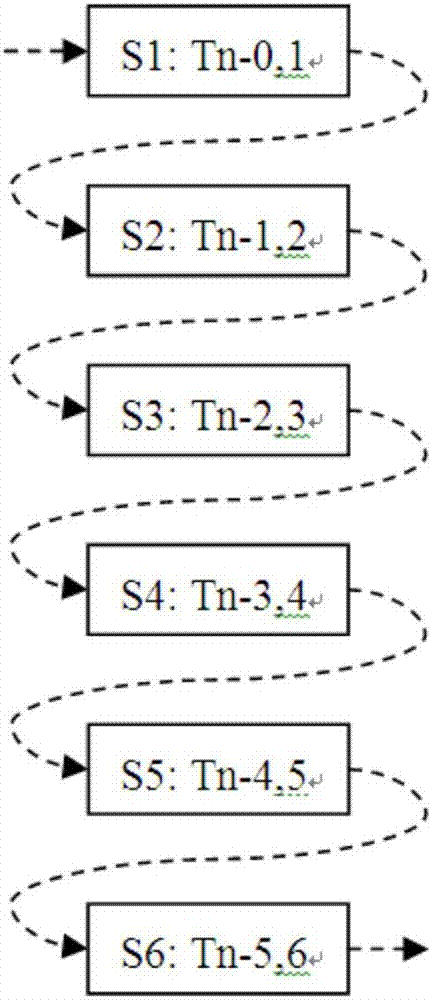

[0045] Traditional serial computing processes sequential tasks one at a time. Processing each task consists of processing several subtasks, and each subtask is handled by its own processing module. The following example divides each task of the pipeline into six levels of subtasks. As shown in Figure 1(a), task $T_n$ is processed through S1, S2, S3, S4, S5, and S6 in sequence, and only after it has finished is the next task $T_{n+1}$ processed. If the processing end time point of task $T_n$ is T i...
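To make the contrast between serial and pipelined processing concrete, the following Python sketch models the six-level example under simplifying assumptions that are not stated in the source: every subtask takes one time unit, and once the serial constraint is lifted, each stage module (S1 to S6) can work on a different task at the same moment. The helper names `serial_schedule`, `pipelined_schedule`, and `makespan` are hypothetical. This is a generic model of pipeline overlap, not the buffering method claimed in this patent.

```python
# Hypothetical timing model (assumption): each subtask S1..S6 lasts one time
# unit, and in the pipelined case each stage module can serve a different
# task at the same time.
NUM_STAGES = 6  # S1..S6, as in the six-level example


def serial_schedule(num_tasks, num_stages=NUM_STAGES):
    """Start time of (task, stage) when task n+1 waits for task n to finish S6."""
    return {(n, s): n * num_stages + s
            for n in range(num_tasks) for s in range(num_stages)}


def pipelined_schedule(num_tasks, num_stages=NUM_STAGES):
    """Start time of (task, stage) when task n enters stage s at time n + s."""
    return {(n, s): n + s
            for n in range(num_tasks) for s in range(num_stages)}


def makespan(schedule):
    """Time at which the last subtask finishes (each subtask lasts one unit)."""
    return max(schedule.values()) + 1


if __name__ == "__main__":
    for tasks in (1, 4, 100):
        # Columns: number of tasks, serial time, pipelined time
        print(tasks, makespan(serial_schedule(tasks)), makespan(pipelined_schedule(tasks)))
    # prints:
    # 1 6 6
    # 4 24 9
    # 100 600 105
```

Under these assumptions, N tasks take 6N time units serially but N + 5 units when pipelined: a single task gains nothing, while the speedup approaches 6 as the number of tasks grows.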
