Method and system for vision-centric deep-learning-based road situation analysis

A deep-learning and vision technology, applied in the field of image processing, that addresses problems such as limited horizontal spatial information and reduced resolution.


Embodiment Construction

[0037] To facilitate understanding of the present invention, embodiments of the present disclosure are described more fully below with reference to the accompanying drawings. Wherever possible, the same reference numbers are used for the same parts throughout the drawings. The described embodiments are evidently only some, not all, of the embodiments of the present invention. Other embodiments that those skilled in the art can derive from the disclosed embodiments, and that are consistent with them, all fall within the protection scope of the present invention.

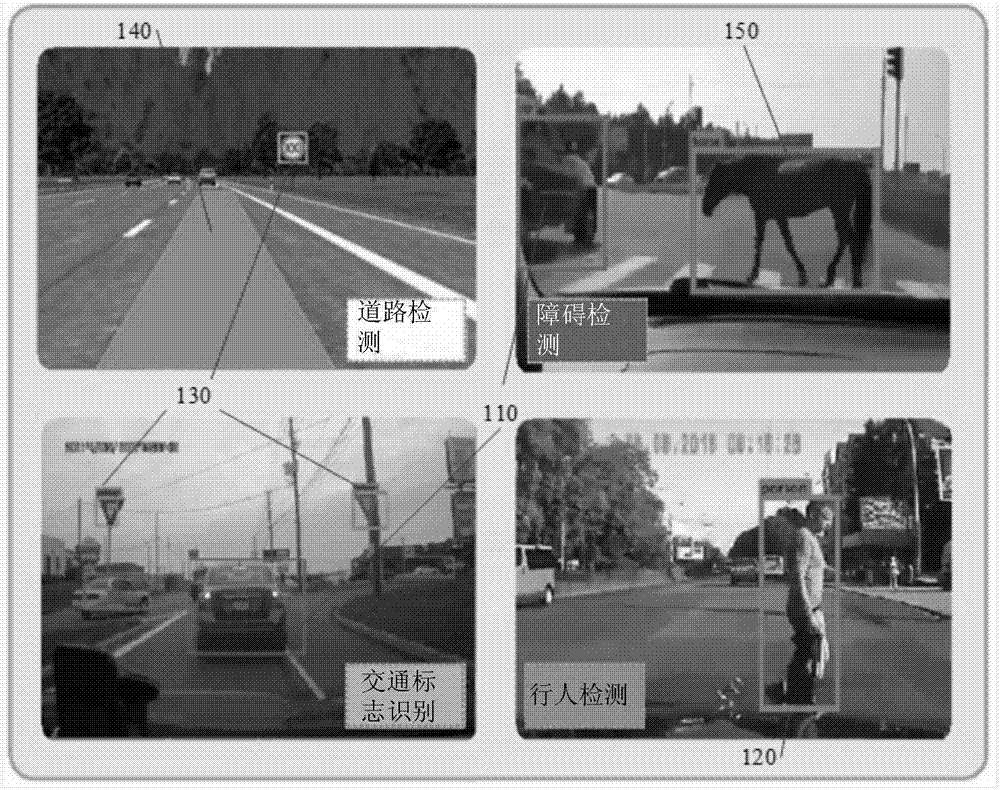

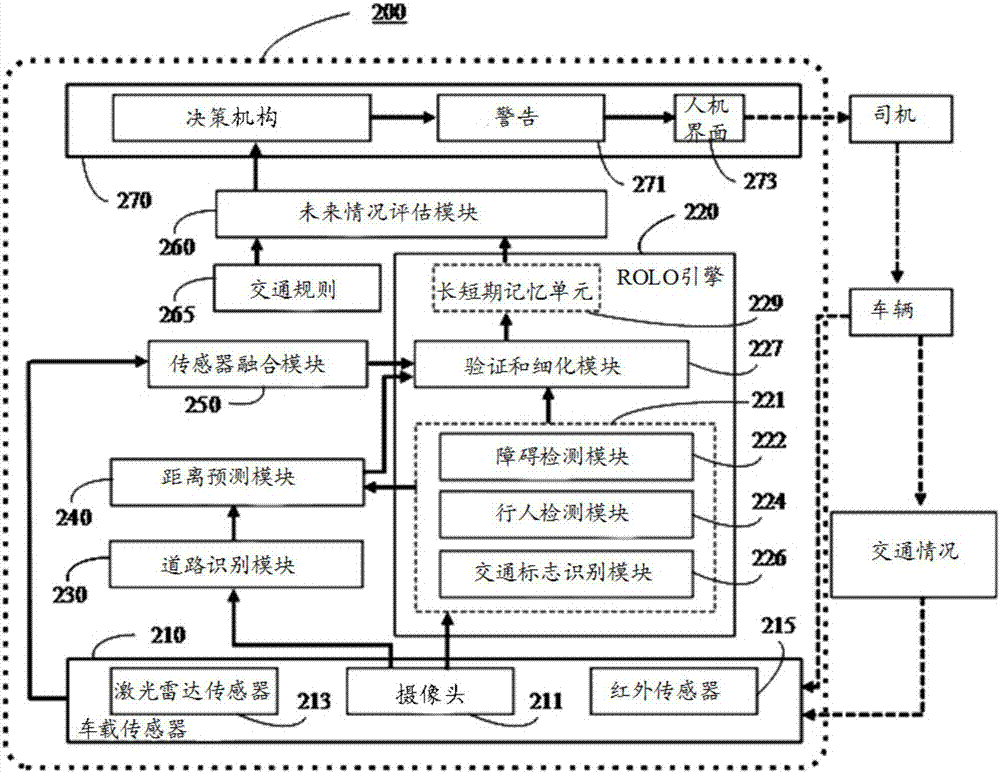

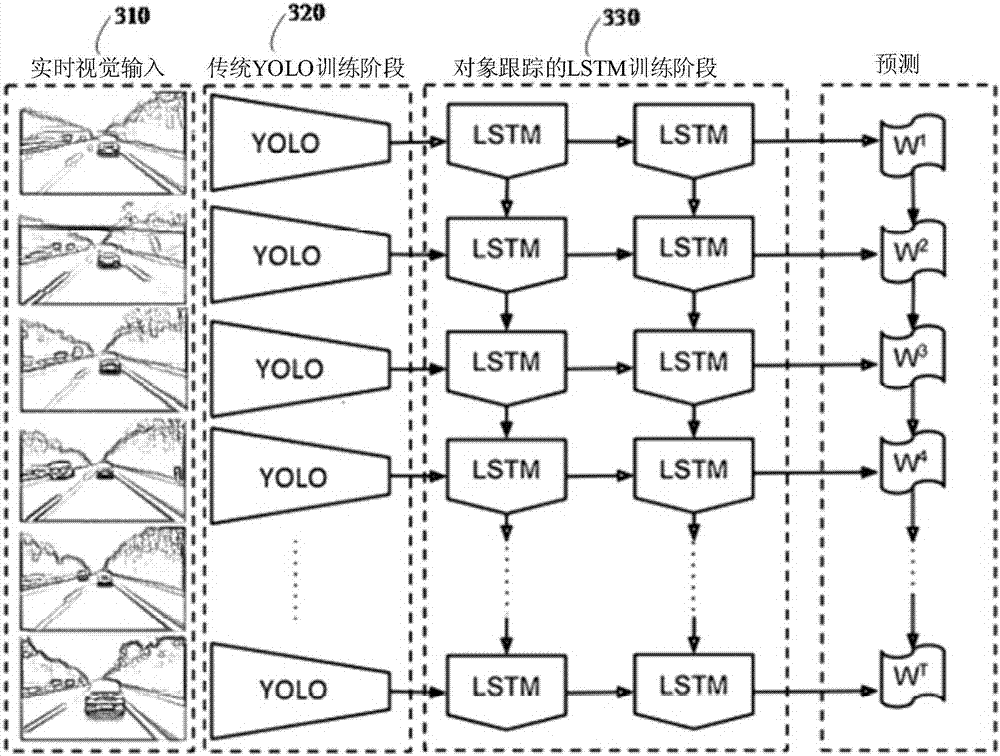

[0038] According to different embodiments of the disclosed subject matter, the present invention provides a vision-centric deep learning-based road condition analysis method and system.

[0039] The vision-centric deep learning-based road condition analysis system is also known ...
