Target classification and identification method based on the fusion of local and global information

A target recognition technology that fuses local information with global information. It addresses the high cost and low accuracy of existing classification methods, making classification more intelligent and more accurate.


Problems solved by technology

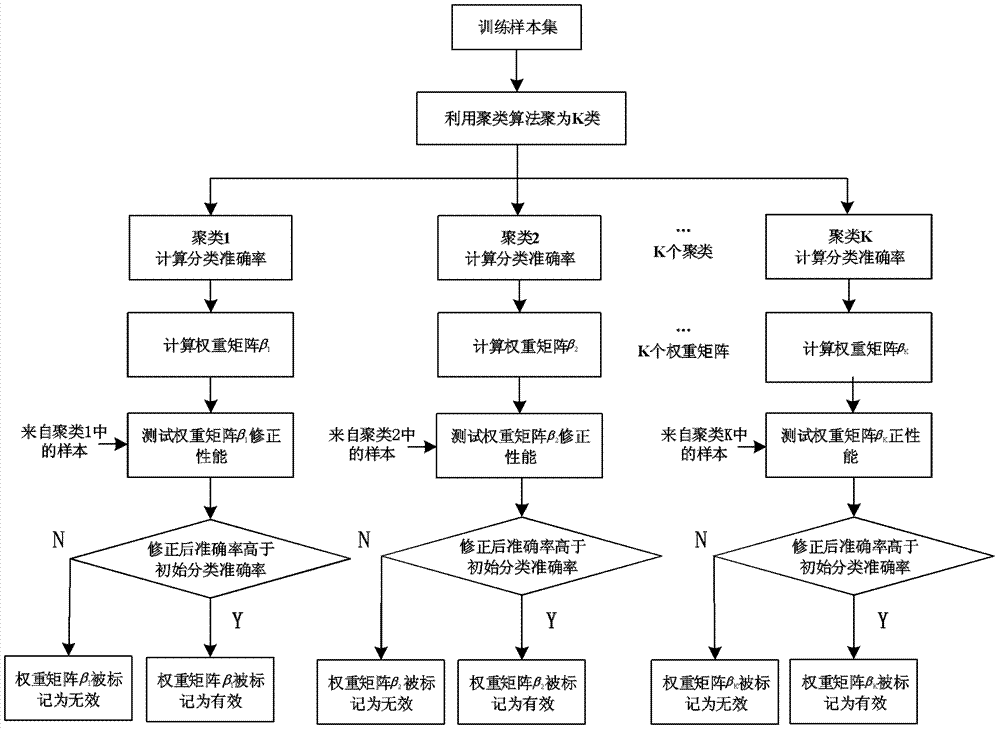

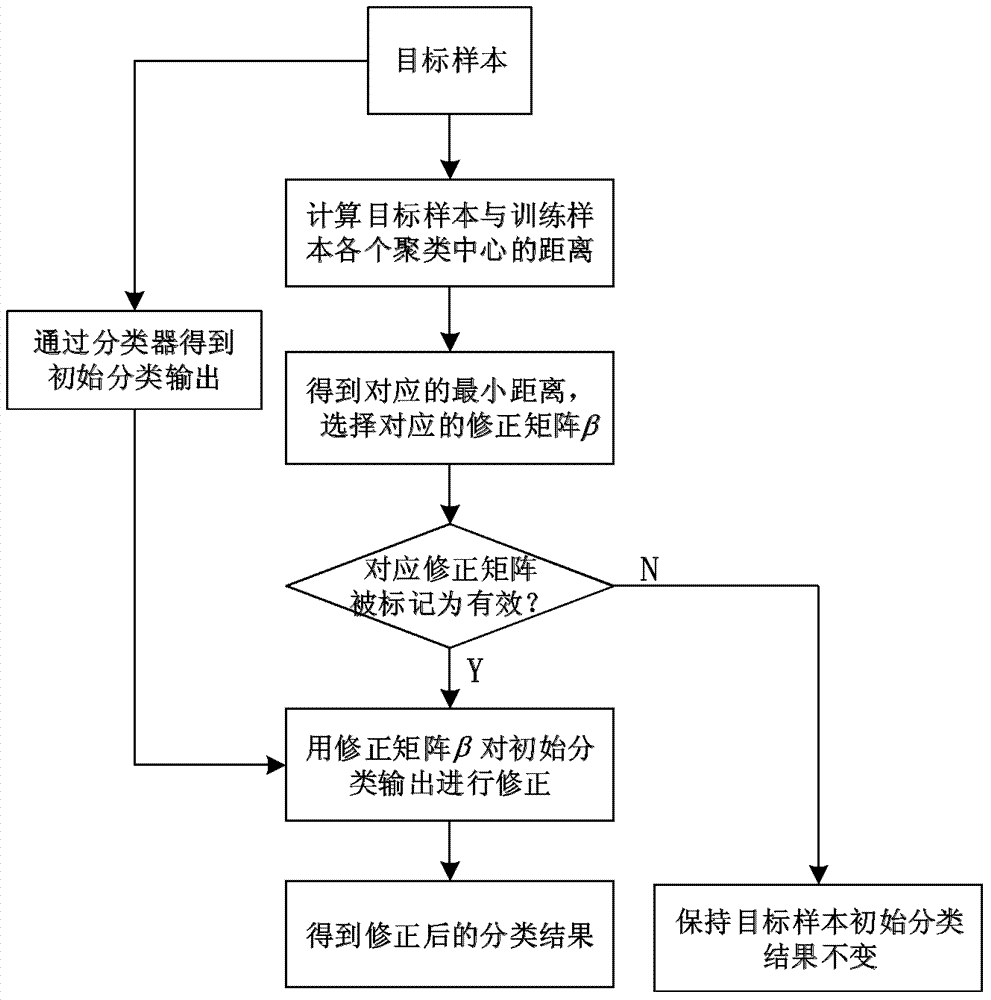


Embodiment

[0053] Embodiment: classification and recognition of real data

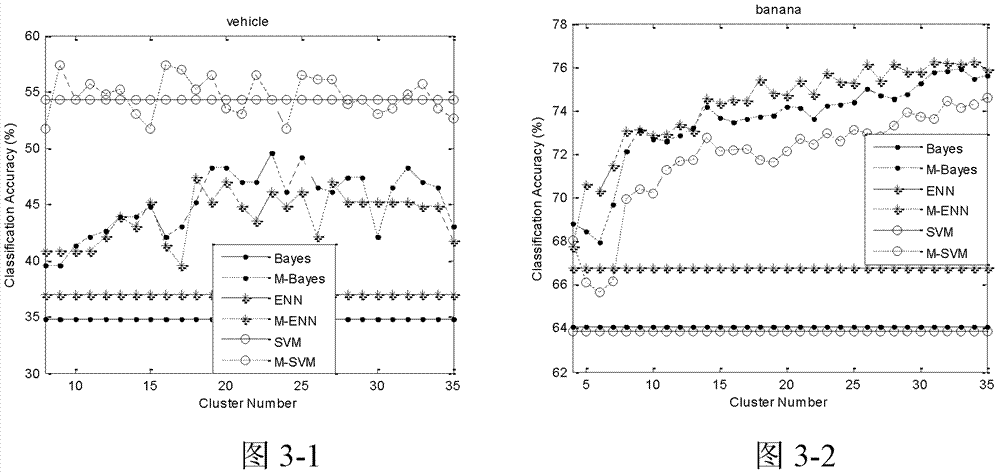

[0054] The proposed approach can be applied to many classification processes, and the specific clustering algorithm and base classifiers can be chosen to suit the application. The effect of the invention was tested using real data sets as test samples. The base classifiers used in this experiment are the naive Bayes classifier, the support vector machine (SVM) classifier, and the ENN classifier. The invention refines the outputs of these base classifiers to obtain better classification results, improving classification accuracy and reducing classification complexity.
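This excerpt does not specify how local and global information are fused, so the following is only an illustrative sketch under assumed choices, not the patented method. It assumes that "global" evidence is a base classifier's class-probability vector for a test sample, that "local" evidence is the class frequency among the sample's k nearest training samples, and that the two are combined by a simple weighted average. The helper names (`knn_local_distribution`, `fuse`, `predict`) and the weight `alpha` are hypothetical.

```python
import math
from collections import Counter

def knn_local_distribution(x, train_X, train_y, classes, k=3):
    """Hypothetical 'local' evidence: class frequencies among the k nearest
    training samples to x (Euclidean distance)."""
    if not 0 < k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training samples")
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(x, train_X[i]))[:k]
    counts = Counter(train_y[i] for i in nearest)
    return [counts[c] / k for c in classes]

def fuse(global_probs, local_probs, alpha=0.5):
    """Assumed fusion rule: convex combination of the base classifier's
    probabilities (global) and the neighbourhood frequencies (local)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [alpha * g + (1 - alpha) * l for g, l in zip(global_probs, local_probs)]

def predict(scores, classes):
    """Return the class with the highest score."""
    return classes[max(range(len(classes)), key=lambda j: scores[j])]

# Toy example: two well-separated classes; the base classifier is assumed
# to output [0.6, 0.4] (favouring 'a') for the test point (4, 4).
train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = ["a", "a", "a", "b", "b", "b"]
classes = ["a", "b"]
base_probs = [0.6, 0.4]
local = knn_local_distribution((4, 4), train_X, train_y, classes, k=3)
fused = fuse(base_probs, local, alpha=0.5)
print(predict(base_probs, classes), predict(fused, classes))
```

In this toy case the base output alone picks `a`, while the three nearest neighbours all belong to `b`, so the fused scores favour `b`. A real system would tune `alpha` (or use a different combination rule, such as evidence-theoretic fusion) on validation data.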

[0055] Several real data sets were obtained from the UCI database as test samples, and the performance of the invention was evaluated on the classification outputs of the three base classifiers. Basic information about these data sets is given in Table 1:

[0056] Data

[0057] Table 1
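The evaluation described in [0055] compares classification outputs on the UCI data sets. The excerpt does not state the exact protocol (hold-out split, number of folds, metric), so the following is a minimal sketch assuming k-fold evaluation with plain accuracy as the metric. The function names and the output labels such as `"NaiveBayes+fusion"` are hypothetical; the folds are contiguous, so the data are assumed to have been shuffled beforehand.

```python
def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds whose sizes differ by at most one.
    Assumes the samples were shuffled beforehand."""
    if not 1 < k <= n:
        raise ValueError("need 1 < k <= n")
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def report(y_true, outputs):
    """Accuracy for each named set of predictions, e.g. a base classifier's
    raw output versus its refined output."""
    return {name: accuracy(y_true, preds) for name, preds in outputs.items()}

# Illustrative labels only; no results from the patent are reproduced here.
y_true = ["a", "b", "a", "b"]
scores = report(y_true, {
    "NaiveBayes": ["a", "a", "a", "b"],
    "NaiveBayes+fusion": ["a", "b", "a", "b"],
})
print(k_fold_indices(10, 3))
print(scores)
```

Each fold in turn would serve as the test set, with the base classifiers trained on the remaining folds, and the per-fold accuracies averaged for each data set.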
