Pedestrian retrieval method using neural-network-based multi-feature fusion

A multi-feature fusion and neural network technology, applied in neural learning methods, biological neural network models, special data processing applications, and similar fields. It addresses three problems: a single feature distance cannot represent similarity, the other retrieved pedestrians have low similarity to the pedestrian being queried, and retrieval based on feature distances is complex. The stated effects are higher retrieval accuracy and similarity, improved convenience, and easy application.

Embodiment Construction

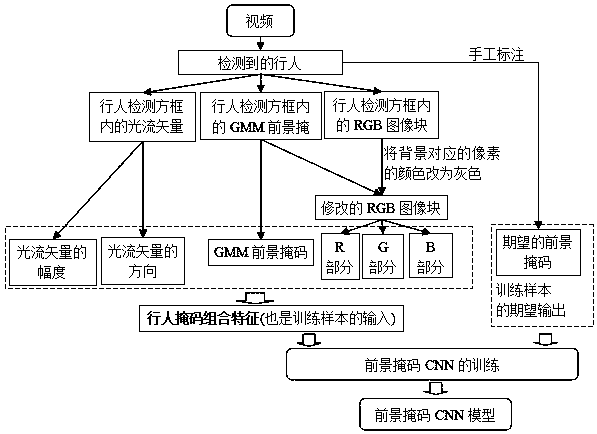

[0030] The neural-network-based multi-feature fusion pedestrian retrieval method is further described below with reference to Figures 1 to 9.

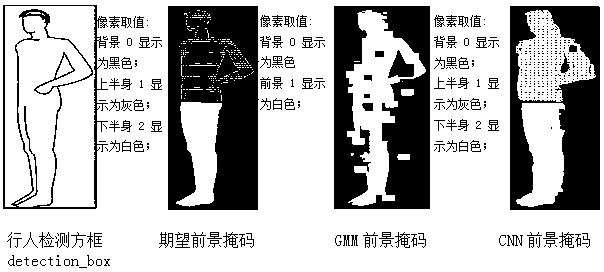

[0031] (1) Dimensions of the foreground mask:

[0032] For each detected pedestrian, a GMM (Gaussian Mixture Model) is used to compute the GMM foreground mask inside the pedestrian box. In the RGB image block contained in the box, the pixels that the GMM foreground mask marks as background are set to gray to eliminate interference from the background area. The height and width are then scaled to the standard size P×Q. The CNN foreground mask is a P×Q matrix in which each element takes one of only three values: background 0, upper body 1, and lower body 2. As shown in Figure 4, the following data, all P×Q matrices, constitute the combined feature of the pedestrian mask: the magnitude of the optical flow vector, the direction of the optical flow vector, the GMM foreground mask, and the R part, the G part, and the B part of...
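The preprocessing in paragraph [0032] can be illustrated with a minimal NumPy sketch. It is not the patent's implementation, and several details are assumptions: the GMM foreground mask is taken as a precomputed binary array (1 for foreground, 0 for background), the optical flow is taken as a precomputed per-pixel (dx, dy) field, the gray level is 128, P is read as the number of rows and Q as the number of columns, and scaling uses nearest-neighbour sampling. The function names are hypothetical. Because the feature list in the source is truncated, the sketch stacks only the six planes the text names.

```python
import numpy as np

GRAY = 128  # assumed gray level; the source does not give a value


def gray_out_background(rgb, fg_mask, gray=GRAY):
    """Return a copy of rgb with pixels where fg_mask is 0 set to gray."""
    out = rgb.copy()
    out[fg_mask == 0] = gray
    return out


def resize_nearest(img, p, q):
    """Nearest-neighbour resize of an HxW or HxWxC array to p rows by q columns."""
    h, w = img.shape[:2]
    rows = np.arange(p) * h // p  # source row for each output row
    cols = np.arange(q) * w // q  # source column for each output column
    return img[rows[:, None], cols[None, :]]


def combined_feature(rgb, fg_mask, flow, p, q):
    """Stack six PxQ planes: flow magnitude, flow direction, mask, R, G, B."""
    rgb = gray_out_background(rgb, fg_mask)
    mag = np.hypot(flow[..., 0], flow[..., 1])    # optical flow magnitude
    ang = np.arctan2(flow[..., 1], flow[..., 0])  # optical flow direction (radians)
    planes = [mag, ang, fg_mask, rgb[..., 0], rgb[..., 1], rgb[..., 2]]
    return np.stack([resize_nearest(x.astype(np.float32), p, q) for x in planes])
```

Calling `combined_feature(rgb, fg_mask, flow, p, q)` on an H×W×3 image block, an H×W mask, and an H×W×2 flow field returns an array of shape `(6, p, q)`. In practice the mask and flow would come from a background subtractor and an optical flow estimator, which this sketch leaves out.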
