Video content description method based on semantic information guidance

A technology involving semantic information and video content, applied in special data processing applications, instruments, and electrical digital data processing. It addresses the problems of cumbersome research methods and disordered timing, improving description accuracy while ensuring temporal and spatial correlation.


Embodiment

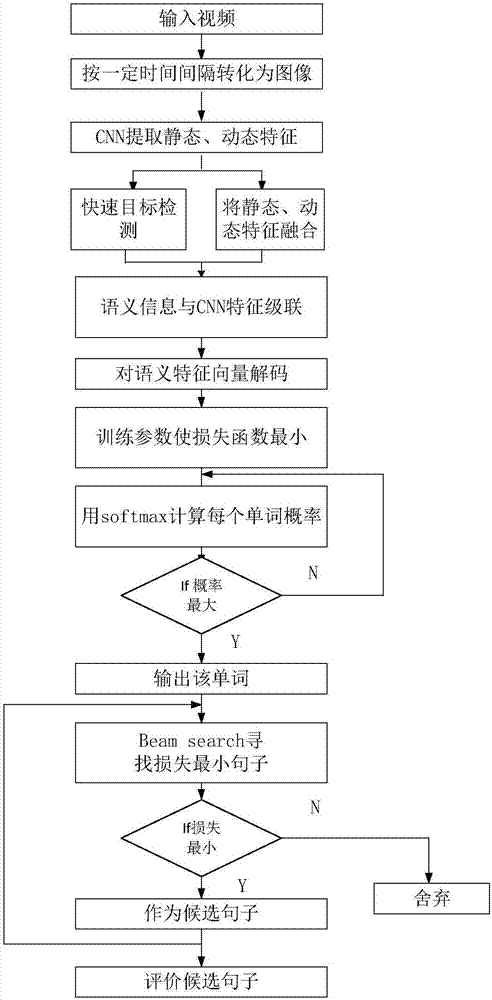

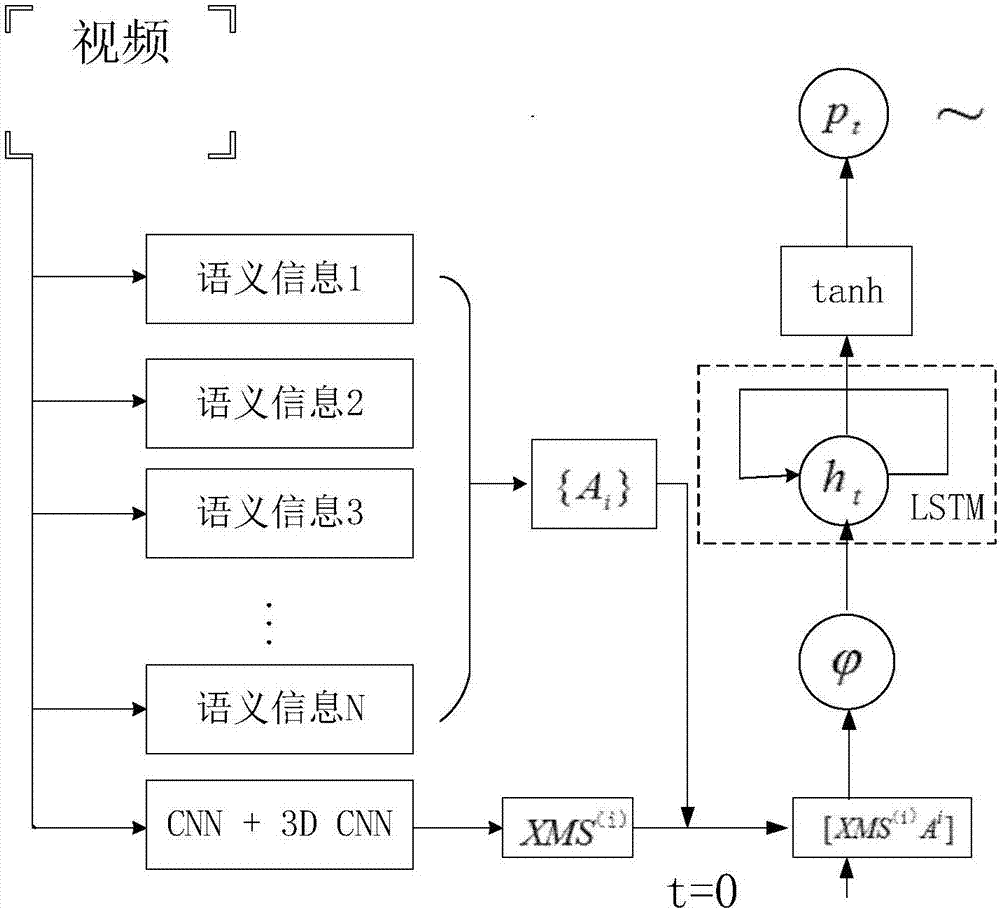

[0070] With reference to Figure 2, a specific example of training and testing for video content description is given below. The detailed calculation process is as follows:

[0071] (1) A given video has 280 frames, which are divided into 28 blocks of 10 frames each. The first frame of each block is taken, so the video is converted into 28 consecutive pictures;

[0072] (2) Following the method given in formula (1), the pre-trained convolutional neural network is used to extract the static features of the 28 pictures and the dynamic features of the entire video, and the two are fused by cascading (concatenation);

[0073] (3) A pre-trained Faster R-CNN is used to perform fast object detection on the 28 pictures, yielding 28 semantic information vectors of 81 dimensions each;

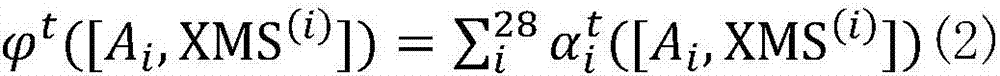

[0074] (4) The semantic information vector of each frame is concatenated with the original feature vector extracted by the CNN + 3-D CNN to form a 1457-dimensional semantic feature vector. According to the methods listed ...
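Steps (1) to (4) can be traced as a data-flow skeleton. This is a minimal sketch under stated assumptions, not the patent's implementation.

Only these figures come from the text: 280 frames, 28 blocks, 81 semantic dimensions and 1457 output dimensions. Together they imply 1457 - 81 = 1376 dimensions for the CNN + 3-D CNN feature.

Formula (1) is not reproduced in this excerpt, so the CNN, 3-D CNN and Faster R-CNN models are replaced by placeholder vectors. The following are all hypothetical:
- the per-frame reuse of the single video-level dynamic feature in step (2);
- the max-confidence encoding of detections in step (3);
- the function names;
- the 1000/376 static/dynamic split.

An 81-dimensional vector matches 80 COCO object categories plus a background class, but the excerpt does not say which label set was used.

```python
"""Hypothetical sketch of steps (1)-(4): frame sampling, cascade fusion,
semantic vectors and semantic feature concatenation. The neural networks
are stubbed out; only the data flow and vector sizes are illustrated."""

from typing import List, Sequence, Tuple

NUM_CLASSES = 81  # per-frame semantic vector size stated in step (3)


def sample_block_first_frames(num_frames: int, num_blocks: int) -> List[int]:
    """Step (1): split frame indices into equal blocks and keep the first
    index of each block (280 frames / 28 blocks -> one frame every 10)."""
    if num_frames % num_blocks != 0:
        raise ValueError("this sketch assumes frames divide evenly into blocks")
    block_len = num_frames // num_blocks
    return [b * block_len for b in range(num_blocks)]


def cascade_fuse(static_feats: Sequence[Sequence[float]],
                 dynamic_feat: Sequence[float]) -> List[List[float]]:
    """Step (2), assumed form: append the single video-level dynamic
    (3-D CNN) feature to each frame's static (2-D CNN) feature."""
    return [list(s) + list(dynamic_feat) for s in static_feats]


def semantic_vector(detections: Sequence[Tuple[int, float]],
                    num_classes: int = NUM_CLASSES,
                    threshold: float = 0.5) -> List[float]:
    """Step (3), assumed encoding: entry c holds the highest detector
    confidence for class c among detections at or above `threshold`,
    and 0.0 if the class was not detected."""
    vec = [0.0] * num_classes
    for class_id, score in detections:
        if score >= threshold and score > vec[class_id]:
            vec[class_id] = score
    return vec


def semantic_features(fused: Sequence[Sequence[float]],
                      semantics: Sequence[Sequence[float]]) -> List[List[float]]:
    """Step (4): concatenate each frame's fused feature with its semantic vector."""
    if len(fused) != len(semantics):
        raise ValueError("one semantic vector is needed per sampled frame")
    return [list(f) + list(s) for f, s in zip(fused, semantics)]


if __name__ == "__main__":
    frames = sample_block_first_frames(280, 28)
    print(len(frames), frames[:3], frames[-1])  # 28 [0, 10, 20] 270

    # Placeholder features: 1376 (= 1457 - 81) fused dimensions per frame.
    # The 1000 static + 376 dynamic split is hypothetical.
    static = [[0.0] * 1000 for _ in frames]
    dynamic = [0.0] * 376
    fused = cascade_fuse(static, dynamic)

    # Fake detections as (class_id, confidence) pairs, identical for every frame;
    # the class-5 detection falls below the threshold and is ignored.
    sem = [semantic_vector([(1, 0.9), (1, 0.7), (5, 0.3)]) for _ in frames]
    feats = semantic_features(fused, sem)
    print(len(feats), len(feats[0]))  # 28 1457
```

Each sampled frame ends up with one 1457-dimensional vector. This matches the per-frame semantic feature described in step (4), whatever the true internal split of the 1376 CNN + 3-D CNN dimensions is.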


© 2024 PatSnap. All rights reserved.