Scene three-dimensional data registration method and navigation system error correction method

A technology relating to scene three-dimensional data, applied in the field of navigation systems, that addresses the accumulation of errors and the resulting increase in positioning error, so as to ensure the success rate, improve accuracy, and eliminate the effect of data mismatch.


Embodiment

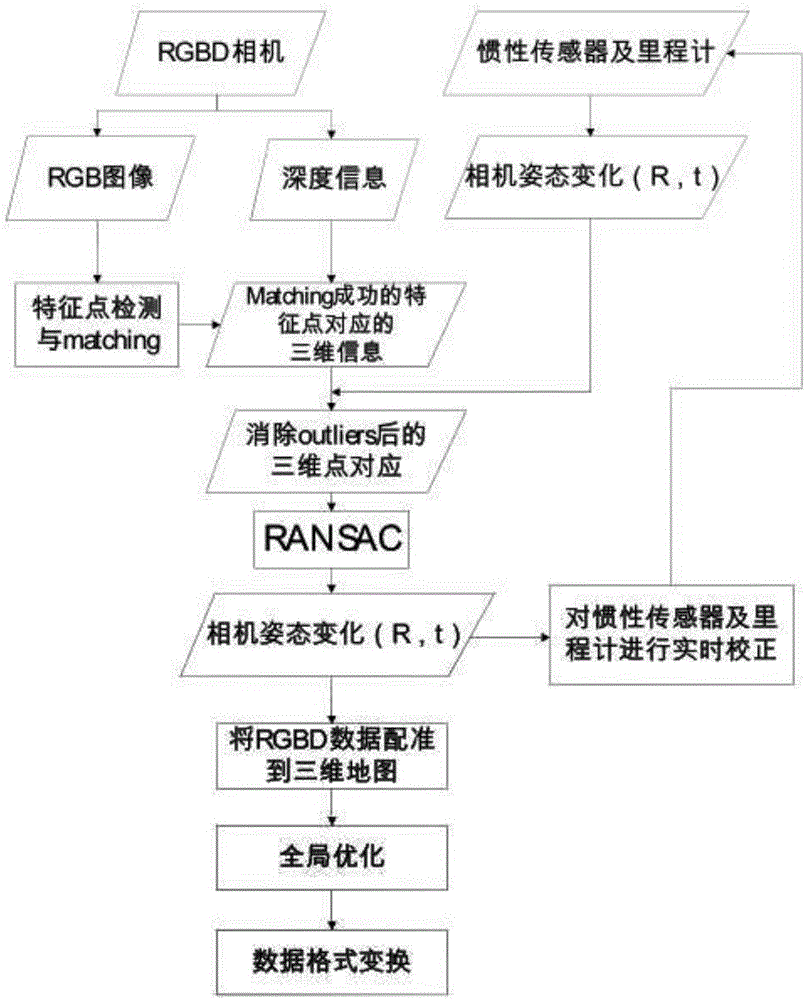

[0036] Referring to FIG. 1, this embodiment provides a scene 3D data registration method.

[0037] The method includes the following steps:

[0038] 1) Perform spatial calibration and time synchronization of the inertial sensor and the visual sensor;

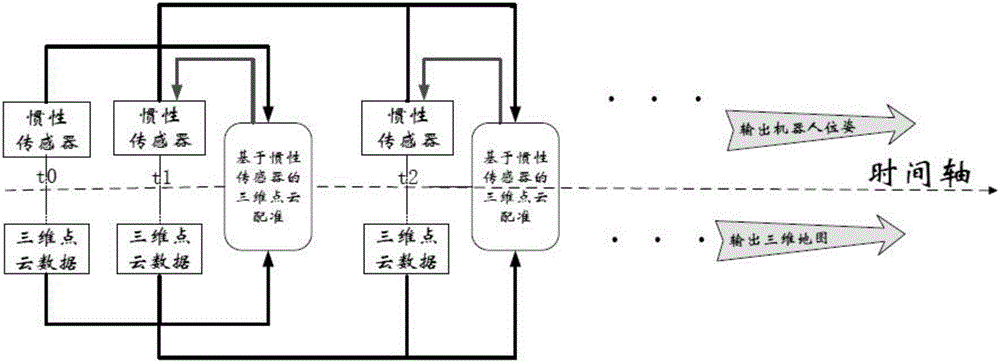

[0039] 2) The inertial sensor outputs pose information at two adjacent moments and transmits it to the visual sensor, which registers the two adjacent frames of data based on the pose information given by the inertial sensor;

[0040] 3) Calculate the attitude change of the visual sensor from the result of the visual sensor's data registration;

[0041] 4) Correct the inertial sensor in real time using the attitude change of the visual sensor;

[0042] 5) After registration by the visual sensor, register the data into the 3D map.
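Step 1) requires inertial and visual samples to refer to the same instant. The following is a minimal sketch of the time-synchronization half only, assuming the IMU reports timestamped positions and unit quaternions (w, x, y, z) and that a constant camera-to-IMU clock offset has already been estimated. The IMU pose is interpolated at each camera timestamp, linearly for position and by spherical linear interpolation (SLERP) for orientation. The function names and the `time_offset` parameter are hypothetical; the patent does not state how synchronization is performed.

```python
import numpy as np

def slerp(q0, q1, alpha):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    q0, q1 = np.asarray(q0, dtype=float), np.asarray(q1, dtype=float)
    dot = float(np.dot(q0, q1))
    if dot < 0.0:                      # q and -q are the same rotation; take the short arc
        q1, dot = -q1, -dot
    if dot > 0.9995:                   # nearly parallel: normalised linear interpolation
        q = q0 + alpha * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(dot)
    return (np.sin((1.0 - alpha) * theta) * q0 + np.sin(alpha * theta) * q1) / np.sin(theta)

def imu_pose_at(t_cam, imu_times, imu_pos, imu_quat, time_offset=0.0):
    """Interpolate the IMU pose at a camera timestamp.

    time_offset is a hypothetical pre-estimated clock offset: t_imu = t_cam + time_offset.
    Assumes the shifted time lies inside the IMU sample range.
    """
    imu_times = np.asarray(imu_times, dtype=float)
    imu_pos = np.asarray(imu_pos, dtype=float)
    t = t_cam + time_offset
    # Index of the IMU sample just before t, clamped so that i + 1 is valid.
    i = int(np.clip(np.searchsorted(imu_times, t) - 1, 0, len(imu_times) - 2))
    alpha = (t - imu_times[i]) / (imu_times[i + 1] - imu_times[i])
    pos = (1.0 - alpha) * imu_pos[i] + alpha * imu_pos[i + 1]
    quat = slerp(imu_quat[i], imu_quat[i + 1], alpha)
    return pos, quat
```

Spatial calibration (the fixed camera-to-IMU extrinsic transform) would then be applied to the interpolated pose; it is left out here because the patent gives no parameterization for it.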
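Steps 2) and 3) can be illustrated with point-to-point ICP (iterative closest point) seeded by the inertial pose. This is a sketch under assumptions: the patent does not name its registration algorithm, so ICP with a Kabsch (SVD) alignment step is swapped in here for illustration. `src` is taken to be the current frame's points and `dst` the previous frame's, and all helper names are hypothetical. The returned rotation is the visual sensor's relative attitude between the two frames, and its axis-angle magnitude is one way to express the attitude change.

```python
import numpy as np

def best_fit_transform(src, dst):
    """Least-squares rigid transform (Kabsch): R, t minimising sum ||R @ s + t - d||^2."""
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:           # reflection: flip the last singular vector
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    return R, c_dst - R @ c_src

def icp(src, dst, R0=None, t0=None, iters=30, tol=1e-10):
    """Point-to-point ICP aligning src (current frame) to dst (previous frame).

    (R0, t0) is the initial guess, e.g. the relative pose reported by the inertial sensor.
    """
    R = np.eye(3) if R0 is None else np.asarray(R0, dtype=float)
    t = np.zeros(3) if t0 is None else np.asarray(t0, dtype=float)
    prev_err = np.inf
    for _ in range(iters):
        moved = src @ R.T + t
        # Brute-force nearest neighbours; a k-d tree would be used at realistic sizes.
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(src)), nn]).mean()
        if prev_err - err < tol:       # stop once the mean residual no longer improves
            break
        prev_err = err
        R, t = best_fit_transform(src, dst[nn])
    return R, t

def rotation_angle_deg(R):
    """Magnitude of the attitude change encoded by R (axis-angle angle), in degrees."""
    c = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    return float(np.degrees(np.arccos(c)))
```

A good inertial initial guess matters because ICP only converges locally; with a poor seed, nearest-neighbour correspondences can lock onto the wrong structure.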
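For step 4), the patent text here does not say which inertial quantities are corrected. One simple possibility, shown as a hypothetical single-axis sketch, is to treat the gap between the IMU-integrated rotation and the visually measured attitude change over the same interval as gyroscope bias, and to track it with an exponential moving average. The class name, the gain `k`, and the single-axis simplification are all assumptions.

```python
class GyroBiasCorrector:
    """Hypothetical single-axis gyro bias estimator driven by visual attitude changes."""

    def __init__(self, k=0.2):
        self.k = k          # smoothing gain, 0 < k <= 1
        self.bias = 0.0     # estimated gyro bias, rad/s

    def update(self, imu_delta, vis_delta, dt):
        """imu_delta: rotation integrated from raw gyro rates over dt (rad).
        vis_delta: attitude change measured by the visual sensor over the same dt (rad).
        """
        observed_bias = (imu_delta - vis_delta) / dt
        # Move the running estimate a fraction k toward the latest observation.
        self.bias += self.k * (observed_bias - self.bias)
        return self.bias

    def corrected_rate(self, raw_rate):
        """Apply the current bias estimate to a raw gyro reading (rad/s)."""
        return raw_rate - self.bias
```

A small `k` smooths out noisy visual estimates at the cost of slower convergence; a full system would estimate a 3-axis bias, typically inside a Kalman filter.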
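Step 5) amounts to chaining frame-to-frame transforms into a global pose and expressing each registered frame in map coordinates. A minimal sketch, assuming a plain point-list map and a 4x4 homogeneous transform `T_prev_cur` that maps current-frame points into the previous frame; `PointMap` and `make_pose` are hypothetical names, not structures described in the patent.

```python
import numpy as np

def make_pose(R, t):
    """Build a 4x4 homogeneous transform from rotation R (3x3) and translation t (3,)."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

class PointMap:
    """Hypothetical global map that accumulates registered frames in world coordinates."""

    def __init__(self):
        self.T_world_cam = np.eye(4)      # pose of the most recent frame in the world
        self.points = np.empty((0, 3))    # accumulated map points

    def add_frame(self, points_cam, T_prev_cur):
        """Chain the relative transform onto the global pose, then insert the frame."""
        self.T_world_cam = self.T_world_cam @ T_prev_cur
        R, t = self.T_world_cam[:3, :3], self.T_world_cam[:3, 3]
        world = np.asarray(points_cam, dtype=float) @ R.T + t
        self.points = np.vstack([self.points, world])
        return self.T_world_cam
```

The first frame is added with the identity transform, which fixes the map origin at the first camera pose. Any error in a relative transform propagates to every later pose, which is the accumulation of errors the method aims to limit.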

[0043] The visual sensor is composed of an RGBD camera.
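Because the visual sensor is an RGBD camera, each depth image can be back-projected into a 3D point cloud before registration using the pinhole model, X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy. The sketch below assumes known intrinsics (fx, fy, cx, cy) and a depth scale of 1000 raw units per metre; these are hypothetical parameters, since the patent text shown here does not give them.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy, depth_scale=1000.0):
    """Back-project a depth image (H x W, raw units) into camera-frame 3D points.

    depth_scale converts raw values to metres (1000 for millimetre depth maps, a
    common but device-specific convention). Pixels with zero depth are dropped.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]              # v: row index, u: column index
    z = depth.astype(float) / depth_scale
    valid = z > 0
    x = (u[valid] - cx) * z[valid] / fx
    y = (v[valid] - cy) * z[valid] / fy
    return np.stack([x, y, z[valid]], axis=1)
```

Points come out in row-major pixel order, which keeps a simple correspondence between the returned rows and the valid pixels of the image.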

[0044] In step 2), the visual sensor works as follows:

[0045] 2a) The RGBD camera capt...
