Remote sensing image fusion method based on contourlet transform and guided filter

A remote sensing image fusion technology based on the contourlet transform, applied in the field of image processing. It addresses problems such as reduced image contrast and unclear expression of image edge characteristics, and achieves high speed, clear edge detail information, and high efficiency.

Examples

Embodiment 1

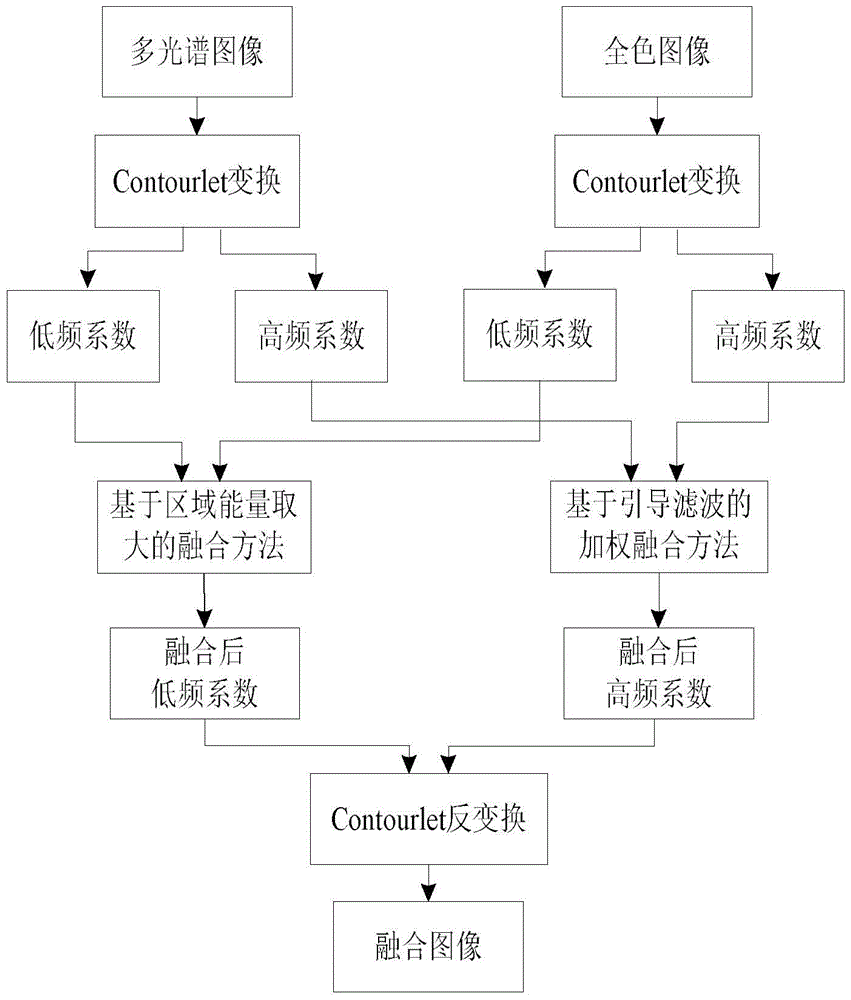

[0024] The present invention proposes a remote sensing image fusion method based on the contourlet transform and guided filtering. Referring to Figure 1, the method includes the following steps:

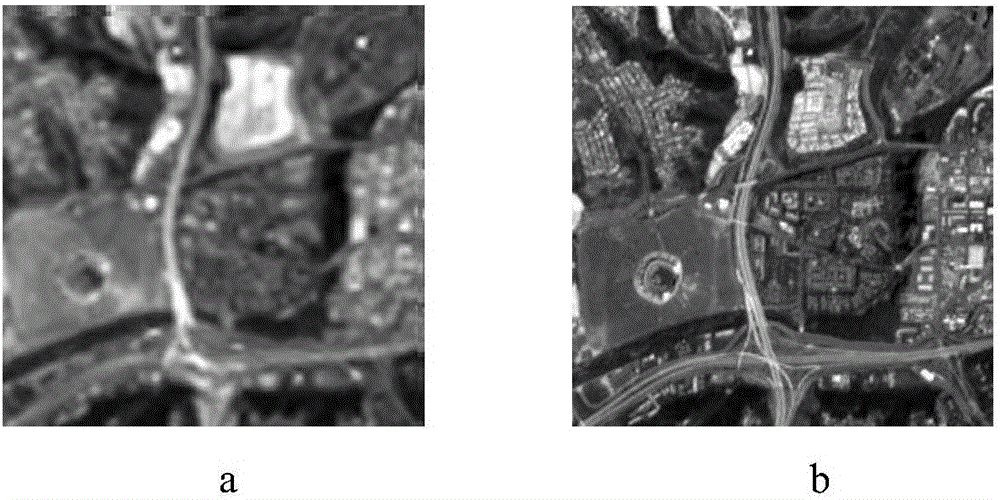

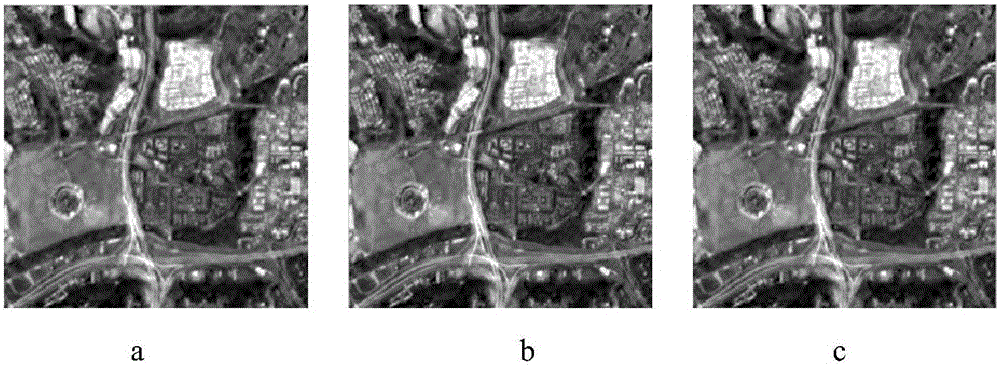

[0025] (1) Obtain the two images to be fused: a multispectral image and a panchromatic image. Both are captured of the same target at the same time by equipment with different imaging characteristics, as shown in Figure 3, which contains two remote sensing images representing surface information: Figure 3a is the multispectral image and Figure 3b is the panchromatic image. Apply the contourlet transform to each of the two source images separately; in the present invention the contourlet transform uses 3 decomposition levels. The contourlet decomposition yields the high-frequency coefficients and low-frequency coefficients of each source image.

[0026] (2) The high-frequency coefficients of the two source images are fused using a weig...
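Step (1) can be illustrated with a small multiscale decomposition. The sketch below is not a contourlet transform: it is a simplified 3-level pyramid (2x2 block averaging and nearest-neighbor upsampling) that stands in for the multiscale stage only, and it omits the directional filter bank that a real contourlet transform applies to each detail band. The function names `decompose` and `reconstruct` and the random test image are assumptions made for illustration.

```python
import numpy as np

def decompose(image, levels=3):
    """Split an image into `levels` detail bands and one low-frequency band.

    Simplified stand-in for the multiscale stage of a contourlet transform;
    no directional filtering is performed. Assumes the image height and
    width are divisible by 2**levels.
    """
    details = []
    current = image.astype(float)
    for _ in range(levels):
        h, w = current.shape
        # Coarse approximation: average each 2x2 block.
        coarse = current.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))
        # Predict the finer level from the coarse one by pixel replication.
        predicted = np.repeat(np.repeat(coarse, 2, axis=0), 2, axis=1)
        # The residual holds the high-frequency content of this level.
        details.append(current - predicted)
        current = coarse
    return details, current

def reconstruct(details, low):
    """Invert `decompose` by adding the detail bands back, coarse to fine."""
    current = low
    for detail in reversed(details):
        current = np.repeat(np.repeat(current, 2, axis=0), 2, axis=1) + detail
    return current

# Hypothetical 64x64 panchromatic image used only for demonstration.
rng = np.random.default_rng(0)
pan = rng.random((64, 64))
high, low = decompose(pan, levels=3)
restored = reconstruct(high, low)
```

Each source image would be decomposed this way, giving one list of high-frequency bands and one low-frequency band per image, which the later fusion steps combine band by band.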

Embodiment 2

[0031] The remote sensing image fusion method based on the contourlet transform and guided filtering is the same as in Embodiment 1; see Figure 2. The weighted fusion method based on guided filtering described in step (2), which can also be called the weighted fusion rule based on guided filtering, includes the following steps:

[0032] (2a) Let $H_1$ and $H_2$ denote a pair of high-frequency coefficient sets of the multispectral image and the panchromatic image, respectively, at a given scale and direction. Comparing $H_1$ and $H_2$ pixel by pixel yields the weight maps $W_1$ and $W_2$:

[0033]
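The pixel-wise comparison in step (2a) can be sketched as follows. The exact comparison rule is not reproduced in this text, so the sketch assumes a common choice: the coefficient with the larger absolute value receives weight 1 and the other receives weight 0, with ties going to the first input. The function name `comparison_weight_maps` and the sample arrays are hypothetical.

```python
import numpy as np

def comparison_weight_maps(h1, h2):
    """Binary weight maps from a pixel-wise comparison of two coefficient arrays.

    Assumption (not confirmed by the source): the coefficient with the larger
    absolute value wins, and ties go to h1.
    """
    w1 = (np.abs(h1) >= np.abs(h2)).astype(float)
    w2 = 1.0 - w1
    return w1, w2

# Small hypothetical coefficient arrays for demonstration.
h1 = np.array([[0.9, -0.1], [0.2, -0.7]])
h2 = np.array([[0.3, 0.5], [-0.2, 0.1]])
w1, w2 = comparison_weight_maps(h1, h2)
```

Under this assumption the two maps are complementary, so every pixel is assigned to exactly one source before any smoothing is applied.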

[0034] (2b) The weight maps obtained by direct comparison contain noise and their edges are not aligned, so guided filtering is applied to the weight maps $W_1$ and $W_2$, with the two high-frequency coefficient sets $H_1$ and $H_2$ serving as the respective guide images. When using the guided filter for filtering, the parameter s...
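A minimal sketch of step (2b), assuming the standard box-filter formulation of the guided filter. The radius `r=2` and regularization `eps=1e-3` are placeholder values chosen for illustration, not the parameters of the invention, and the final normalized weighted sum is an assumed form of the weighted fusion rule. The binary weight maps are built with the same assumed larger-absolute-value comparison.

```python
import numpy as np

def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window, replicating edge pixels at borders."""
    k = 2 * r + 1
    padded = np.pad(x, r, mode="edge")
    # Integral image with a leading row and column of zeros.
    integral = np.pad(padded, ((1, 0), (1, 0)), mode="constant")
    integral = integral.cumsum(axis=0).cumsum(axis=1)
    window_sum = (integral[k:, k:] - integral[:-k, k:]
                  - integral[k:, :-k] + integral[:-k, :-k])
    return window_sum / (k * k)

def guided_filter(guide, src, r, eps):
    """Filter `src` so that its edges follow those of `guide`."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    var_i = box_mean(guide * guide, r) - mean_i * mean_i
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    a = cov_ip / (var_i + eps)   # local linear coefficient
    b = mean_p - a * mean_i      # local offset
    return box_mean(a, r) * guide + box_mean(b, r)

# Hypothetical high-frequency coefficient arrays for demonstration.
rng = np.random.default_rng(0)
h1 = rng.standard_normal((32, 32))
h2 = rng.standard_normal((32, 32))

# Assumed comparison rule: larger absolute value wins.
w1 = (np.abs(h1) >= np.abs(h2)).astype(float)
w2 = 1.0 - w1

# Each weight map is refined using its own coefficient array as the guide.
w1_refined = np.clip(guided_filter(h1, w1, r=2, eps=1e-3), 0.0, 1.0)
w2_refined = np.clip(guided_filter(h2, w2, r=2, eps=1e-3), 0.0, 1.0)

# Assumed fusion rule: normalized weighted sum of the two coefficient arrays.
fused = (w1_refined * h1 + w2_refined * h2) / (w1_refined + w2_refined + 1e-12)
```

The clipping and the small constant in the denominator are defensive choices of this sketch: guided filter outputs can fall slightly outside [0, 1], and both refined weights could in principle be zero at the same pixel.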

Embodiment 3

[0044] The remote sensing image fusion method based on the contourlet transform and guided filtering is the same as in Embodiments 1-2; see Figure 2. The low-frequency fusion method based on large region energy described in step (3), also called the low-frequency fusion rule based on large region energy, includes the following steps:

[0045] (3a) Calculate the region energy of each of the two low-frequency coefficient sets. In this example, a window with region radius $l=4$ is used. The choice of window size depends on the specific image content but has little influence on the experimental results, so it can be chosen freely during experiments. Let $C_1$ denote the low-frequency coefficients of the multispectral image and $C_2$ the low-frequency coefficients of the panchromatic image, and the low-frequency coefficients C of multispect...
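The region energy computation in step (3a) can be sketched as follows. The energy formula is truncated in this text, so the sketch assumes the usual definition: the sum of squared coefficients over a (2l+1) x (2l+1) window, here with l=4. It also assumes that the fused coefficient at each pixel is taken from whichever source has the larger region energy. The function names and the zero padding at the borders are illustrative choices.

```python
import numpy as np

def region_energy(c, radius=4):
    """Sum of squared coefficients over a (2*radius+1)^2 window.

    Assumed definition; windows are truncated at the image border, which is
    implemented here by zero padding.
    """
    k = 2 * radius + 1
    padded = np.pad(c.astype(float) ** 2, radius, mode="constant")
    # Integral image with a leading row and column of zeros.
    integral = np.pad(padded, ((1, 0), (1, 0)), mode="constant")
    integral = integral.cumsum(axis=0).cumsum(axis=1)
    return (integral[k:, k:] - integral[:-k, k:]
            - integral[k:, :-k] + integral[:-k, :-k])

def fuse_low_frequency(c1, c2, radius=4):
    """Pick, per pixel, the coefficient whose region energy is larger (assumed rule)."""
    e1 = region_energy(c1, radius)
    e2 = region_energy(c2, radius)
    return np.where(e1 >= e2, c1, c2)

# Hypothetical low-frequency bands for demonstration.
c1 = np.full((16, 16), 2.0)   # stands in for the multispectral band
c2 = np.ones((16, 16))        # stands in for the panchromatic band
energy_of_ones = region_energy(c2, radius=4)
fused_low = fuse_low_frequency(c1, c2, radius=4)
```

With radius 4 an interior window covers 81 pixels, while a corner window covers only 25 because the part outside the image contributes nothing.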
