Autonomous grasping method for an under-actuated robot hand based on stereo vision

A method combining stereo vision with an under-actuated hand, applied in the fields of manipulators, program-controlled manipulators, instruments, etc., that addresses problems such as the inability to obtain grasping points for complex objects.


Examples

Specific Embodiment 1

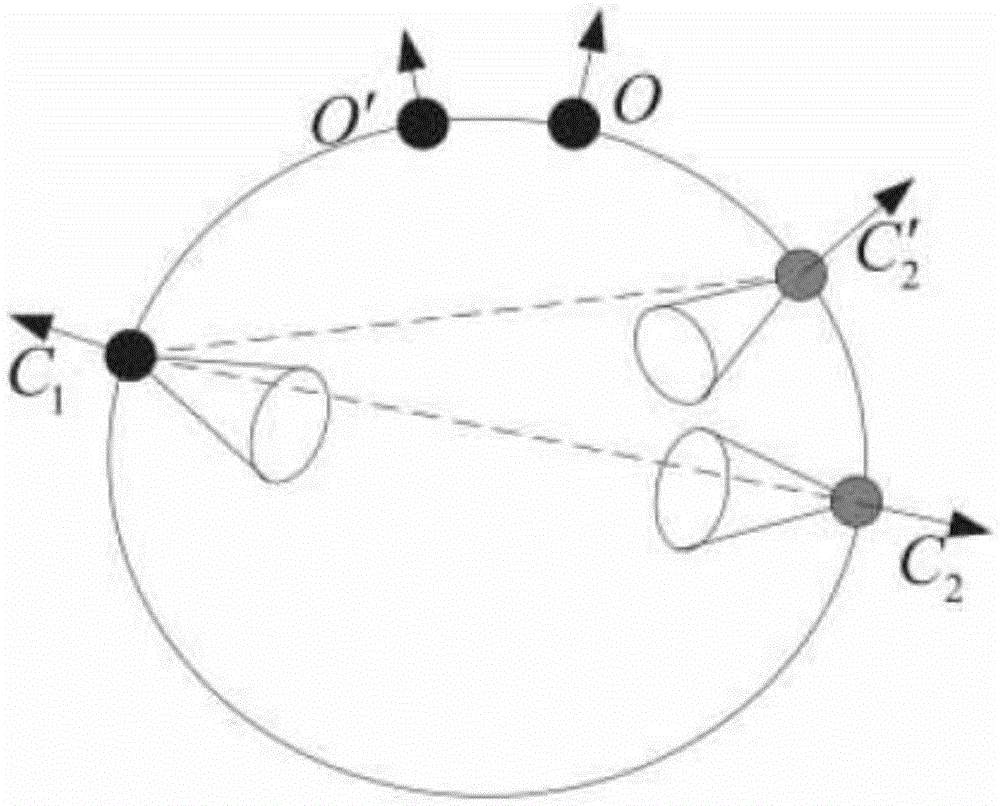

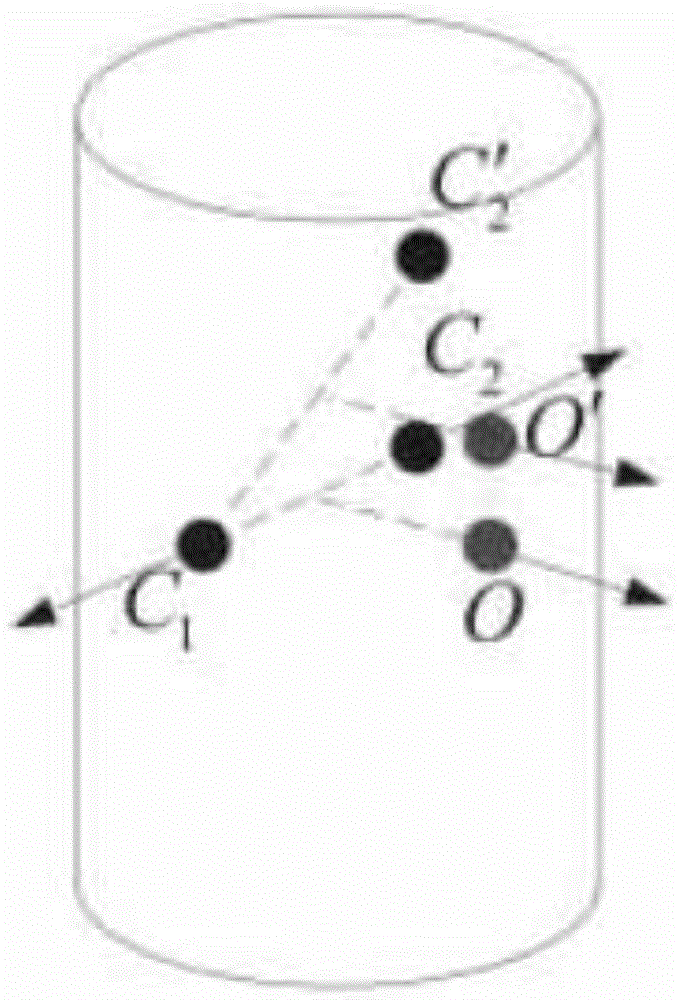

[0051] The stereo-vision-based autonomous grasping method for the under-actuated hand of a robot comprises the following steps:

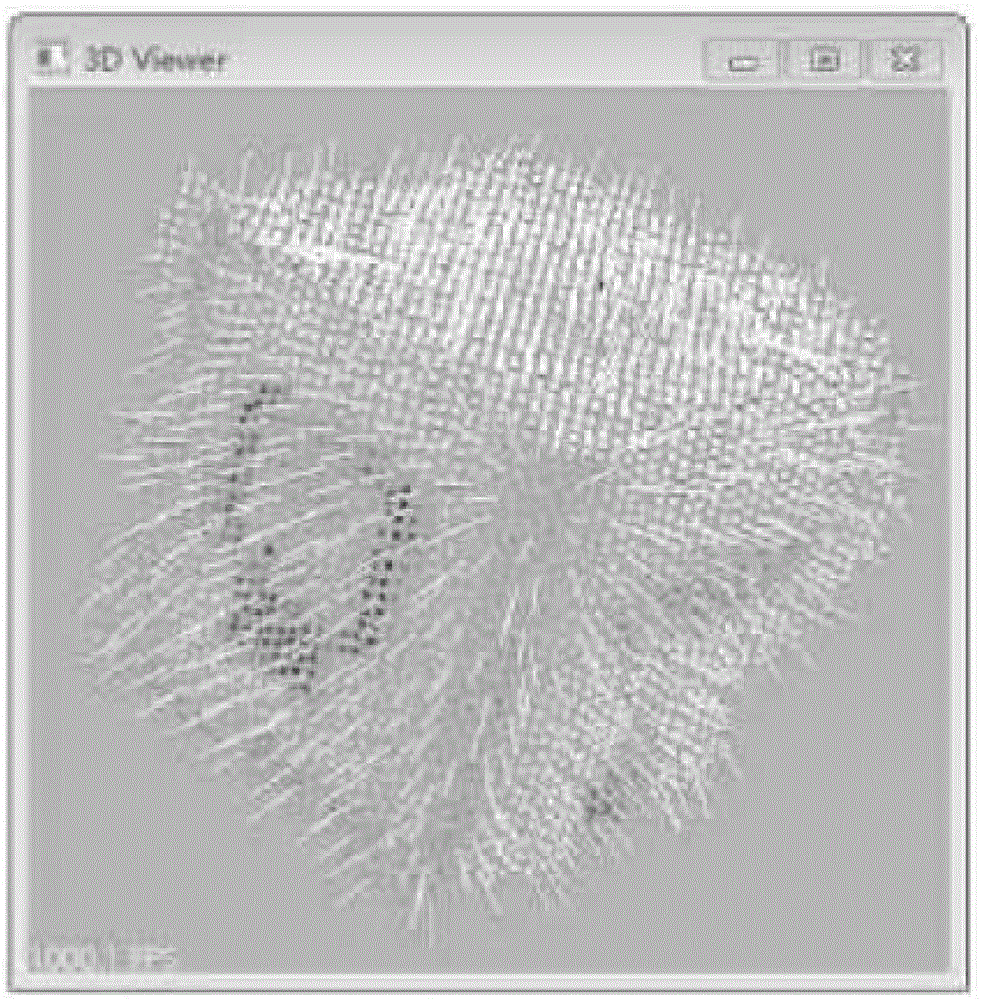

[0052] Step 1. For the object to be grasped and its environment, acquire an RGB-D point cloud of the object and the environment with the Kinect sensor, and filter the point cloud;

[0053] The Kinect sensor is a 3D vision sensor launched by Microsoft in November 2010. It includes a color camera and a depth camera, which directly capture a color image and a depth map of the scene, from which the point cloud of the scene is generated. However, the point cloud generated by the Kinect contains the points of all objects in the scene; it is very large and its features are complex, so processing it takes considerable machine time and complicates the subsequent steps. It is therefore necessary to preprocess the obtained point cloud: extract the point cloud of the object from the scene point cloud, and perform f...
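The generation of a point cloud from a depth map can be illustrated with a minimal sketch. It assumes a standard pinhole camera model; the function name `depth_to_points` and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are hypothetical values chosen for illustration and are not taken from the patent or from any Kinect calibration.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (list of rows, depth in metres) to 3D points.

    Assumes a pinhole model. Pixels with depth <= 0 are treated as invalid
    and skipped.
    """
    points = []
    for v, row in enumerate(depth):        # v: pixel row index
        for u, z in enumerate(row):        # u: pixel column index
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth map and intrinsics, for illustration only.
depth = [[1.0, 0.0],
         [2.0, 2.0]]
cloud = depth_to_points(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

Every valid pixel yields one point, which is why the raw cloud covers the whole scene and grows with image resolution.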

Specific Embodiment 2

[0098] In this embodiment, the specific steps for filtering the point cloud described in step 1 are as follows:

[0099] Step 1.1, use the radius outlier removal filter (RadiusOutlierRemoval filter) to remove outliers;

[0100] A small number of outliers caused by noise can be removed with the radius outlier removal filter provided by the PCL library. The filtering process is as follows: assuming that point A is the point to be passed through the filter, first use a k-d tree search to count the total number of points inside the ball centered at A with radius r. When this number is less than the threshold n, A is considered an outlier;
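The counting rule described above can be sketched in a few lines. This is not the PCL implementation: it replaces the k-d tree search with a brute-force distance check to stay self-contained, and it assumes that point A itself is not counted among its neighbors (the source does not say which convention is used). The function name `radius_outlier_removal` is hypothetical.

```python
import math


def radius_outlier_removal(points, r, n):
    """Keep points that have at least n other points within distance r.

    Brute-force O(N^2) neighbor counting; a k-d tree would replace the
    inner loop in a practical implementation.
    Assumption: the query point is not counted as its own neighbor.
    """
    kept = []
    for i, a in enumerate(points):
        count = 0
        for j, b in enumerate(points):
            if i != j and math.dist(a, b) <= r:
                count += 1
        if count >= n:          # fewer than n neighbors means outlier
            kept.append(a)
    return kept


# Three clustered points and one isolated point (illustrative data).
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (5.0, 5.0, 5.0)]
filtered = radius_outlier_removal(points, r=0.5, n=2)
```

With these values the isolated point at (5, 5, 5) has no neighbors within the radius and is dropped, while the three clustered points each have two neighbors and are kept.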

[0101] Step 1.2, use an average filter to make the surface of the object smoother.

[0102] The influence of white noise can be reduced with the average filter. The filtering process is as follows: assuming that point A is the point to be filtered, first ...
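The description of the averaging step is cut off in the source, so the exact neighborhood used there is not known. As a rough illustration only, one common form of average filtering replaces each point with the mean of all points within a radius r of it. The function name `mean_filter` and the radius-based neighborhood are assumptions, not the method of the patent.

```python
import math


def mean_filter(points, r):
    """Replace each point with the mean of the points within distance r.

    Assumption: the neighborhood is a ball of radius r and includes the
    point itself, so it is never empty.
    """
    smoothed = []
    for a in points:
        nbrs = [b for b in points if math.dist(a, b) <= r]
        k = len(nbrs)
        smoothed.append(tuple(sum(c[d] for c in nbrs) / k for d in range(3)))
    return smoothed


# A flat row of points with one noisy z value in the middle (illustrative).
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.3), (2.0, 0.0, 0.0)]
smoothed = mean_filter(points, r=1.1)
```

In this example the z value of the middle point is pulled from 0.3 toward its neighbors, which is the smoothing effect the step aims for.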

Specific Embodiment 3

[0104] In this embodiment, the specific steps for establishing the grasp planning scheme based on Gaussian process classification described in step 3 are as follows:

[0105] After the above features are obtained, the grasping scheme can be obtained through supervised machine learning. The reasons for using a Gaussian process classifier to obtain the grasping scheme are: 1) the errors between the actual features and the ideal features are generated by noise, so they follow a Gaussian distribution and can therefore be learned by a Gaussian process; 2) compared with support vector machines and neural networks, a Gaussian process classifier is simpler to construct: only its kernel function and mean function need to be determined, and it uses fewer parameters, which makes parameter optimization easier and the parameters easier to converge; 3) a Gaussian process classifier can not only g...
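To illustrate point 2), a Gaussian process prior is fully specified by a mean function and a kernel function. The sketch below assumes a zero mean and a squared-exponential (RBF) kernel with two hyperparameters, and a logistic link to turn a latent value into a class probability. The source does not state which kernel, mean, or link the patent uses, so these choices and all names (`zero_mean`, `rbf_kernel`, `gram_matrix`, `sigmoid`) are assumptions. The sketch covers only the prior specification, not training or inference.

```python
import math


def zero_mean(x):
    """Assumed mean function: zero everywhere."""
    return 0.0


def rbf_kernel(x1, x2, length_scale=1.0, signal_var=1.0):
    """Assumed squared-exponential kernel with two hyperparameters."""
    sq = sum((a - b) ** 2 for a, b in zip(x1, x2))
    return signal_var * math.exp(-0.5 * sq / length_scale ** 2)


def gram_matrix(X, kernel):
    """Covariance matrix of the latent function over the inputs X."""
    return [[kernel(xi, xj) for xj in X] for xi in X]


def sigmoid(f):
    """Logistic link: maps a latent value to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-f))


# Hypothetical 2D feature vectors, for illustration only.
X = [(0.0, 0.0), (1.0, 0.0), (3.0, 4.0)]
K = gram_matrix(X, rbf_kernel)
prior_mean = [zero_mean(x) for x in X]
```

Under these assumptions the only quantities to tune are `length_scale` and `signal_var`, which is the sense in which few parameters are involved.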
