Motion characteristics extraction method and device

A feature extraction and action technology, applied in the field of pattern recognition, that addresses the lack of action behavior patterns, low action recognition rates, and poor robustness. It avoids the instability and inaccuracy of depth information, improves accuracy and robustness, and gives a good representation effect.



Examples

Embodiment 1

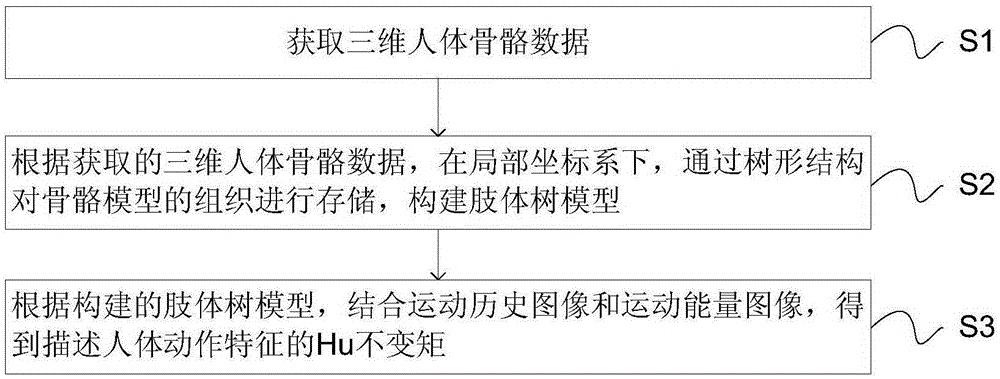

[0049] As shown in Figure 1, an action feature extraction method provided in an embodiment of the present invention includes:

[0050] S1: acquire three-dimensional human skeleton data;

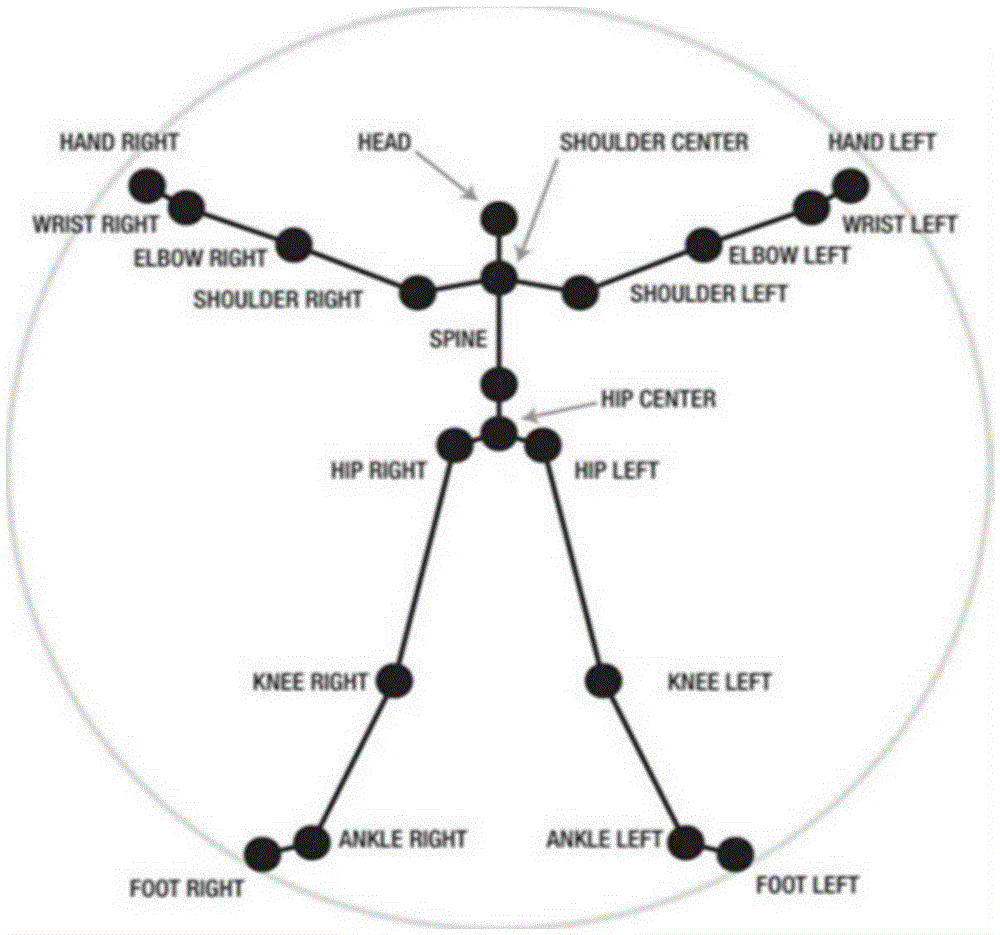

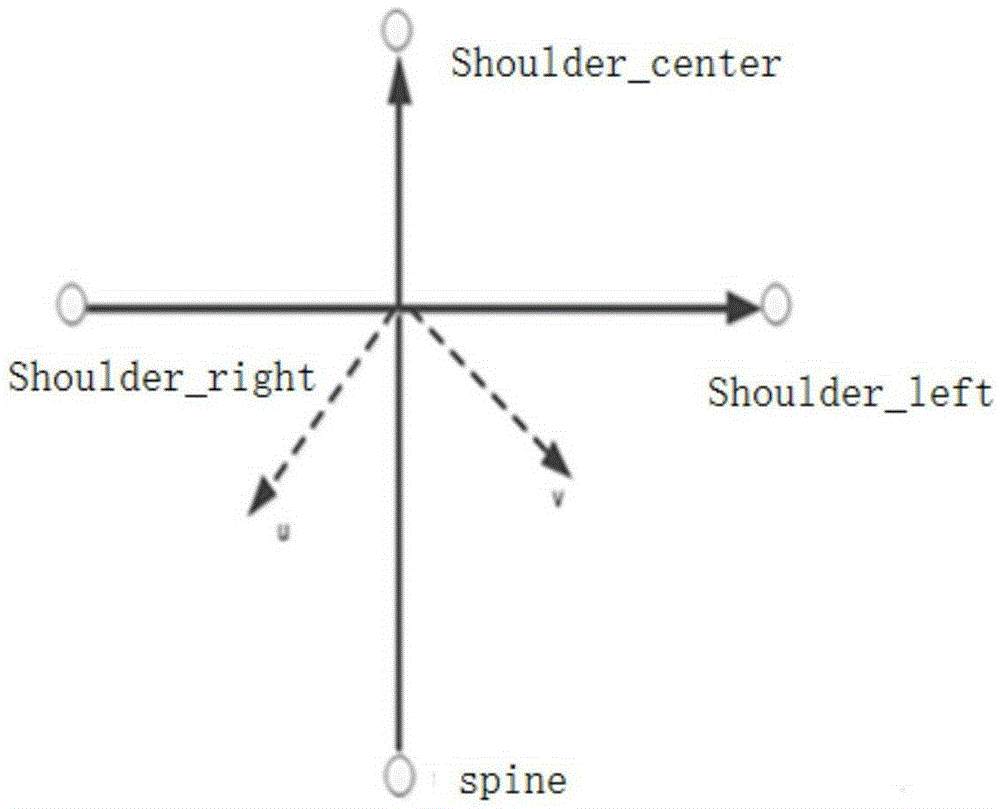

[0051] S2: based on the acquired three-dimensional human skeleton data, store the organization of the skeleton model as a tree structure in a local coordinate system, and construct a limb tree model;
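The visible text does not say how the limb tree is stored or which local coordinate system is used. The sketch below assumes a parent-index joint hierarchy with hypothetical joint names, and takes "local coordinates" to mean each joint's offset from its parent joint (translation only, no joint rotations). `LimbNode`, `build_limb_tree`, and `to_local` are illustrative names, not taken from the patent.

```python
from dataclasses import dataclass, field
import numpy as np

@dataclass
class LimbNode:
    name: str
    joint: int                                    # row index in the (J, 3) joint array
    children: list = field(default_factory=list)

def build_limb_tree(parents, names):
    """Build the tree from a parent-index list; the root has parent -1."""
    nodes = [LimbNode(name, i) for i, name in enumerate(names)]
    root = None
    for i, p in enumerate(parents):
        if p < 0:
            root = nodes[i]
        else:
            nodes[p].children.append(nodes[i])
    if root is None:
        raise ValueError("no root joint (parent index -1) found")
    return root

def to_local(root, frame):
    """Offset of every joint from its parent (assumed local frame); root -> origin."""
    frame = np.asarray(frame, dtype=float)
    local = np.zeros_like(frame)
    stack = [(root, None)]
    while stack:                                  # iterative depth-first traversal
        node, parent = stack.pop()
        if parent is not None:
            local[node.joint] = frame[node.joint] - frame[parent.joint]
        stack.extend((child, node) for child in node.children)
    return local

# Hypothetical 5-joint skeleton: hip -> spine -> {head, left hand, right hand}
names = ["hip", "spine", "head", "l_hand", "r_hand"]
parents = [-1, 0, 1, 1, 1]
root = build_limb_tree(parents, names)
frame = [[0, 0, 0], [0, 1, 0], [0, 2, 0], [-1, 1.5, 0], [1, 1.5, 0]]
local = to_local(root, frame)
```

Storing parent-relative offsets rather than absolute positions makes the representation independent of where the subject stands; a fuller model would likely also store per-joint rotations, which the visible text does not specify.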

[0052] S3: based on the constructed limb tree model, and combining motion history images (MHI) with motion energy images (MEI), obtain the Hu invariant moments that describe the characteristics of human motion.
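For reference, the commonly used definitions of the quantities named in S3 are listed below. Here D(x, y, t) is a binary motion mask at frame t, τ is the temporal window, and I(x, y) is the image (MHI or MEI) whose moments are taken. How the patent derives D from the limb tree model is not stated in the visible text, so D is left abstract.

```latex
H_\tau(x,y,t) = \begin{cases} \tau, & D(x,y,t) = 1 \\ \max\bigl(0,\; H_\tau(x,y,t-1) - 1\bigr), & \text{otherwise} \end{cases}
\qquad
E_\tau(x,y,t) = \bigcup_{i=0}^{\tau-1} D(x,y,t-i)

\mu_{pq} = \sum_{x,y} (x-\bar{x})^p (y-\bar{y})^q \, I(x,y), \qquad
\eta_{pq} = \frac{\mu_{pq}}{\mu_{00}^{\,1+(p+q)/2}}

\phi_1 = \eta_{20} + \eta_{02}
\phi_2 = (\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2
\phi_3 = (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2
\phi_4 = (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2
\phi_5 = (\eta_{30} - 3\eta_{12})(\eta_{30} + \eta_{12})\bigl[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\bigr]
       + (3\eta_{21} - \eta_{03})(\eta_{21} + \eta_{03})\bigl[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\bigr]
\phi_6 = (\eta_{20} - \eta_{02})\bigl[(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\bigr]
       + 4\eta_{11}(\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03})
\phi_7 = (3\eta_{21} - \eta_{03})(\eta_{30} + \eta_{12})\bigl[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\bigr]
       - (\eta_{30} - 3\eta_{12})(\eta_{21} + \eta_{03})\bigl[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\bigr]
```

The normalization by μ₀₀ gives scale invariance, the central moments give translation invariance, and the seven combinations φ₁ to φ₇ are additionally invariant to rotation.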

[0053] The action feature extraction method described in this embodiment of the present invention acquires three-dimensional human skeleton data; based on the acquired data, it stores the organization of the skeleton model as a tree structure in a local coordinate system and constructs a limb tree model; the constructed limb tree mo...
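Step S3 can be sketched in NumPy as follows, assuming the motion has already been rendered as a stack of binary masks D(x, y, t). Producing those masks (for example by rasterizing the limb tree's projected limbs) is a hypothetical choice; the visible text does not describe it. The sketch builds the MHI with the decay-by-one update, takes the MEI as the pixels with a non-zero MHI value (equivalent to the union of the last τ masks), and computes the seven standard Hu invariant moments. `motion_images` and `hu_moments` are illustrative names.

```python
import numpy as np

def motion_images(masks, tau=None):
    """masks: (T, H, W) binary motion masks D(x, y, t). Returns (MHI, MEI)."""
    masks = np.asarray(masks, dtype=bool)
    tau = masks.shape[0] if tau is None else tau
    mhi = np.zeros(masks.shape[1:], dtype=float)
    for d in masks:
        # Moving pixels are set to tau; all others decay by one, floored at zero.
        mhi = np.where(d, float(tau), np.maximum(mhi - 1.0, 0.0))
    mei = mhi > 0              # pixels that moved within the last tau frames
    return mhi, mei

def hu_moments(img):
    """Seven Hu invariant moments of a 2-D (possibly weighted) image."""
    img = np.asarray(img, dtype=float)
    ys, xs = np.mgrid[:img.shape[0], :img.shape[1]]
    m00 = img.sum()
    if m00 == 0:
        raise ValueError("empty image has no moments")
    xc, yc = (xs * img).sum() / m00, (ys * img).sum() / m00

    def eta(p, q):
        # Normalized central moment: mu_pq / mu_00^(1 + (p + q) / 2), mu_00 == m00.
        mu = ((xs - xc) ** p * (ys - yc) ** q * img).sum()
        return mu / m00 ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03
    return np.array([
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        a ** 2 + b ** 2,
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2) + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2) - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2),
    ])

# Tiny example: one pixel moves at frame 0, another at frame 2.
masks = np.zeros((3, 4, 4), dtype=bool)
masks[0, 0, 0] = True
masks[2, 1, 1] = True
mhi, mei = motion_images(masks)          # tau defaults to the sequence length (3)

# Hu moments are unchanged by translating or rotating the shape.
rect = np.zeros((20, 20))
rect[2:6, 3:9] = 1.0
shifted = np.roll(rect, (8, 5), axis=(0, 1))
```

Taking moments of the MHI weights pixels by how recently they moved, while the MEI records only where motion occurred; concatenating the two 7-vectors is one plausible way to form a feature, though the patent's exact combination is not shown in the visible text.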

Embodiment 2

[0097] The present invention also provides a specific implementation of an action feature extraction device. Because this device corresponds to the specific implementation of the action feature extraction method described above, it can carry out that method, and the purpose of the present invention is achieved through the process steps described above. The explanations given for the specific implementation of the method therefore also apply to the specific implementation of the device and are not repeated in the following embodiments.

[0098] As shown in Figure 6, an embodiment of the present invention also provides an action feature extraction device, including:

[0099] Obtaining module 101: used to obtain three-dimensional human skeleton data;

[0100] Con...
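Because the device mirrors the method step for step, its module structure can be sketched as a small composition of callables. Only obtaining module 101 is named in the visible text; the other two field names are hypothetical placeholders for the truncated module list, and the stub lambdas in the usage lines stand in for real S1 to S3 implementations.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class ActionFeatureExtractionDevice:
    """Hypothetical module composition mirroring method steps S1 to S3."""
    obtain: Callable[[], Any]               # obtaining module 101: returns skeleton data (S1)
    build_limb_tree: Callable[[Any], Any]   # hypothetical name: limb tree construction (S2)
    extract_features: Callable[[Any], Any]  # hypothetical name: MHI/MEI + Hu moments (S3)

    def run(self) -> Any:
        # Each module consumes the previous module's output, as in the method.
        skeleton = self.obtain()
        tree = self.build_limb_tree(skeleton)
        return self.extract_features(tree)

# Usage with stub modules (placeholders, not real processing):
device = ActionFeatureExtractionDevice(
    obtain=lambda: [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
    build_limb_tree=lambda joints: {"root": 0, "joints": joints},
    extract_features=lambda tree: len(tree["joints"]),
)
features = device.run()
```

Injecting the modules as callables keeps each one independently replaceable and testable, which matches the one-module-per-step decomposition described in [0097].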

