Image Visual Salient Region Detection Method Based on Deep Autoencoder Reconstruction

The method applies a deep autoencoder to visual saliency detection in the field of image processing. It addresses two problems: the reduced accuracy of detected salient regions and the difficulty of highlighting those regions. It achieves good generality, scalability, and efficient detection.


Embodiment Construction

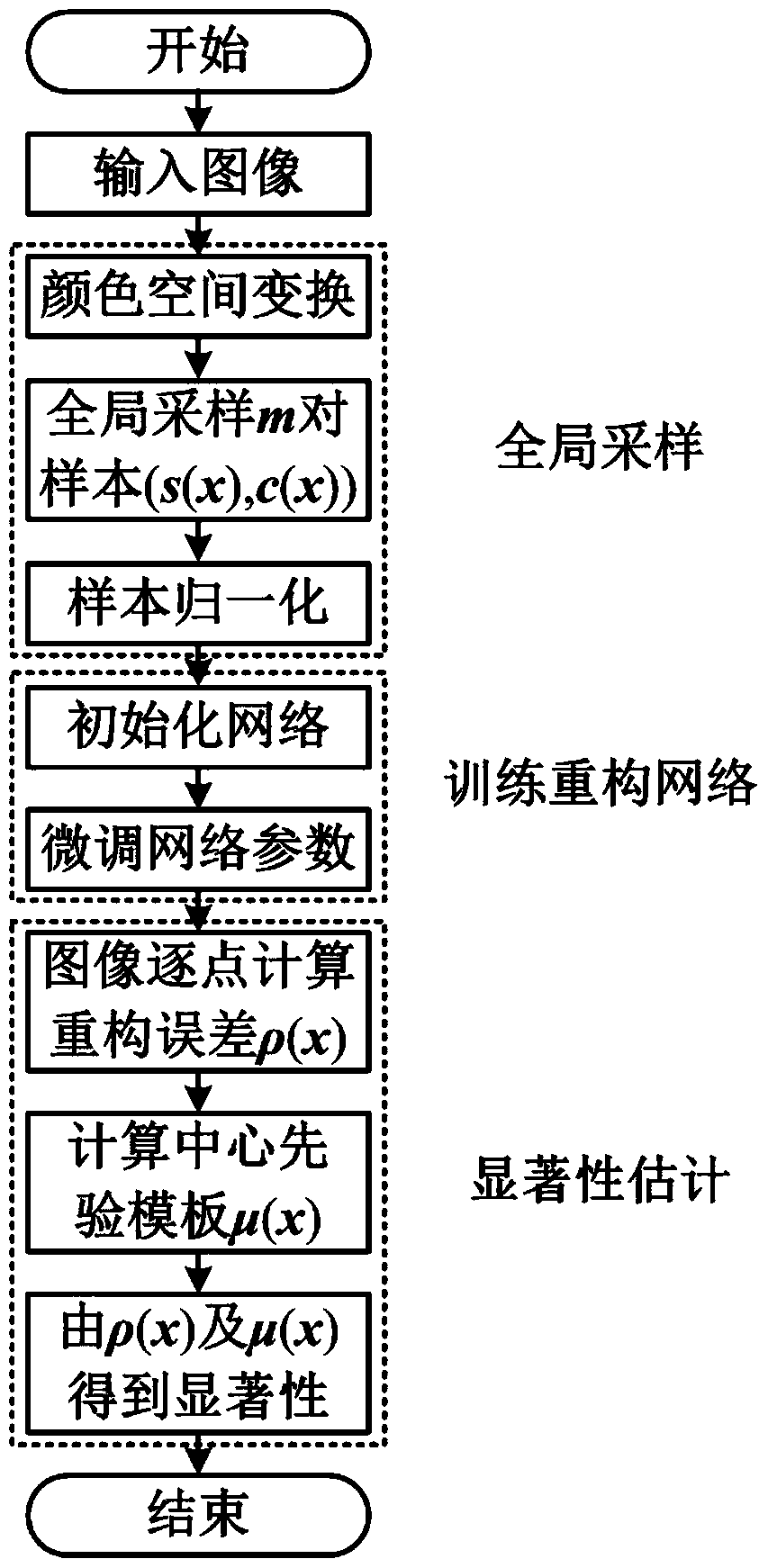

[0021] Referring to Figure 1, the specific implementation steps of the present invention are as follows:

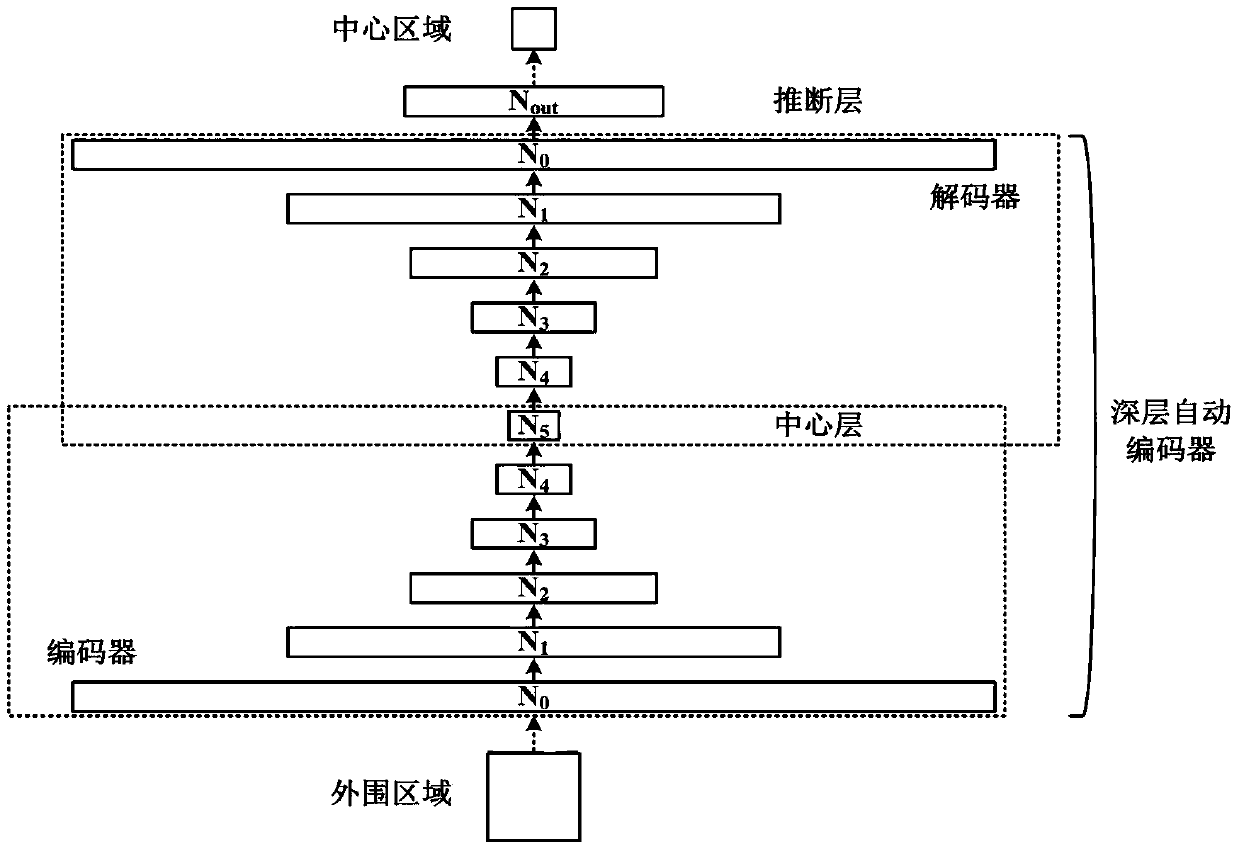

[0022] Step 1: Build a center-periphery reconstruction network.

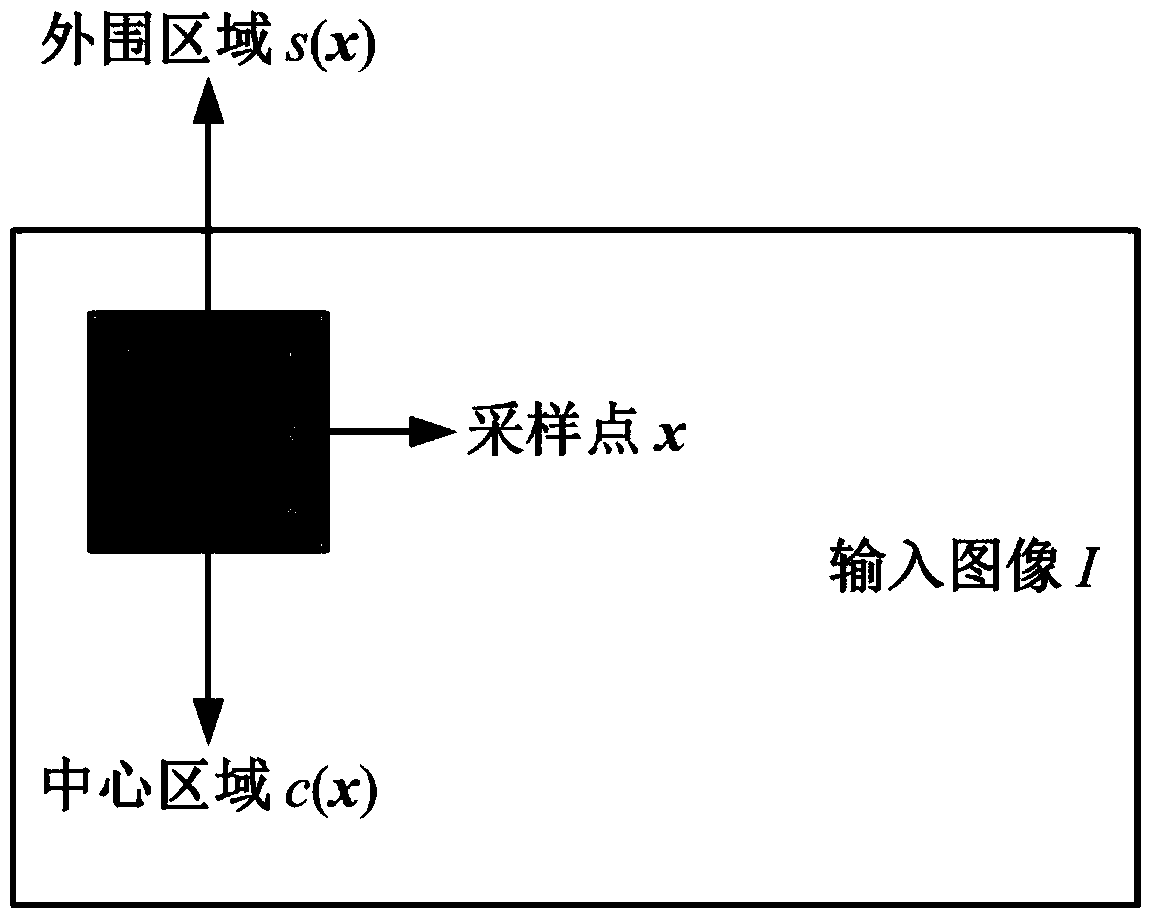

[0023] Referring to Figure 2, the deep reconstruction network established by the present invention mainly comprises three parts: an encoding module, a decoding module, and an inference layer. The encoding module consists of L layers of neurons. The size of its input layer, N_0, is determined by the dimension of the peripheral block s(x); in the example scheme, the other layers contain 256, 128, 64, 32, and 8 neurons, respectively. The structure of the decoding module is symmetrical to that of the encoding module. The inference layer is located above the decoding module, and the number of neurons it contains, N_out, is determined by the dimension of the center vector c(x) of the sampling point x; in the example scheme, N_out is 147. The encoding module and the decoding module together constitute an autoencoder network, an...
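The layer layout described in [0023] can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the patent's implementation: the helper names (`layer_sizes`, `init_weights`, `forward`), the sigmoid activation, the uniform weight initialization, and the input size passed as `n0` are all assumptions. Only the hidden widths 256, 128, 64, 32, 8, the mirrored decoder, and the 147-unit inference layer come from the text.

```python
import math
import random

ENCODER_HIDDEN = [256, 128, 64, 32, 8]  # hidden widths from the example scheme
N_OUT = 147                             # inference-layer width from the example scheme


def layer_sizes(n0, hidden=ENCODER_HIDDEN, n_out=N_OUT):
    """Return the width of every layer: encoder, mirrored decoder, inference layer.

    n0 is the dimension of the peripheral block s(x); its value here is an assumption.
    """
    encoder = [n0] + list(hidden)
    decoder = encoder[::-1][1:]  # mirror the encoder, without repeating the bottleneck
    return encoder + decoder + [n_out]


def init_weights(sizes, seed=0):
    """Create (weights, biases) per layer; the initialization scheme is an assumption."""
    rng = random.Random(seed)
    layers = []
    for fan_in, fan_out in zip(sizes, sizes[1:]):
        scale = 1.0 / math.sqrt(fan_in)
        w = [[rng.uniform(-scale, scale) for _ in range(fan_in)]
             for _ in range(fan_out)]
        b = [0.0] * fan_out
        layers.append((w, b))
    return layers


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(layers, s):
    """Map a peripheral vector s(x) to an estimate of the center vector c(x).

    Sigmoid units are assumed throughout; the text does not name the activation.
    """
    a = list(s)
    for w, b in layers:
        a = [sigmoid(sum(wi * ai for wi, ai in zip(row, a)) + bj)
             for row, bj in zip(w, b)]
    return a
```

For example, `layer_sizes(12)` lists the widths for a hypothetical 12-dimensional peripheral block, and `forward(init_weights(layer_sizes(12)), [0.5] * 12)` returns a 147-element vector. The weights here are untrained, so the output only demonstrates the shapes involved.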
