Video abstract key frame extraction method based on abstract space feature learning

A video summarization and key frame extraction technology, applied in the fields of instruments, character and pattern recognition, electrical components, etc. It addresses problems such as the poor quality of extracted key frames.

Embodiment Construction

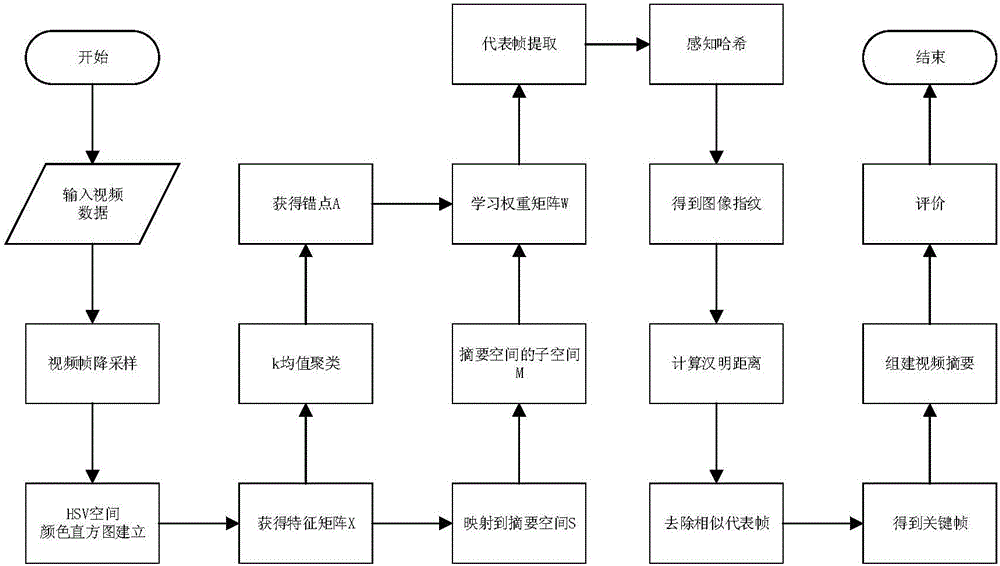

[0039] Referring to Figures 1-2, the specific steps of the video summary key frame extraction method based on abstract space feature learning of the present invention are as follows:

[0040] Step 1, video data preprocessing.

[0041] To reduce the redundancy of the video data, the video frames are first sampled uniformly: one frame is taken every second for analysis. A color histogram in HSV space is then built for each sampled frame, with the H channel divided into 16 equal bins and the S and V channels each divided into 4 equal bins; the statistics of the three channels are normalized to obtain the feature vector of each frame. Finally, the feature matrix of the video, $X=\{x_1,x_2,\ldots,x_n\}$, is obtained and used as the input data, where $n$ is the number of video frames after uniform sampling and $x_i$ is the feature vector of the $i$-th sampled frame.
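The preprocessing in Step 1 can be sketched as follows. This is a minimal illustration, not the patented implementation. It assumes that decoded frames are RGB arrays with values in [0, 1] and that the frame rate `fps` is known. It also assumes that the three channel histograms (16 + 4 + 4 bins) are normalized separately and concatenated into a 24-dimensional vector; the text does not say whether a concatenated or a joint 16×4×4 histogram is meant. The helper names `sample_one_per_second`, `rgb_to_hsv`, `hsv_histogram` and `build_feature_matrix` are hypothetical.

```python
import numpy as np


def sample_one_per_second(num_frames, fps):
    """Indices of one frame per second of video (hypothetical helper)."""
    idx = np.floor(np.arange(0, num_frames, fps)).astype(int)
    # Clip guards against float rounding at the last sample.
    return np.unique(np.clip(idx, 0, num_frames - 1))


def rgb_to_hsv(rgb):
    """Vectorized RGB -> HSV; input and output channels lie in [0, 1]."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    maxc = rgb.max(axis=-1)
    delta = maxc - rgb.min(axis=-1)
    v = maxc
    s = np.where(maxc > 0, delta / np.where(maxc > 0, maxc, 1.0), 0.0)
    safe = np.where(delta > 0, delta, 1.0)  # avoid division by zero for grays
    rc = maxc == r
    gc = (maxc == g) & ~rc
    h = np.where(rc, ((g - b) / safe) % 6.0,
                 np.where(gc, (b - r) / safe + 2.0, (r - g) / safe + 4.0))
    h = np.where(delta > 0, h / 6.0, 0.0)  # hue scaled to [0, 1]
    return np.stack([h, s, v], axis=-1)


def hsv_histogram(hsv, bins=(16, 4, 4)):
    """Per-channel normalized histograms, concatenated (assumed layout)."""
    feats = []
    for c, nb in enumerate(bins):
        chan = hsv[..., c].ravel()
        # Equal-width bins on [0, 1]; a value of exactly 1.0 goes to the last bin.
        idx = np.minimum((chan * nb).astype(int), nb - 1)
        hist = np.bincount(idx, minlength=nb).astype(float)
        feats.append(hist / hist.sum())  # each channel block sums to 1
    return np.concatenate(feats)


def build_feature_matrix(frames, fps):
    """Feature matrix X with one row x_i per sampled frame, shape (n, 24)."""
    idx = sample_one_per_second(len(frames), fps)
    return np.stack([hsv_histogram(rgb_to_hsv(frames[i])) for i in idx])


if __name__ == "__main__":
    # Synthetic 4-second clip at 25 fps with 8x8 RGB frames.
    frames = np.random.default_rng(0).random((100, 8, 8, 3))
    X = build_feature_matrix(frames, fps=25)
    print(X.shape)  # (4, 24)
```

Separate per-channel histograms keep the vector short (24 values instead of 256). The cost is that joint color co-occurrence information is lost. If the joint reading is intended, `hsv_histogram` would instead need to bin (H, S, V) triples together.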

[0042] Step 2, the video data is mapped to a h...
