Two-dimensional face key feature point positioning method and system

This technology relates to the field of two-dimensional face key feature point positioning methods and systems. It addresses problems such as the low accuracy of face key feature point positioning and the inability to provide a suitable face key feature point positioning method.


Examples

Embodiment 1

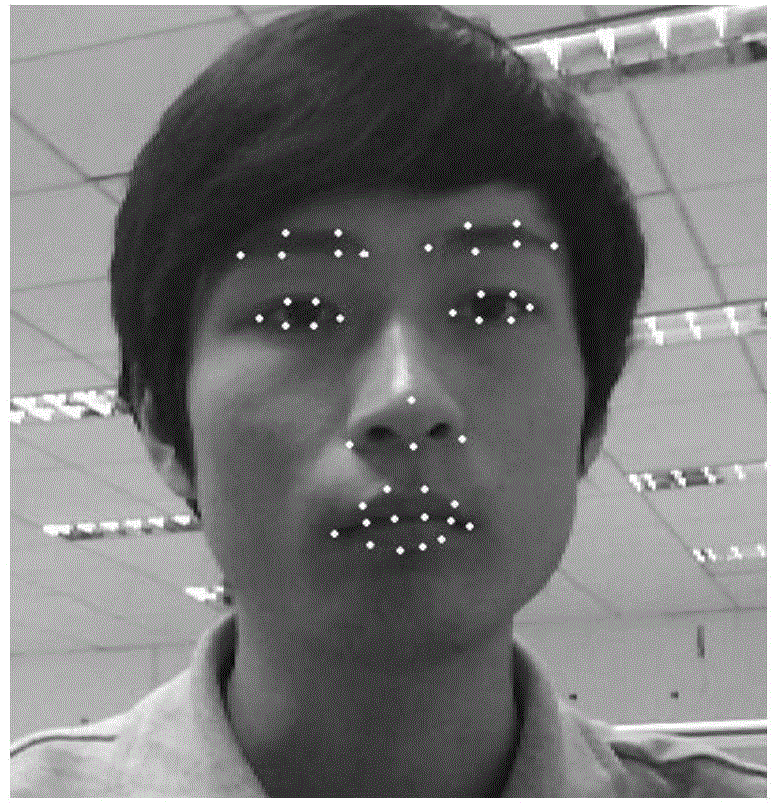

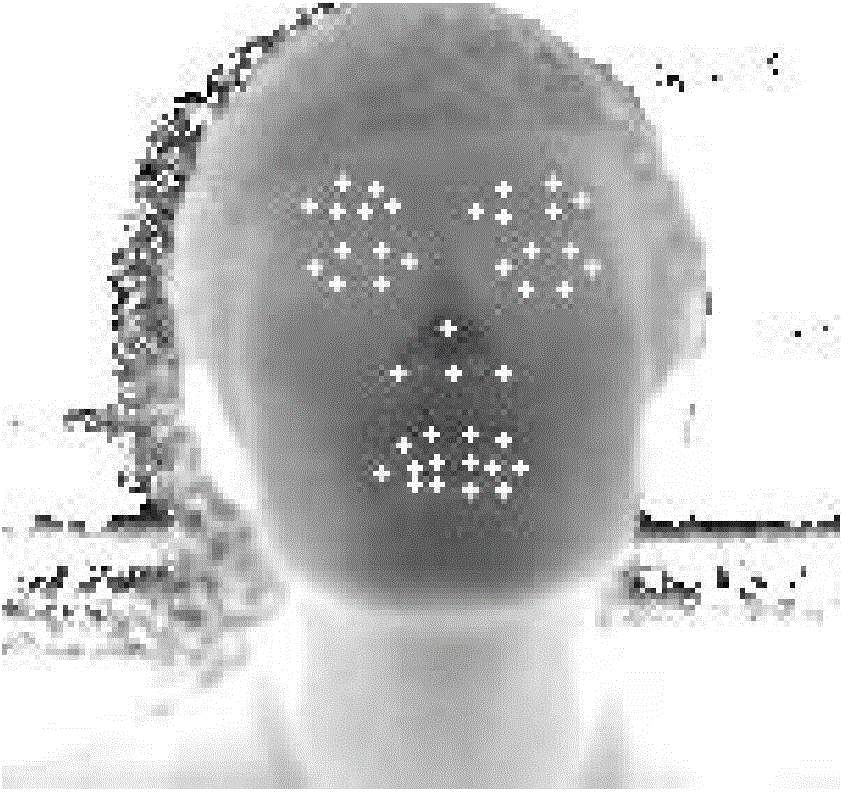

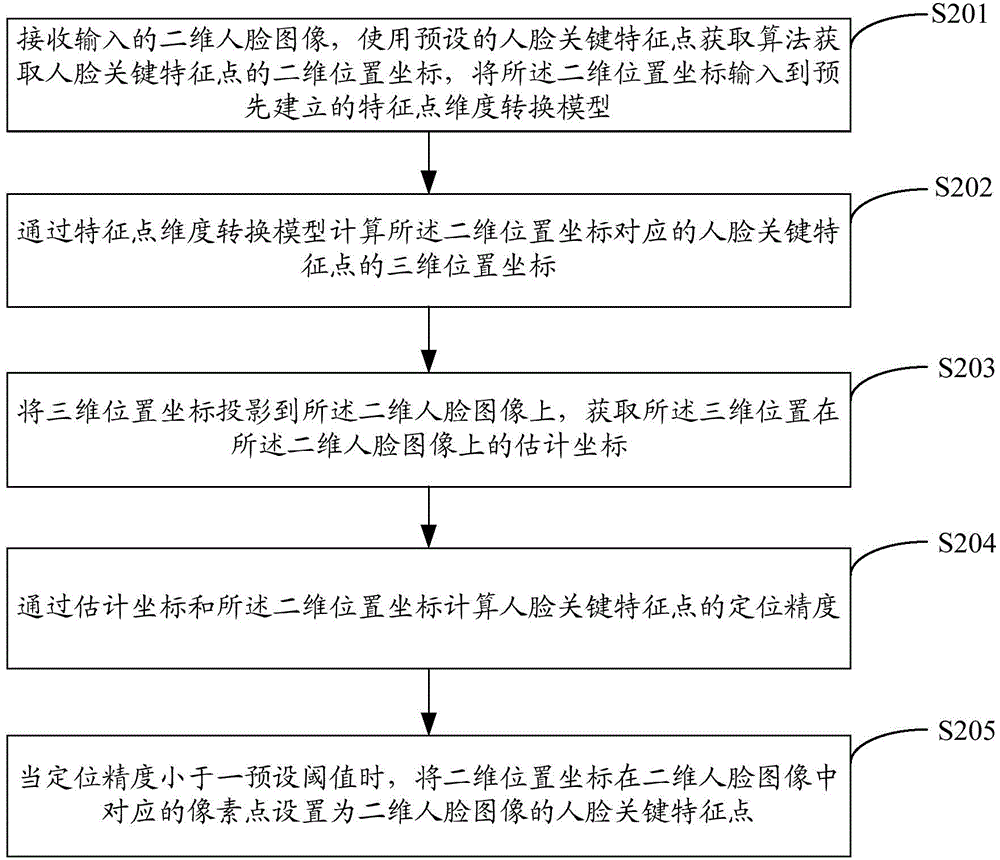

[0029] Figure 2 shows the implementation process of the two-dimensional face key feature point positioning method provided by Embodiment 1 of the present invention. The details are as follows:

[0030] In step S201, the input two-dimensional face image is received, the two-dimensional position coordinates of the key feature points of the face are obtained using the preset key feature point acquisition algorithm, and the two-dimensional position coordinates are input into the pre-established feature point dimension conversion model.

[0031] In this embodiment of the present invention, the two-dimensional position coordinates of the key feature points of the face, that is, their initial two-dimensional position coordinates, are first obtained using the preset face key feature point acquisition algorithm, where the face key feature point acquisition algorithm can be an active shape model (Active Shape Mod...
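The acquisition step names the active shape model as one possible algorithm (the rest of the sentence is cut off). As a minimal sketch, assuming the training landmarks are already aligned and flattened as [x1, y1, x2, y2, ...], the Python below shows the point distribution model at the core of an ASM: PCA over training shapes, then clamping each shape parameter to ±3 standard deviations so a candidate shape stays plausible. The iterative local search along landmark profiles that a full ASM also performs is omitted, and the function names `fit_shape_model` and `constrain_shape` are hypothetical.

```python
import numpy as np


def fit_shape_model(shapes: np.ndarray, var_kept: float = 0.98):
    """Fit a point distribution model (PCA over aligned landmark shapes).

    shapes: (num_samples, num_points * 2) array, each row [x1, y1, x2, y2, ...].
    Returns the mean shape, the retained principal modes (as columns) and
    their variances (eigenvalues).
    """
    mean = shapes.mean(axis=0)
    centered = shapes - mean
    cov = centered.T @ centered / (len(shapes) - 1)
    # eigh returns eigenvalues in ascending order; sort them descending.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]
    eigvals = np.clip(eigvals[order], 0.0, None)
    eigvecs = eigvecs[:, order]
    # Keep the fewest modes that explain at least var_kept of the variance.
    cumulative = np.cumsum(eigvals) / eigvals.sum()
    k = min(int(np.searchsorted(cumulative, var_kept)) + 1, len(eigvals))
    return mean, eigvecs[:, :k], eigvals[:k]


def constrain_shape(shape, mean, modes, variances, limit: float = 3.0):
    """Project a candidate shape onto the model and clamp every shape
    parameter to +/- limit standard deviations (the ASM plausibility step)."""
    b = modes.T @ (shape - mean)
    bound = limit * np.sqrt(variances)
    b = np.clip(b, -bound, bound)
    return mean + modes @ b
```

Clamping in parameter space rather than in pixel space is what lets an ASM reject implausible face shapes while still allowing the natural variation seen in training.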

Embodiment 2

[0043] Figure 3 shows the implementation flow of the two-dimensional face key feature point positioning method provided by Embodiment 2 of the present invention. The details are as follows:

[0044] In step S301, the input two-dimensional face image is received, the two-dimensional position coordinates of the key feature points of the face are obtained using the preset key feature point acquisition algorithm, and the two-dimensional position coordinates are input into the pre-established feature point dimension conversion model.

[0045] In step S302, the three-dimensional position coordinates of the key feature points of the face corresponding to the two-dimensional position coordinates are calculated through the feature point dimension conversion model.
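The source does not describe the internals of the feature point dimension conversion model. Purely as an illustration, the sketch below assumes it is an affine map learned by ridge-regularised least squares from paired 2-D/3-D landmark training data. The class name `LinearDimensionConversionModel` and its `fit`/`predict` methods are hypothetical, and the patented model may be entirely different.

```python
import numpy as np


class LinearDimensionConversionModel:
    """Hypothetical 2-D -> 3-D landmark model (an assumption, not the patent's).

    Learns an affine map from flattened 2-D landmarks (N*2 values) to
    flattened 3-D landmarks (N*3 values): W = (X^T X + ridge*I)^-1 X^T Y.
    """

    def __init__(self, ridge: float = 1e-6):
        self.ridge = ridge
        self.weights = None  # shape (N*2 + 1, N*3) once fitted

    @staticmethod
    def _augment(x2d: np.ndarray) -> np.ndarray:
        # Append a constant 1 so the map can include a translation term.
        return np.hstack([x2d, np.ones((x2d.shape[0], 1))])

    def fit(self, coords2d: np.ndarray, coords3d: np.ndarray):
        """coords2d: (S, N, 2), coords3d: (S, N, 3) paired training samples."""
        x = self._augment(coords2d.reshape(len(coords2d), -1))
        y = coords3d.reshape(len(coords3d), -1)
        gram = x.T @ x + self.ridge * np.eye(x.shape[1])
        self.weights = np.linalg.solve(gram, x.T @ y)
        return self

    def predict(self, coords2d: np.ndarray) -> np.ndarray:
        """coords2d: (N, 2) landmarks of one face; returns (N, 3)."""
        x = self._augment(coords2d.reshape(1, -1))
        return (x @ self.weights).reshape(-1, 3)
```

A linear map is only the simplest choice that makes the data flow of step S302 concrete; a real system would more likely fit a 3-D face model or a nonlinear regressor.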

[0046] In step S303, the three-dimensional position coordinates are projected onto the two-dimensional face image, and the estimated coordinates of the three-dimensional positions on the two-dimensional face image are ...
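Step S303 does not state which camera model is used for the projection. Assuming a standard pinhole camera with intrinsic matrix K, rotation R and translation t (all hypothetical inputs here), projecting the 3-D key feature points back onto the image can be sketched as follows; `project_points` is an illustrative name.

```python
import numpy as np


def project_points(points3d, camera_matrix, rotation, translation):
    """Pinhole projection of (N, 3) points to (N, 2) pixel coordinates.

    camera_matrix: 3x3 intrinsics K; rotation: 3x3 R; translation: (3,) t.
    Computes u ~ K (R X + t), then divides by depth.
    """
    cam = points3d @ rotation.T + translation   # world -> camera frame
    if np.any(cam[:, 2] <= 0):
        raise ValueError("all points must lie in front of the camera")
    homog = cam @ camera_matrix.T               # apply intrinsics
    return homog[:, :2] / homog[:, 2:3]         # perspective divide
```

How the estimated coordinates are used after projection is cut off in the source, so the sketch stops at the projected pixel coordinates.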

Embodiment 3

[0057] Figure 4 shows the structure of the two-dimensional face key feature point positioning system provided by Embodiment 3 of the present invention. For convenience of description, only the parts related to this embodiment are shown. The system includes:

[0058] The key point two-dimensional coordinate acquisition unit 41 is configured to receive the input two-dimensional face image, obtain the two-dimensional position coordinates of the key feature points of the face using the preset face key feature point acquisition algorithm, and input the two-dimensional position coordinates into the pre-established feature point dimension conversion model;

[0059] The key point three-dimensional coordinate calculation unit 42 is configured to calculate, through the feature point dimension conversion model, the three-dimensional position coordinates of the key feature points of the face corresponding to the two-dimensional position coordinates;

[0...
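The system of Embodiment 3 is a pipeline of functional units. A minimal sketch of how units 41 and 42 could be wired together, assuming the landmark detector and the conversion model are supplied as plain callables. The class names and interfaces below are hypothetical, and the units described after [0059] are omitted because that text is truncated.

```python
from dataclasses import dataclass
from typing import Callable

import numpy as np

# Hypothetical interfaces; the source does not specify them.
LandmarkDetector = Callable[[np.ndarray], np.ndarray]  # image -> (N, 2)
DimensionModel = Callable[[np.ndarray], np.ndarray]    # (N, 2) -> (N, 3)


@dataclass
class KeyPoint2DAcquisitionUnit:
    """Unit 41: obtains 2-D key feature point coordinates from the image."""
    detector: LandmarkDetector

    def __call__(self, image: np.ndarray) -> np.ndarray:
        return np.asarray(self.detector(image), dtype=float)


@dataclass
class KeyPoint3DCalculationUnit:
    """Unit 42: converts 2-D coordinates to 3-D via the conversion model."""
    model: DimensionModel

    def __call__(self, coords2d: np.ndarray) -> np.ndarray:
        return np.asarray(self.model(coords2d), dtype=float)


@dataclass
class FaceKeyPointPositioningSystem:
    """Chains unit 41 into unit 42 and returns both coordinate sets."""
    unit41: KeyPoint2DAcquisitionUnit
    unit42: KeyPoint3DCalculationUnit

    def locate(self, image: np.ndarray):
        coords2d = self.unit41(image)
        coords3d = self.unit42(coords2d)
        return coords2d, coords3d
```

Passing the detector and model in as callables keeps each unit swappable, so an ASM-based detector or a different 2-D to 3-D model can be plugged in without changing the pipeline.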
