Highlight suppression method for coded-light measurement of strongly reflective surfaces based on sparse representation

A sparse-representation technique for strongly reflective surfaces, applied in measurement devices, optical devices, instruments, etc. It addresses the low extraction accuracy of fringe centers, the altered grayscale distribution of diffusely reflected fringes, and fringe blurring, and thereby improves extraction accuracy.

Examples

Specific Embodiment 1

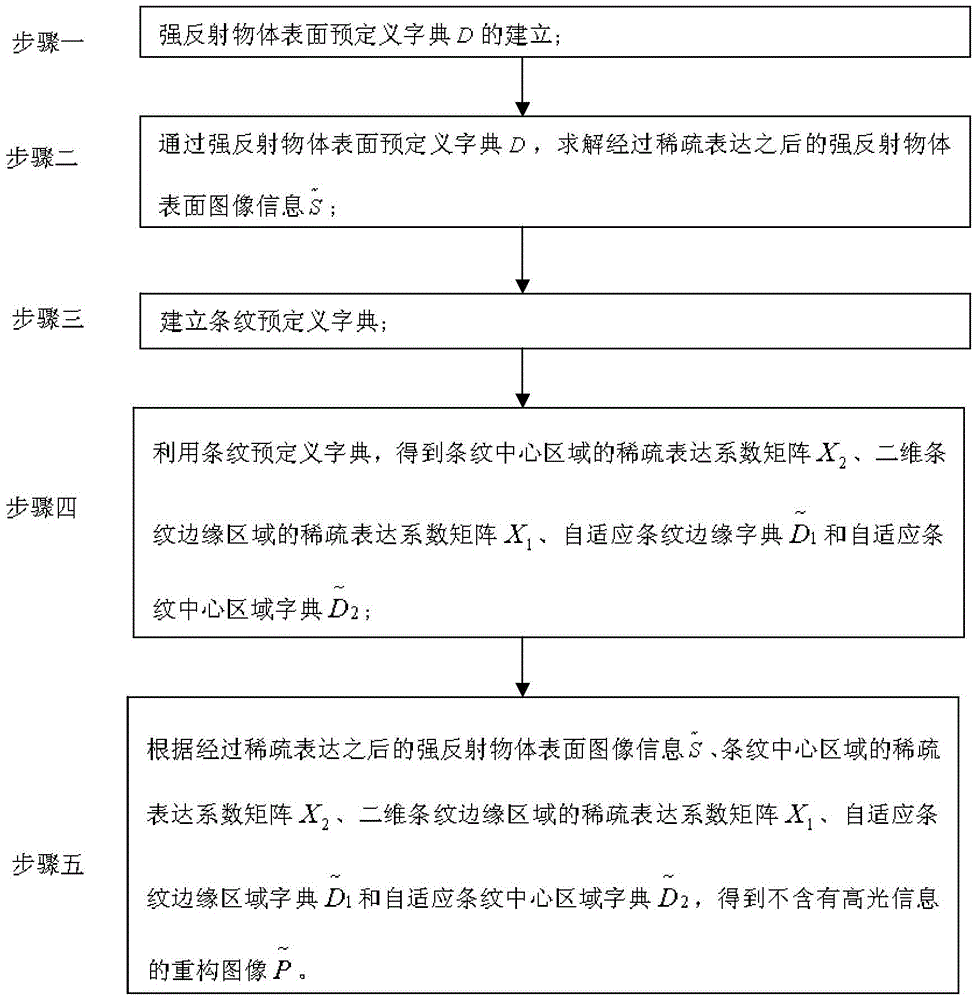

[0026] Specific embodiment 1: This embodiment is described with reference to Figure 1. The highlight suppression method for coded-light measurement of strongly reflective surfaces based on sparse representation is carried out in the following steps:

[0027] Step 1. Establish a predefined dictionary D for the surface of the strongly reflective object. Figures 8, 9, and 10 illustrate the selection of the predefined dictionary for the surface of the object to be measured;

[0028] Step 2. Use the predefined dictionary D for the surface of the strongly reflective object to solve for the image information of that surface after sparse representation. Figure 12 illustrates the process of building the surface information of the object;

[0029] Step 3. Establish a predefined dictionary for the stripes;

[0030] Step 4. Use the stripe predefined dictionary to obtain the sparse representation coefficient matrix X...

Specific Embodiment 2

[0032] Specific embodiment 2: This embodiment differs from specific embodiment 1 in how the predefined dictionary D for the surface of the strongly reflective object is established in Step 1. The specific process is:

[0033] Strongly reflective objects have smooth surfaces and uneven surface brightness. The two-dimensional Gabor function is anisotropic and multi-directional, so it can match the structure and content of the surface image of a strongly reflective object, and it is immune to highlights. The two-dimensional Gabor function is therefore selected as the basis function of the predefined dictionary D for the surface of the strongly reflective object, and this basis function is defined as follows:

[0034] The two-dimensional Gabor function is used as the base function of the predefined dictionary D on the surface...
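The patent's own definition of the basis function is cut off in this excerpt, so the following is only a minimal sketch of how a dictionary of two-dimensional Gabor atoms might be assembled. It assumes the common textbook form of the real Gabor function (a Gaussian envelope multiplied by a cosine carrier); the function names `gabor_atom` and `build_gabor_dictionary` and all parameter values (patch size, wavelengths, orientation count, `sigma`, `gamma`, `psi`) are hypothetical and are not taken from the patent.

```python
import numpy as np

def gabor_atom(half, wavelength, theta, sigma, gamma=1.0, psi=0.0):
    """Return one real 2D Gabor atom on a (2*half+1)^2 patch, flattened, unit L2 norm.

    Assumed textbook form (not the patent's elided definition):
    g = exp(-(x'^2 + gamma^2 * y'^2) / (2 * sigma^2)) * cos(2*pi*x'/wavelength + psi)
    """
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate the coordinate frame by theta to set the atom's orientation.
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2.0 * sigma ** 2))
    carrier = np.cos(2.0 * np.pi * x_r / wavelength + psi)
    atom = (envelope * carrier).ravel()
    return atom / np.linalg.norm(atom)

def build_gabor_dictionary(half=4, n_orientations=8,
                           wavelengths=(3.0, 5.0, 8.0), sigma=2.5):
    """Stack atoms over several orientations and wavelengths.

    Returns an array of shape (n_atoms, patch_pixels), one atom per row,
    so that a patch can be written as coefficients @ dictionary.
    """
    atoms = [
        gabor_atom(half, lam, k * np.pi / n_orientations, sigma)
        for lam in wavelengths
        for k in range(n_orientations)
    ]
    return np.stack(atoms)

# Illustrative parameters only: 3 wavelengths x 8 orientations on 9x9 patches.
D = build_gabor_dictionary()
```

Storing one atom per row keeps the sketch consistent with a row-times-dictionary product of the form S = AD; a column-per-atom layout would work equally well with the product reversed.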

Specific Embodiment 3

[0053] Specific embodiment 3: This embodiment differs from specific embodiment 1 or 2 in how, in Step 2, the predefined dictionary D for the surface of the strongly reflective object is used to solve for the image information of that surface after sparse representation. The specific process is:

[0054] 1) The OMP algorithm is used to match the predefined dictionary D for the surface of the strongly reflective object against the image information of that surface, yielding the coefficient matrix A. The image information S of the surface of the strongly reflective object can then be expressed as:

[0055] S=AD;

[0056] OMP stands for Orthogonal Matching Pursuit, a representative sparse approximation algorithm in the field of signal and image processing. Its basic idea is to select the column that best matches th...
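As a rough illustration of the matching step, here is a minimal NumPy sketch of generic Orthogonal Matching Pursuit for a single signal. It is not the patent's implementation: the function name `omp`, the stopping tolerance, and the one-atom-per-row layout (chosen so the result reads as s ≈ a @ D, mirroring S = AD) are assumptions, and the small orthonormal dictionary in the demo is synthetic.

```python
import numpy as np

def omp(D, s, n_nonzero, tol=1e-10):
    """Generic Orthogonal Matching Pursuit for one signal.

    D: (n_atoms, n_features) dictionary, one unit-norm atom per row (assumed layout).
    s: (n_features,) signal.
    Returns a coefficient vector a with at most n_nonzero nonzeros, s ~= a @ D.
    """
    s = np.asarray(s, dtype=float)
    residual = s.copy()
    support = []              # indices of the atoms selected so far
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        if np.linalg.norm(residual) <= tol:
            break
        # Pick the atom most correlated with the current residual.
        correlations = D @ residual
        if support:
            correlations[support] = 0.0   # never reselect an atom
        support.append(int(np.argmax(np.abs(correlations))))
        # Re-fit all selected atoms jointly by least squares, which makes
        # the new residual orthogonal to every selected atom.
        coef, *_ = np.linalg.lstsq(D[support].T, s, rcond=None)
        residual = s - coef @ D[support]
    a = np.zeros(D.shape[0])
    if support:
        a[support] = coef
    return a

# Synthetic demo: the rows of an orthogonal matrix form an orthonormal dictionary.
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.normal(size=(6, 6)))
D_demo = Q
s_demo = 2.0 * D_demo[1] - 3.0 * D_demo[4]   # a signal built from two atoms
a_demo = omp(D_demo, s_demo, n_nonzero=2)
```

Applying `omp` to each image patch and stacking the resulting vectors as rows would give a coefficient matrix playing the role of A. The joint least-squares re-fit is what distinguishes OMP from plain matching pursuit, which updates only the coefficient of the newly chosen atom.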
