Training method and system for language model

A language model training method and system, applied in the field of language model training. The technology addresses the problems that a language model easily loses the original statistical distribution of big data, that the recognition rate is reduced, and that a large amount of computing resources is required. Its effects are an improved speech recognition rate, a reduced amount of computing resources, and more reasonable parameters.


Embodiment 1

[0052] Refer to Figure 2, which shows a flowchart of the steps of Embodiment 1 of a language model training method of the present invention. The method may specifically include the following steps:

[0053] Step 201: obtain seed corpora in various fields, and train a seed model for each field according to the seed corpus of that field;

[0054] In the embodiment of the present invention, a field refers to the application scenario of the data. Common fields include news, place names, website addresses, people's names, map navigation, chat, short messages, Q&A, Weibo, and so on. In practical applications, the seed corpus for a specific field can be obtained through specialized crawling or through cooperation. The cooperation can be with a website operator, obtaining the corresponding seed corpus from the log files of the website, for example from the log files of a microblog website, and the embodim...
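As a rough illustration of Step 201, the sketch below trains one small model per field from that field's seed corpus. It is not the patent's implementation: the add-alpha smoothed unigram model stands in for whatever language model is actually used, and the names `train_unigram`, `prob`, `seed_corpora`, and the sample sentences are hypothetical.

```python
from collections import Counter

def train_unigram(sentences, alpha=1.0):
    """Train an add-alpha smoothed unigram model (an assumed stand-in model)."""
    counts = Counter(tok for s in sentences for tok in s.split())
    return {
        "counts": counts,
        "total": sum(counts.values()),
        "vocab": len(counts) + 1,  # +1 reserves mass for one unknown token
        "alpha": alpha,
    }

def prob(model, token):
    """Smoothed probability of a token under the model."""
    numerator = model["counts"][token] + model["alpha"]
    denominator = model["total"] + model["alpha"] * model["vocab"]
    return numerator / denominator

# Hypothetical seed corpora, one small list of sentences per field.
seed_corpora = {
    "news": ["the government announced a new policy",
             "the market closed higher today"],
    "navigation": ["turn left at the next intersection",
                   "navigate to the nearest station"],
}

# Step 201: one seed model per field.
seed_models = {field: train_unigram(sents) for field, sents in seed_corpora.items()}
```

Keeping one model per field, rather than a single model over the pooled corpus, is what later allows each field to score the big data corpus separately.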

Embodiment 2

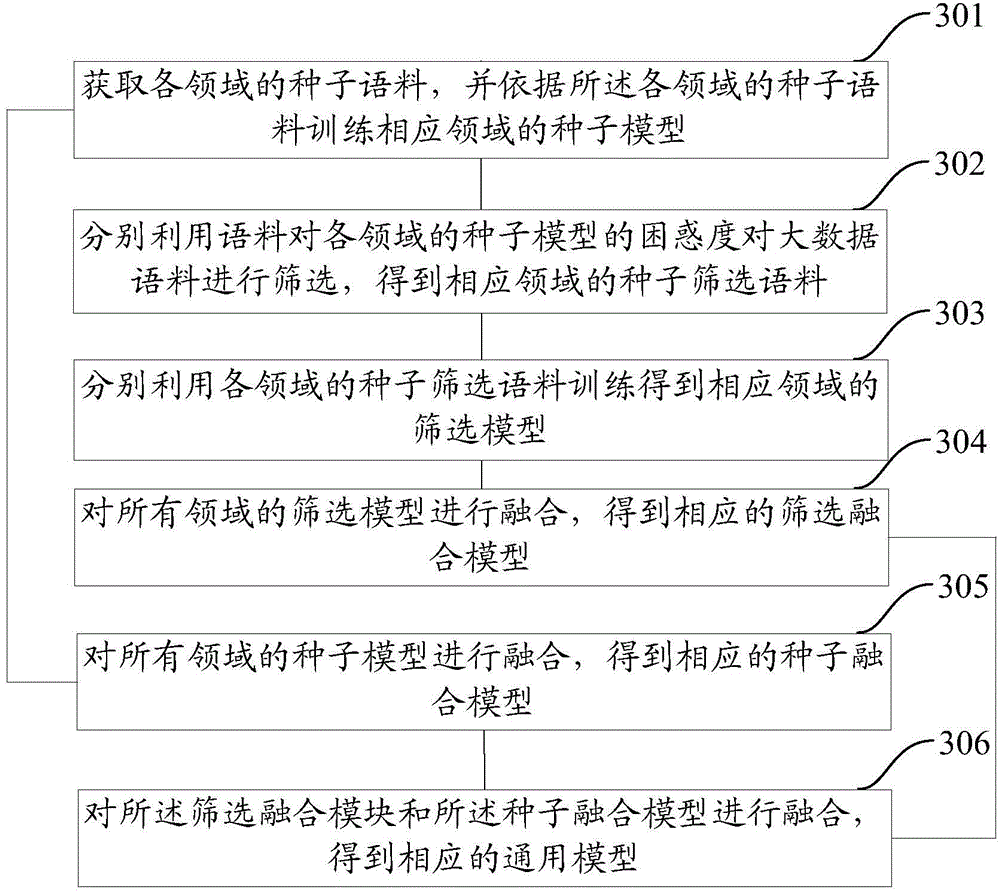

[0102] Refer to Figure 3, which shows a flowchart of the steps of Embodiment 2 of an information search method of the present invention. The method may specifically include the following steps:

[0103] Step 301: obtain seed corpora in each field, and train a seed model for each field according to the seed corpus of that field;

[0104] Step 302: screen the big data corpus using the perplexity of the corpus under the seed model of each field, to obtain the seed screening corpus of the corresponding field;

[0105] Step 303: train on the seed screening corpus of each field to obtain the screening model of the corresponding field;

[0106] Step 304: fuse the screening models of all fields to obtain a corresponding screening fusion model;

[0107] Step 305: fuse the seed models of all fields to obtain a corresponding seed fusion model;

[0108] Step 306: fuse the screening fusion model and the seed fusion model to obtain a corresponding general m...
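The steps above can be sketched end to end as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: a smoothed unigram model stands in for the real language model, "fusing" is assumed to be linear interpolation with equal weights, and the perplexity cutoff `THRESHOLD`, the helper names, and the sample data are all hypothetical.

```python
import math
from collections import Counter
from functools import partial

def train_unigram(sentences, alpha=1.0):
    """Add-alpha smoothed unigram model (assumed stand-in for the real model)."""
    counts = Counter(tok for s in sentences for tok in s.split())
    return {"counts": counts, "total": sum(counts.values()),
            "vocab": len(counts) + 1, "alpha": alpha}

def prob(model, token):
    return ((model["counts"][token] + model["alpha"])
            / (model["total"] + model["alpha"] * model["vocab"]))

def perplexity(model, sentence):
    """Per-token perplexity of a sentence under a model."""
    tokens = sentence.split()
    log_sum = sum(math.log(prob(model, t)) for t in tokens)
    return math.exp(-log_sum / max(len(tokens), 1))

def screen(big_corpus, seed_model, threshold):
    """Step 302: keep sentences that the field's seed model finds unsurprising."""
    return [s for s in big_corpus if perplexity(seed_model, s) < threshold]

def fuse(prob_fns, weights=None):
    """Assumed fusion: linear interpolation of token probabilities."""
    weights = weights or [1.0 / len(prob_fns)] * len(prob_fns)
    return lambda token: sum(w * f(token) for w, f in zip(weights, prob_fns))

# Hypothetical data.
seed_corpora = {
    "news": ["the government announced a new policy",
             "the market closed higher today"],
    "navigation": ["turn left at the next intersection",
                   "navigate to the nearest station"],
}
big_data = ["the government closed the market today",
            "turn left to the nearest station",
            "lol see you tonight"]
THRESHOLD = 12.0  # hypothetical perplexity cutoff

# Step 301: one seed model per field.
seed_models = {f: train_unigram(s) for f, s in seed_corpora.items()}
# Step 302: screen the big data corpus once per field.
screened = {f: screen(big_data, m, THRESHOLD) for f, m in seed_models.items()}
# Step 303: one screening model per field.
screening_models = {f: train_unigram(s) for f, s in screened.items()}
# Steps 304 and 305: fuse across fields.
screening_fused = fuse([partial(prob, m) for m in screening_models.values()])
seed_fused = fuse([partial(prob, m) for m in seed_models.values()])
# Step 306: fuse the two fusion models.
general = fuse([screening_fused, seed_fused])
```

With this toy data, each field keeps only the big data sentence that resembles its seed corpus, and the chat-like sentence is discarded by both. In practice the interpolation weights would be tuned rather than fixed at equal values.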
