Feature extraction and matching method and feature extraction and matching system in visual navigation

A feature extraction and matching method for visual navigation, applicable to image analysis, image enhancement, instruments, and related fields. It addresses the problems of high noise and low matching accuracy, and achieves faster matching, elimination of affine-transformation effects, and a reduced amount of data processing.

Examples

Embodiment 1

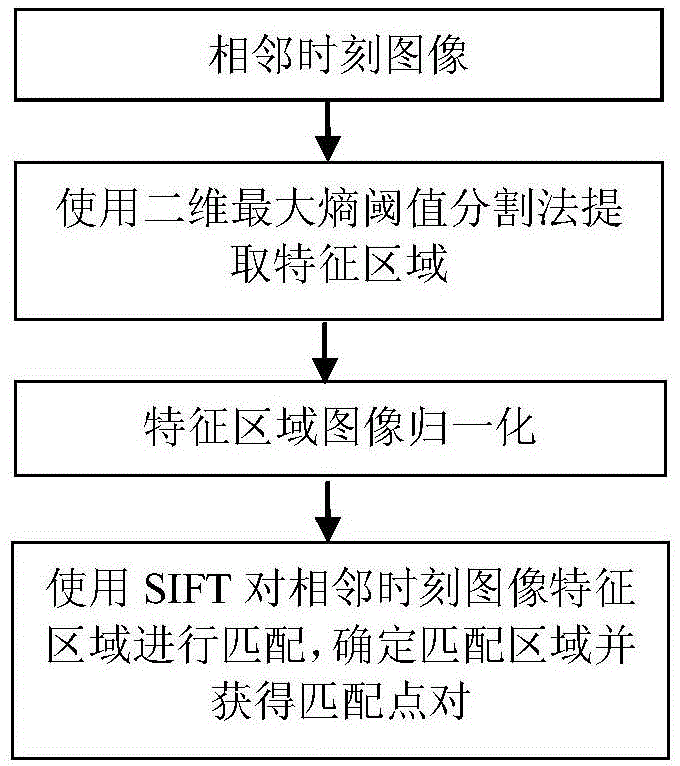

[0051] A feature extraction and matching method in visual navigation is provided in this embodiment, including the following steps:

[0052] (1) Extract the feature regions using the two-dimensional maximum entropy threshold segmentation method, a conventional method for feature region extraction. It is used here to extract small, prominent feature regions: the entropy of each point in the image is calculated, and the segmentation threshold is chosen so that the entropy of the image reaches a maximum. To improve the noise resistance of the two-dimensional maximum entropy threshold segmentation method, the pixels are denoised in this process. Take each pixel and its adjacent pixels as a neighborhood; calculate the pixel mean value in the neighborhood, form a pixel-mean pair, and establish a two-dimensional fun...
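A minimal Python sketch of a generic two-dimensional maximum entropy threshold search, built on the pixel-mean pairs described above, might look like the following. It is not taken from the patent: the 3x3 neighborhood, the function names, the `levels` parameter, and the choice to score only the two diagonal quadrants of the joint histogram are all assumptions made for illustration.

```python
import math

def neighborhood_mean(img, x, y):
    """Integer mean of the 3x3 neighborhood of (x, y), clipped at the border.
    The 3x3 window is an assumption; the source only says 'adjacent pixels'."""
    h, w = len(img), len(img[0])
    vals = [img[j][i]
            for j in range(max(0, y - 1), min(h, y + 2))
            for i in range(max(0, x - 1), min(w, x + 2))]
    return sum(vals) // len(vals)

def max_entropy_threshold_2d(img, levels=256):
    """Return the (s, t) pair maximizing the summed entropy of the two
    diagonal quadrants of the (gray level, neighborhood mean) histogram.
    Returns None when no threshold separates two non-empty quadrants."""
    h, w = len(img), len(img[0])
    n = h * w
    # Joint histogram of pixel-mean pairs (raw counts).
    hist = [[0] * levels for _ in range(levels)]
    for y in range(h):
        for x in range(w):
            hist[img[y][x]][neighborhood_mean(img, x, y)] += 1
    # 2D prefix sums of the counts (C) and of the terms -p*log(p) (E).
    C = [[0] * (levels + 1) for _ in range(levels + 1)]
    E = [[0.0] * (levels + 1) for _ in range(levels + 1)]
    for i in range(levels):
        for j in range(levels):
            c = hist[i][j]
            e = -(c / n) * math.log(c / n) if c else 0.0
            C[i + 1][j + 1] = c + C[i][j + 1] + C[i + 1][j] - C[i][j]
            E[i + 1][j + 1] = e + E[i][j + 1] + E[i + 1][j] - E[i][j]
    best_value, best_st = -math.inf, None
    for s in range(levels - 1):
        for t in range(levels - 1):
            # Quadrant A: gray <= s and mean <= t. Quadrant B: gray > s and mean > t.
            ca = C[s + 1][t + 1]
            cb = C[levels][levels] - C[s + 1][levels] - C[levels][t + 1] + ca
            if ca == 0 or cb == 0:
                continue
            ea = E[s + 1][t + 1]
            eb = E[levels][levels] - E[s + 1][levels] - E[levels][t + 1] + ea
            pa, pb = ca / n, cb / n
            # Entropy of a quadrant's normalized distribution: log(P) + e / P.
            value = math.log(pa) + ea / pa + math.log(pb) + eb / pb
            if value > best_value:
                best_value, best_st = value, (s, t)
    return best_st
```

For an image stored as a list of rows of integer gray levels, `max_entropy_threshold_2d(img)` returns a gray-level threshold `s` and a neighborhood-mean threshold `t`. Pairing each pixel with its local mean is what gives the method its noise resistance: an isolated noisy pixel has a gray level far from its neighborhood mean, so it falls in an off-diagonal part of the histogram and does not influence the chosen threshold.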

Embodiment 2

[0064] This embodiment provides a feature extraction and matching method in visual navigation. On the basis of the above embodiment, after the feature regions are extracted using the two-dimensional maximum entropy threshold segmentation method, the method further includes a step of filtering out feature regions that contain fewer pixels than a preset threshold. Because of fine image texture, the two-dimensional maximum entropy threshold segmentation method yields some feature regions made up of only a few pixels. These regions are not distinctive and easily cause matching errors. Larger regions are therefore selected for the next processing step; removing the small feature regions reduces matching errors, improves the matching speed, and reduces the amount of data to be processed.
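The filtering step can be sketched in Python as connected-component labeling followed by a size check. This is only an illustration under stated assumptions: the binary mask input, the 4-connectivity, and the names `filter_small_regions` and `min_pixels` are hypothetical and do not come from the patent.

```python
from collections import deque

def filter_small_regions(mask, min_pixels):
    """Return the connected regions of a binary mask (list of rows of 0/1)
    that contain at least min_pixels pixels. Each region is a list of
    (row, col) tuples. 4-connectivity is an assumption of this sketch."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    kept = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill collects one connected region.
            region, queue = [], deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                region.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Regions below the preset threshold are discarded.
            if len(region) >= min_pixels:
                kept.append(region)
    return kept
```

Calling `filter_small_regions(mask, 3)` on a mask with one 2x2 block and two isolated pixels keeps only the 2x2 block, so later stages see fewer, more distinctive regions.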

[0065] In this embodiment, a feature extraction and matching method in visual navigation is provided, and the specific design of the main li...

Embodiment 3

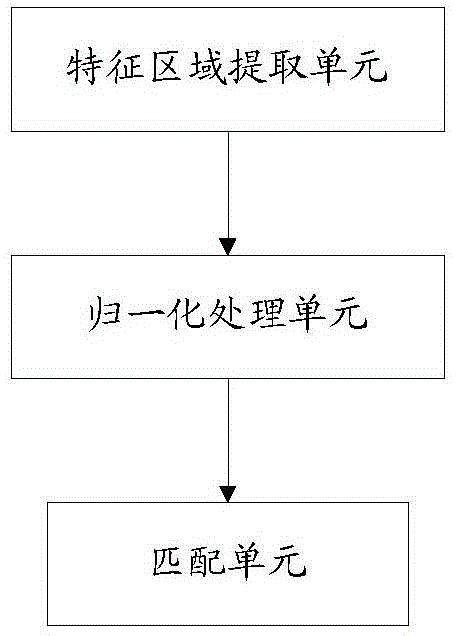

[0083] This embodiment also provides a feature extraction and matching system in visual navigation using the above method, including the following parts:

[0084] Feature area extraction unit: use the two-dimensional maximum entropy threshold segmentation method to extract the feature area;

[0085] Normalization processing unit: perform image normalization processing on the extracted feature region;

[0086] Matching unit: use the SIFT algorithm to obtain the feature vectors of the feature points, match the feature points in each feature region of the first image against the feature points in each feature region of the second image, and count the matching points for each pair of regions; select the two feature regions with the largest number of matching points as the matching regions, and take their matched points as the matching feature points.

[0087] Wherein, the feature region extracting unit further includes a filtering subunit to filter out feature regions containing pixels s...
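The region-pair selection performed by the matching unit can be illustrated with a short Python sketch. It assumes the SIFT feature vectors have already been computed by some other component and are passed in as plain lists of numbers, one list of descriptors per feature region. The nearest-neighbor ratio test, the `ratio` value, and the function names are assumptions of this sketch; the source does not state how individual feature points are matched.

```python
import math

def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match descriptors of one region against another.
    Returns (index_in_a, index_in_b) pairs. A match is accepted only if the
    nearest neighbor is clearly closer than the second nearest (ratio test,
    an assumption of this sketch), so at least two candidates are required."""
    matches = []
    for ia, da in enumerate(desc_a):
        dists = sorted((math.dist(da, db), ib) for ib, db in enumerate(desc_b))
        if len(dists) >= 2 and dists[0][0] < ratio * dists[1][0]:
            matches.append((ia, dists[0][1]))
    return matches

def best_region_pair(regions_1, regions_2, ratio=0.8):
    """Compare every region of image 1 with every region of image 2 and
    return (region_index_1, region_index_2, matches) for the pair with the
    largest number of matching points, or (None, None, []) if nothing matches."""
    best = (None, None, [])
    for i, desc_a in enumerate(regions_1):
        for j, desc_b in enumerate(regions_2):
            matches = match_descriptors(desc_a, desc_b, ratio)
            if len(matches) > len(best[2]):
                best = (i, j, matches)
    return best
```

Here `regions_1` and `regions_2` are lists of regions, and each region is a list of equal-length descriptor vectors. Restricting matching to region pairs, rather than matching all feature points of both images at once, is what lets the region with the most matches be singled out as the matching region.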
