Bottom-up visual saliency generation method fusing local and global contrast

A technology based on global and local contrast, applied in computer components, image data processing, instruments, etc. It addresses the problem that existing methods consider only the global contrast or only the local contrast of an image, and therefore cannot highlight salient regions well.

Embodiment Construction

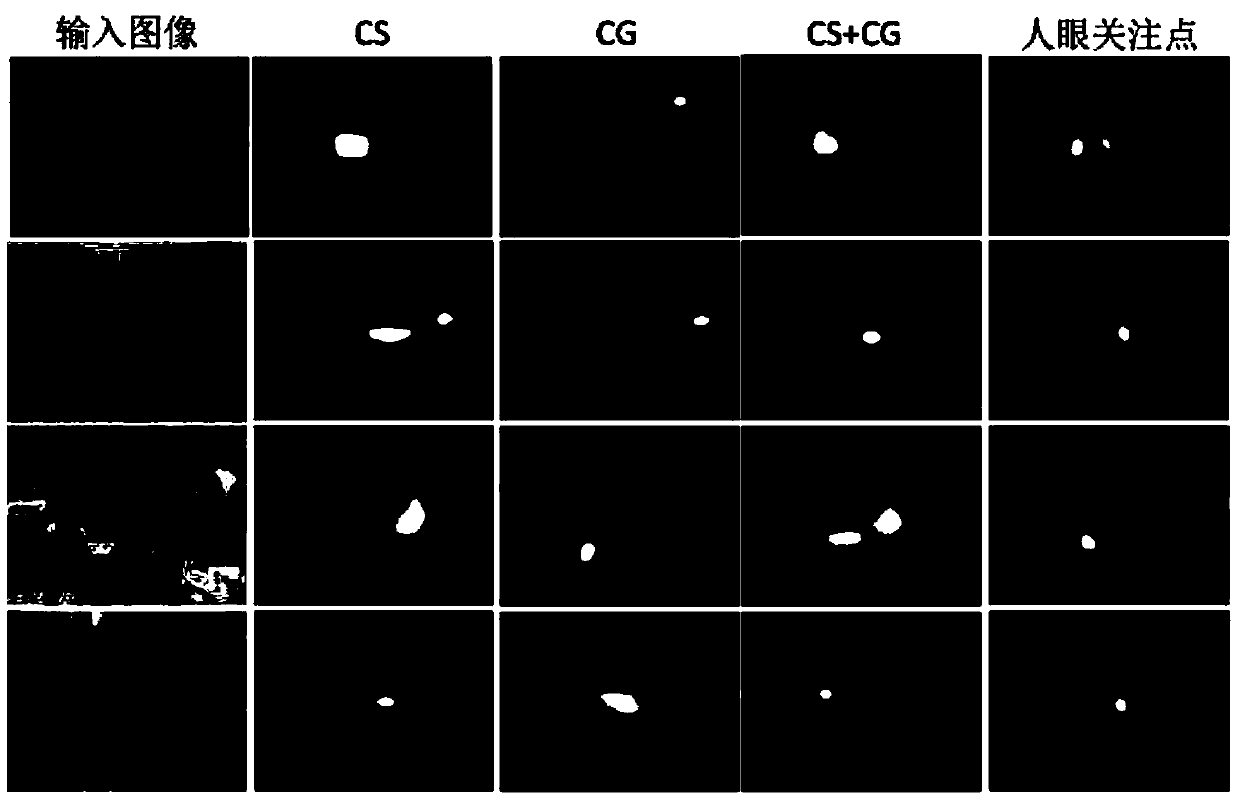

[0023] The present invention is further described below with reference to an embodiment and the accompanying drawings:

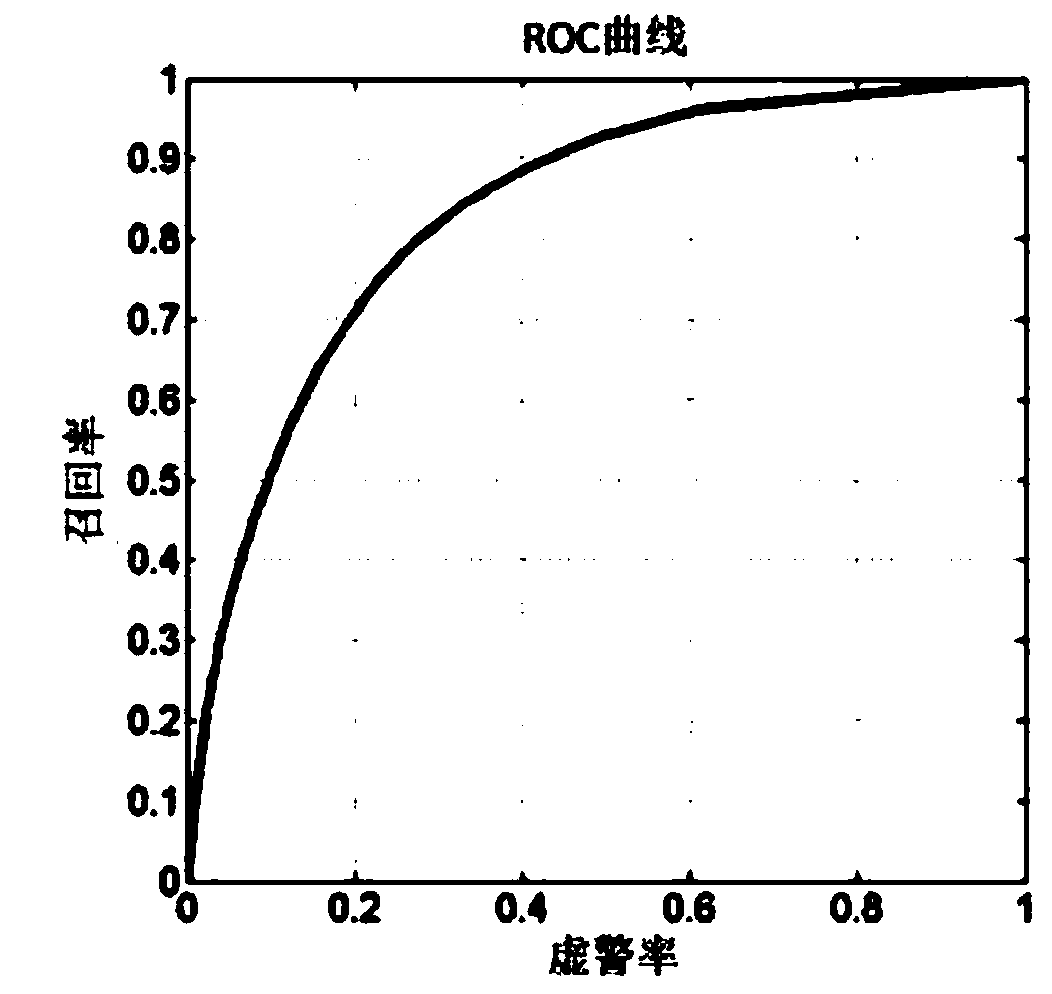

[0024] The hardware used for the implementation is a computer with an Intel Pentium 2.93 GHz CPU and 2.0 GB of memory; the software environment is MATLAB R2011b on Windows XP. All images in the BRUCE database are used as test data in the experiment. The database contains 120 natural images and is a publicly available international benchmark for evaluating visual saliency models.

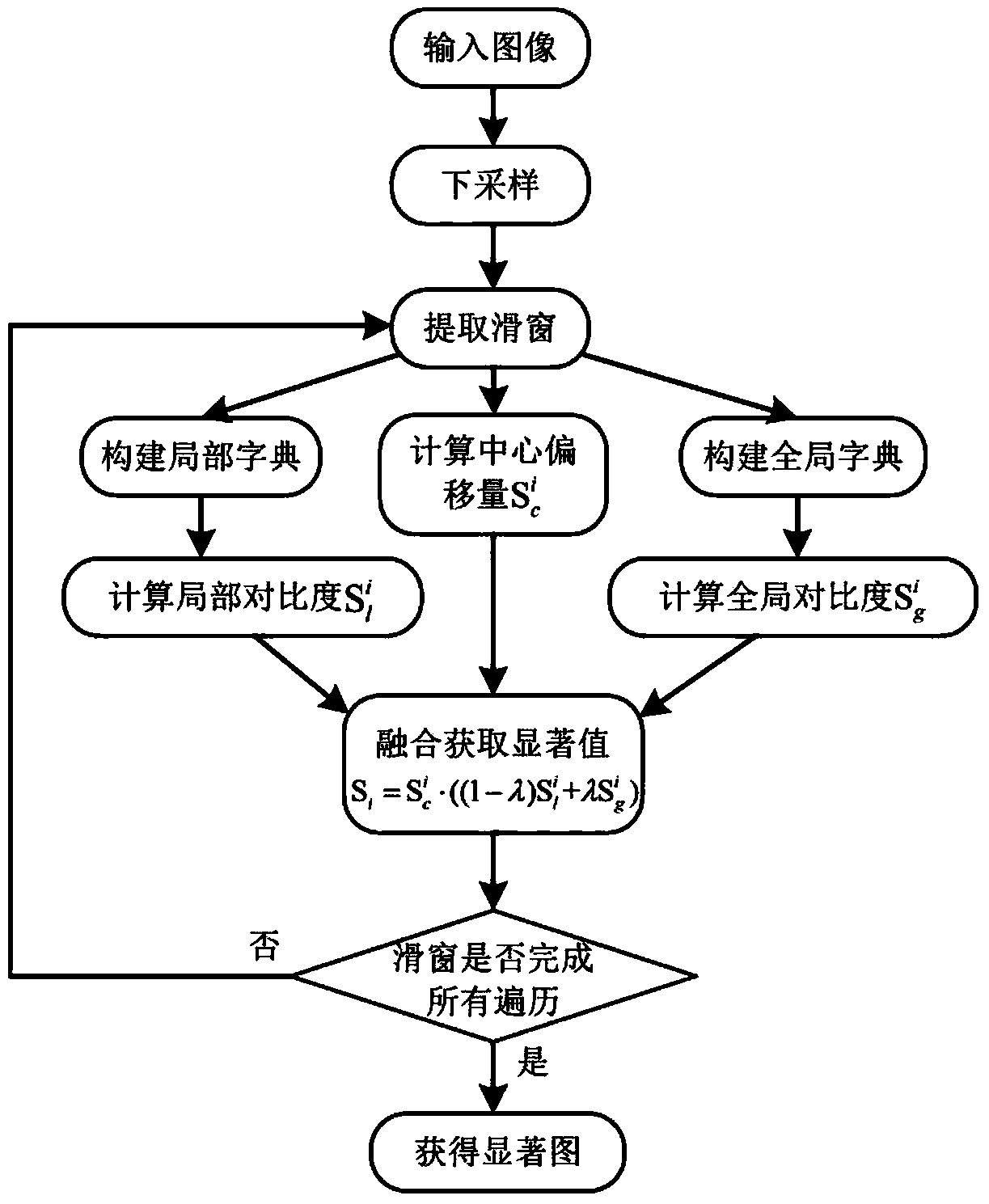

[0025] The present invention is specifically implemented as follows:

[0026] 1. Extract the patches of the image and their features: first downsample the image to $N \times N$ pixels; then, using a square sliding window whose size lies in $[5, 50]$ and with a step size of … , extract the patches $p_i$ from the downsampled image. The vector formed by the pixel values in patch $p_i$ is used as the feature $x_i$ of patch $i$, where $i \in [1, M]$ and $M$ is the number of patches in an image.
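The patch-extraction step can be illustrated with a minimal Python sketch (the original experiments used MATLAB). It assumes a grayscale image stored as a list of rows and nearest-neighbour downsampling, since the source does not name the resampling method. The step size `s` is left to the caller because its value is missing from the source text. The window side `k` and the function names `downsample_nearest` and `extract_patch_features` are hypothetical illustrations, not the patent's own code.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def downsample_nearest(img: Image, n: int) -> Image:
    """Resize img to n x n pixels with nearest-neighbour sampling.

    Assumption: the source only says "downsample to N x N" and does not
    specify the resampling method.
    """
    h, w = len(img), len(img[0])
    return [[img[r * h // n][c * w // n] for c in range(n)] for r in range(n)]


def extract_patch_features(img: Image, n: int, k: int, s: int) -> List[List[float]]:
    """Return one flattened feature vector x_i per k x k patch p_i.

    The image is first downsampled to n x n; a square k x k window then
    slides over it with step s (s is a hypothetical caller-chosen value).
    """
    if not 5 <= k <= 50:
        raise ValueError("window size k is expected to lie in [5, 50]")
    if k > n or s < 1:
        raise ValueError("need k <= n and s >= 1")
    small = downsample_nearest(img, n)
    features = []
    for top in range(0, n - k + 1, s):
        for left in range(0, n - k + 1, s):
            # Row-major flattening of the pixel values inside patch p_i
            patch = [small[top + dr][left + dc] for dr in range(k) for dc in range(k)]
            features.append(patch)
    return features


if __name__ == "__main__":
    # Synthetic 100 x 100 test image; values are arbitrary
    img = [[float(r * 100 + c) for c in range(100)] for r in range(100)]
    xs = extract_patch_features(img, n=40, k=8, s=4)
    print(len(xs), len(xs[0]))  # prints: 81 64
```

In this sketch the number of patches is $M = (\lfloor (N - k)/s \rfloor + 1)^2$, and each feature $x_i$ has $k^2$ entries; the row-major flattening order is likewise an assumption.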

[0027] 2. Con...
