Co-range partition for query plan optimization and data-parallel programming model

A range-partitioning and data-partitioning technology applied in the fields of electrical digital data processing, digital data information retrieval, special data processing applications, etc.


Embodiment Construction

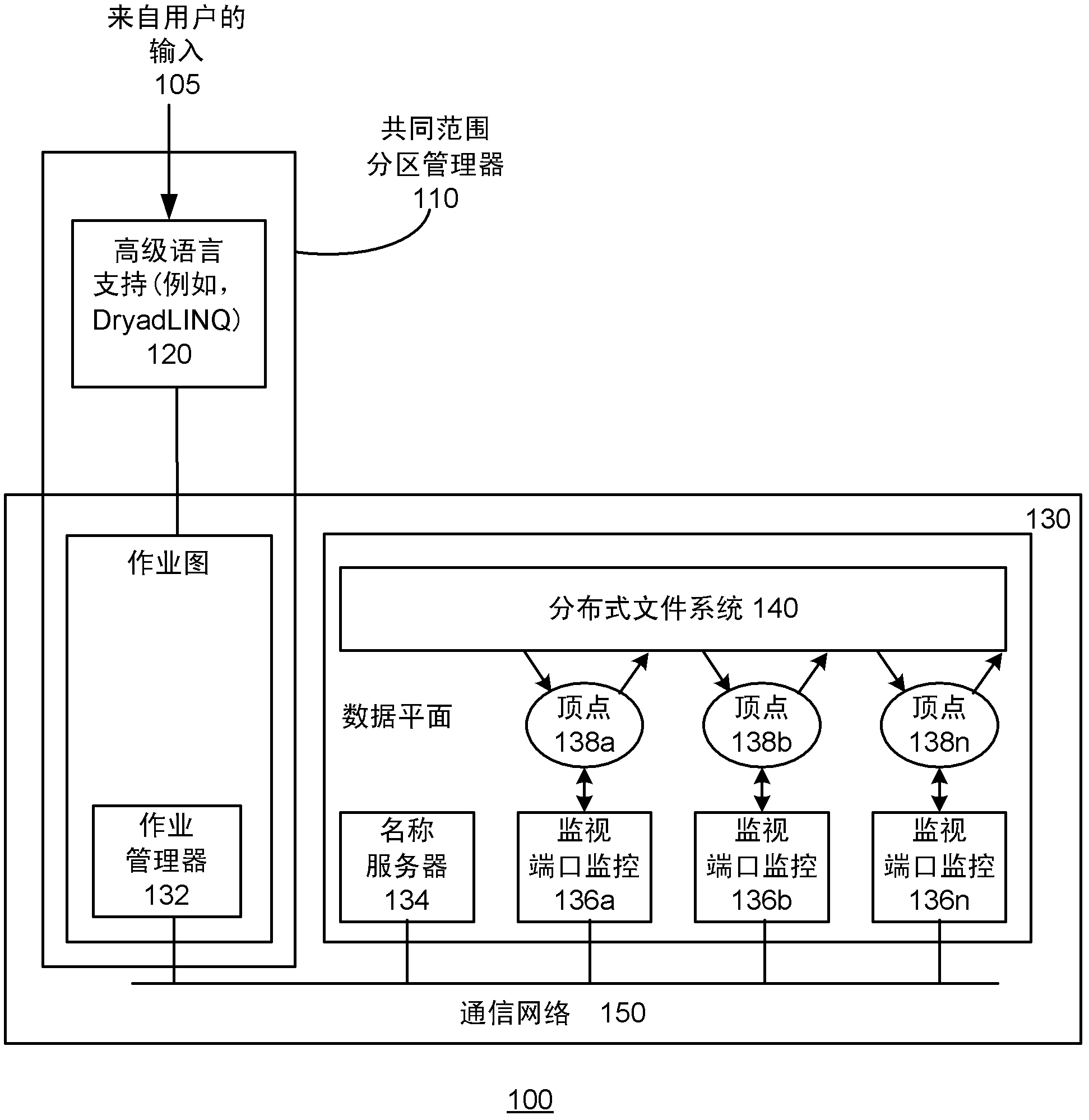

[0018] FIG. 1 shows an exemplary data-parallel computing environment 100 comprising a co-range partition manager 110, a distributed execution engine 130 (e.g., MapReduce, Dryad, Hadoop, etc.) with high-level language support 120 (e.g., Sawzall, Pig Latin, SCOPE, DryadLINQ, etc.), and a distributed file system 140. In one embodiment, the distributed execution engine 130 may include Dryad, and the high-level language support 120 may include DryadLINQ.
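Paragraph [0018] names the co-range partition manager 110 but does not describe here how it partitions data. As a hedged illustration only, assuming that co-range partitioning means deriving one shared set of range boundaries from the keys of several inputs and then range-partitioning every input against those same boundaries, a minimal Python sketch follows. The helpers `co_range_boundaries` and `range_partition` are hypothetical, and treating every key as a sample stands in for whatever sampling the actual system performs.

```python
import bisect
from typing import Dict, List, Sequence


def co_range_boundaries(inputs: Sequence[Sequence[int]], num_partitions: int) -> List[int]:
    """Hypothetical helper: choose num_partitions - 1 split keys from the
    combined, sorted keys of all inputs (every key is treated as a sample)."""
    if num_partitions < 1:
        raise ValueError("num_partitions must be >= 1")
    combined = sorted(key for data in inputs for key in data)
    if not combined:
        return []
    step = len(combined) / num_partitions
    # Evenly spaced quantiles of the combined keys become the shared boundaries.
    return [combined[int(step * i)] for i in range(1, num_partitions)]


def range_partition(data: Sequence[int], boundaries: List[int]) -> Dict[int, List[int]]:
    """Partition i holds keys k with boundaries[i-1] <= k < boundaries[i]."""
    parts: Dict[int, List[int]] = {i: [] for i in range(len(boundaries) + 1)}
    for key in data:
        # bisect_right counts the boundaries <= key, which is the partition index.
        parts[bisect.bisect_right(boundaries, key)].append(key)
    return parts


# Usage: two inputs partitioned against the same (co-range) boundaries.
left = [1, 4, 7, 10, 13]
right = [2, 4, 8, 13, 20]
boundaries = co_range_boundaries([left, right], num_partitions=3)
left_parts = range_partition(left, boundaries)
right_parts = range_partition(right, boundaries)
print(boundaries)   # [4, 10]
print(left_parts)   # {0: [1], 1: [4, 7], 2: [10, 13]}
print(right_parts)  # {0: [2], 1: [4, 8], 2: [13, 20]}
```

Because both inputs share the boundaries, key 4 lands in partition 1 and key 13 in partition 2 on both sides, so a join could pair partition i of one input with partition i of the other without repartitioning either input. A production system would more likely sample the inputs than sort them in full.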

[0019] The distributed execution engine 130 may include a job manager 132 responsible for spawning vertices (V) 138a, 138b . . . 138n on available computers with the help of remote-execution and monitoring daemons (PD) 136a, 136b . . . 136n. The vertices 138a, 138b . . . 138n exchange data through files, TCP pipes, or shared-memory channels as part of the distributed file system 140.
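Paragraph [0019] describes vertices spawned by the job manager that exchange data over channels. The sketch below is a single-process toy, not Dryad's API: `Vertex` and `run_job` are hypothetical names, the rule "dispatch a vertex once all of its inputs have produced output" is an assumption about how the job manager schedules work, and an in-memory dict stands in for file, TCP pipe, or shared-memory channels.

```python
from collections import deque
from typing import Callable, Dict, List


class Vertex:
    """Hypothetical vertex: a named computation over the outputs of its inputs."""

    def __init__(self, name: str, fn: Callable[..., object], inputs: List[str]):
        self.name = name
        self.fn = fn
        self.inputs = inputs


def run_job(vertices: List[Vertex]) -> Dict[str, object]:
    """Toy job manager: run each vertex once all of its inputs have produced
    output. An in-memory dict stands in for file, TCP, or shared-memory channels."""
    by_name = {v.name: v for v in vertices}
    consumers: Dict[str, List[str]] = {v.name: [] for v in vertices}
    for v in vertices:
        for src in v.inputs:
            if src not in by_name:
                raise ValueError(f"{v.name} reads from unknown vertex {src}")
            consumers[src].append(v.name)
    # Number of input channels each vertex is still waiting on.
    pending = {v.name: len(v.inputs) for v in vertices}
    ready = deque(name for name, count in pending.items() if count == 0)
    channels: Dict[str, object] = {}
    while ready:
        v = by_name[ready.popleft()]
        channels[v.name] = v.fn(*(channels[src] for src in v.inputs))
        # Each consumer now has one fewer unfilled input; dispatch it when none remain.
        for consumer in consumers[v.name]:
            pending[consumer] -= 1
            if pending[consumer] == 0:
                ready.append(consumer)
    if len(channels) != len(vertices):
        raise ValueError("job graph contains a cycle")
    return channels


# Usage: two source vertices feed a merge vertex, which feeds a count vertex.
job = [
    Vertex("read_a", lambda: [3, 1, 2], []),
    Vertex("read_b", lambda: [5, 4], []),
    Vertex("merge", lambda a, b: sorted(a + b), ["read_a", "read_b"]),
    Vertex("count", len, ["merge"]),
]
out = run_job(job)
print(out["merge"])  # [1, 2, 3, 4, 5]
print(out["count"])  # 5
```

Dispatching a vertex only after every input is complete keeps the sketch simple; it ignores placement on separate computers, monitoring, and failure handling.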

[0020] Job execution on the distributed execution engine 130 is coordinated by the job manager 132, which may do one or more of the following: ins...
