Parallel data processing method based on the Latent Dirichlet Allocation model

A technique based on the latent Dirichlet allocation (LDA) model, applied in the fields of electric digital data processing, special data processing applications, instruments, etc. It can address problems such as high data sparsity, large loss of information, and results that are unfavorable for text information processing.

Embodiment Construction

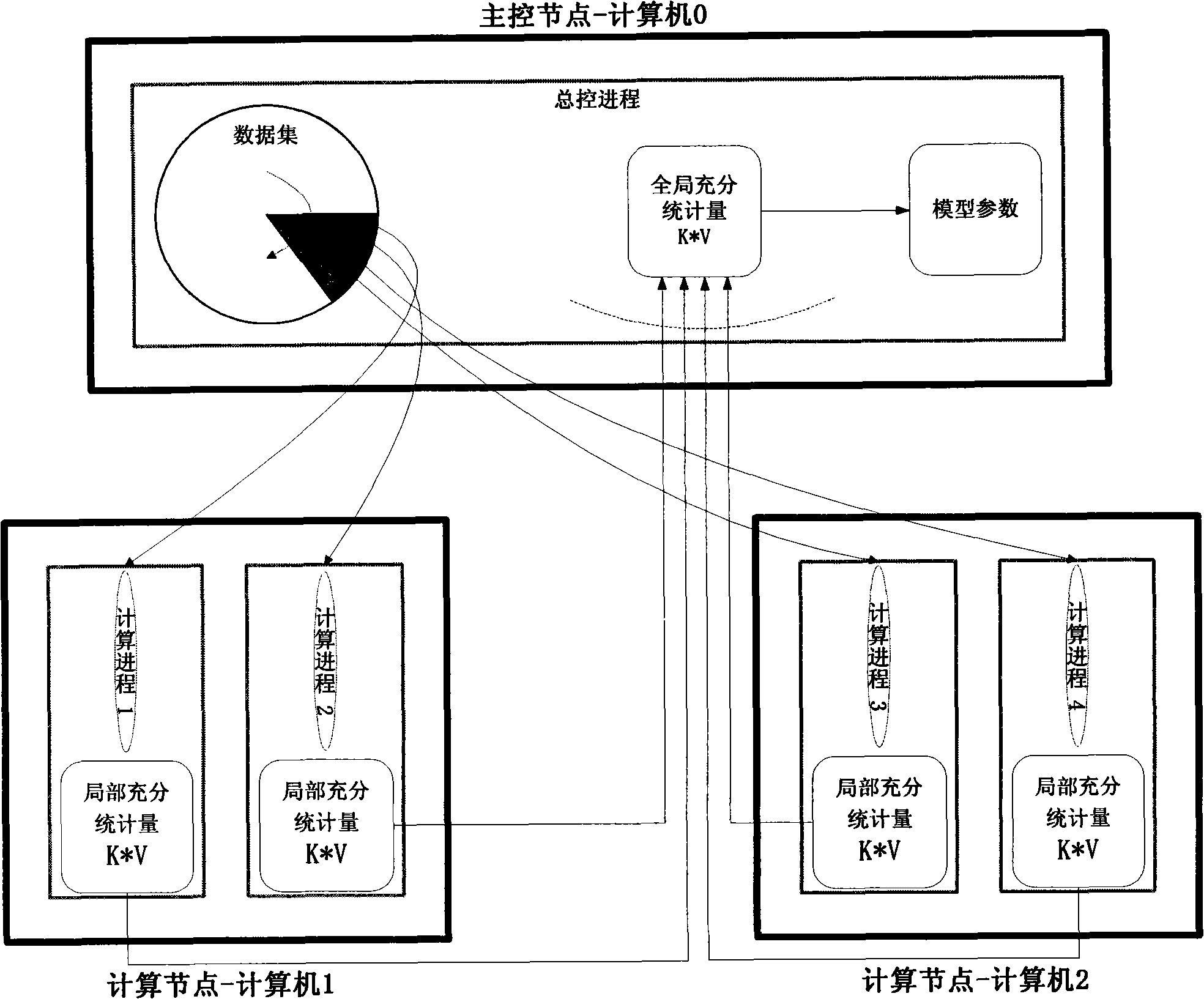

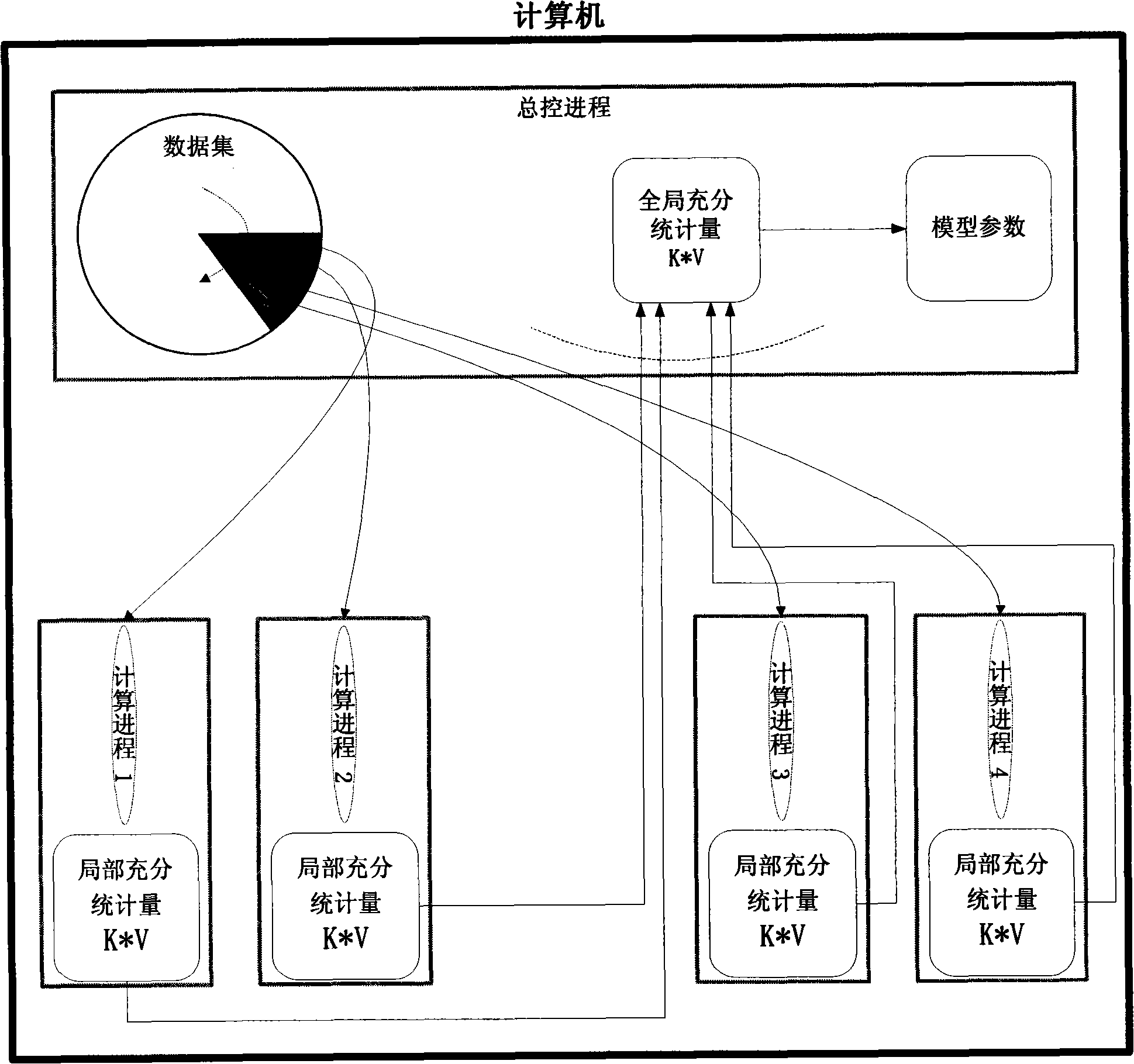

[0130] The three parallel data processing schemes designed by the present invention target the two most widely used high-performance computing environments: a multi-core (including multi-processor) parallel architecture on a single machine, and a cluster parallel architecture across multiple machines. Multi-core designs are now widely adopted in many types of computers, and the present invention can be used directly on such machines. For multi-machine clusters, the network topology is as shown in Figure 6 and consists of two basic components: a master control node and several computing nodes. Only one master control node is needed; it is mainly responsible for functions such as interface interaction, data distribution, and result summary. There are multiple computing nodes (in principle, there is no limit on their number), and different types of computers can be selected for them. The com...
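The division of roles described in this paragraph (a master that distributes data and summarizes results, and compute nodes that each process a share of it) can be sketched roughly as follows for the single-machine, multi-core case. This is only an illustration under stated assumptions, not the patent's actual schemes, which this excerpt does not detail. The function names, the round-robin sharding, and the representation of a document as a list of `(word, topic)` pairs with pre-assigned topics are all hypothetical.

```python
from collections import Counter
from concurrent.futures import ProcessPoolExecutor


def split_documents(docs, n_parts):
    """Master role: deal documents round-robin into n_parts shards.

    Round-robin sharding is an assumption made for this sketch.
    """
    return [docs[i::n_parts] for i in range(n_parts)]


def count_shard(shard):
    """Compute-node role: count (topic, word) occurrences in one shard.

    Each document is assumed to be a list of (word, topic) pairs; how the
    topics were assigned is outside the scope of this sketch.
    """
    counts = Counter()
    for doc in shard:
        for word, topic in doc:
            counts[(topic, word)] += 1
    return counts


def merge_counts(partials):
    """Master role: sum the partial counts returned by the compute nodes."""
    total = Counter()
    for partial in partials:
        total.update(partial)  # Counter.update adds counts together
    return total


def run_master(docs, n_workers, executor_cls=ProcessPoolExecutor):
    """Distribute shards to workers, then summarize their results."""
    shards = split_documents(docs, n_workers)
    with executor_cls(max_workers=n_workers) as executor:
        partials = list(executor.map(count_shard, shards))
    return merge_counts(partials)
```

When using the default process-based executor, call `run_master(docs, n_workers=2)` from under an `if __name__ == "__main__":` guard, since some platforms start worker processes by re-importing the main module. On a multi-machine cluster, the same split, count, and merge steps would be carried over a network transport rather than a local process pool.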
