Local positioning and motion estimation based camera viewing system and methods

A technology of local positioning and motion estimation, applied in the field of camera systems and camera view control. It addresses the problems that assistance services are hardly available or affordable for common exercisers, that professional coaches can only provide training in a limited region and time schedule, and that such a service system has not been available in common public sport or activity places. It achieves highly accurate and agile camera view and recording services.

Examples

first embodiment

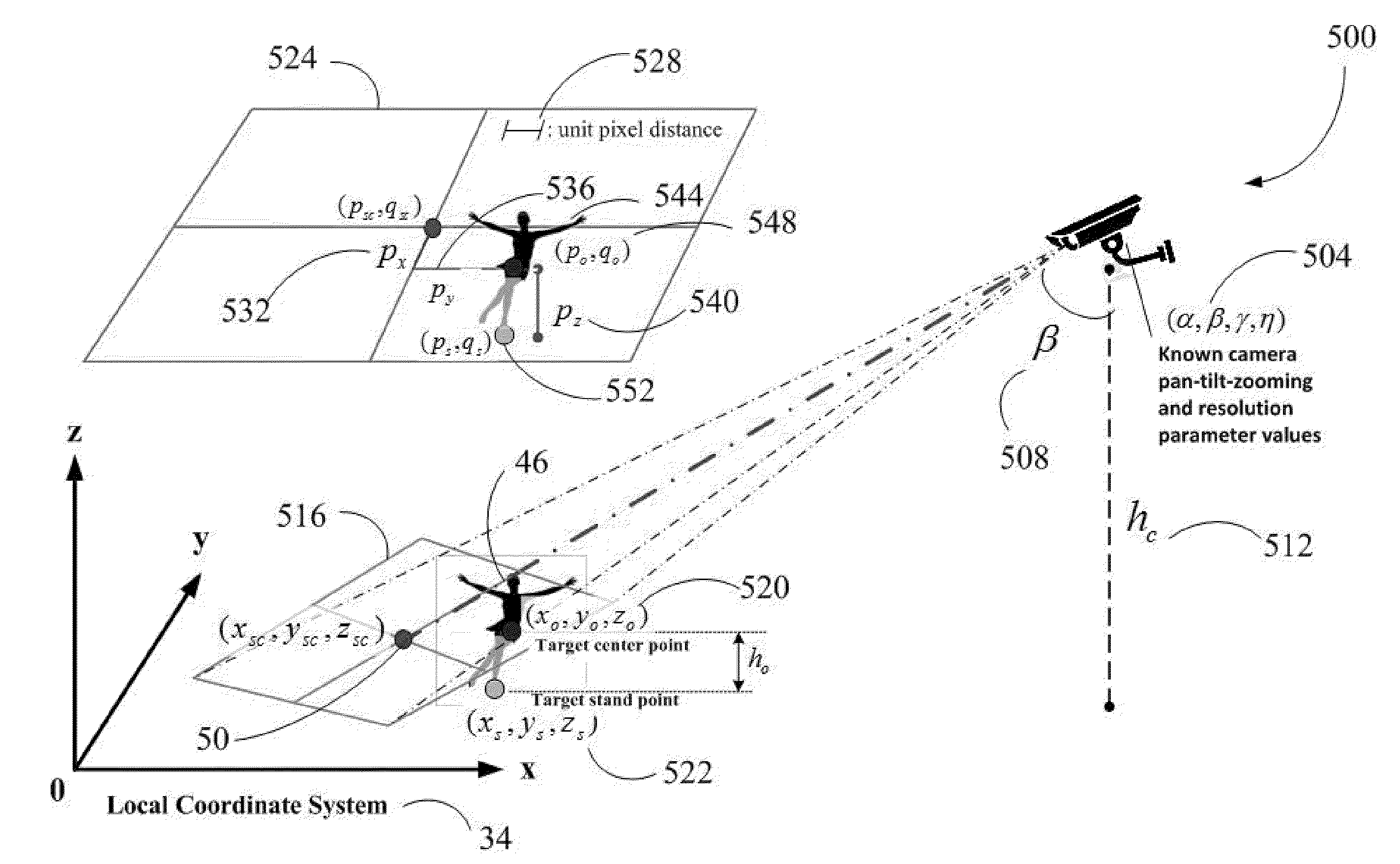

[0103] With reference to FIG. 13, an alternative embodiment of the vision positioning process, which determines the location of an object captured in the camera picture frame, is illustrated in accordance with one or more embodiments and is generally referenced by numeral 1400. The process starts at step 1404. While a picture frame is captured from the camera system, the present camera system orientation is obtained in the camera system coordinate system at step 1408. Based on the camera system orientation data, a predetermined and calibrated coordinate transformation formula, such as the perspective transform equation (6), and its parameters are loaded from a database at step 1412. Such transformation formulas use 3D projection transformation methods to convert positions between the camera frame coordinate system and the local coordinate system. The perspective transform and estimation method is an exemplary embodiment of the 3D projection transformation methods for the transformation formulation a...
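The lookup-then-transform flow of steps 1408 and 1412 can be sketched as follows. This is a minimal illustration, not the patent's implementation: equation (6) is not reproduced in this excerpt, so the sketch assumes the common planar perspective (homography) form with a 3x3 matrix. The `CALIBRATION` table, its matrix values, and the function names `frame_to_local` and `locate` are hypothetical.

```python
from typing import Dict, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]

# Hypothetical calibration "database": one 3x3 perspective matrix per
# camera orientation (pan, tilt) in degrees. Values are made up.
CALIBRATION: Dict[Tuple[int, int], Matrix3] = {
    (0, -30): [
        [0.05, 0.0, -16.0],
        [0.0, 0.08, -10.0],
        [0.0, 0.001, 1.0],
    ],
}


def frame_to_local(h: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Map a pixel (u, v) to local-plane coordinates (x, y).

    Assumed form: [x', y', w]^T = H [u, v, 1]^T, then x = x'/w, y = y'/w.
    """
    xh = h[0][0] * u + h[0][1] * v + h[0][2]
    yh = h[1][0] * u + h[1][1] * v + h[1][2]
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    if abs(w) < 1e-12:
        raise ValueError("pixel maps to infinity under this transform")
    return xh / w, yh / w


def locate(pan: int, tilt: int, u: float, v: float) -> Tuple[float, float]:
    """Load the matrix calibrated for the present orientation, then transform."""
    h = CALIBRATION[(pan, tilt)]
    return frame_to_local(h, u, v)
```

For example, `locate(0, -30, 320, 100)` looks up the matrix stored for a pan of 0 degrees and a tilt of -30 degrees and converts the pixel at (320, 100) to a point in the local coordinate system. A real system would interpolate or recalibrate for orientations that are not in the table.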

case 1

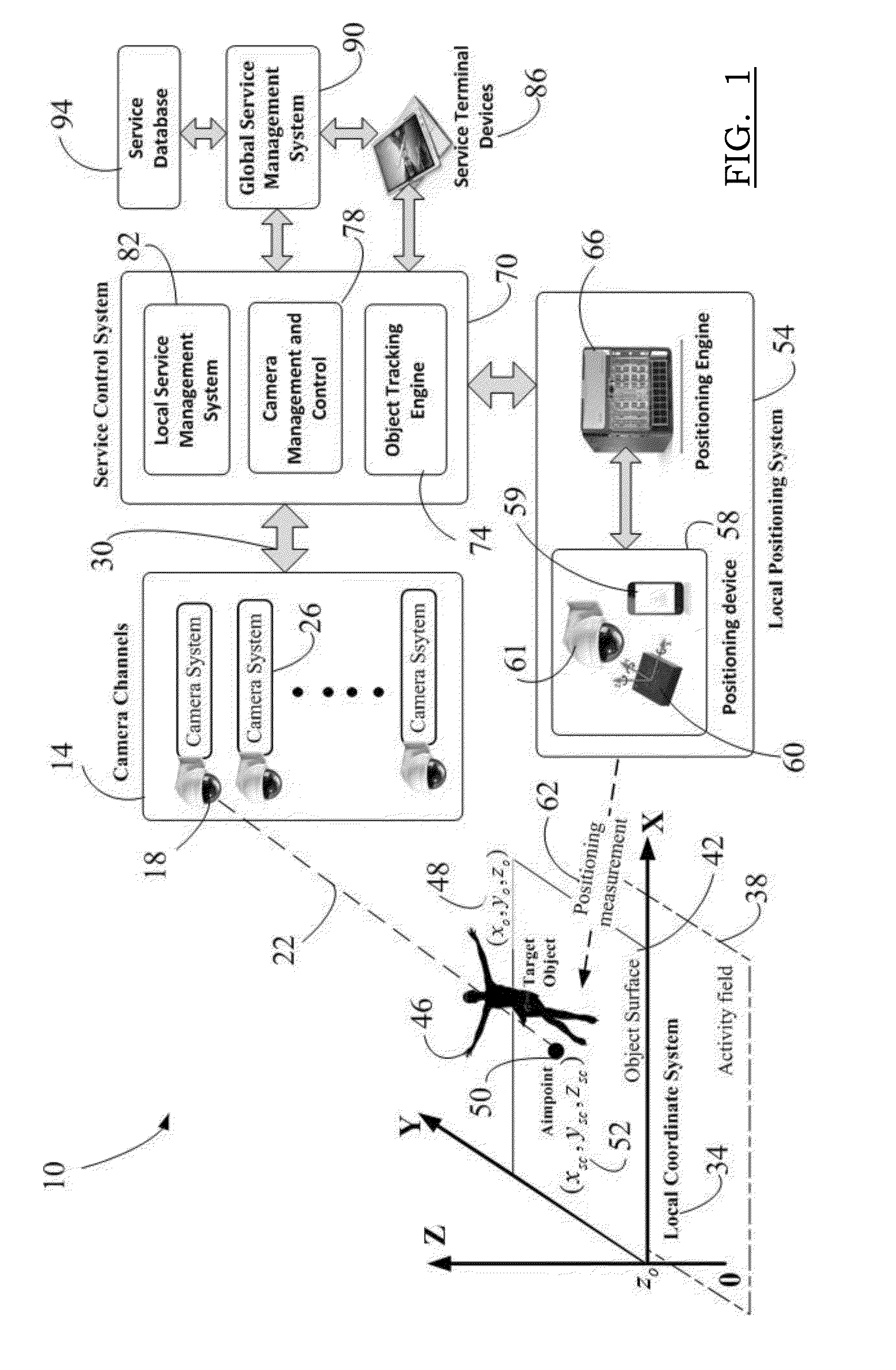

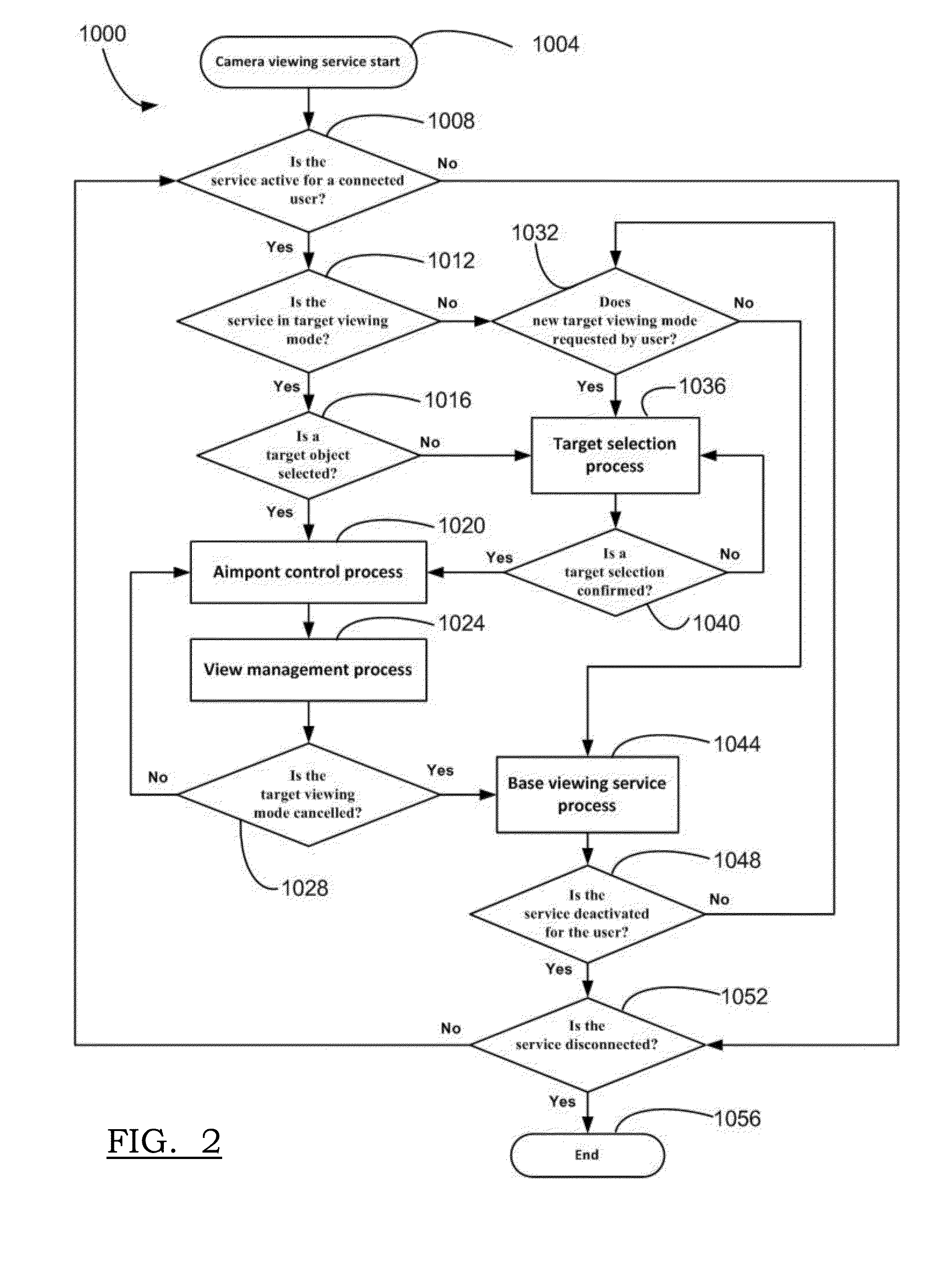

[0139] [Use Case 1]: In a sport arena, for instance an ice rink, a user connects to the camera viewing service through the WiFi network. The user loads the service application on his / her smartphone and then checks out an available camera channel. Based on the WiFi signal, the position of the user is quickly identified by the WiFi positioning subsystem. Immediately after that, the camera channel orients to focus its view center on the recognized user position. Meanwhile, the camera channel view is displayed on the user's smartphone screen. Now the user is in the camera view. Objects identified from the view are highlighted with colored object outline envelopes and object center points. Among all the identified objects, the user points at himself / herself to define the target object. After that, the camera channel starts controlling the camera channel view to achieve the best exhibition of the user by adjusting the camera view switch, pan and tilt angle, pan and tilt speed, zooming rat...
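The step in which the camera channel orients its view center toward the estimated user position can be illustrated with a small geometric sketch. It assumes the camera position is known in the local coordinate system, that pan is measured counterclockwise from the local +x axis, and that a negative tilt looks downward. These conventions and the function name `aim_angles` are assumptions for illustration and are not taken from the patent.

```python
import math
from typing import Sequence, Tuple


def aim_angles(camera_xyz: Sequence[float],
               target_xyz: Sequence[float]) -> Tuple[float, float]:
    """Return (pan, tilt) in degrees that point the camera at the target.

    Both positions are (x, y, z) in the same local coordinate system.
    """
    dx = target_xyz[0] - camera_xyz[0]
    dy = target_xyz[1] - camera_xyz[1]
    dz = target_xyz[2] - camera_xyz[2]
    # Pan: heading of the target in the horizontal plane.
    pan = math.degrees(math.atan2(dy, dx))
    # Tilt: elevation of the target relative to the horizontal distance.
    tilt = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return pan, tilt
```

With a camera mounted 10 m above the origin and a user standing at (10, 10, 0), `aim_angles((0, 0, 10), (10, 10, 0))` gives a pan of 45 degrees and a downward tilt of roughly 35 degrees.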

case 3

[0141] [Use Case 3]: In a sport arena installed with the invented publicly accessible camera viewing system, a camera channel is used to capture a view and to transmit the view to the big screen above the arena. When a service user checks out the camera channel using his / her smartphone device, the user's location in the local positioning system is estimated. The camera's pan and tilt angles are changed to focus on the user's location with a proper zooming ratio. Then the camera view with the user in it is shown on the big screen. The user can also control the camera's orientation and zooming to scan the field and to focus on a desired area, with his / her view of interest shown on the big screen. After a certain time duration expires, the big screen switches its connection to another camera channel that is ready to transfer a view focusing on another user. Before the camera view is ready to be shown on the big screen, present camera channel user will have the camera view showing on ...
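The timed hand-over of the big screen between camera channels can be sketched as a simple queue. This is only an illustration of the behavior described above. The class `BigScreenScheduler`, its method names, and the first-ready, first-shown ordering are hypothetical choices. The patent excerpt does not specify how the next channel is selected.

```python
from collections import deque
from typing import Deque, Optional


class BigScreenScheduler:
    """Rotate the big screen among camera channels that are ready."""

    def __init__(self, slot_seconds: float) -> None:
        self.slot_seconds = slot_seconds      # display time per channel
        self.ready: Deque[str] = deque()      # channels waiting for the screen
        self.current: Optional[str] = None    # channel shown right now
        self.started_at: float = 0.0          # time the current slot began

    def mark_ready(self, channel_id: str) -> None:
        """Queue a channel whose view is focused on its user."""
        if channel_id != self.current and channel_id not in self.ready:
            self.ready.append(channel_id)

    def tick(self, now: float) -> Optional[str]:
        """Return the channel to show at time `now`, switching if the slot expired."""
        expired = (self.current is not None
                   and now - self.started_at >= self.slot_seconds)
        if (self.current is None or expired) and self.ready:
            self.current = self.ready.popleft()
            self.started_at = now
        return self.current
```

In this sketch the screen keeps showing the present channel after its slot expires if no other channel is ready, which avoids a blank screen. A deployment could equally choose to fall back to a default arena view.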
