Probabilistic boosting tree framework for learning discriminative models



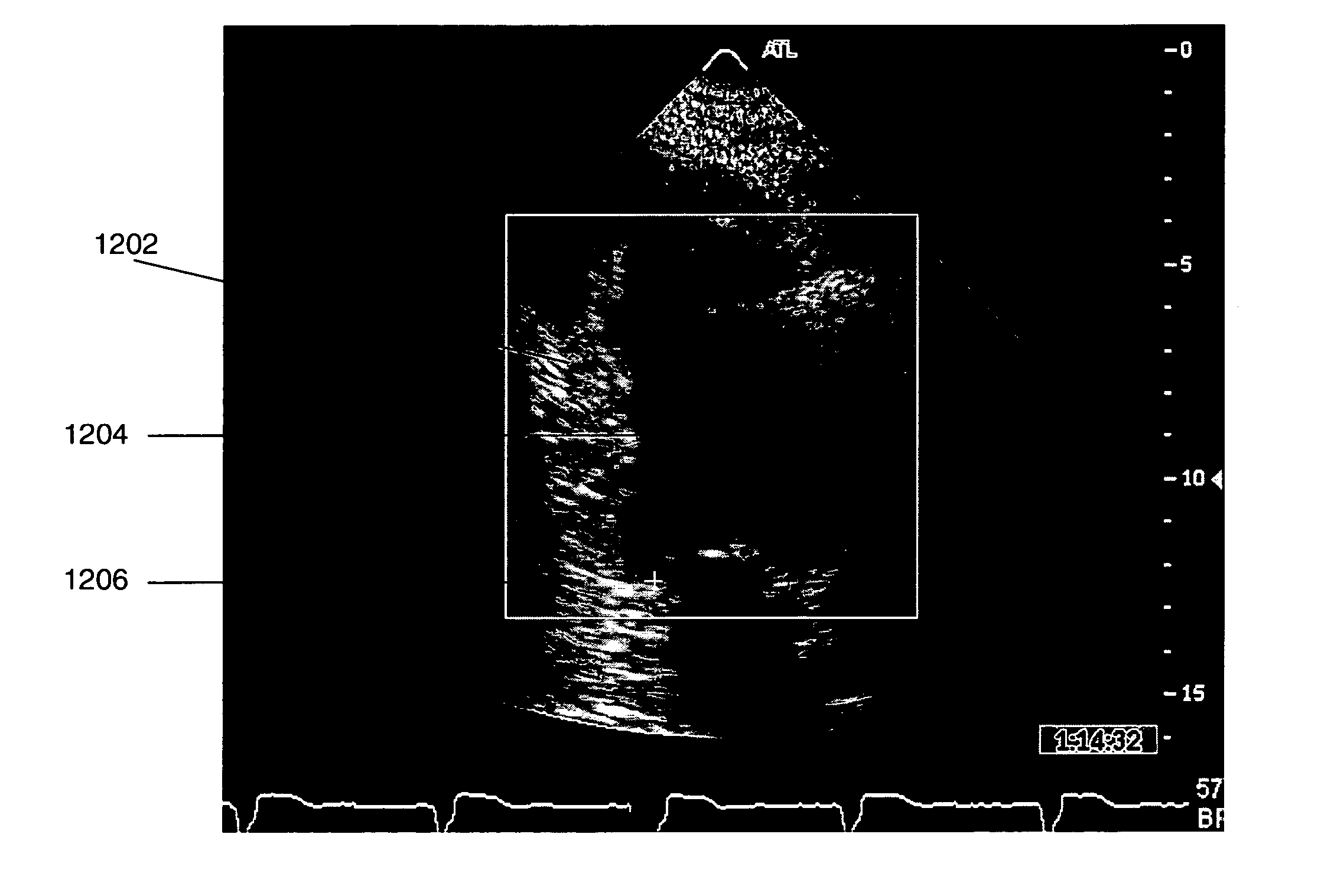


Embodiment Construction

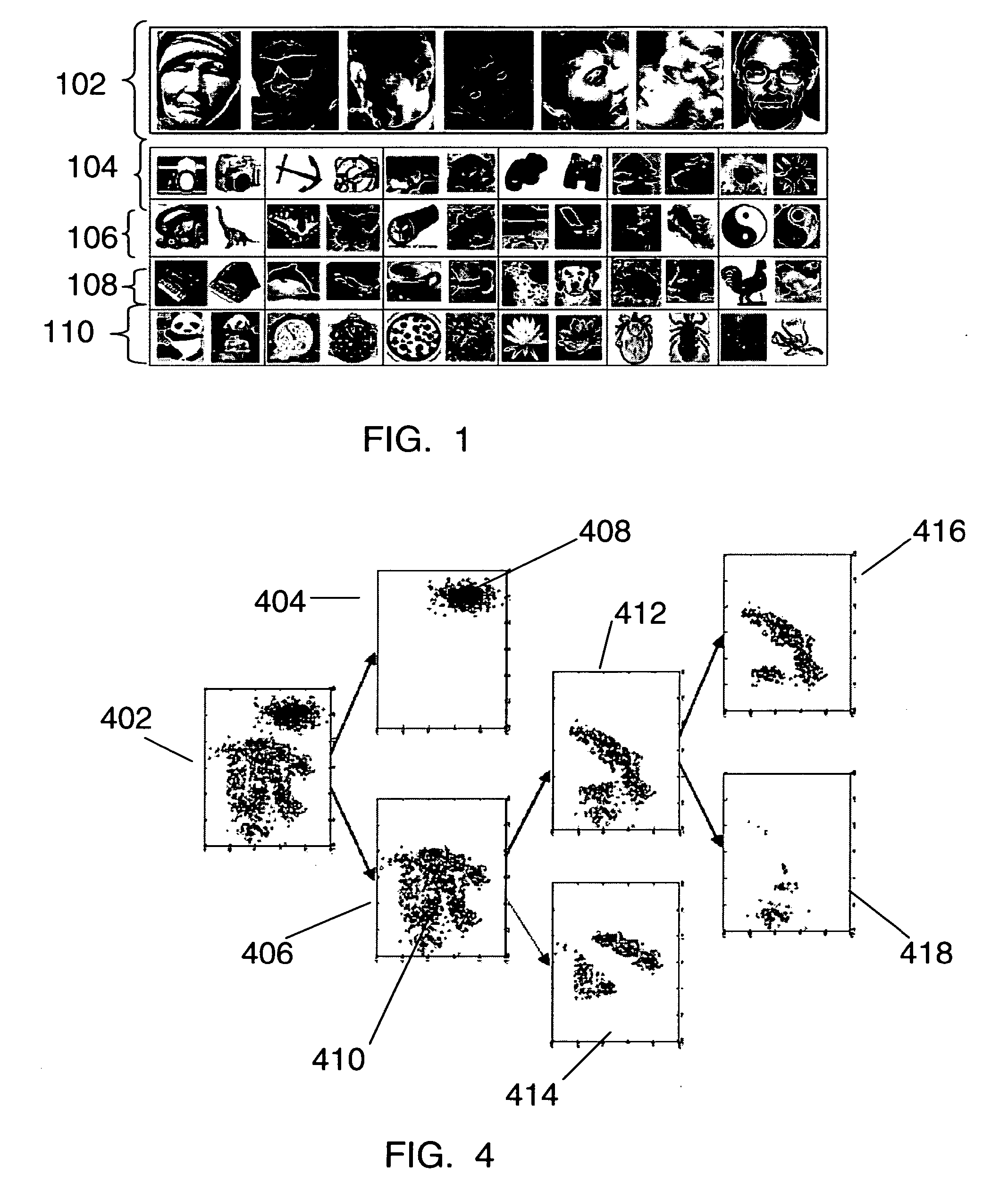

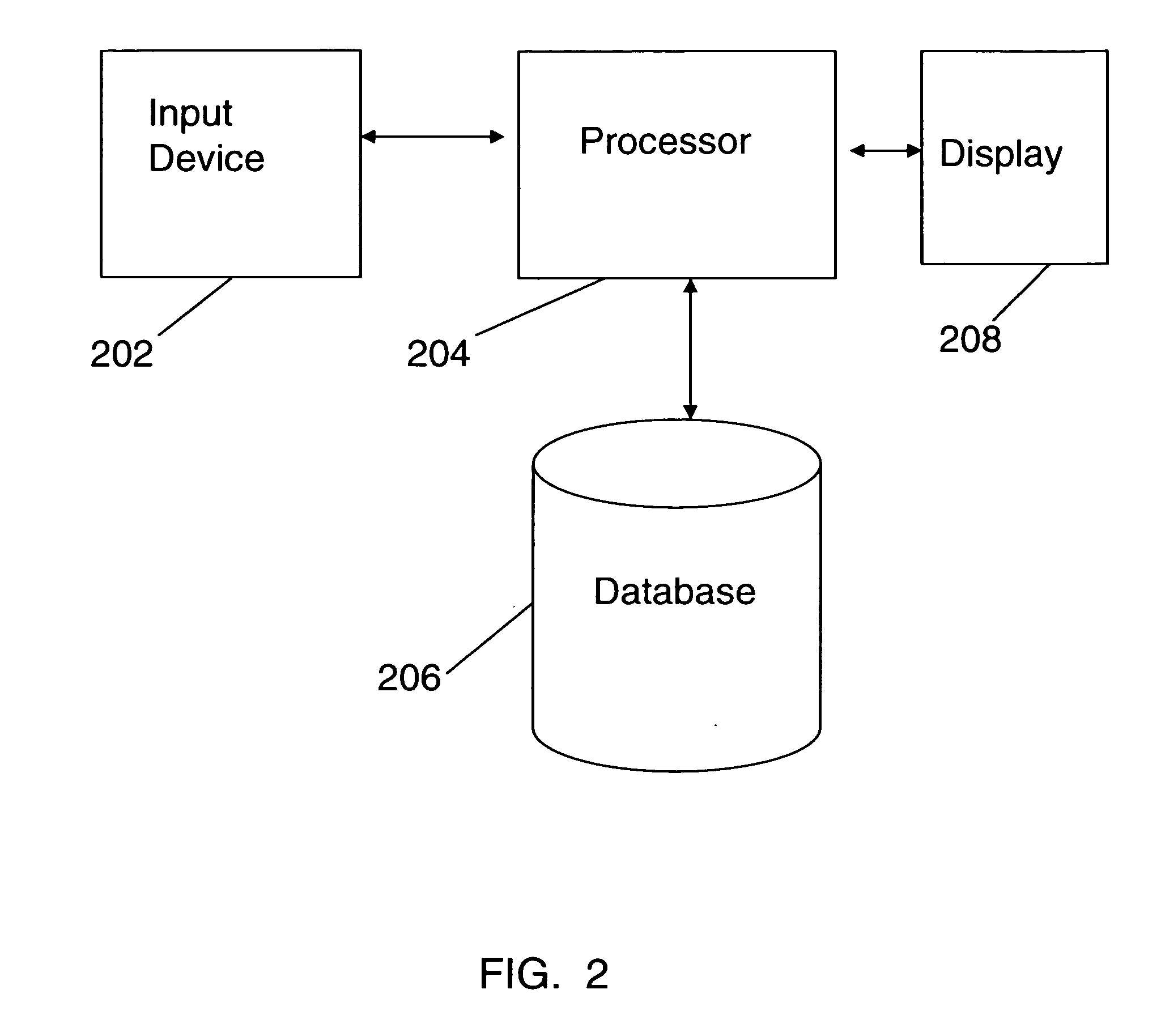

[0031] The present invention is directed to a probabilistic boosting tree framework for computing two-class and multi-class discriminative models. In the learning stage, the probabilistic boosting tree (PBT) automatically constructs a tree in which each node combines a number of weak classifiers (e.g., evidence, knowledge) into a strong classifier or conditional posterior probability. The PBT approaches the target posterior distribution by data augmentation (e.g., tree expansion) through a divide-and-conquer strategy.
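One way to picture how a node turns weak classifiers into a conditional posterior is sketched below. This is a minimal illustration, not the patent's stated procedure: it assumes AdaBoost-style weak classifiers that return -1 or +1, a weighted vote, and a logistic link from the vote to a probability. The names `strong_score`, `node_posterior`, `stumps`, and `weights` are hypothetical.

```python
import math

def strong_score(weak_classifiers, alphas, x):
    """Weighted vote H(x) = sum_t alpha_t * h_t(x), with each h_t(x) in {-1, +1}."""
    return sum(a * h(x) for a, h in zip(alphas, weak_classifiers))

def node_posterior(weak_classifiers, alphas, x):
    """Map the boosted score to q(+1 | x) using a logistic link (an assumption here)."""
    score = strong_score(weak_classifiers, alphas, x)
    return 1.0 / (1.0 + math.exp(-2.0 * score))

# Hypothetical decision stumps on a 2-D feature vector.
stumps = [
    lambda x: 1 if x[0] > 0.5 else -1,
    lambda x: 1 if x[1] > 0.2 else -1,
]
weights = [0.8, 0.3]  # illustrative weights, not learned values

p = node_posterior(stumps, weights, (0.9, 0.1))
```

With these illustrative values the first stump votes +1 and the second votes -1, so the score is 0.5 and the node reports a posterior above one half for the positive class.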

[0032] In the testing stage, the conditional probability is computed at each tree node based on the learned classifier, which guides the probability propagation in its sub-trees. The top node of the tree therefore outputs the overall posterior probability by integrating the probabilities gathered from its sub-trees. Clustering is also naturally embedded in the learning phase, and each sub-tree represents a cluster at a certain level.
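The propagation described in the testing stage can be sketched as a recursion over a binary tree. The sketch assumes a two-class problem, that each internal node weights its right and left sub-trees by q(+1|x) and 1 - q(+1|x), and that each leaf stores an empirical probability of the positive class. The `Node` class, its fields, and the numbers in the example are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

@dataclass
class Node:
    # Learned q(+1 | x) at this node; None marks a leaf.
    q_pos: Optional[Callable[[Sequence[float]], float]] = None
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    # Empirical probability of the positive class, used only at leaves.
    leaf_prob: float = 0.5

def posterior(node: Node, x: Sequence[float]) -> float:
    """Return the approximate p(y=+1 | x) by integrating sub-tree outputs."""
    if node.q_pos is None or node.left is None or node.right is None:
        return node.leaf_prob
    q = node.q_pos(x)
    # The node's classifier decides how much each sub-tree contributes.
    return q * posterior(node.right, x) + (1.0 - q) * posterior(node.left, x)

# Hypothetical two-level tree: the root is 80% confident the sample is positive.
root = Node(
    q_pos=lambda x: 0.8,
    left=Node(leaf_prob=0.1),
    right=Node(leaf_prob=0.9),
)
result = posterior(root, (0.0, 0.0))
```

Because every sub-tree contributes in proportion to the node's confidence, a sample near the decision boundary still draws on both branches instead of being committed to one of them.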

[0033] In the training stage, a tree...
