Rapid movement estimating method

A fast motion estimation technique, applicable to television, standards conversion, electrical components, and similar fields. It addresses the slow convergence of motion estimation and the reduced smoothness of the motion vector field, accelerating convergence and improving smoothness.


Embodiment Construction

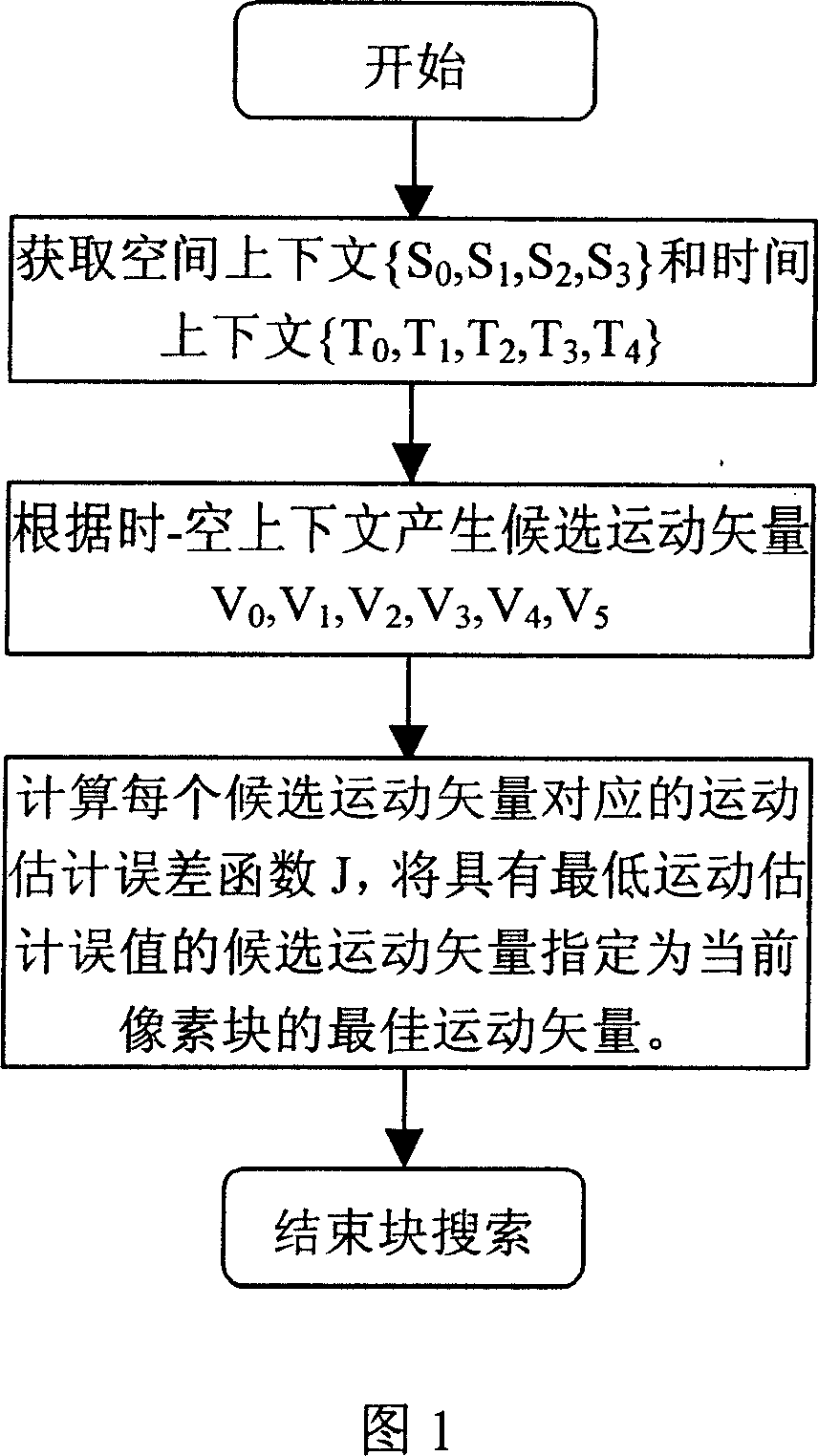

[0031] Referring to FIG. 1, the fast motion estimation method of the present invention includes the following steps:

[0032] First, divide each frame of the video image into non-overlapping image blocks. Second, determine the spatial context {S₀, S₁, S₂, S₃} and the temporal context {T₀, T₁, T₂, T₃, T₄} for each current pixel block. Then generate six candidate motion vectors (V₀, V₁, V₂, V₃, V₄, V₅), calculate the motion estimation matching error function J for each candidate motion vector, and designate the candidate with the lowest matching error as the best motion vector of the current pixel block.
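The block-splitting and candidate-selection steps above can be sketched in Python as follows. This is only an illustration under stated assumptions. This excerpt does not define the matching error function J, so the sketch substitutes a plain sum of absolute differences (SAD). It also does not spell out how the six candidates are derived from the spatial and temporal contexts, so the candidate list is simply passed in. The names `split_into_blocks`, `sad`, and `best_motion_vector` are hypothetical and do not come from the patent.

```python
from typing import List, Sequence, Tuple

Frame = List[List[int]]   # grayscale frame stored as rows of pixel values
Vector = Tuple[int, int]  # (dx, dy) displacement in pixels


def split_into_blocks(width: int, height: int, size: int) -> List[Tuple[int, int]]:
    """Top-left corners (x, y) of the non-overlapping size-by-size blocks."""
    return [(x, y)
            for y in range(0, height - size + 1, size)
            for x in range(0, width - size + 1, size)]


def sad(cur: Frame, ref: Frame, x: int, y: int, size: int, v: Vector) -> float:
    """Assumed stand-in for J: sum of absolute differences for candidate v."""
    dx, dy = v
    h, w = len(ref), len(ref[0])
    # A candidate that points outside the reference frame gets an infinite error.
    if not (0 <= x + dx and x + dx + size <= w and 0 <= y + dy and y + dy + size <= h):
        return float("inf")
    return float(sum(abs(cur[y + j][x + i] - ref[y + dy + j][x + dx + i])
                     for j in range(size) for i in range(size)))


def best_motion_vector(cur: Frame, ref: Frame, x: int, y: int, size: int,
                       candidates: Sequence[Vector]) -> Vector:
    """Return the candidate with the lowest matching error for the block at (x, y)."""
    return min(candidates, key=lambda v: sad(cur, ref, x, y, size, v))


# Usage: the reference frame is a linear ramp; the current frame is the same
# ramp moved 2 pixels right and 1 pixel down, so the true vector is (-2, -1).
ref = [[x + 10 * y for x in range(16)] for y in range(16)]
cur = [[ref[y - 1][x - 2] if x >= 2 and y >= 1 else 0 for x in range(16)]
       for y in range(16)]
candidates = [(0, 0), (1, 0), (0, 1), (-1, -1), (-2, -1), (2, 2)]  # six candidates
best = best_motion_vector(cur, ref, 4, 4, 4, candidates)
print(best)  # (-2, -1)
```

Only the supplied candidates are evaluated, so the cost per block is six error computations rather than a full search over a window. That is consistent with the method's stated aim of fast estimation, though the actual error function and candidate derivation in the patent may differ from this sketch.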

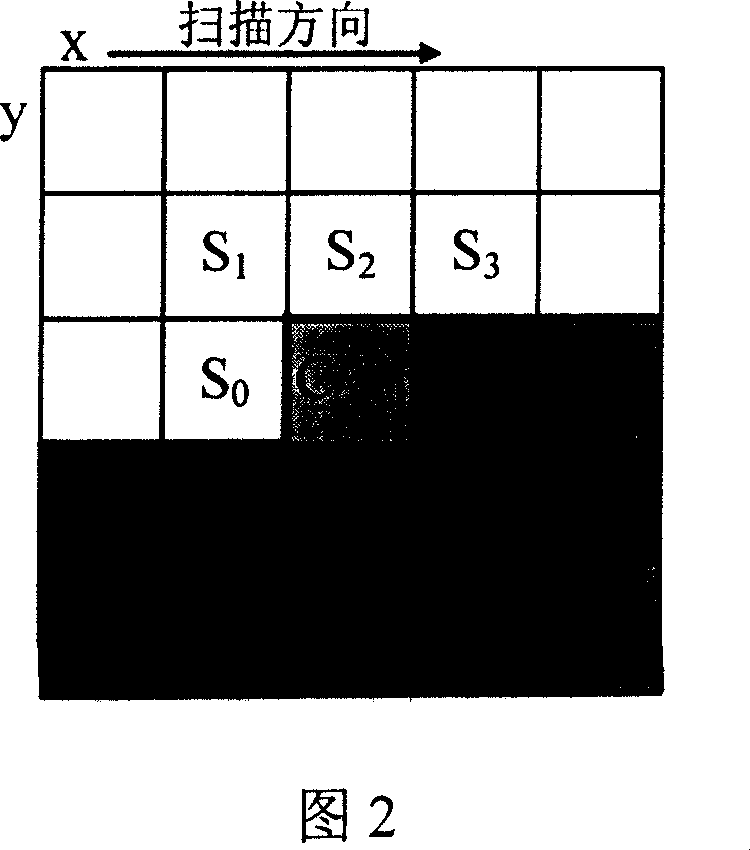

[0033] Referring also to FIG. 2, the spatial context and temporal context for each current sub-block in the above steps are determined as follows:

[0034] First, in the current frame, select the motion vector S₀ of the right and left sub-block of the current block (C), the motion vector S ...
