Fine-grained image-text retrieval method and system based on Transformer model

A fine-grained image-text retrieval technology based on the Transformer model, applied in unstructured text data retrieval, digital data information retrieval, biological neural network models, etc. It addresses the problem of unsatisfactory retrieval accuracy and improves retrieval performance, precision, and accuracy.



Embodiment

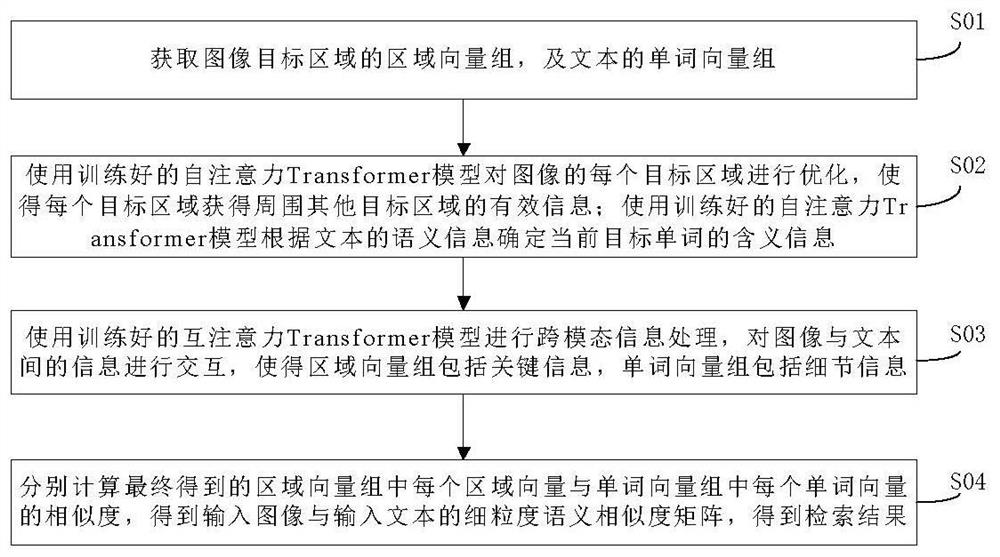

[0076] As shown in Figure 1, a fine-grained image-text retrieval method based on the Transformer model includes the following steps:

[0077] S01: Obtain the region vector group of the target regions of the image and the word vector group of the text;

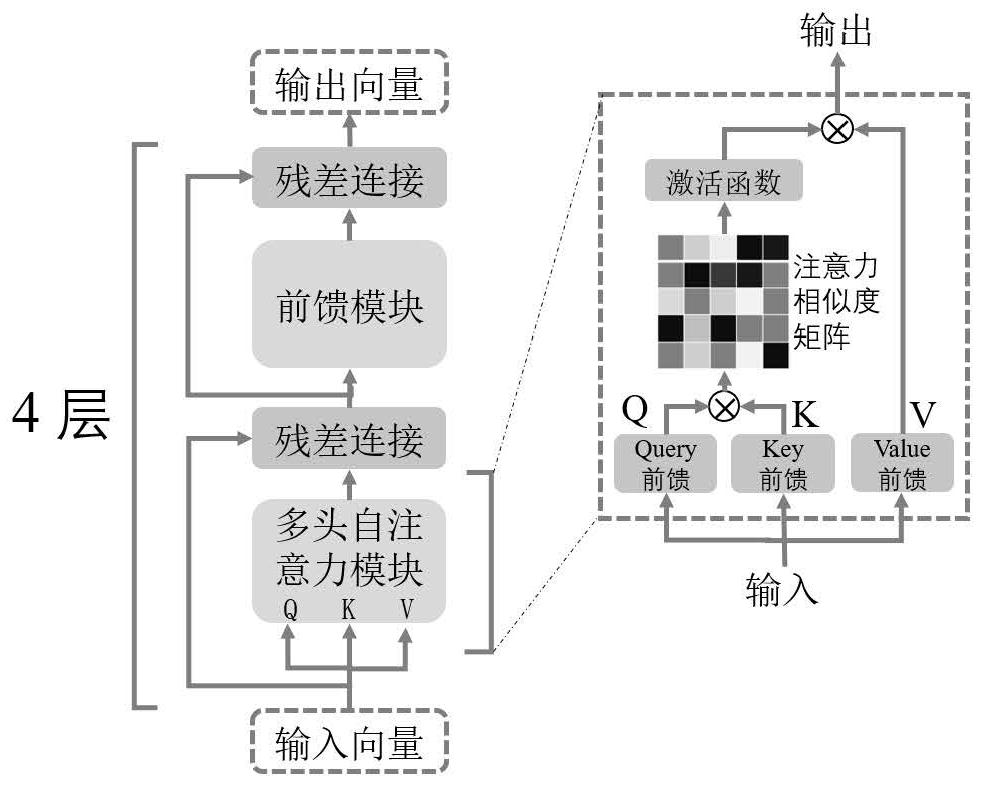

[0078] S02: Use the trained self-attention Transformer model to optimize each target region of the image, so that each target region obtains effective information from the other surrounding target regions; use the trained self-attention Transformer model to determine the meaning of the current target word according to the semantic information of the text;

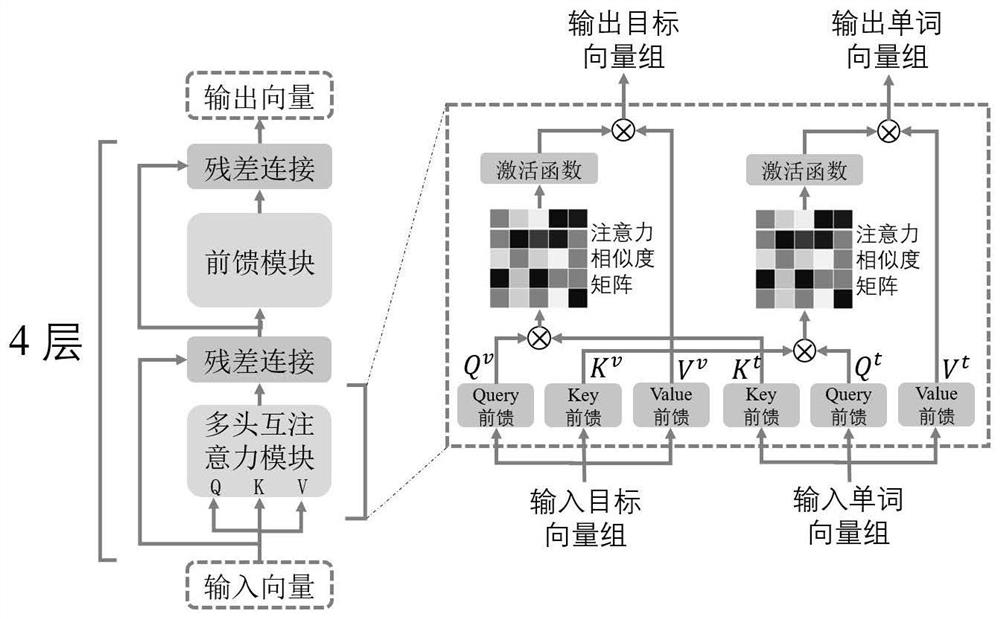

[0079] S03: Use the trained mutual-attention Transformer model to process cross-modal information, exchanging information between the image and the text, so that the region vector group includes key information and the word vector group includes detailed information;

[0080] S04: Calculate the similarity between each region vector in the finally obtained reg...
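The flow of steps S01 to S04 can be illustrated with a minimal sketch. It is not the patented implementation: the toy vectors, the helper names (`attend`, `cosine`), the use of identity projections in place of trained Transformer weights, and the residual connection are all assumptions made for illustration. Because the text of S04 is truncated above, the choice of cosine similarity between each region vector and each word vector is likewise an assumption.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(queries, context):
    # Scaled dot-product attention with identity projections (assumption:
    # a trained model would apply learned query/key/value matrices),
    # followed by a residual connection.
    d = len(queries[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in context])
        mixed = [sum(w * v[i] for w, v in zip(weights, context)) for i in range(d)]
        out.append([qi + mi for qi, mi in zip(q, mixed)])
    return out

def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

# S01: toy region vector group (2 regions) and word vector group (3 words).
regions = [[1.0, 0.0, 0.5], [0.2, 0.9, 0.1]]
words = [[0.9, 0.1, 0.4], [0.0, 1.0, 0.0], [0.3, 0.3, 0.3]]

# S02: self-attention within each modality.
regions_sa = attend(regions, regions)
words_sa = attend(words, words)

# S03: mutual (cross) attention between the two modalities.
regions_ca = attend(regions_sa, words_sa)
words_ca = attend(words_sa, regions_sa)

# S04 (assumed form): pairwise region-word similarity matrix.
similarity = [[cosine(r, w) for w in words_ca] for r in regions_ca]

for row in similarity:
    print([round(s, 3) for s in row])
```

Each row of `similarity` corresponds to one image region and each column to one word. How these scores are aggregated into a single image-text score is not specified in the visible text, so the sketch stops at the matrix.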
