Automatic calibration method for the spatial positions of multiple lidars and multiple camera sensors

A lidar and spatial-positioning technology, applied in the fields of instruments, image analysis, computing, etc. It addresses the problem that the reliability of calibration results cannot be guaranteed. Its effects are higher automatic calibration accuracy, accurate calibration results, and reduced overall error.



Examples

Embodiment 1

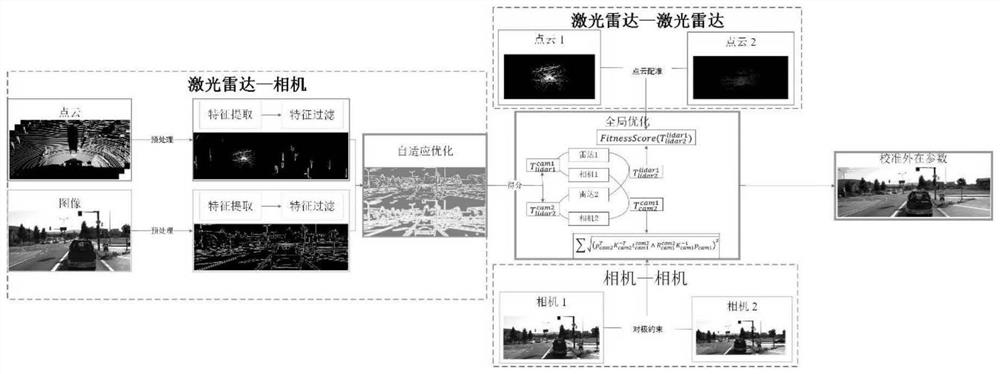

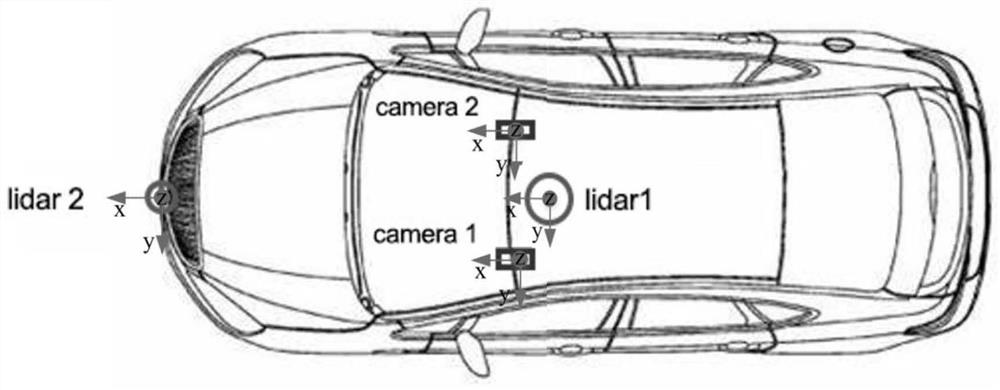

[0062] As shown in Figure 1, Embodiment 1 of the present invention proposes a method for automatically calibrating the spatial positions of multiple lidar and multiple camera sensors. The sensors are arranged as shown in Figure 2 (two lidars and two cameras in this embodiment), but their number is not limited to this. The method includes:

[0063] Obtain the spatial position of each lidar relative to a camera sensor, the spatial position between the two cameras, and the spatial position between the two lidars, thereby obtaining the spatial position relationships among multiple sets of lidars and camera sensors;
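Once pairwise relationships (lidar-camera, camera-camera, lidar-lidar) are known, the relationship for any other sensor pair can be obtained by chaining them. The following is a minimal sketch, not the patent's implementation. It assumes each spatial position is a 4x4 homogeneous rigid transform `T_a_b` mapping points from frame `b` into frame `a`. That convention and the helper names (`make_transform`, `invert_rigid`, `apply`) are illustrative assumptions.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices (composes transforms: a after b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def make_transform(yaw_rad: float, t: tuple[float, float, float]) -> Matrix:
    """Illustrative rigid transform: rotation about z by yaw_rad, then translation t."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, t[0]],
        [s, c, 0.0, t[1]],
        [0.0, 0.0, 1.0, t[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def invert_rigid(T: Matrix) -> Matrix:
    """Inverse of a rigid transform: [R | t]^-1 = [R^T | -R^T t]."""
    R_t = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    t_inv = [-sum(R_t[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [R_t[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def apply(T: Matrix, p: tuple[float, float, float]) -> tuple[float, ...]:
    """Map a 3D point p from frame b into frame a using T_a_b."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


# Hypothetical pairwise calibration results (values are made up for illustration).
T_cam1_lidar1 = make_transform(0.1, (0.2, 0.0, -0.1))
T_lidar1_lidar2 = make_transform(-0.3, (1.0, 0.5, 0.0))
T_cam2_cam1 = make_transform(0.05, (-0.5, 0.0, 0.0))

# Derived relationships obtained purely by chaining.
T_cam1_lidar2 = matmul(T_cam1_lidar1, T_lidar1_lidar2)
T_cam2_lidar2 = matmul(T_cam2_cam1, T_cam1_lidar2)
T_lidar1_cam1 = invert_rigid(T_cam1_lidar1)
```

Chaining lets a system with N sensors be described by N-1 independent transforms. A relationship that is both calibrated directly and derived through a chain can serve as a consistency check on the calibration.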

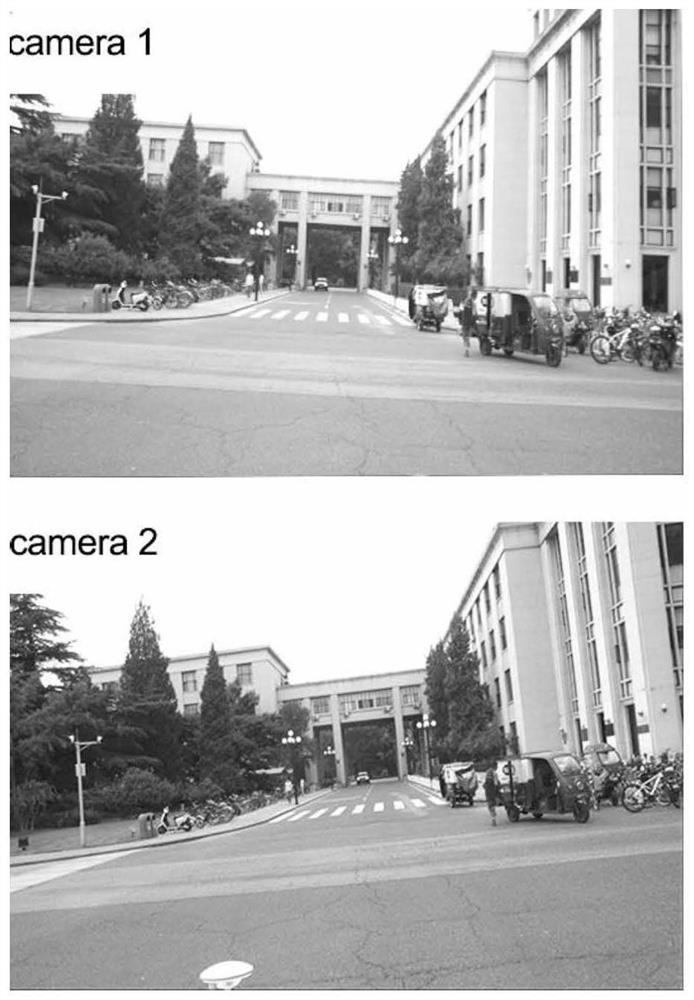

[0064] Figure 3(a) and Figure 3(b) show, respectively, the images collected by the two cameras and the point cloud data collected by the two lidars.

[0065] For the spatial position relationship between a lidar and a camera: filter the data conforming to line features from the lidar point cloud data; filter the data conforming to line features from the camera sensor image data; project the lidar data conforming to line features onto the camera s...
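The source text is truncated here, so the patent's exact filtering and projection steps are not shown. The sketch below illustrates the two generic operations named above under stated assumptions. The line-feature filter `range_discontinuity_edges` is a hypothetical stand-in: a common heuristic that keeps points where the range jumps sharply between neighbors on one scan line. The projection assumes an undistorted pinhole camera with intrinsics `fx, fy, cx, cy` and a lidar-to-camera extrinsic `R, t`, with the camera frame following the usual z-forward, x-right, y-down convention.

```python
import math

Point = tuple[float, float, float]


def range_discontinuity_edges(scan: list[Point], threshold: float = 0.5) -> list[Point]:
    """Hypothetical line-feature filter for one ordered lidar scan line:
    keep points whose range differs from either neighbour by more than
    `threshold` (metres), i.e. points at depth discontinuities."""
    ranges = [math.sqrt(x * x + y * y + z * z) for x, y, z in scan]
    edges = []
    for i in range(1, len(scan) - 1):
        jump = max(abs(ranges[i] - ranges[i - 1]), abs(ranges[i] - ranges[i + 1]))
        if jump > threshold:
            edges.append(scan[i])
    return edges


def project_points(points_lidar, R, t, fx, fy, cx, cy, width, height):
    """Transform lidar points into the camera frame (p_c = R p_l + t) and
    project them with an undistorted pinhole model. Points behind the camera
    or outside the image bounds are discarded."""
    pixels = []
    for p in points_lidar:
        xc, yc, zc = (sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))
        if zc <= 0.0:
            continue  # behind the image plane
        u = fx * xc / zc + cx
        v = fy * yc / zc + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            pixels.append((u, v))
    return pixels
```

In a line-feature calibration, the projected lidar edge pixels would then be compared against the edges extracted from the image. How the patent scores that alignment is not visible in this excerpt.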
