Pre-training method and device, equipment and storage medium

A pre-training and character technology, applied in the computer field, that addresses the problem of spending large amounts of data computing resources and computing time on text sentences, and achieves the effect of reducing the data computing resources and computing time required.

Embodiment Construction

[0063] To make the purpose, technical solutions, and advantages of the present application clearer, the implementations of the present application are described in further detail below with reference to the accompanying drawings.

[0064] An embodiment of the present application provides a pre-training method, which can be implemented by a server. The server may be a single server or a server cluster composed of multiple servers.

[0065] The server may include a processor, a memory, and a communication component, with the processor connected to both the memory and the communication component.

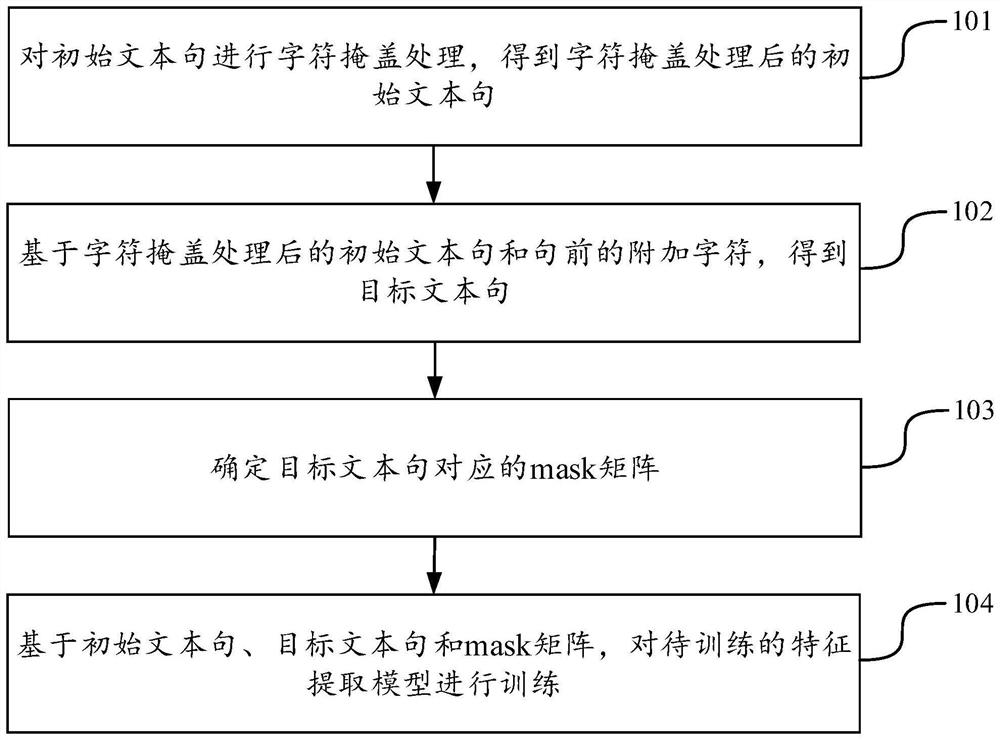

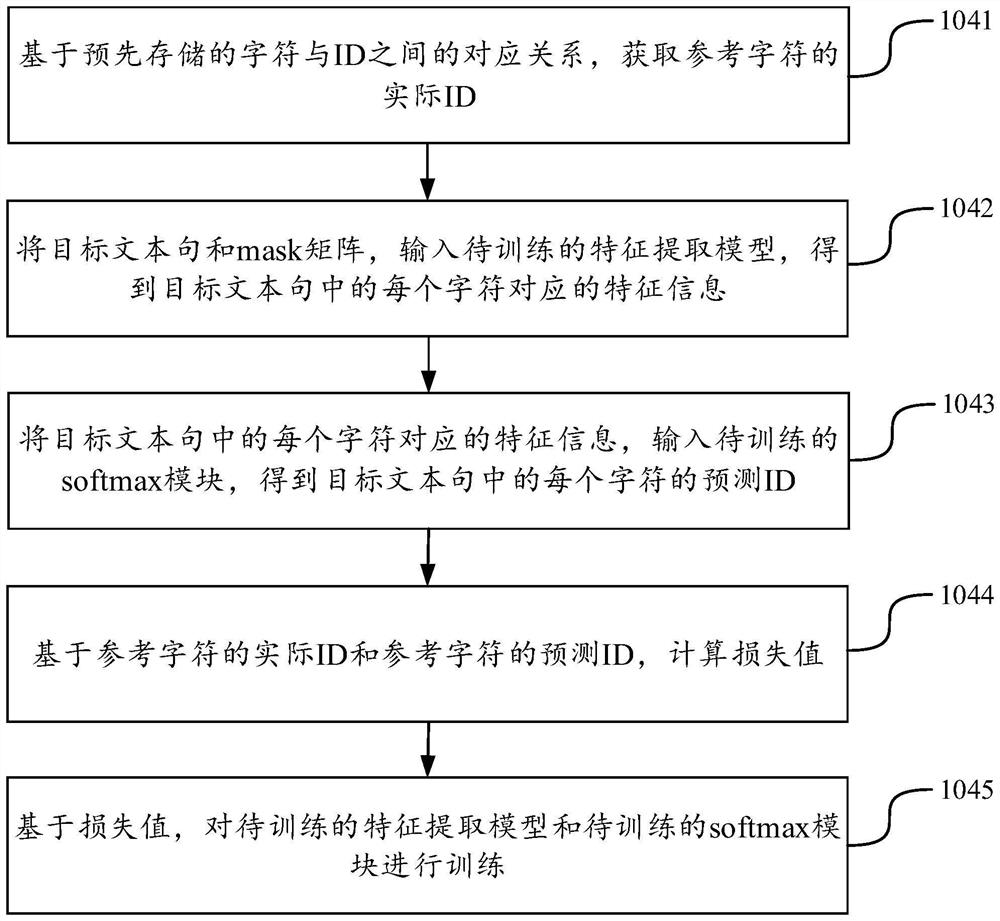

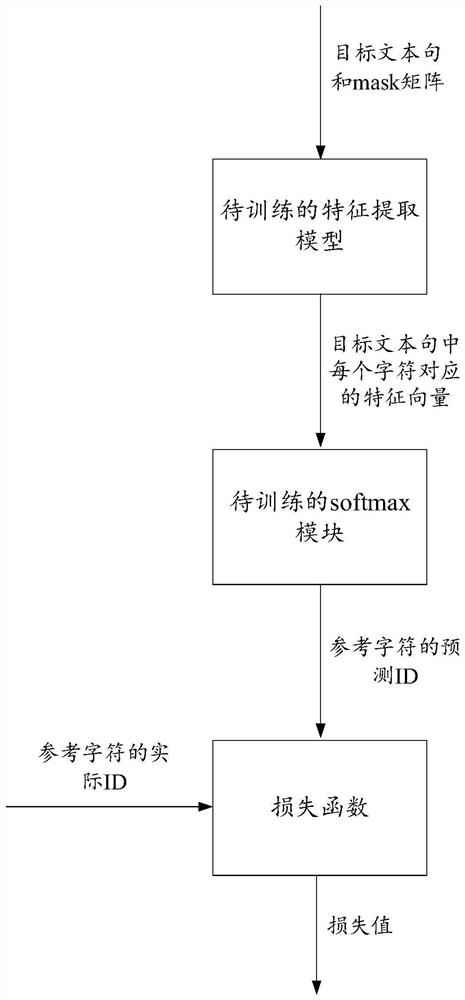

[0066] The processor may be a CPU (Central Processing Unit). The processor can be used to read instructions and process data, for example, to perform character masking on the initial text sentence, determine the target text sentence, determine the mask matrix corresponding to the target text sentence, train the feature extraction mo...
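The first processing steps named above (character masking, the resulting target text sentence, and a corresponding mask matrix) can be illustrated with a minimal sketch. This is not the method of the application. The excerpt does not define the masking rule or the layout of the mask matrix, so everything here is an assumption made for illustration: the `[MASK]` placeholder, the `mask_ratio` parameter, the function names `mask_characters` and `build_mask_matrix`, and the convention that entry (i, j) of the matrix is 0 when position j is masked and 1 otherwise.

```python
import random

MASK_TOKEN = "[MASK]"  # hypothetical placeholder; the source does not name one


def mask_characters(initial_sentence, mask_ratio=0.15, seed=0):
    """Replace a random subset of characters with MASK_TOKEN.

    Returns the target sentence as a list of tokens, plus the sorted
    positions that were masked. The random selection rule is an assumption.
    """
    chars = list(initial_sentence)
    if not chars:
        return [], []
    rng = random.Random(seed)
    # Mask at least one character, and never more than the sentence length.
    n_mask = min(len(chars), max(1, round(len(chars) * mask_ratio)))
    masked_positions = sorted(rng.sample(range(len(chars)), n_mask))
    target = chars[:]
    for i in masked_positions:
        target[i] = MASK_TOKEN
    return target, masked_positions


def build_mask_matrix(length, masked_positions):
    """Build a length x length matrix of 0/1 entries.

    Assumed convention: entry (i, j) is 0 if position j is masked, else 1.
    """
    masked = set(masked_positions)
    return [[0 if j in masked else 1 for j in range(length)]
            for _ in range(length)]


if __name__ == "__main__":
    target, positions = mask_characters("pre-training", mask_ratio=0.25, seed=1)
    matrix = build_mask_matrix(len(target), positions)
    print(target)
    print(positions)
    print(matrix[0])
```

Fixing the seed keeps the masked positions reproducible across runs, which makes a sketch like this easier to inspect. A real pre-training pipeline would typically operate on tokenizer output and tensors instead of Python lists.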
