Machine vision behavior intention prediction method applied to intelligent building

A machine vision method in the field of intelligent building technology, classified under instruments, computer components, and character and pattern recognition. It addresses the problem that existing prediction methods cannot accurately identify and predict user behavior in real time, and aims to achieve high accuracy, reduce manual operation, and improve the intelligence of the building.



Examples

Embodiment 1

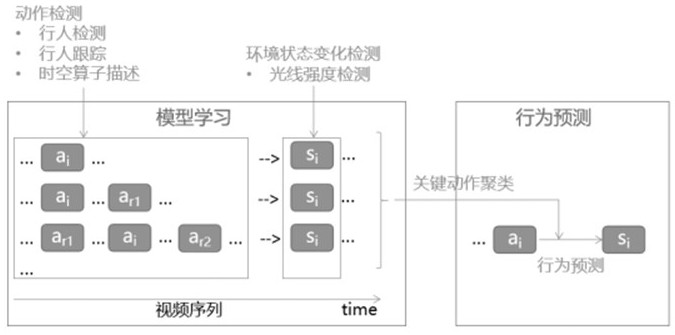

[0039] Referring to Figure 1 and Figure 2, this embodiment provides a machine vision behavior intention prediction method applied to intelligent buildings, comprising the following steps:

[0040] S1. First, build a pedestrian detection model: use computer vision to determine whether pedestrians are present in the video image sequence, locate them precisely, and collect pedestrian pictures. A residual network then extracts features from the pedestrian pictures, and a multi-scale detection module detects pedestrians of different scales. The fully connected layer of the residual network then regresses against prior boxes to output the bounding box, confidence, and class probability of each pedestrian detection, yielding the pedestrian detection result;
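As an illustration of the prior-box regression in S1, the following is a minimal sketch of how one raw network output might be decoded into a bounding box and a score. The source does not give the parameterization; the sigmoid and exponential offsets used here are an assumption borrowed from common anchor-based detectors, and the names `decode_prediction`, `keep_confident`, `stride`, and the 0.5 threshold are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    box: tuple    # (x_center, y_center, width, height) in pixels
    score: float  # confidence multiplied by the pedestrian class probability


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_prediction(raw, cell_xy, prior_wh, stride):
    """Decode one raw prediction relative to a prior box.

    ASSUMPTION: anchor-style parameterization, not confirmed by the source.
    raw      -- (tx, ty, tw, th, confidence_logit, class_logit)
    cell_xy  -- (column, row) of the grid cell that produced the prediction
    prior_wh -- (width, height) of the prior box in pixels
    stride   -- pixels per grid cell at this detection scale
    """
    tx, ty, tw, th, conf_logit, cls_logit = raw
    cx, cy = cell_xy
    pw, ph = prior_wh
    # The centre is an offset inside the grid cell, scaled back to pixels.
    x = (sigmoid(tx) + cx) * stride
    y = (sigmoid(ty) + cy) * stride
    # Width and height rescale the prior box.
    w = pw * math.exp(tw)
    h = ph * math.exp(th)
    score = sigmoid(conf_logit) * sigmoid(cls_logit)
    return Detection(box=(x, y, w, h), score=score)


def keep_confident(detections, threshold=0.5):
    """Drop detections whose score falls below a (hypothetical) threshold."""
    return [d for d in detections if d.score >= threshold]
```

Running the same decoding at several strides with different prior boxes would correspond to the multi-scale detection described in the step; a real pipeline would normally also apply non-maximum suppression, which is omitted here.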

[0041] The pedestrian detection model is trained on the COCO dataset; a script extracts the pedestrian subset from this multi-class object dataset to obtain the pre-trai...
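The extraction script mentioned in [0041] is not reproduced in the source. As a rough sketch of what such a script could look like, the function below filters a COCO-format annotation dictionary (with the standard `images`, `annotations`, and `categories` keys) down to the `person` category. The function name `extract_person_subset` is hypothetical.

```python
def extract_person_subset(coco: dict, category_name: str = "person") -> dict:
    """Keep only annotations of one category, and the images that contain them.

    `coco` is a dictionary in COCO annotation format, for example the result
    of json.load() on an instances_*.json file.
    """
    wanted_ids = {c["id"] for c in coco["categories"] if c["name"] == category_name}
    annotations = [a for a in coco["annotations"] if a["category_id"] in wanted_ids]
    image_ids = {a["image_id"] for a in annotations}
    images = [im for im in coco["images"] if im["id"] in image_ids]
    categories = [c for c in coco["categories"] if c["id"] in wanted_ids]
    return {"images": images, "annotations": annotations, "categories": categories}


if __name__ == "__main__":
    # Tiny in-memory stand-in for a real annotation file.
    sample = {
        "images": [{"id": 1, "file_name": "a.jpg"}, {"id": 2, "file_name": "b.jpg"}],
        "annotations": [
            {"id": 10, "image_id": 1, "category_id": 1, "bbox": [5, 5, 20, 40]},
            {"id": 11, "image_id": 2, "category_id": 3, "bbox": [0, 0, 50, 30]},
        ],
        "categories": [{"id": 1, "name": "person"}, {"id": 3, "name": "car"}],
    }
    subset = extract_person_subset(sample)
    print(len(subset["images"]), len(subset["annotations"]))
```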

Embodiment 2

[0078] The method of the present invention was tested and verified. Video data of behaviors such as entering and leaving a conference room and switching lights were collected, where entering the door (a1) is the key action, touching tables and chairs (a2, a3) are irrelevant actions, and the light changing from dark to bright after being switched on is the environmental state change s1;

[0079] From one training video (containing 6 occurrences of a1 and s1 and several occurrences of a2 and a3), the set of actions preceding each environmental state change s1 is clustered, and the clustering identifies a1 as the key action. The prediction method is then applied to another video, in which a total of 6 people enter the meeting room; a prediction is made whenever the key action a1 occurs while the light is dark. This shows that the method of the present invention can meet the requirement of behavior prediction accuracy.
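The idea in this embodiment can be sketched in a few lines. The source does not describe the clustering algorithm, so the sketch below substitutes a plain frequency count: it picks the action that most often appears in a short window before each state change, then predicts the state change when that action occurs while the light is dark. The function names, the `window` parameter, and the sample event log are all hypothetical.

```python
from collections import Counter


def find_key_action(events, state_change="s1", window=2):
    """Return the action seen most often just before `state_change`.

    ASSUMPTION: a frequency count stands in for the clustering step, whose
    details the source does not give. `events` is a time-ordered list of
    labels such as "a1", "a2", "s1".
    """
    counts = Counter()
    for i, event in enumerate(events):
        if event != state_change:
            continue
        preceding = events[max(0, i - window):i]
        # Count each action at most once per state change.
        counts.update({a for a in preceding if a != state_change})
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def predict_light_on(action, light_is_dark, key_action):
    """Predict the state change only when the key action occurs in the dark."""
    return light_is_dark and action == key_action


if __name__ == "__main__":
    training_log = ["a2", "a1", "s1", "a3", "a1", "s1", "a2", "a3", "a1", "s1"]
    key = find_key_action(training_log)
    print(key, predict_light_on("a1", True, key), predict_light_on("a2", True, key))
```

Counting each action once per state change keeps a frequently repeated irrelevant action, such as touching a chair several times, from outweighing the action that actually precedes every change.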
