Unmanned aerial vehicle obstacle avoidance method based on deep reinforcement learning

A technology combining reinforcement learning and unmanned aerial vehicles, applied in the field of vehicle position/route/height control, non-electric variable control, instruments, etc. It addresses the problem of an unstable training process and achieves effects including more stable training and improved applicability, reliability, and scalability.

Embodiment Construction

[0028] In order to enable those skilled in the art to better understand the technical solutions of the present invention, the present invention will be further described in detail below in conjunction with specific embodiments.

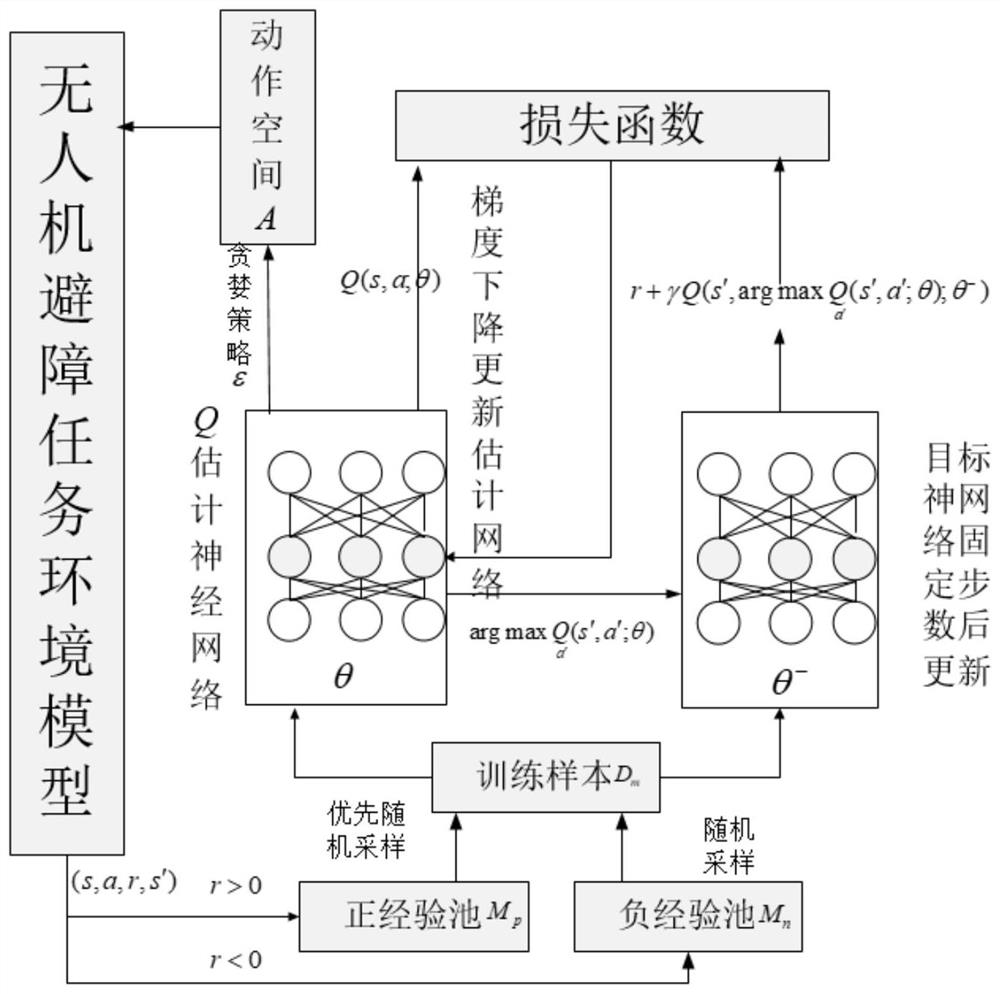

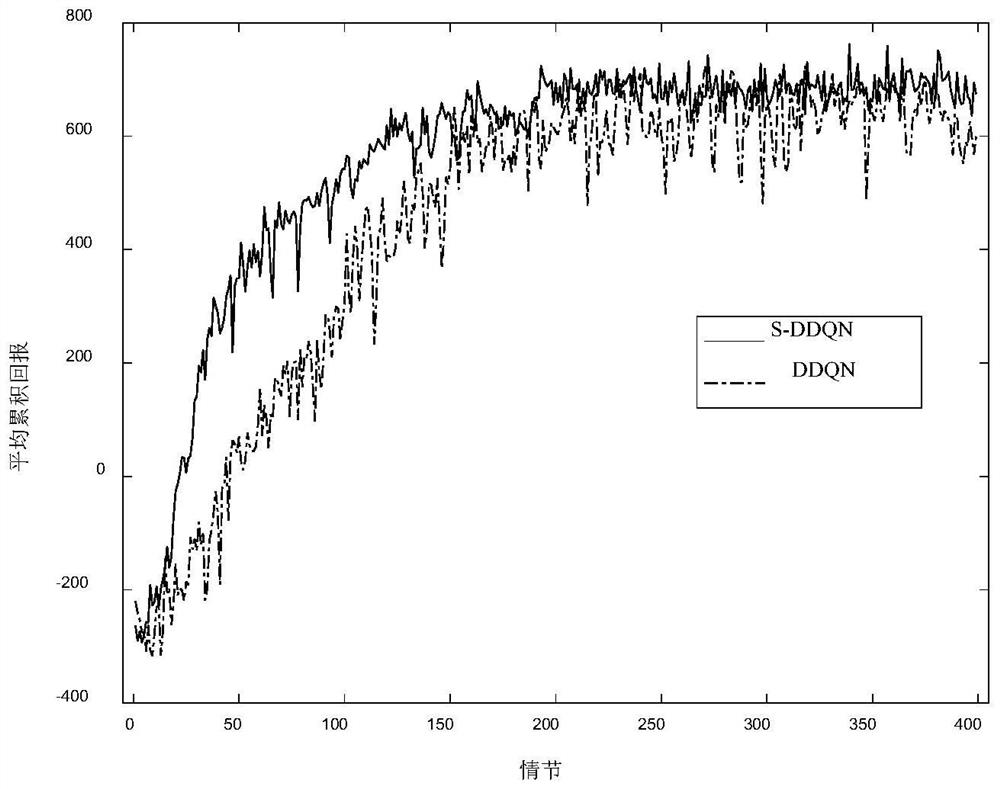

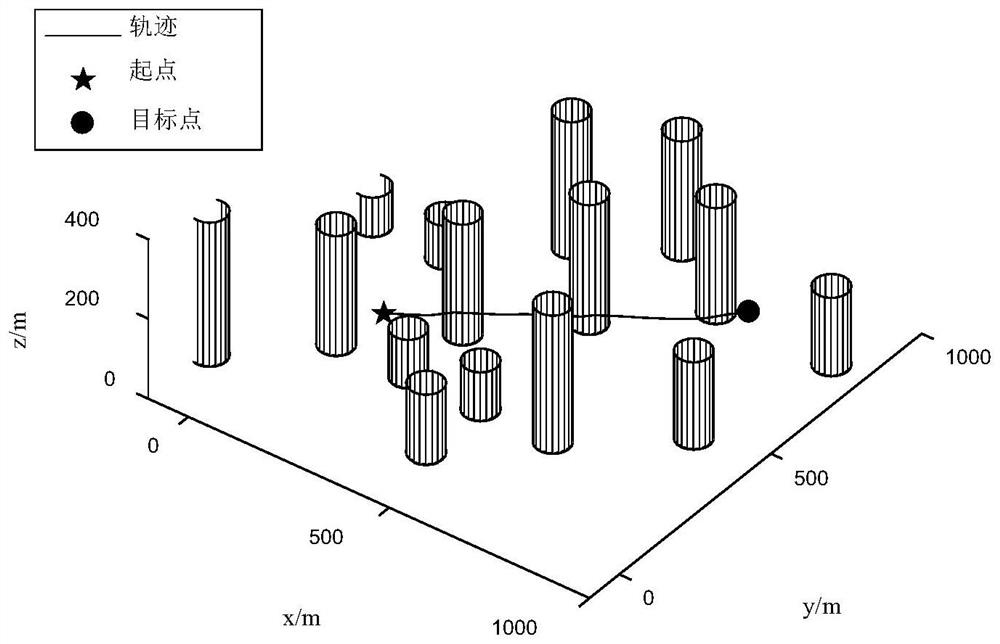

[0029] The UAV obstacle avoidance method based on deep reinforcement learning of the present invention follows the flow chart shown in Figure 1. The UAV flies in an environment containing unknown obstacles. After an action is selected according to the greedy strategy, executing that action interacts with the environment and produces a new state, and the reward resulting from the state change is calculated. The algorithm stores the UAV's state before the action, the action taken, the reward obtained, and the state after executing the action in either a positive or a negative experience pool, according to the size of the reward value. The algorithm draws samples from the two experience pools to form train...
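The interaction loop in [0029] (greedy action selection, storing (state, action, reward, next state) tuples in a positive or negative pool by reward, then sampling from both pools) can be sketched as below. This is a minimal illustration under stated assumptions, not the patented implementation: the "greedy strategy" is assumed to be ε-greedy over Q-values, the pool split is assumed to use a reward threshold of 0 (rewards equal to the threshold go to the negative pool), and the 50/50 sampling ratio, capacity, and all names (`Transition`, `DualExperiencePool`, `select_action`) are hypothetical.

```python
import random
from collections import deque
from typing import Any, List, NamedTuple, Sequence


class Transition(NamedTuple):
    """One interaction step: state before the action, action, reward, state after."""
    state: Any
    action: int
    reward: float
    next_state: Any


def select_action(q_values: Sequence[float], epsilon: float) -> int:
    """Assumed epsilon-greedy choice: explore with probability epsilon, else take argmax Q."""
    if random.random() < epsilon:
        return random.randrange(len(q_values))  # explore: uniform random action
    return max(range(len(q_values)), key=lambda i: q_values[i])  # exploit: greedy action


class DualExperiencePool:
    """Hypothetical positive/negative experience pools split by reward value."""

    def __init__(self, capacity: int = 10_000, threshold: float = 0.0) -> None:
        # Bounded deques drop the oldest transition once capacity is reached.
        self.positive: deque = deque(maxlen=capacity)
        self.negative: deque = deque(maxlen=capacity)
        self.threshold = threshold  # assumed split point, not given in the source

    def store(self, t: Transition) -> None:
        # Route the transition into a pool according to the size of its reward.
        if t.reward > self.threshold:
            self.positive.append(t)
        else:
            self.negative.append(t)

    def sample(self, batch_size: int, positive_ratio: float = 0.5) -> List[Transition]:
        # Draw from both pools; the ratio is an illustrative assumption.
        n_pos = min(int(batch_size * positive_ratio), len(self.positive))
        n_neg = min(batch_size - n_pos, len(self.negative))
        # If the negative pool ran short, top up from the positive pool.
        n_pos = min(batch_size - n_neg, len(self.positive))
        batch = random.sample(list(self.positive), n_pos) + random.sample(list(self.negative), n_neg)
        random.shuffle(batch)  # mix positive and negative samples within the batch
        return batch


if __name__ == "__main__":
    pool = DualExperiencePool(capacity=1000)
    pool.store(Transition(state=(0, 0), action=1, reward=1.0, next_state=(0, 1)))
    pool.store(Transition(state=(0, 1), action=2, reward=-5.0, next_state=(0, 1)))
    print(pool.sample(batch_size=2))
```

Keeping the two pools separate lets each training batch contain a controlled share of rare collision (negative) or goal-reaching (positive) experience, rather than whatever mix uniform replay happens to draw; the top-up step keeps the batch full when one pool is still small.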
