Cross-modal pedestrian re-identification method based on double-transformation alignment and blocking

Cross-modal pedestrian re-identification technology, applied in the fields of character and pattern recognition, instruments, computer components, etc. It addresses problems such as semantic misalignment in image space and inconsistent content semantics, which limit the robustness and effectiveness of person re-identification.


Embodiment Construction

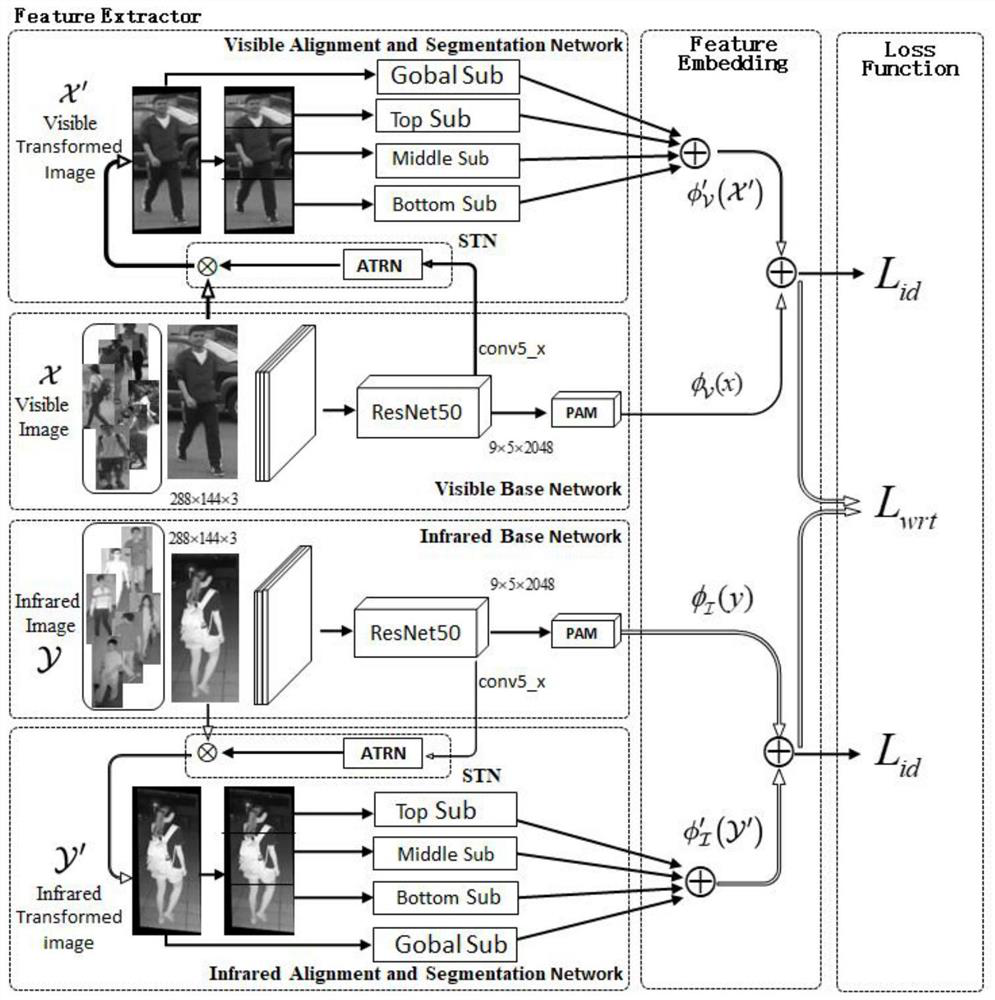

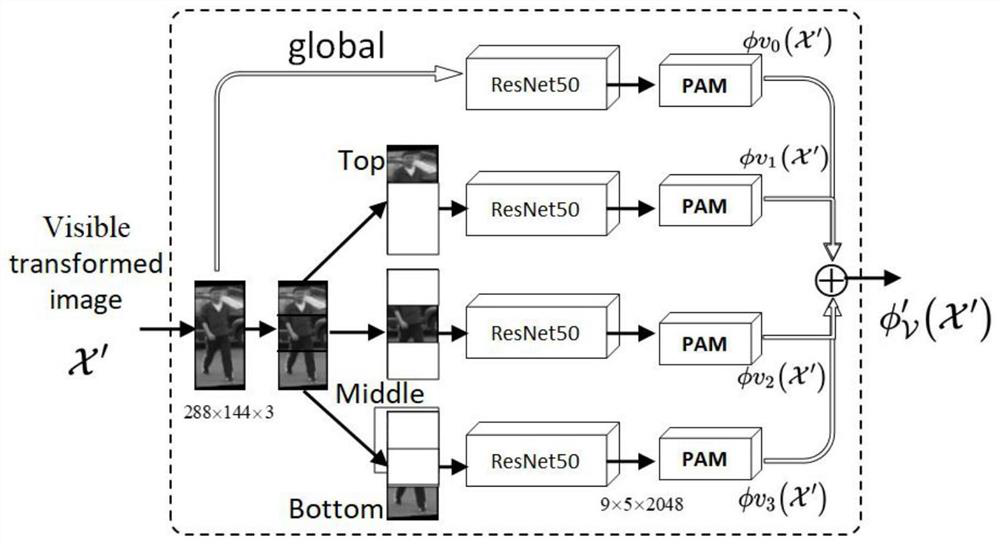

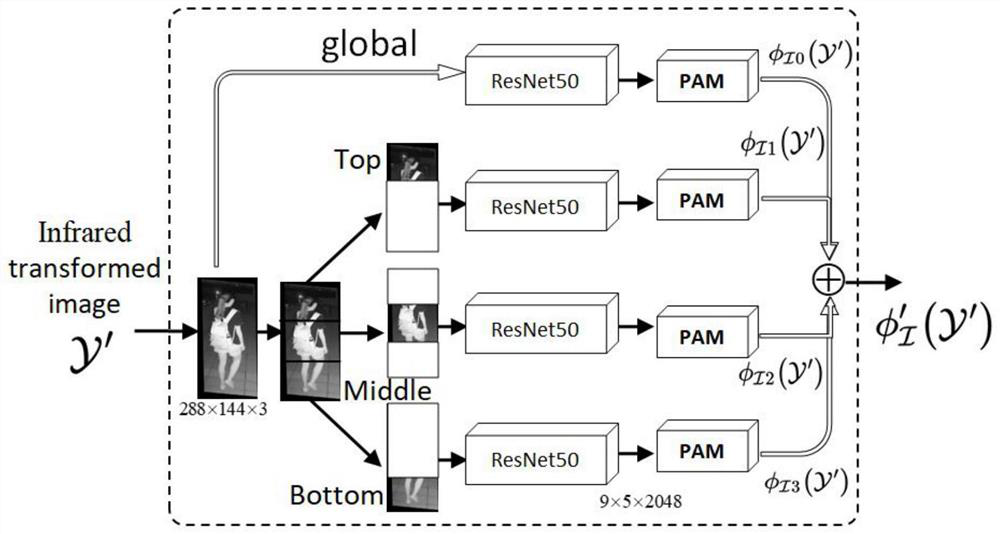

[0016] The present invention is further described below with reference to Figure 1, Figure 2 and Figure 3:

[0017] The network structure and principle of the DTASN model are as follows:

[0018] This network framework learns discriminative feature representations and a distance metric end-to-end through multi-path dual-alignment and partitioning (blocking) networks. The framework consists of three components: (1) a feature extraction module, (2) a feature embedding module, and (3) a loss calculation module. The backbone of every path is the deep residual network ResNet50. Because little training data is available, and to speed up convergence during training, the present invention initializes the network with a pre-trained ResNet50 model. To strengthen attention to local features, the present invention applies a position attention module on each path.
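
Paragraph [0018] names the building blocks of each path but not their internals. The NumPy sketch below illustrates two of them for a single path, under stated assumptions: the position attention module is assumed to follow the DANet-style formulation (1x1 query/key/value projections, a softmax over all spatial positions, and a residual scaled by `gamma`), and the "block" branch is assumed to split the attended feature map into equal horizontal stripes that are average-pooled into local descriptors. The names `position_attention`, `stripe_pool`, `w_q`, `w_k`, `w_v`, `gamma` and `num_parts` are hypothetical; the 1x1 convolutions are replaced by plain matrices, and neither the ResNet50 backbone nor the dual-transformation alignment is modelled.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def position_attention(feat, w_q, w_k, w_v, gamma):
    """Hypothetical DANet-style position attention on one feature map (an assumption,
    not the patent's confirmed design).

    feat: (C, H, W) feature map, e.g. a stand-in for a ResNet50 stage output.
    w_q, w_k: (C', C) matrices standing in for 1x1 query/key convolutions.
    w_v: (C, C) matrix standing in for the 1x1 value convolution.
    gamma: residual scale (learnable and initialised to 0 in DANet).
    """
    c, h, w = feat.shape
    x = feat.reshape(c, h * w)           # flatten spatial positions: (C, N)
    q, k, v = w_q @ x, w_k @ x, w_v @ x  # projections: (C', N), (C', N), (C, N)
    attn = softmax(q.T @ k, axis=-1)     # attn[j, i]: weight of position i for position j
    out = v @ attn.T                     # out[:, j] = sum_i attn[j, i] * v[:, i]
    return (gamma * out + x).reshape(c, h, w)


def stripe_pool(feat, num_parts):
    """Hypothetical block partition: average-pool `num_parts` horizontal stripes.

    Returns a (num_parts, C) array, one local descriptor per stripe.
    """
    c, h, w = feat.shape
    if not 1 <= num_parts <= h:
        raise ValueError("num_parts must be between 1 and the feature-map height")
    bounds = np.linspace(0, h, num_parts + 1).astype(int)  # stripe row boundaries
    return np.stack([feat[:, bounds[i]:bounds[i + 1], :].mean(axis=(1, 2))
                     for i in range(num_parts)])


# Usage on a random stand-in for one path's backbone output.
rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 6, 4))
w_q, w_k = rng.standard_normal((2, 8)), rng.standard_normal((2, 8))
w_v = rng.standard_normal((8, 8))
attended = position_attention(feat, w_q, w_k, w_v, gamma=0.1)
parts = stripe_pool(attended, num_parts=3)
print(parts.shape)  # (3, 8)
```

With `gamma` at zero the module returns its input unchanged, so a DANet-style initialisation lets each path start from the pre-trained backbone features and add spatial context gradually; whether the patent initialises its module this way is not stated in the text.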

[0019] For visible light and infrared cross-modal pedestrian r...
