Underwater acoustic sensor network deep sea target positioning method based on glancing angle sound ray correction

An underwater acoustic sensor network target positioning technology, applicable to positioning, direction finding, and instruments that use ultrasonic, sonic, or infrasonic waves. It addresses sound ray propagation distance errors, non-uniform sound speed, and nodes that drift easily; it corrects the influence of these factors and improves positioning accuracy.


AI Technical Summary

Problems solved by technology

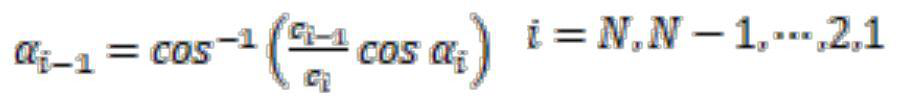

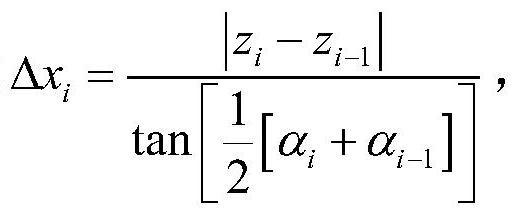


Examples

Embodiment 1

[0049] Embodiment 1: Simulation of how errors in the measured sound ray propagation time affect the performance of the traditional sound ray correction method.

[0050] Target positioning based on traditional sound ray correction requires the node and the target to be clock-synchronized. If their clocks are not synchronized, the measured sound ray propagation time contains errors. This embodiment simulates how the traditional sound ray correction method performs when such errors are present.
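The error mechanism in [0050] can be sketched as follows, assuming a one-way propagation time is converted to range with a single effective sound speed `c_eff` (an assumption; the text does not say how range is derived from time). Under that assumption a fixed clock offset becomes a range bias of `c_eff` times the offset, and zero-mean timing noise with standard deviation σ adds range scatter with standard deviation `c_eff`·σ. The function name and parameters are hypothetical.

```python
import numpy as np

def simulate_range_error(true_time, c_eff, clock_offset, noise_std, n_trials, seed=0):
    """Monte Carlo sketch of range error caused by travel-time measurement error.

    true_time    : true one-way sound ray propagation time (s)
    c_eff        : hypothetical effective sound speed used to convert time to range (m/s)
    clock_offset : fixed node-target clock offset (s), modelling asynchronous clocks
    noise_std    : standard deviation of zero-mean Gaussian timing noise (s)
    Returns (mean range error, RMS range error) in metres.
    """
    rng = np.random.default_rng(seed)
    t_measured = true_time + clock_offset + rng.normal(0.0, noise_std, n_trials)
    range_error = c_eff * (t_measured - true_time)
    return range_error.mean(), np.sqrt(np.mean(range_error ** 2))

# A 1 ms clock offset at 1500 m/s biases every range by about 1.5 m.
print(simulate_range_error(1.0, 1500.0, 1e-3, 0.0, 1000))
```

Sweeping `noise_std` (the square root of the error variance) with `clock_offset` set to zero reproduces, in this simplified model, the idea of testing a correction method against increasing timing-error variance.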

[0051] The node is located at (50 m, 100 m, 35 m) and the target at (1000 m, 800 m, 1000 m). The relevant parameters are set in MunkB_ray.env, and the bellhop command is then run to generate the MunkB_ray.arr file, from which the sound ray propagation time is obtained. When the propagation time measurement has no error and the error variance ...
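The file name MunkB_ray.env points to the Munk deep-water sound speed profile. The sketch below does not read the .env file; it assumes the canonical Munk formula (sound channel axis at 1300 m, axis speed 1500 m/s, ε = 0.00737) and computes the node-to-target straight-line distance together with a straight-line travel time, a baseline that ignores ray bending and can be compared with the arrival time BELLHOP reports. Function names are hypothetical.

```python
import math

def munk_sound_speed(z, c_axis=1500.0, z_axis=1300.0, scale=1300.0, eps=0.00737):
    """Canonical Munk profile (assumed): sound speed in m/s at depth z in metres."""
    eta = 2.0 * (z - z_axis) / scale
    return c_axis * (1.0 + eps * (eta - 1.0 + math.exp(-eta)))

def straight_line_time(p, q, ssp, n=2000):
    """Travel time along the straight segment p -> q, ignoring ray bending.

    Depth varies linearly along the segment, so the mean slowness along the path
    equals the mean of 1/c over evenly spaced depths (midpoint rule).
    """
    z0, z1 = p[2], q[2]
    mean_slowness = sum(1.0 / ssp(z0 + (k + 0.5) * (z1 - z0) / n) for k in range(n)) / n
    return math.dist(p, q) * mean_slowness

node = (50.0, 100.0, 35.0)        # Embodiment 1 node position (m)
target = (1000.0, 800.0, 1000.0)  # Embodiment 1 target position (m)
d = math.dist(node, target)
t_straight = straight_line_time(node, target, munk_sound_speed)
print(f"straight-line distance {d:.3f} m, straight-line travel time {t_straight:.6f} s")
```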

Embodiment 2

[0054] Embodiment 2: Performance simulation of the deep-sea target positioning method based on grazing angle sound ray correction, with asynchronous clocks, a fixed horizontal distance, and targets at different depths of the sound speed profile.

[0055] The underwater environment is complex, so strict clock synchronization between underwater nodes and targets is difficult to achieve. For the case where asynchronous node and target clocks introduce a small error into the measured sound ray propagation time, the positioning method based on traditional sound ray correction is compared with the deep-sea target positioning method based on grazing angle sound ray correction proposed by the present invention.
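The text does not detail the grazing angle correction itself. As a generic illustration only, not the patented algorithm, the sketch below traces a ray through thin horizontal layers using Snell's law, cos θ / c = constant, with θ the grazing angle, and finds by bisection the launch grazing angle whose ray reaches the target's horizontal distance (the eigenray). It assumes a range-independent profile, the canonical Munk profile, and a purely downward ray with no turning point; the last assumption holds between 35 m and 1000 m because Munk sound speed decreases with depth above the 1300 m axis. All names are hypothetical.

```python
import math

def munk(z):
    """Canonical Munk profile (assumed): axis at 1300 m, 1500 m/s, eps = 0.00737."""
    eta = 2.0 * (z - 1300.0) / 1300.0
    return 1500.0 * (1.0 + 0.00737 * (eta - 1.0 + math.exp(-eta)))

def trace_down(theta0, z_src, z_tgt, ssp, n_layers=2000):
    """Trace a ray launched downward at grazing angle theta0 (rad); assumes z_tgt > z_src.

    The water column is split into n_layers thin layers of constant speed (taken
    at each layer's mid-depth). Snell's law keeps cos(theta)/c constant.
    Returns (horizontal range m, travel time s, path length m).
    """
    dz = (z_tgt - z_src) / n_layers
    xi = math.cos(theta0) / ssp(z_src)           # ray parameter
    x = t = s = 0.0
    for k in range(n_layers):
        c = ssp(z_src + (k + 0.5) * dz)
        cos_t = xi * c
        if cos_t >= 1.0:
            raise ValueError("ray turns before reaching the target depth")
        sin_t = math.sqrt(1.0 - cos_t * cos_t)
        ds = dz / sin_t                           # path length inside this layer
        x += ds * cos_t
        s += ds
        t += ds / c
    return x, t, s

def find_eigenray(x_target, z_src, z_tgt, ssp, iters=60):
    """Bisect on the launch grazing angle; steeper launches give shorter ranges."""
    lo, hi = 1e-3, math.pi / 2 - 1e-6
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if trace_down(mid, z_src, z_tgt, ssp)[0] > x_target:
            lo = mid                              # overshoots horizontally: launch steeper
        else:
            hi = mid
    theta = 0.5 * (lo + hi)
    return theta, trace_down(theta, z_src, z_tgt, ssp)

if __name__ == "__main__":
    x_h = math.hypot(1000.0 - 50.0, 800.0 - 100.0)  # node-target horizontal distance
    theta, (x, t, s) = find_eigenray(x_h, 35.0, 1000.0, munk)
    chord = math.hypot(x_h, 1000.0 - 35.0)
    print(f"launch grazing angle {math.degrees(theta):.3f} deg, "
          f"travel time {t:.6f} s, path {s:.3f} m vs straight line {chord:.3f} m")
```

Bisection is used because, with speed decreasing monotonically along the path, horizontal range decreases monotonically as the launch grazing angle increases, so the root is bracketed and unique.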

[0056] The sensor network contains 6 nodes, located at s1 = (50 m, 100 m, 35 m), s2 = (103 m, 600 m, 22 m), s3 = (500 m, 670 m, 30 m), s4 = (620 m, 300 m, 40 m), s5 = (...
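Once a range to each node is available, the target position can be estimated by multilateration. The sketch uses the standard linearized least-squares formulation (subtracting the first node's range equation from the others); the patent's own solver is not described in this section. Only s1 to s4 are used because the remaining node coordinates are elided, and the target position is borrowed from Embodiment 1 purely for illustration, with noise-free straight-line ranges.

```python
import numpy as np

def multilaterate(nodes, ranges):
    """Linearized least-squares position from node positions (N x 3) and ranges (N,).

    Subtracting |x - s_1|^2 = r_1^2 from |x - s_i|^2 = r_i^2 gives
    2 (s_i - s_1) . x = |s_i|^2 - |s_1|^2 - r_i^2 + r_1^2, which is linear in x.
    Needs at least four nodes that are not coplanar.
    """
    nodes = np.asarray(nodes, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    A = 2.0 * (nodes[1:] - nodes[0])
    b = (np.sum(nodes[1:] ** 2, axis=1) - np.sum(nodes[0] ** 2)
         - ranges[1:] ** 2 + ranges[0] ** 2)
    estimate, *_ = np.linalg.lstsq(A, b, rcond=None)
    return estimate

# s1..s4 from the embodiment; s5 and s6 are elided in the source, so they are omitted.
nodes = np.array([[50.0, 100.0, 35.0], [103.0, 600.0, 22.0],
                  [500.0, 670.0, 30.0], [620.0, 300.0, 40.0]])
target = np.array([1000.0, 800.0, 1000.0])        # illustrative target (Embodiment 1)
ranges = np.linalg.norm(nodes - target, axis=1)   # noise-free straight-line ranges
print(multilaterate(nodes, ranges))
```

With exact ranges the estimate recovers the target. Because these four nodes span only 18 m in depth, the depth column of the linear system is small compared with the horizontal columns, so with noisy ranges the depth estimate is far more sensitive than the horizontal coordinates.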

Embodiment 3

[0065] Embodiment 3: Performance simulation of the deep-sea target positioning method based on grazing angle sound ray correction, with asynchronous clocks, a constant target depth, and targets at different horizontal distances.

[0066] To verify the effect of the proposed deep-sea target precise positioning method based on grazing angle sound ray correction when the horizontal distance between the target and the node varies, the target depth is held constant and the horizontal distance between the target and the node is gradually increased. The simulation and analysis of Embodiment 2 show that when the target and node clocks are asynchronous, the traditional sound ray correction method no longer meets the accuracy requirements, whereas the positioning method proposed in the present invention obtains high-precision distance estimates, significantly improv...
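To illustrate why ray curvature matters more as horizontal distance grows at a fixed target depth, the sketch uses a hypothetical linear sound speed profile c(z) = c_surface + g·z with g < 0. In such a profile every ray is a circular arc whose centre lies at the depth where c would extrapolate to zero, so the eigenray between a node at 35 m and a target at 1000 m has a closed form. The sketch compares arc length with straight-line distance; the profile parameters (1540 m/s, -0.04 s⁻¹) are illustrative assumptions, not values from the patent, and this is not the patented correction.

```python
import math

def arc_eigenray(x_h, z_src, z_tgt, c_surface, g):
    """Closed-form eigenray in a linear profile c(z) = c_surface + g*z (z positive down).

    Assumes g < 0, z_tgt > z_src, and a ray that descends monotonically (no turning
    point). Rays are circular arcs centred on the depth z0 = -c_surface/g.
    Returns (chord length m, arc length m, travel time s, radius m).
    """
    if g >= 0:
        raise ValueError("this sketch assumes sound speed decreasing with depth")
    z0 = -c_surface / g
    a, b = z0 - z_src, z0 - z_tgt                # heights of source/target above centre line
    xc = (x_h**2 + b**2 - a**2) / (2.0 * x_h)    # centre's horizontal position (source at x = 0)
    if xc >= 0:
        raise ValueError("eigenray has a turning point; not handled here")
    R = math.hypot(xc, a)
    sin_s, sin_t = -xc / R, (x_h - xc) / R       # sines of grazing angles at source and target
    arc = R * (math.asin(sin_t) - math.asin(sin_s))
    # dt = d(theta) / (|g| cos(theta)), whose antiderivative is atanh(sin(theta))
    time = (math.atanh(sin_t) - math.atanh(sin_s)) / abs(g)
    chord = math.hypot(x_h, z_tgt - z_src)
    return chord, arc, time, R

# Hypothetical profile: 1540 m/s at the surface, decreasing by 0.04 m/s per metre.
C_SURFACE, GRADIENT = 1540.0, -0.04
distances = (500.0, 1000.0, 2000.0, 4000.0)
results = [arc_eigenray(x, 35.0, 1000.0, C_SURFACE, GRADIENT) for x in distances]
for x, (chord, arc, time, _) in zip(distances, results):
    print(f"x = {x:6.0f} m: arc - chord = {arc - chord:.4f} m, travel time = {time:.6f} s")
```

In this toy profile the gap between the curved path and the straight line grows quickly with horizontal distance, which is the regime where a straight-line assumption degrades most.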

