Two-stage temporal action detection method, device, equipment and medium

A two-stage action detection technology, applied to neural learning methods, character and pattern recognition, instruments, etc. It addresses the problems of low recognition accuracy, low judgment accuracy and special requirements on the length of the video to be detected, and achieves high recognition stability and robustness.

Examples

Embodiment 1

[0172] Two-stage temporal action detection is performed on the public datasets THUMOS-14 and ActivityNet-1.3, as follows:

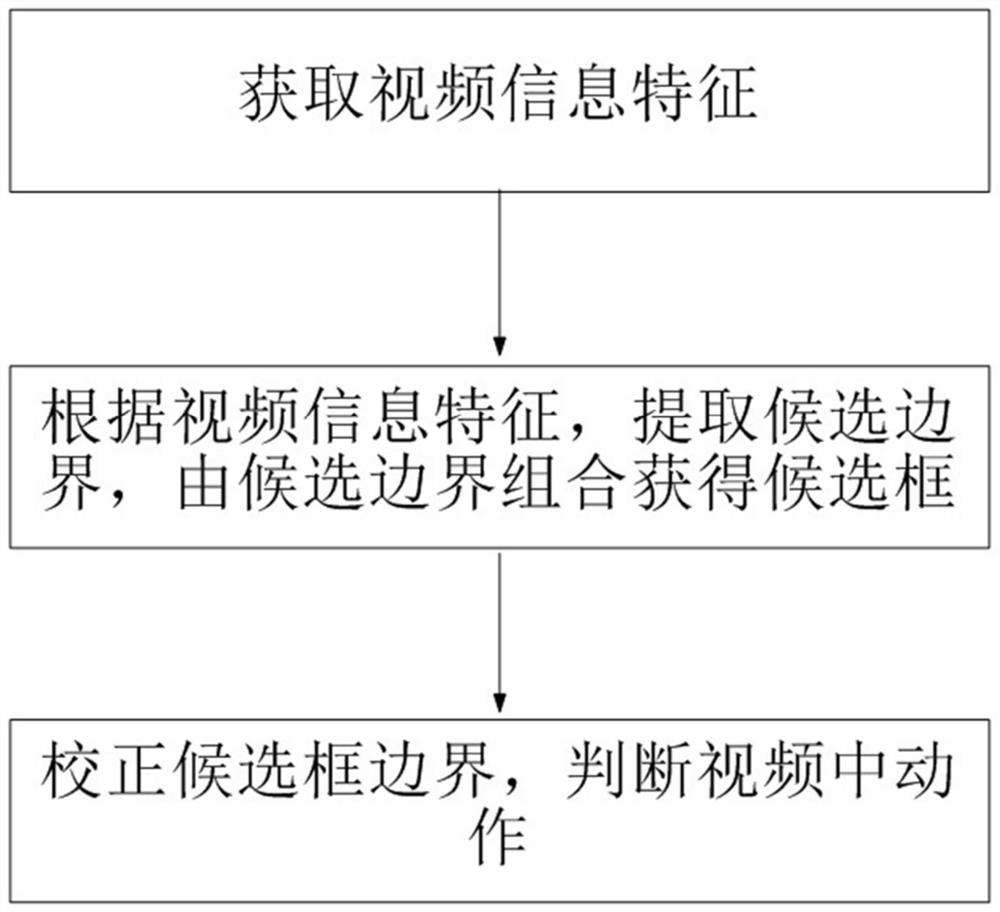

[0173] S1: obtain the video information features;

[0174] S2: extract candidate boundaries from the video information features, and obtain candidate boxes from the candidate boundaries;

[0175] S3: refine the boundaries of the candidate boxes and determine the actions in the video.

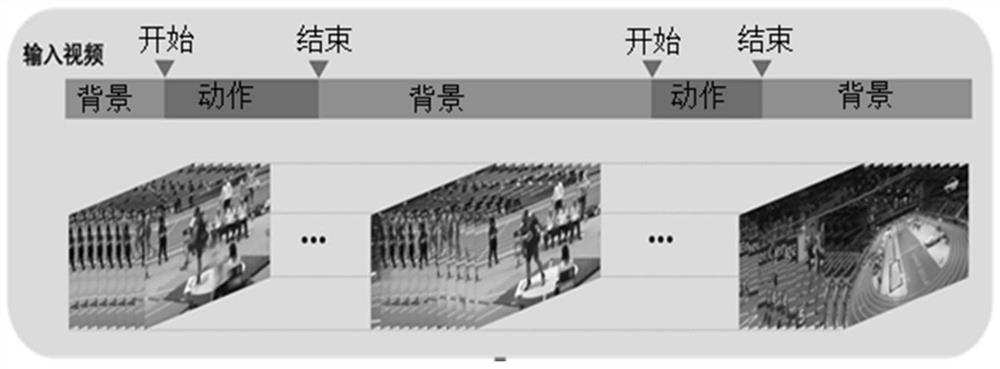

[0176] In step S1, the video is cut in order into N segments of equal length, and the RGB stream and the optical flow of all segments are extracted. The RGB stream and the optical flow are input to a 3D action recognition model to extract RGB features and optical-flow features, which are then fused to characterize the information of the entire video. Each of the N segments is 16 frames long.
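The segmentation and fusion in step S1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation described in this application: the function names `split_into_segments` and `fuse_features` are hypothetical, trailing frames that do not fill a 16-frame segment are assumed to be dropped, and fusion is assumed to be plain concatenation of the per-segment RGB and optical-flow feature vectors. The source specifies neither the handling of leftover frames nor the fusion operator.

```python
from typing import List, Sequence

SEGMENT_LENGTH = 16  # frames per segment, as stated in step S1


def split_into_segments(frames: Sequence, segment_length: int = SEGMENT_LENGTH) -> List[list]:
    """Cut a frame sequence, in order, into equal-length segments.

    Assumption: leftover frames that cannot fill a whole segment are dropped.
    """
    n_segments = len(frames) // segment_length
    return [
        list(frames[i * segment_length:(i + 1) * segment_length])
        for i in range(n_segments)
    ]


def fuse_features(rgb_feats: List[List[float]],
                  flow_feats: List[List[float]]) -> List[List[float]]:
    """Fuse per-segment RGB and optical-flow features.

    Assumption: fusion is concatenation along the feature dimension.
    """
    if len(rgb_feats) != len(flow_feats):
        raise ValueError("RGB and optical-flow features must cover the same segments")
    return [list(r) + list(f) for r, f in zip(rgb_feats, flow_feats)]
```

Under these assumptions, a 50-frame video yields N = 3 segments of 16 frames each, and the last two frames are discarded.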

[0177] Step S2 includes the following sub-steps:

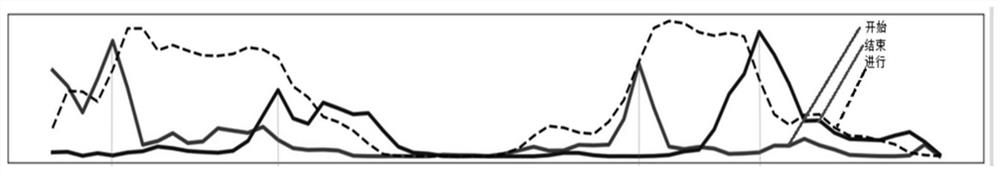

[0178] S21, convert video information charact...

Experimental example

[0256] A comparison of the candidate boxes generated by Embodiment 1 on the THUMOS-14 dataset is shown in Table 1.

[0257] Table 1

[0258]

[0259] Here, @50, @100 and @200 denote the average recall when 50, 100 and 200 candidate boxes are generated per video. The higher the average recall, the better the performance. As the table shows, the recall of Embodiment 1 of this application is significantly higher than that of the other methods.
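To make the metric concrete, the sketch below computes average recall at N candidate boxes for a single video. It is an illustration under assumptions, not the evaluation code used for Table 1: the names `temporal_iou`, `recall_at_n` and `average_recall_at_n` are hypothetical, the candidate boxes are assumed to be sorted by descending confidence, the default temporal-IoU thresholds are illustrative, and a benchmark evaluation would aggregate over all videos rather than one.

```python
from typing import Sequence, Tuple

Interval = Tuple[float, float]  # (start, end) of a box on the time axis


def temporal_iou(a: Interval, b: Interval) -> float:
    """Intersection over union of two temporal intervals."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def recall_at_n(proposals: Sequence[Interval], ground_truth: Sequence[Interval],
                n: int, threshold: float) -> float:
    """Fraction of ground-truth actions matched by any of the top-n proposals.

    Assumption: `proposals` is already sorted by descending confidence.
    """
    if not ground_truth:
        return 0.0
    top = proposals[:n]
    hits = sum(
        1 for gt in ground_truth
        if any(temporal_iou(p, gt) >= threshold for p in top)
    )
    return hits / len(ground_truth)


def average_recall_at_n(proposals: Sequence[Interval], ground_truth: Sequence[Interval],
                        n: int,
                        thresholds: Sequence[float] = (0.5, 0.6, 0.7, 0.8, 0.9)) -> float:
    """Recall at n proposals, averaged over temporal-IoU thresholds.

    Assumption: the threshold set is illustrative, not taken from the source.
    """
    return sum(recall_at_n(proposals, ground_truth, n, t) for t in thresholds) / len(thresholds)
```

Calling `average_recall_at_n(proposals, ground_truth, n=50)` corresponds to the @50 column for one video; raising `n` can only keep or increase the recall, which is why results are reported at several values of N.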

[0260] A comparison of the candidate boxes generated by Embodiment 1 on the ActivityNet-1.3 dataset is shown in Table 2.

[0261] Table 2

[0262]

[0263] Here, AR@AN=100 denotes the average recall when 100 candidate boxes are generated per video; the higher the average recall, the better the performance. AUC is the area enclosed by the AR@AN curve and the coordinate axes; the greater the value, the better the performance. As can be seen from the table, the candidate frame i...
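The AUC described above can be approximated from sampled points of the AR@AN curve with the trapezoidal rule. The sketch below is an illustration only: the function name `area_under_ar_an` is hypothetical, and dividing the area by the AN range (so that a constant recall r gives an AUC of r) is an assumption, since the source does not state how the area is normalized.

```python
from typing import Sequence


def area_under_ar_an(an_values: Sequence[float], ar_values: Sequence[float],
                     normalize: bool = True) -> float:
    """Trapezoidal area under the average-recall vs. number-of-proposals curve.

    an_values: increasing numbers of candidate boxes per video (e.g. 1 ... 100).
    ar_values: average recall measured at each of those numbers.
    Assumption: when `normalize` is True the area is divided by the AN range.
    """
    if len(an_values) != len(ar_values) or len(an_values) < 2:
        raise ValueError("need at least two matching (AN, AR) points")
    area = 0.0
    for i in range(1, len(an_values)):
        width = an_values[i] - an_values[i - 1]
        area += width * (ar_values[i] + ar_values[i - 1]) / 2.0
    if normalize:
        area /= an_values[-1] - an_values[0]
    return area
```

A method whose recall rises quickly with few candidate boxes encloses more area than one that reaches the same AR@AN=100 only near 100 boxes, which is why AUC is reported alongside AR@AN=100.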
