Model training method and device, map drawing method and device, computer equipment and medium

A technique involving training images and network models, applied in the computer field, that addresses problems such as target-object recognition deviation, bounding-box positioning deviation, and low efficiency.


AI Technical Summary

Problems solved by technology

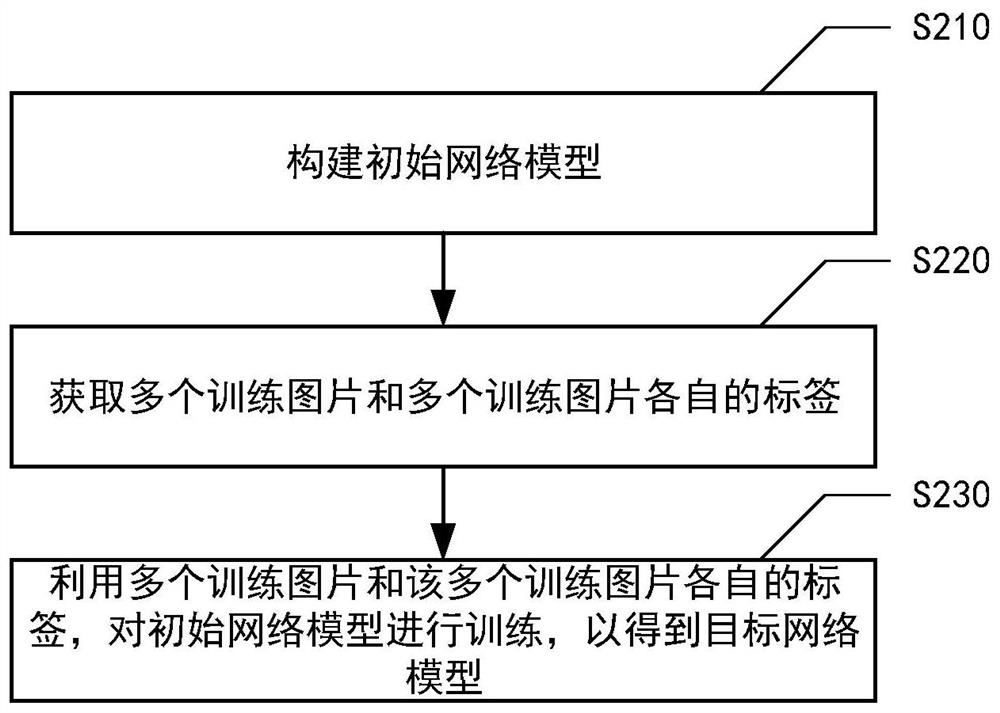

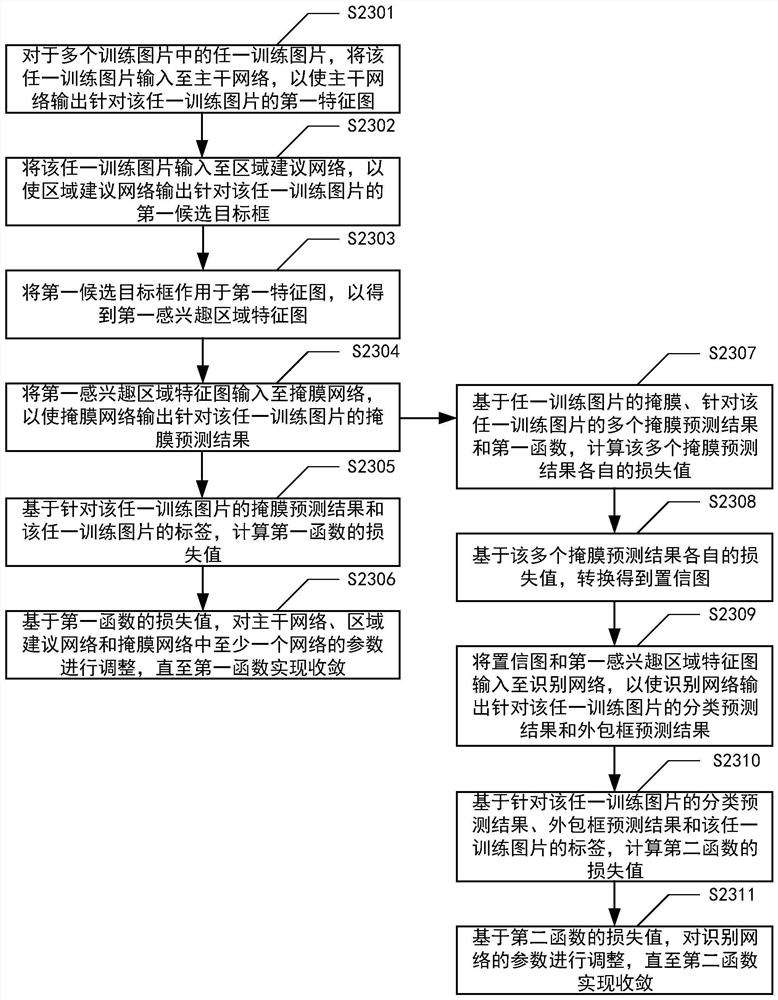

Method used

Image

Examples

Detailed Description of the Embodiments

[0034] Hereinafter, embodiments of the present invention will be described with reference to the accompanying drawings. However, it should be understood that these descriptions are only exemplary, and are not intended to limit the scope of the embodiments of the present invention. In the following detailed description, for convenience of explanation, numerous specific details are set forth in order to provide a thorough understanding of the embodiments of the present invention. It will be apparent, however, that one or more embodiments may be practiced without these specific details. Also, in the following description, descriptions of well-known structures and techniques are omitted to avoid unnecessarily obscuring the concepts of the embodiments of the present invention.

[0035] The terms used herein serve only to describe specific embodiments and are not intended to limit the embodiments of the present invention. The terms "comprising", "including" and the like as us...
