Video stitching method based on artificial intelligence technology

A video stitching method based on artificial intelligence technology, applied in the field of video stitching, that addresses the low registration rate in video editing, which impairs the resulting video, and the low efficiency of video editing.



Examples

Embodiment 1

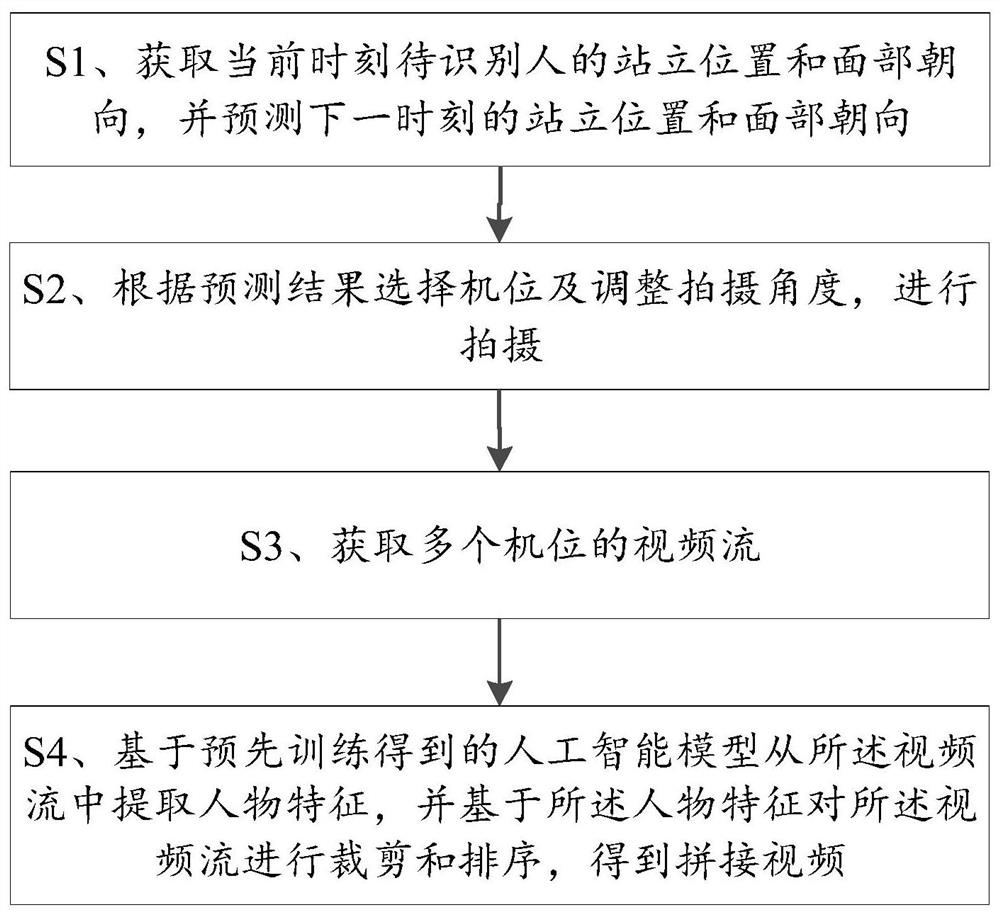

[0068] Referring to Figure 1, Embodiment 1 of the present invention is as follows:

[0069] A video stitching method based on artificial intelligence technology, comprising the following steps:

[0070] S1. Obtain the standing position and face orientation of the person to be recognized at the current moment, and predict the standing position and face orientation at the next moment.

[0071] In this embodiment, step S1 specifically comprises the following steps:

[0072] S11. The camera captures video during the current shooting period, from which the current standing position and current face orientation of the person to be recognized are obtained; the current standing orientation of the person to be recognized is also obtained from the video of the current shooting period.

[0073] S12. Predict the next standing position in the next shooting period from the change in the movement trajectory of the current standing position in the video captured during the current shooting period; the next standing orientation wi...
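The source does not say which predictor steps S1 and S12 use. As a minimal sketch, assuming a constant-velocity model, the next standing position can be extrapolated from the positions observed in the current shooting period, and the next face orientation from the observed angles, with angle differences wrapped so that a turn from 350° to 10° counts as +20°. The function names and the choice of degrees are hypothetical, introduced only for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def predict_next_position(track: List[Point]) -> Point:
    """Extrapolate the next standing position from an observed trajectory.

    Assumption (not stated in the source): constant velocity, taken as the
    mean per-step displacement over the track. The per-step displacements
    telescope, so their mean is (last - first) / steps.
    """
    if not track:
        raise ValueError("track must contain at least one position")
    if len(track) == 1:
        return track[0]  # no motion observed; assume the person stays put
    steps = len(track) - 1
    vx = (track[-1][0] - track[0][0]) / steps
    vy = (track[-1][1] - track[0][1]) / steps
    return (track[-1][0] + vx, track[-1][1] + vy)


def wrap_delta(delta_deg: float) -> float:
    """Map an angle difference in degrees into [-180, 180)."""
    return (delta_deg + 180.0) % 360.0 - 180.0


def predict_next_orientation(angles_deg: List[float]) -> float:
    """Extrapolate the next face orientation, returned in [0, 360) degrees.

    Assumption: constant angular velocity, taken as the mean wrapped
    per-step change in orientation.
    """
    if not angles_deg:
        raise ValueError("need at least one orientation sample")
    if len(angles_deg) == 1:
        return angles_deg[0] % 360.0
    deltas = [wrap_delta(b - a) for a, b in zip(angles_deg, angles_deg[1:])]
    mean_delta = sum(deltas) / len(deltas)
    return (angles_deg[-1] + mean_delta) % 360.0


if __name__ == "__main__":
    print(predict_next_position([(0.0, 0.0), (1.0, 0.5), (2.0, 1.0)]))  # (3.0, 1.5)
    print(predict_next_orientation([350.0, 10.0, 30.0]))  # 50.0
```

Constant-velocity extrapolation is only the simplest option; a filter that models measurement noise (for example a Kalman filter) would be a natural refinement, but the source does not indicate which approach the embodiment uses.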

Embodiment 2

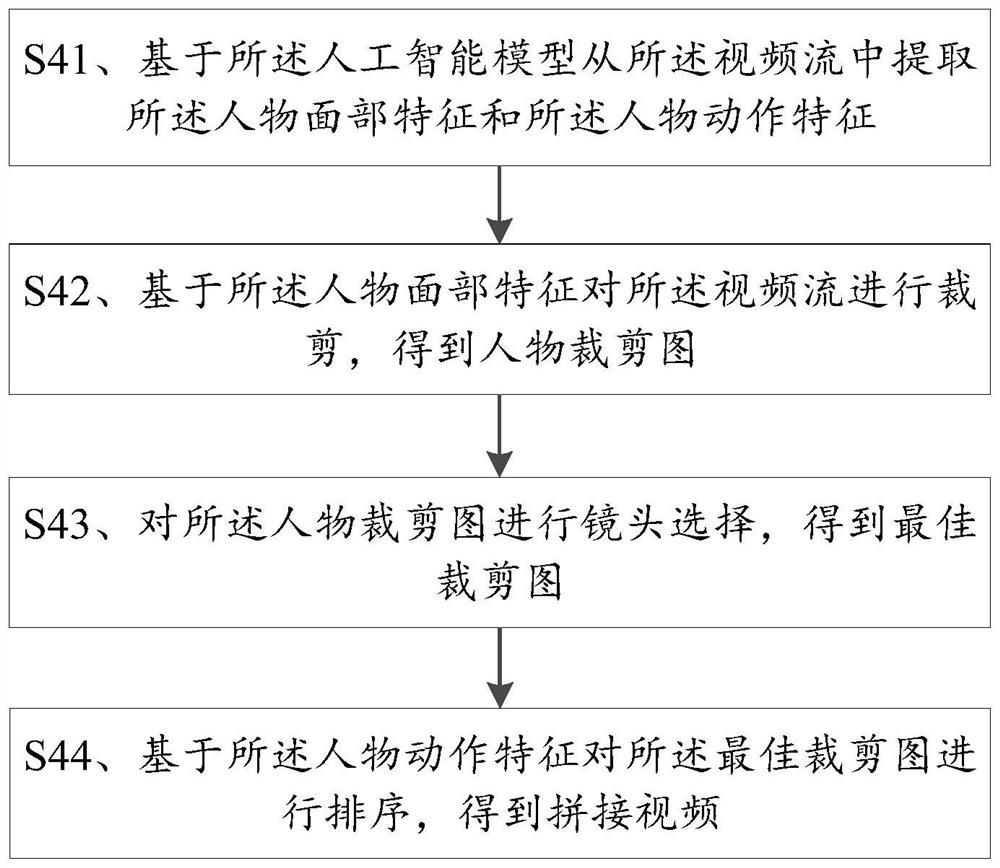

[0096] Referring to Figure 2, Embodiment 2 of the present invention is as follows:

[0097] Building on Embodiment 1, this embodiment uses a deep learning method to continuously train and match the geometric motion model of the video, and the training ultimately yields an artificial intelligence model. In this embodiment, a convolutional neural network (CNN) model is used as the artificial intelligence model.
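The source does not define the form of the "geometric motion model". One common form in video stitching is a 2D affine transform relating point positions in one frame to those in another. The sketch below, which assumes that form, fits such a model to matched point pairs by ordinary least squares. It is a classical fit shown only to make the term concrete; it is not the deep-learning training described in this embodiment, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Affine = Tuple[float, float, float, float, float, float]  # (a, b, c, d, e, f)


def _solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve the 3x3 system m @ x = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented copy; inputs untouched
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration (e.g. collinear points)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for k in range(col, n + 1):
                a[r][k] -= factor * a[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = a[r][n] - sum(a[r][k] * x[k] for k in range(r + 1, n))
        x[r] = s / a[r][r]
    return x


def fit_affine(src: Sequence[Point], dst: Sequence[Point]) -> Affine:
    """Least-squares fit of x' = a*x + b*y + c and y' = d*x + e*y + f."""
    if len(src) != len(dst) or len(src) < 3:
        raise ValueError("need at least 3 matching point pairs")
    # Accumulate the normal equations (A^T A) p = A^T t, with design rows [x, y, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    at_x = [0.0] * 3
    at_y = [0.0] * 3
    for (x, y), (xp, yp) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            at_x[i] += row[i] * xp
            at_y[i] += row[i] * yp
    a, b, c = _solve3(ata, at_x)
    d, e, f = _solve3(ata, at_y)
    return (a, b, c, d, e, f)


def apply_affine(p: Affine, pt: Point) -> Point:
    """Map a point from the source frame into the destination frame."""
    a, b, c, d, e, f = p
    x, y = pt
    return (a * x + b * y + c, d * x + e * y + f)
```

A homography (8 degrees of freedom) is the other usual choice when the camera rotates or the scene is strongly non-planar; the affine model is used here only because it keeps the least-squares step short.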

[0098] A convolutional neural network is a feedforward neural network with strong representation learning ability. Because it can take the original image directly as input and avoids complicated image preprocessing, it is widely used in image recognition, object recognition, behavior recognition, pose estimation, and other fields. Its artificial neurons respond only to neighboring units within a local coverage area (the receptive field), which limits the number of parameters and allows the network to mine local structure.
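The two properties named above, local response and a limited parameter count, can be illustrated with a generic convolution. This is a textbook illustration under stated assumptions (single-channel input, one filter, "valid" padding), not the CNN architecture of this embodiment, which the source does not specify; the function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation, the operation CNN layers call convolution.

    Each output value depends only on a kh x kw neighbourhood of the input
    (its local receptive field), and the same kernel weights are reused at
    every position (weight sharing).
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out: Matrix = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def param_counts(h: int, w: int, k: int) -> Tuple[int, int]:
    """Parameters of one k x k conv filter (weights + bias) versus a fully
    connected layer producing an output map of the same size from an h x w input."""
    out_cells = (h - k + 1) * (w - k + 1)
    conv = k * k + 1
    dense = h * w * out_cells + out_cells  # one weight per input-output pair, plus biases
    return conv, dense
```

For a 224×224 single-channel input and a 3×3 kernel, `param_counts(224, 224, 3)` gives 10 parameters for the convolutional filter against about 2.47 billion for the equivalent fully connected layer, which is the parameter saving the paragraph refers to.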

[0099] In this embod...
