Vehicle radar data and camera data fusion method and system

A vehicle radar data and camera data fusion technology in the field of data fusion. It addresses problems such as poor ranging accuracy, susceptibility to lighting conditions, and measurement data that lacks semantic information, and achieves low performance requirements, reduced computing power requirements, and improved efficiency.

Embodiment Construction

[0021] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings and embodiments. The following examples illustrate the present invention but are not intended to limit its scope.

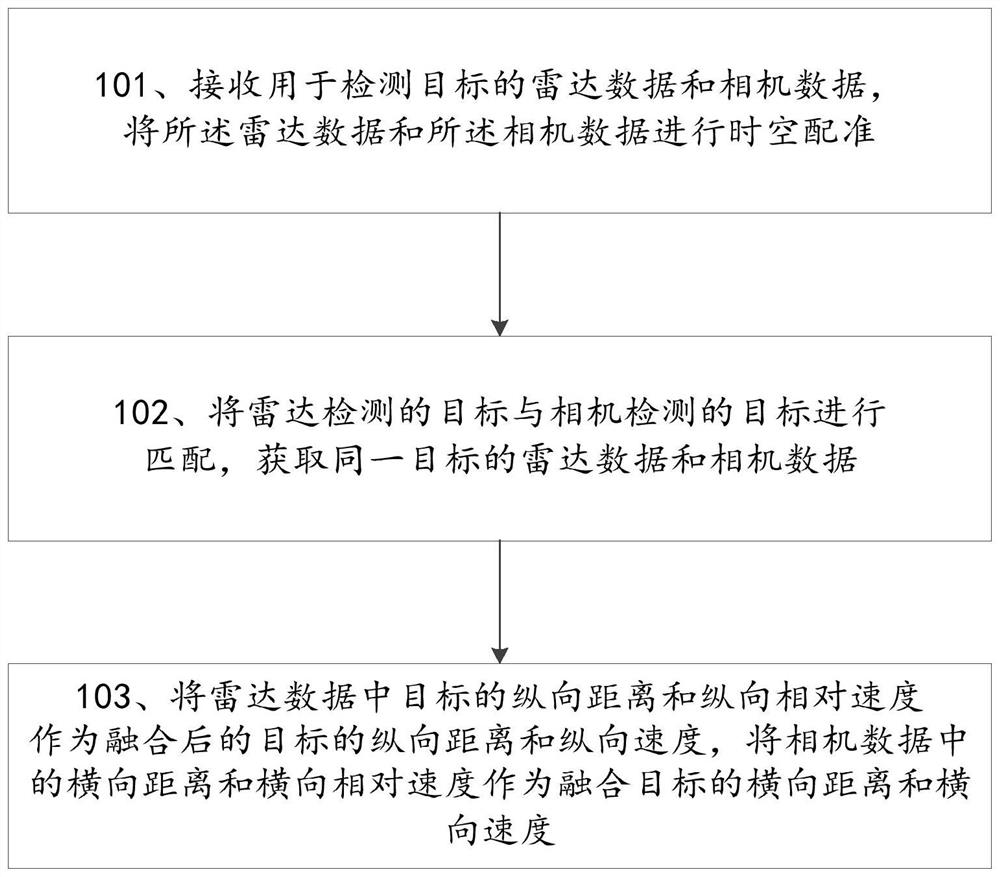

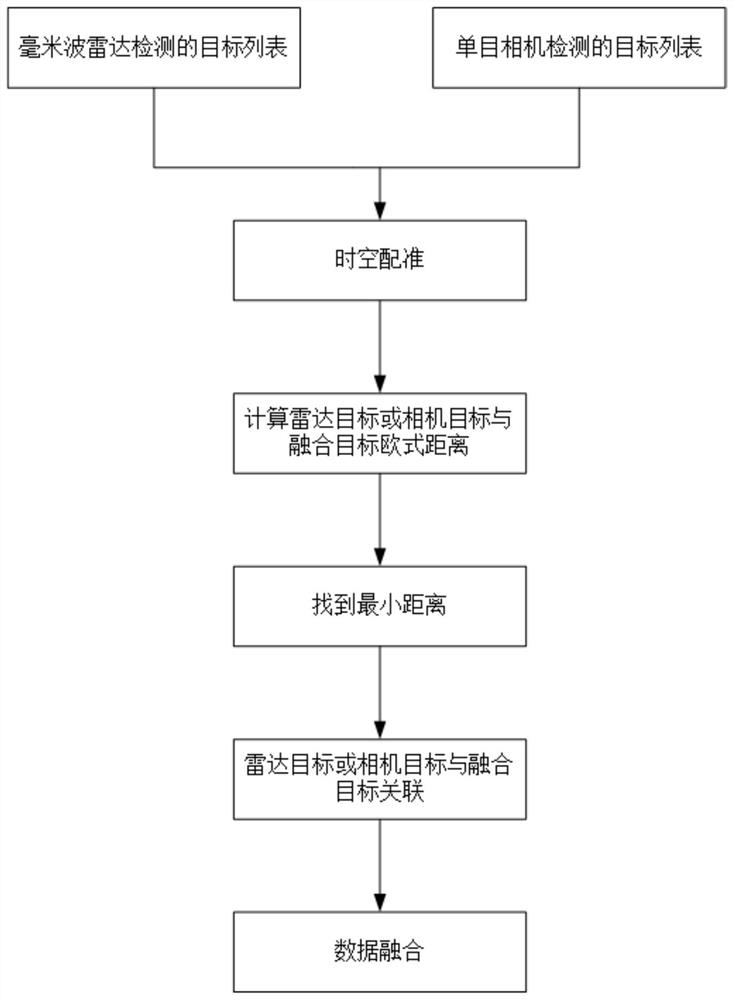

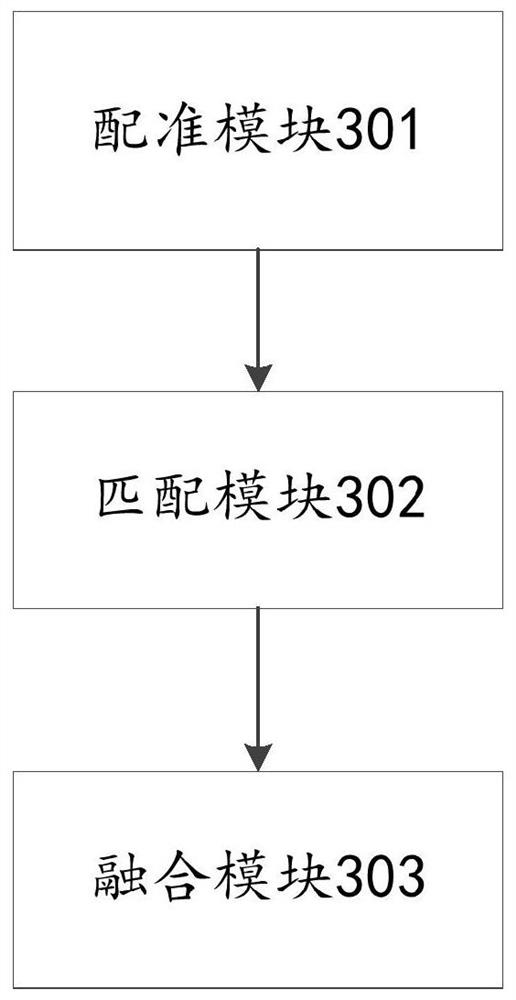

[0022] Figure 1 is a flow chart of a method for fusing vehicle radar data and camera data according to an embodiment of the present invention. As shown in Figure 1, the method includes:
101. Receiving radar data and camera data for detecting a target, and performing time-space registration on the radar data and the camera data;
102. Matching the target detected by the radar with the target detected by the camera to obtain the radar data and camera data of the same target;
103. Using the longitudinal distance and longitudinal relative velocity of the target in the radar data as the longitudinal distance and longitudinal velocity of the fused target, and using the lateral distance and l...
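
Steps 101 to 103 can be sketched as a small per-frame pipeline. This is a minimal illustration under stated assumptions, not the patented implementation: the visible text does not say how registration or matching is performed, so this sketch swaps in common choices, namely a rigid mounting transform (hypothetical offset `dx`, `dy` and yaw) for spatial registration, constant-velocity extrapolation to the camera timestamp for temporal registration, and greedy nearest-neighbour association inside a hypothetical distance gate for matching. The camera targets are assumed to be already expressed in the vehicle frame at the fusion time. Because the source text is truncated after "lateral distance and l...", the choice of taking the lateral quantities from the camera in `fuse` is also an assumption made only for illustration. All names (`Track`, `to_vehicle_frame`, `predict_to`, `match`, `fuse`, `fuse_frame`) are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    t: float    # timestamp [s]
    x: float    # longitudinal distance [m]
    y: float    # lateral distance [m]
    vx: float   # longitudinal relative velocity [m/s]
    vy: float   # lateral relative velocity [m/s]
    label: str = ""  # semantic class; only the camera provides one


def to_vehicle_frame(r: Track, dx: float, dy: float, yaw: float) -> Track:
    """Spatial registration (assumed rigid transform): rotate by the radar's
    hypothetical mounting yaw, then translate by its mounting offset."""
    c, s = math.cos(yaw), math.sin(yaw)
    return Track(r.t,
                 c * r.x - s * r.y + dx, s * r.x + c * r.y + dy,
                 c * r.vx - s * r.vy, s * r.vx + c * r.vy, r.label)


def predict_to(r: Track, t: float) -> Track:
    """Temporal registration (assumed): constant-velocity extrapolation to time t."""
    dt = t - r.t
    return Track(t, r.x + r.vx * dt, r.y + r.vy * dt, r.vx, r.vy, r.label)


def match(radar, camera, gate=2.0):
    """Step 102 (assumed method): greedy nearest-neighbour association.
    Returns (radar_index, camera_index) pairs closer than `gate` metres."""
    pairs, used = [], set()
    for ci, cam in enumerate(camera):
        best, best_d = None, gate
        for ri, rad in enumerate(radar):
            if ri in used:
                continue
            d = math.hypot(rad.x - cam.x, rad.y - cam.y)
            if d <= best_d:
                best, best_d = ri, d
        if best is not None:
            used.add(best)
            pairs.append((best, ci))
    return pairs


def fuse(rad: Track, cam: Track) -> Track:
    """Step 103: longitudinal distance and velocity come from the radar.
    ASSUMPTION: lateral distance and velocity come from the camera
    (the source text is truncated at this point)."""
    return Track(cam.t, rad.x, cam.y, rad.vx, cam.vy, cam.label)


def fuse_frame(radar, camera, t, mount=(3.6, 0.0, 0.0), gate=2.0):
    """Register radar targets to the camera frame/time, match, then fuse.
    `mount` = (dx, dy, yaw) is a hypothetical calibration."""
    aligned = [predict_to(to_vehicle_frame(r, *mount), t) for r in radar]
    return [fuse(aligned[ri], camera[ci])
            for ri, ci in match(aligned, camera, gate)]
```

For example, `fuse_frame([Track(0.00, 20.0, 0.5, -2.0, 0.1)], [Track(0.05, 23.5, 0.8, -1.9, 0.0, "car")], t=0.05)` shifts the radar target forward by the assumed 3.6 m mounting offset, extrapolates it 50 ms, matches it to the camera target, and yields one fused target whose longitudinal values come from the radar and whose lateral values and class label come from the camera. Greedy association is used only to keep the sketch short; a global assignment (for example the Hungarian algorithm) is a common alternative when targets are dense.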
