Unmanned aerial vehicle autonomous flight training method based on reinforcement learning and transfer learning

The technology combines reinforcement learning and transfer learning and is applied in fields such as ensemble learning, adaptive control, and instruments. It addresses the problems that a trained flight strategy cannot be applied and cannot deal with complex and changeable environments, with the effect of reducing adverse effects and making the algorithm more robust.

Embodiment Construction

[0024] The present invention is further illustrated below with reference to specific embodiments. It should be understood that these embodiments serve only to illustrate the present invention and are not intended to limit its scope. After reading the present invention, modifications in various equivalent forms made by those skilled in the art all fall within the scope defined by the appended claims of the present application.

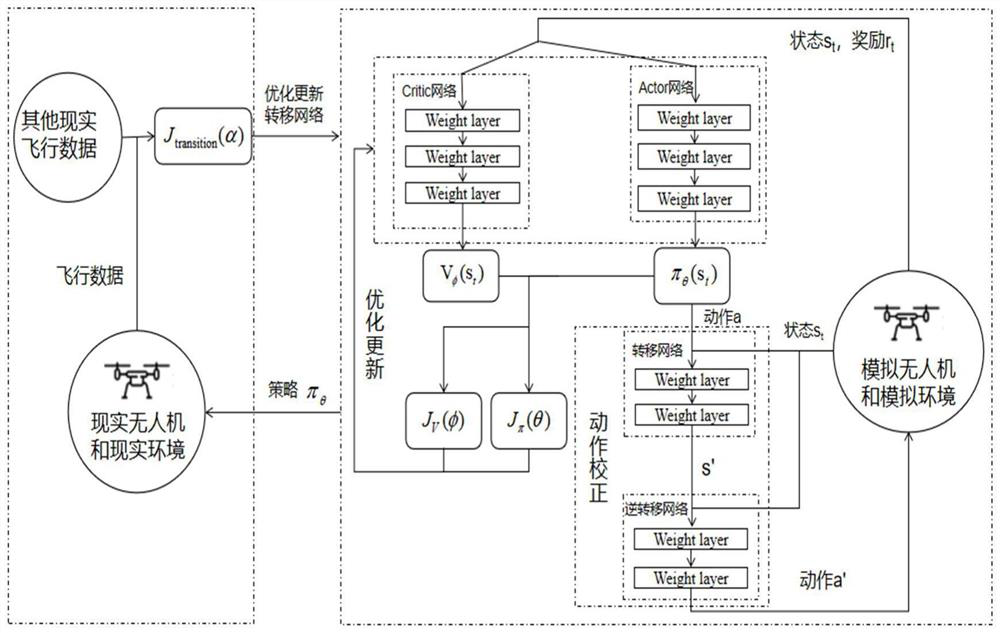

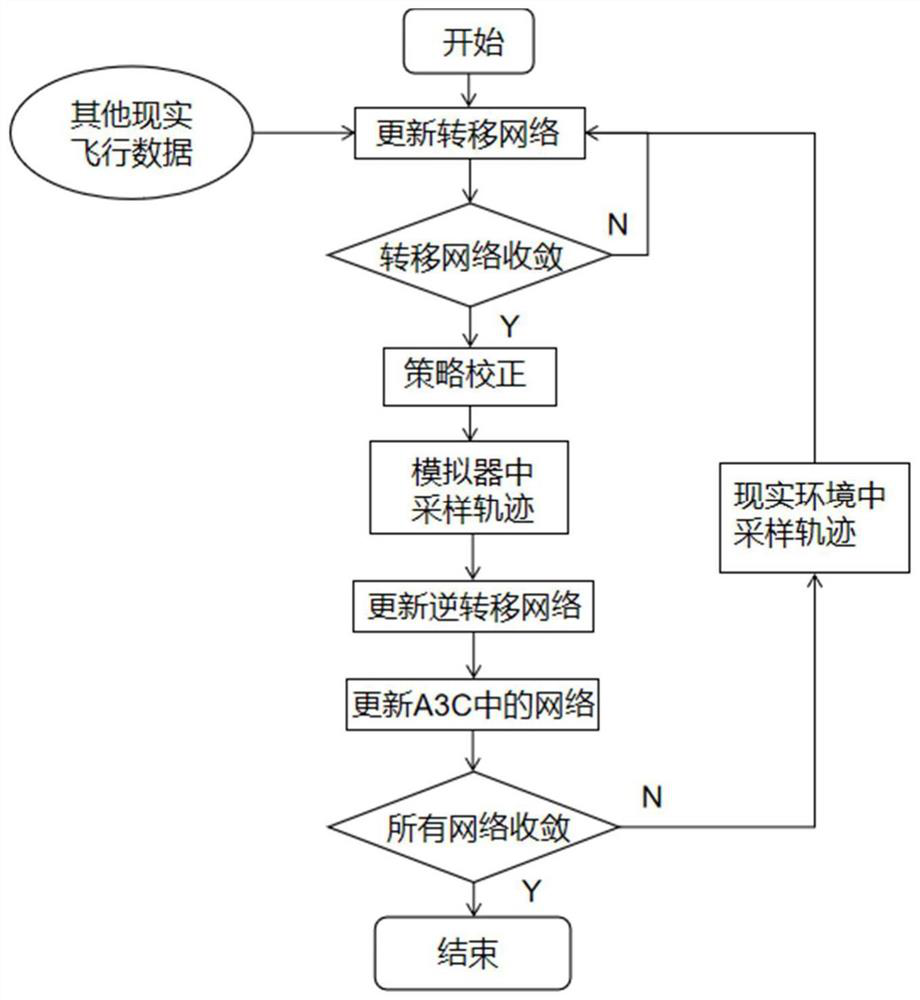

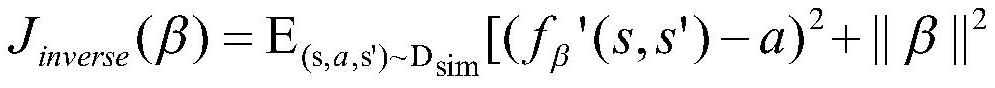

[0025] In the UAV autonomous flight training method based on reinforcement learning and transfer learning, flight data are collected in the real environment and used to learn the state transition model of the real environment. The UAV flight strategy and the inverse transfer model of the simulator environment are trained simultaneously in the simulator, and the transfer model of the real environment and the inverse transfer model of the simulated environment are used to correct the flight maneuvers to be performed in the simula...
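The correction step described in [0025] can be illustrated with a minimal sketch. The source text is truncated, so the sketch rests on an assumption: the action proposed by the flight strategy is first passed through the learned model of the real environment to predict the next real state, and the simulator's inverse model then returns the simulator action that reaches that same state. The 1-D linear dynamics, the function names (`real_step`, `sim_step`, `correct_action`) and the least-squares models are all hypothetical stand-ins chosen for brevity, not the patent's actual networks or simulator.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 1-D dynamics standing in for the two environments.
def real_step(s, a):
    return 0.9 * s + 0.5 * a   # the "real" vehicle responds weakly to commands

def sim_step(s, a):
    return 0.9 * s + 1.0 * a   # the simulator responds more strongly

def collect(step_fn, n=200):
    """Sample random states and actions and record the resulting next states."""
    s = rng.uniform(-1.0, 1.0, n)
    a = rng.uniform(-1.0, 1.0, n)
    return s, a, step_fn(s, a)

def fit_linear(inputs, target):
    """Least-squares fit of target ~= inputs @ w (assumed linear model class)."""
    w, *_ = np.linalg.lstsq(inputs, target, rcond=None)
    return w

# Forward model of the real environment: (s, a) -> s'
s, a, s_next = collect(real_step)
w_real = fit_linear(np.column_stack([s, a]), s_next)

# Inverse model of the simulator: (s, s') -> a
s, a, s_next = collect(sim_step)
w_sim_inv = fit_linear(np.column_stack([s, s_next]), a)

def correct_action(state, action):
    """Map a policy action to the simulator action that mimics the real response."""
    predicted_real_next = np.array([state, action]) @ w_real
    return np.array([state, predicted_real_next]) @ w_sim_inv

s0, a0 = 0.3, 0.8
a_corrected = correct_action(s0, a0)
print("corrected simulator action:", a_corrected)
print("simulator next state:", sim_step(s0, a_corrected))
print("real next state:", real_step(s0, a0))
```

In this toy setting, executing the corrected action in the simulator reproduces the next state that the original action would produce in the real environment, so a strategy trained on corrected actions experiences real-like transitions. With nonlinear dynamics both models would only be approximate, and the correction would inherit their errors.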
