Robot space positioning and grabbing control method based on template matching

A space positioning and template matching technology, applied in the field of visual positioning, that addresses height deviation and the resulting inability to position precisely, and that enables flexible grasping.

Examples

Embodiment 1

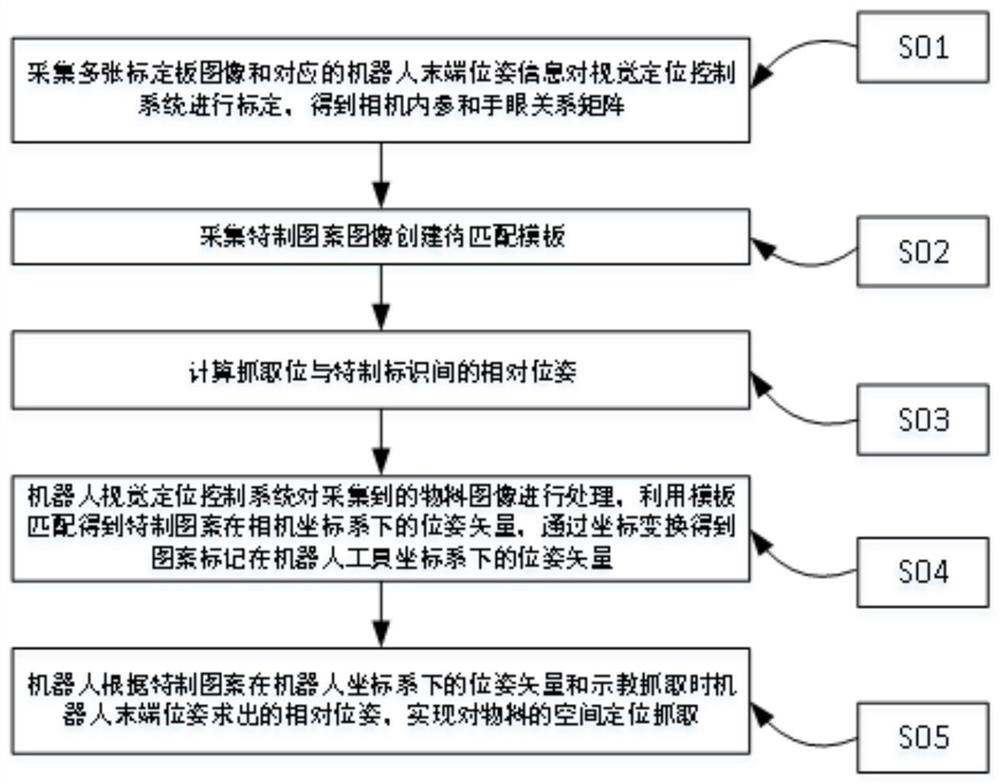

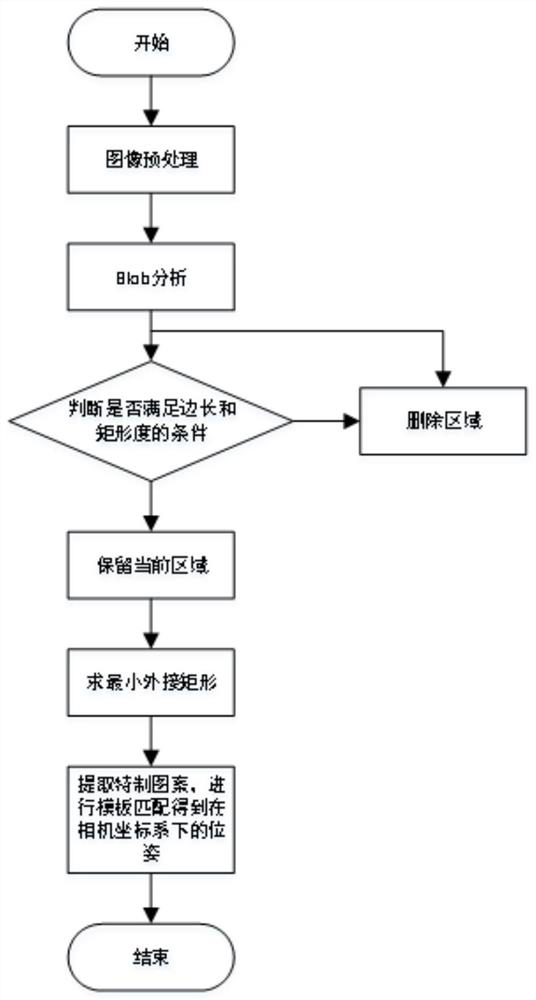

[0071] Figure 1 shows the robot space positioning and grasping control method based on template matching of the present invention. The method comprises four processes: calibration, template creation and relative pose calculation, pose measurement, and positioning and grasping. Figure 2 is a flow chart of the algorithm in this method that estimates the pose of the special logo (marker) in the camera coordinate system.

[0072] The robot spatial positioning and grabbing control method based on template matching in the present invention specifically includes the following steps:

[0073] S01 Calibration: collect multiple calibration board images together with the corresponding robot end poses, use them to calibrate the visual positioning control system, and obtain the camera intrinsic parameters and the hand-eye relationship matrix.
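To illustrate what the hand-eye relationship matrix from the calibration step is used for, the following minimal sketch chains it with a robot end pose to express a point measured in the camera frame in the robot base frame. It is not the patent's implementation: the function names, the restriction to rotations about Z, and all numeric values are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
import math

def make_pose(yaw_deg, tx, ty, tz):
    """Build a 4x4 homogeneous transform: rotation about Z by yaw_deg, then a translation.
    (Hypothetical helper; a real end pose has a full 3D rotation.)"""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def mat_mul(a, b):
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform_point(t, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    v = [p[0], p[1], p[2], 1.0]
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))

def camera_to_base(t_base_end, t_end_cam, p_cam):
    """Map a camera-frame point into the base frame: T_base_end * T_end_cam * p_cam."""
    return transform_point(mat_mul(t_base_end, t_end_cam), p_cam)

# Assumed example values (metres, degrees); none of them come from the source.
t_base_end = make_pose(90.0, 0.5, 0.0, 0.3)   # robot end pose reported by the controller
t_end_cam = make_pose(0.0, 0.0, 0.05, 0.1)    # hand-eye matrix from calibration
p_cam = (0.1, 0.0, 0.4)                       # point measured in the camera frame

p_base = camera_to_base(t_base_end, t_end_cam, p_cam)
print(p_base)
```

In this eye-in-hand arrangement the hand-eye matrix is fixed once calibrated, while the end pose changes with every robot motion, which is why the two transforms are kept separate and multiplied at measurement time.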

[0074] The specific steps in step S01 are:

[0075] S01-1 Install the monocular CCD camera at the end of t...

Embodiment 2

[0128] As shown in Figure 3, the special logo (marker) designed for the template-matching-based robot space positioning and grasping control method of the present invention is a square with a black border and a black interior pattern that can represent the tilt of the material. This is only one example of a special logo; it can be modified as needed.
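The source does not specify how the interior pattern encodes tilt information. As an illustration only, the sketch below models a marker of this kind as a small binary grid (1 = black, 0 = white) with a solid black border, and shows how an asymmetric interior lets the in-plane rotation of an observed marker be identified. The grid size, the pattern, and all function names are assumptions.

```python
def make_marker(interior):
    """Surround a square interior pattern with a one-cell black border (1 = black)."""
    n = len(interior)
    size = n + 2
    grid = [[1] * size for _ in range(size)]
    for r in range(n):
        for c in range(n):
            grid[r + 1][c + 1] = interior[r][c]
    return grid

def has_black_border(grid):
    """Check that every cell on the outer ring of the grid is black."""
    size = len(grid)
    last = size - 1
    return all(
        grid[0][i] == 1 and grid[last][i] == 1 and grid[i][0] == 1 and grid[i][last] == 1
        for i in range(size)
    )

def rotate90(grid):
    """Rotate a square grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]

def find_rotation(observed, reference):
    """Return how many clockwise quarter turns map reference onto observed, or None."""
    candidate = reference
    for k in range(4):
        if candidate == observed:
            return k
        candidate = rotate90(candidate)
    return None

# Hypothetical asymmetric interior: an L-shaped blob in one corner.
interior = [
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
marker = make_marker(interior)
observed = rotate90(rotate90(marker))  # the same marker seen upside down
print(has_black_border(marker), find_rotation(observed, marker))
```

A rotationally symmetric interior would match at more than one quarter turn, so the pattern must be asymmetric for the orientation to be unambiguous.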
