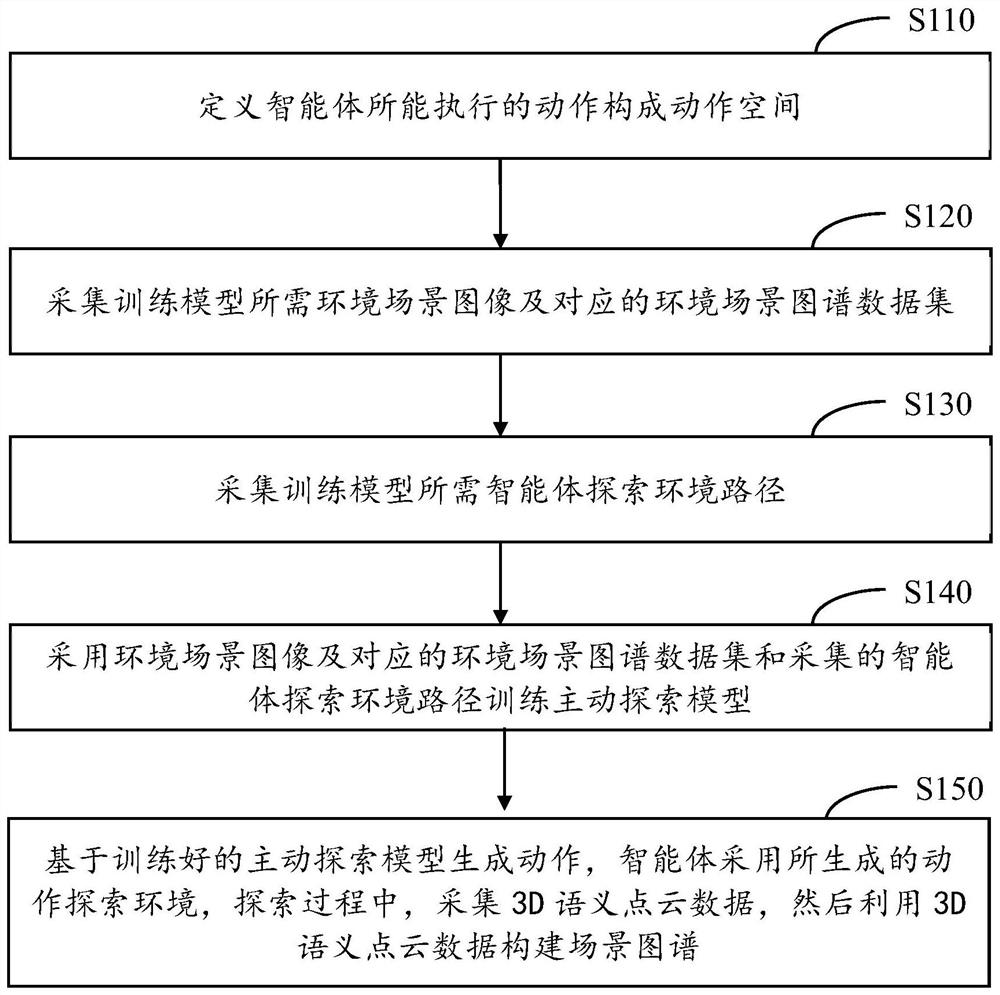

Method, device and exploration method for intelligent agent to actively construct environmental scene map

A technology involving intelligent agents and scene graphs, applied in the field of computer vision, that can solve problems such as ignoring...



Embodiment Construction

[0037] Before describing specific embodiments of the present invention, some terms used herein are first explained.

[0038] Environmental scene graph: An environmental scene graph can be defined as {N, E}, where N is the set of nodes and E is the set of edges. It is a graph structure in which the nodes represent the entities in the scene and the edges express the relationships between them, for example: support, supported by, standing on, sitting on, lying on, has on top, above, below, close by, embedded on, hanging on, pasting on, part of, fixed on, connect with, attach on. Each relationship can be represented by a triple, such as (floor, support, table) or (table, supported by, floor).
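The {N, E} definition can be illustrated with a minimal Python sketch. This is not the patent's implementation; the names `Relation`, `SceneGraph`, `add_relation`, and `relations_of`, as well as the `cup` entity, are assumptions introduced here only for illustration. It stores each relationship as a (subject, predicate, object) triple and derives the node set from the triples.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Relation:
    """One edge of the scene graph, stored as a (subject, predicate, object) triple."""
    subject: str
    predicate: str
    obj: str


@dataclass
class SceneGraph:
    """Hypothetical container for {N, E}: a set of entity nodes and a set of relation edges."""
    nodes: set = field(default_factory=set)
    edges: set = field(default_factory=set)

    def add_relation(self, subject: str, predicate: str, obj: str) -> None:
        # Adding an edge also registers both endpoint entities as nodes.
        self.nodes.update((subject, obj))
        self.edges.add(Relation(subject, predicate, obj))

    def relations_of(self, entity: str) -> list:
        # Return every triple in which the entity appears as subject or object.
        hits = (r for r in self.edges if entity in (r.subject, r.obj))
        return sorted(hits, key=lambda r: (r.subject, r.predicate, r.obj))


graph = SceneGraph()
graph.add_relation("floor", "support", "table")       # triple from the text
graph.add_relation("table", "supported by", "floor")  # inverse triple from the text
graph.add_relation("cup", "standing on", "table")     # illustrative extra entity

for rel in graph.relations_of("table"):
    print((rel.subject, rel.predicate, rel.obj))
```

Storing edges as a set of immutable triples keeps duplicate observations of the same relationship from being counted twice, which is one plausible design choice among several.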

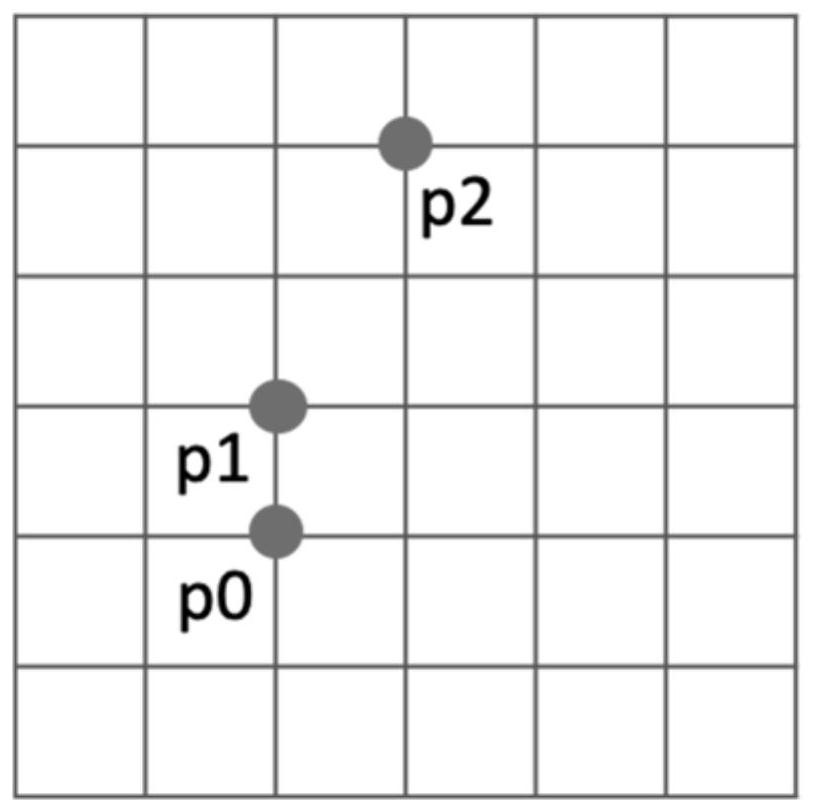

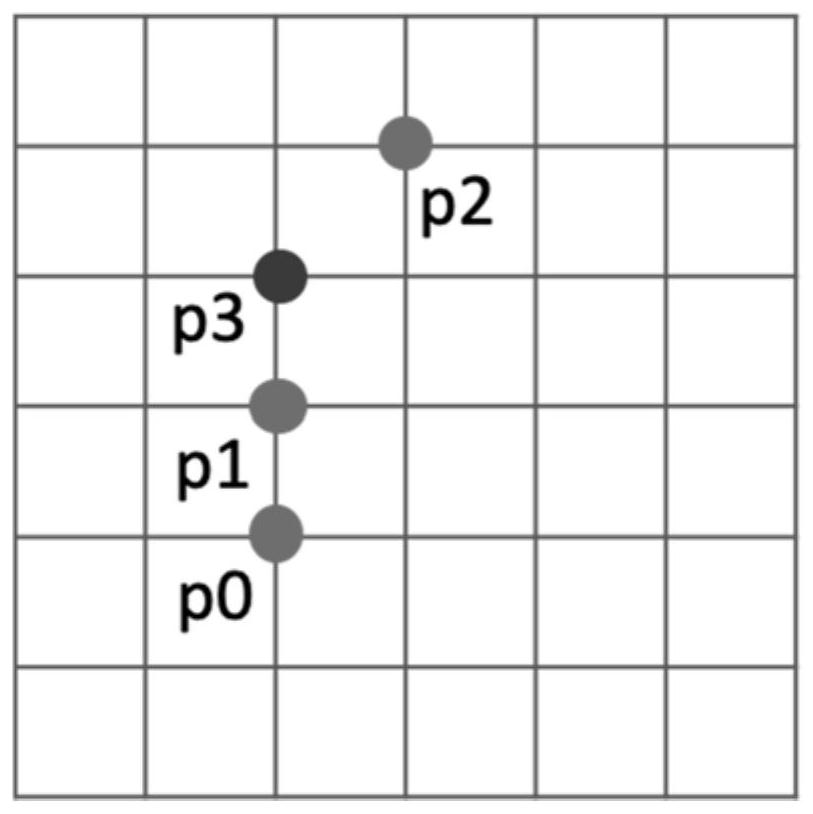

[0039] Node confidence: The enti...

