Character input method based on combination of brain signals and voice

A character input technology combining brain signals with voice input, applied in the field of human-computer interaction. It addresses three problems: reduced operating accuracy, the long time needed to input a single Chinese character, and accuracy that cannot be guaranteed.

Examples

Embodiment 1

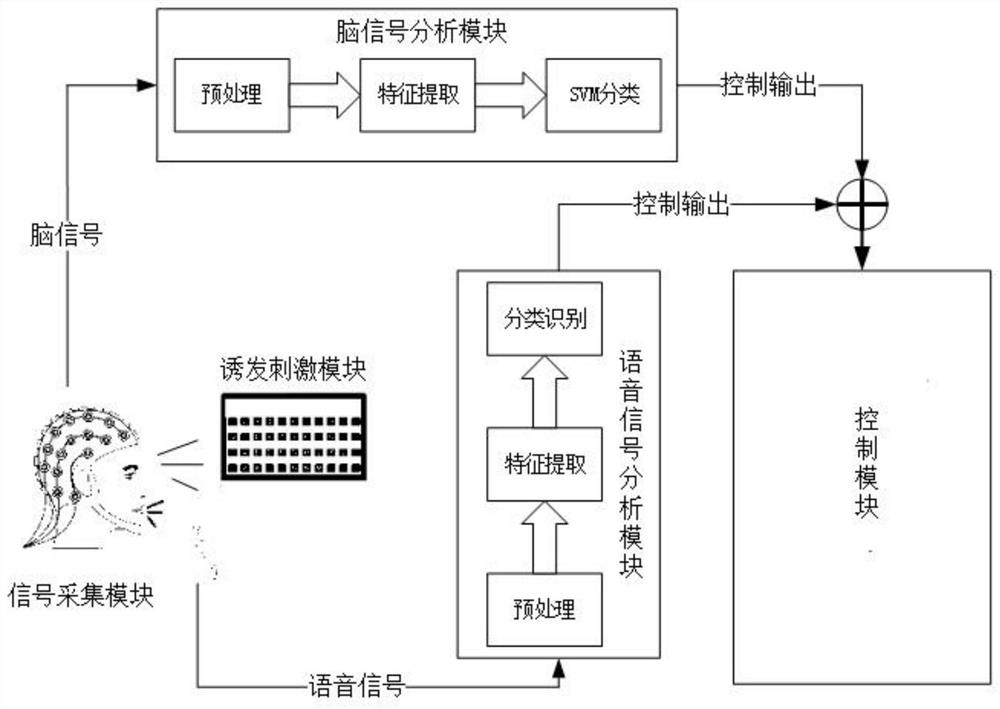

[0026] Referring to Figures 1 to 6, the present invention provides a technical solution: a character input method based on the combination of brain signals and voice. The system consists of four parts: an evoked stimulus module, a signal acquisition module, a signal analysis module and a control module. The method is characterized by the following specific steps:
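The four-module structure can be pictured as a pipeline in which each module hands its result to the next. The sketch below is illustrative only: the patent does not define module interfaces, so the callable signatures (a flash order, one epoch per flash, a selected button index, an output character) are hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CharacterInputSystem:
    # Hypothetical interfaces for the four modules named in [0026];
    # the patent does not specify these signatures.
    present_stimulus: Callable[[], List[int]]                # evoked stimulus module: returns the flash order
    acquire: Callable[[List[int]], List[List[float]]]        # signal acquisition module: one epoch per flash
    analyse: Callable[[List[int], List[List[float]]], int]   # signal analysis module: chooses a button index
    control: Callable[[int], str]                            # control module: maps the button to a character

    def input_one_character(self) -> str:
        """Run the four modules in sequence to produce one character."""
        order = self.present_stimulus()
        epochs = self.acquire(order)
        button = self.analyse(order, epochs)
        return self.control(button)
```

With stub functions standing in for real hardware and classifiers, the pipeline can be exercised end to end; keeping each module as a separate callable lets a stimulus interface, an amplifier driver, or a classifier be swapped independently.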

[0027] (1) System initialization: the user puts on the electrode cap and conductive paste is applied; the electrode cap is connected to the amplifier and the amplifier to the computer; the EEG acquisition software is started and its parameters are set; the microphone is connected to the computer.
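Step (1) only says that acquisition parameters are set. As a hedged illustration of what such settings might contain, the sketch below groups a few typical EEG acquisition parameters and derives how many samples fall into one post-stimulus epoch. Every value here (250 Hz sampling rate, 16 channels, a 0 to 800 ms window) is a hypothetical assumption, not taken from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcquisitionSettings:
    """Hypothetical EEG acquisition parameters; the patent only says 'sets the parameters'."""
    sampling_rate_hz: int = 250   # assumed amplifier sampling rate
    n_channels: int = 16          # assumed number of electrode-cap channels recorded
    epoch_start_ms: int = 0       # assumed epoch window start, relative to each flash onset
    epoch_end_ms: int = 800       # assumed window end, long enough to contain the P300 (~300 ms)

    def __post_init__(self) -> None:
        # Reject settings that would make acquisition or epoching impossible.
        if self.sampling_rate_hz <= 0 or self.n_channels <= 0:
            raise ValueError("sampling rate and channel count must be positive")
        if self.epoch_end_ms <= self.epoch_start_ms:
            raise ValueError("epoch window must have positive length")

    def samples_per_epoch(self) -> int:
        # Samples recorded per channel inside one post-stimulus window.
        duration_ms = self.epoch_end_ms - self.epoch_start_ms
        return duration_ms * self.sampling_rate_hz // 1000
```

For the assumed defaults, an 800 ms window at 250 Hz gives 200 samples per channel per flash.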

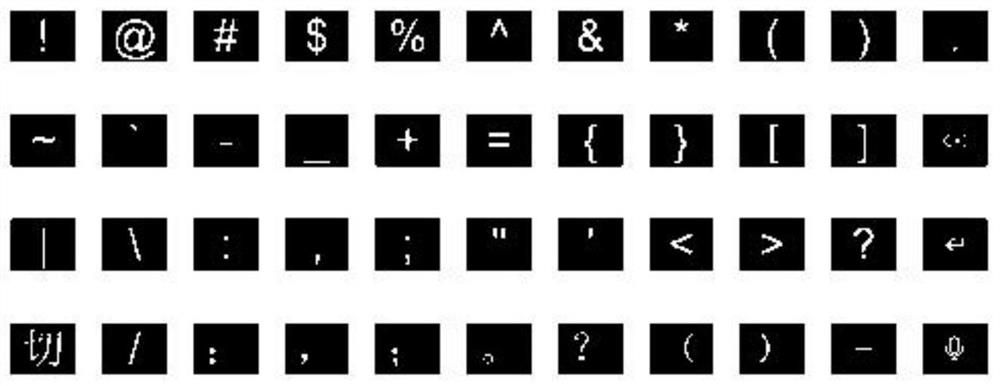

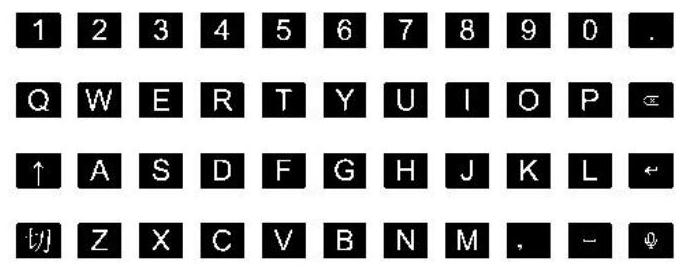

[0028] (2) Brain signal input: start the stimulation paradigm interface of the evoked stimulus module and begin collecting training data. During the spelling of each character, the P300 buttons flash for n rounds; in each round, each of the 44 P300 buttons flashes once in a random order...
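The flashing scheme in step (2) (n rounds, each of the 44 buttons flashing exactly once per round in random order) can be sketched as a per-round shuffle. The selection function is an assumption: the source text is truncated before describing the analysis, so it shows one common P300 approach, averaging a per-flash classifier score for each button over all rounds and picking the highest. The `scores` input is a hypothetical classifier output.

```python
import random
from typing import Dict, List, Optional, Sequence

N_BUTTONS = 44  # from [0028]: 44 P300 buttons on the stimulation interface


def flash_schedule(n_rounds: int, n_buttons: int = N_BUTTONS,
                   seed: Optional[int] = None) -> List[List[int]]:
    """Return n_rounds rounds; in each round every button flashes exactly once, in random order."""
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_rounds):
        order = list(range(n_buttons))
        rng.shuffle(order)  # a fresh random order for each round
        schedule.append(order)
    return schedule


def select_button(schedule: Sequence[Sequence[int]],
                  scores: Sequence[Sequence[float]]) -> int:
    """Assumed decoder: average each button's classifier score across rounds, return the best.

    scores[r][k] is a hypothetical P300 classifier output for the k-th flash of round r.
    """
    totals: Dict[int, float] = {}
    for order, round_scores in zip(schedule, scores):
        for button, score in zip(order, round_scores):
            totals[button] = totals.get(button, 0.0) + score
    n_rounds = len(schedule)
    means = {button: total / n_rounds for button, total in totals.items()}
    return max(means, key=lambda b: means[b])
```

Averaging over several rounds is what makes repeated flashing worthwhile: a single-trial P300 is noisy, and larger n trades input speed for reliability.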
